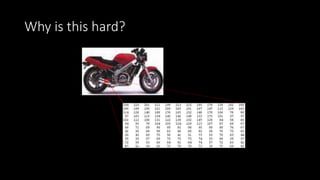

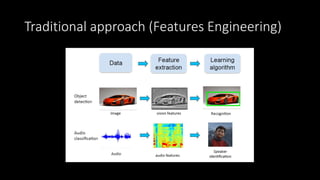

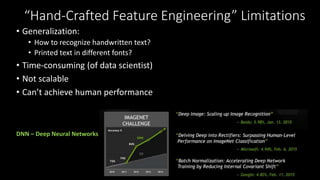

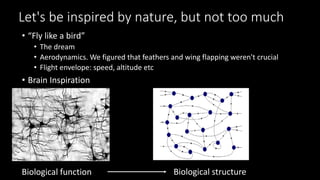

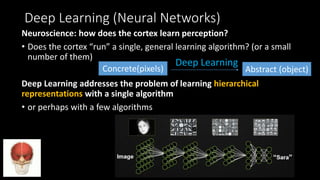

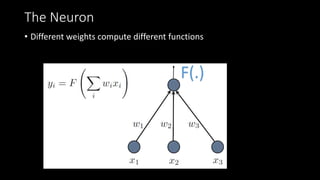

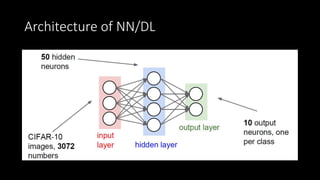

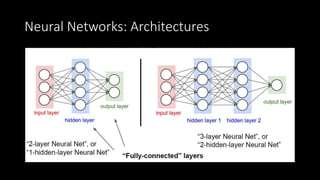

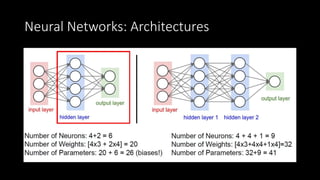

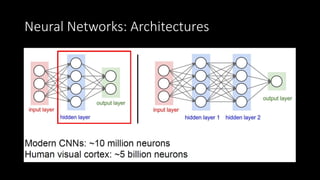

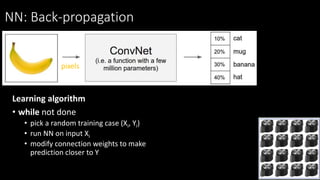

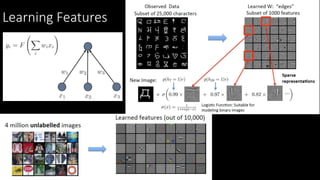

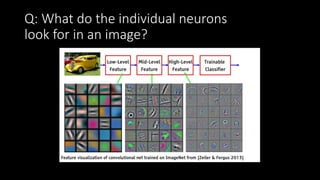

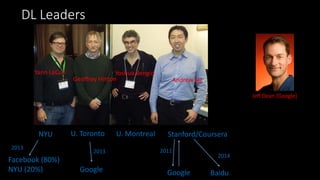

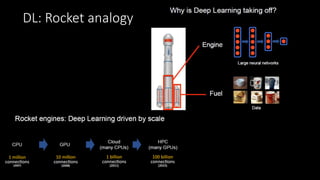

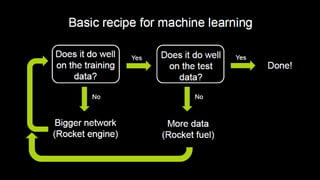

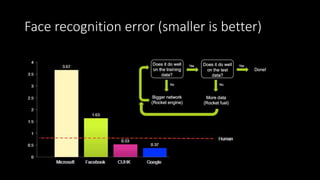

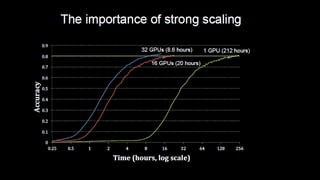

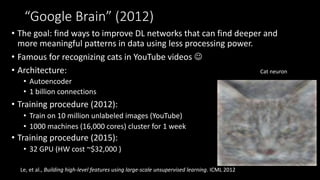

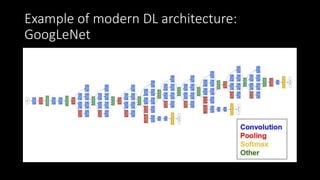

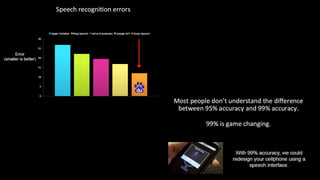

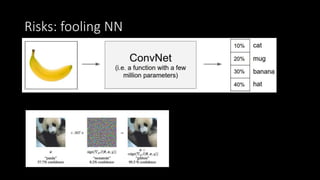

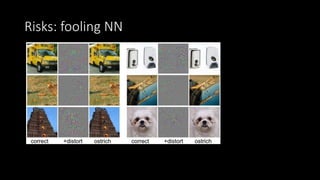

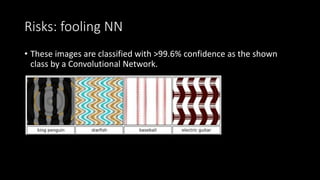

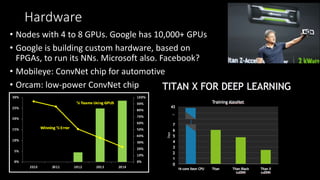

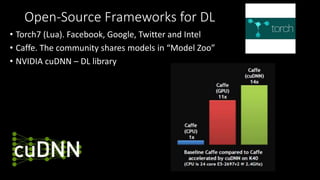

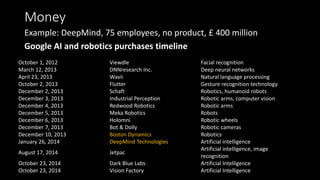

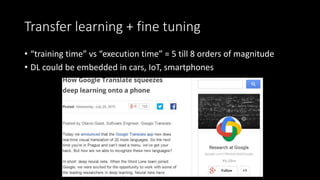

This talk discusses the evolution and applications of deep learning (DL) and neural networks (NN), emphasizing the need for general algorithms that learn from observations to improve capabilities in perception, speech, and image recognition. It covers projects, architectures, and advances in DL, with examples from major companies such as Google and Facebook, and addresses the challenges and risks associated with the technology. The aim is to give an intuitive understanding of DL's significance and its potential impact on various fields.