The document discusses a zero-shot learning framework that enables classification of both seen and unseen classes using semantic word vector representations and a Bayesian approach. It emphasizes the transfer of knowledge between modalities through visual-semantic mappings, allowing better classification and knowledge transfer with minimal training data. Key contributions include the integration of zero-shot and multi-shot learning and the potential for unsupervised learning in various applications.

![Key Ideas

• Semantic word vector representations:

• Allows transfer of knowledge between modalities

• Even when these representations are learned in an

unsupervised way

• Bayesian framework:

1. Differentiate between unseen/seen classes

2. From points on the semantic manifold of trained classes

3. Allows combining both zero-shot and seen classification

into one framework

[ Ø-shot + 1-shot = “multi-shot” :) ]](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-3-320.jpg)

![Visual-Semantic Word Space

• Word vectors capture distributional similarities

from a large, unsupervised text corpus. [Word

vectors create a semantic space] (Huang et al. 2012)

• Images are mapped into a semantic space of words that is

learned by a neural network model (Coates & Ng 2011;

Coates et al. 2011)

• By learning an image mapping into this space, the word

vectors get implicitly grounded by the visual modality,

allowing us to give prototypical instances for various word

(Socher et al. 2013, this paper)](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-4-320.jpg)

![• Word vectors capture distributional similarities from a

large, unsupervised text corpus. [Word vectors create a

semantic space] (Huang et al. 2012)

• Images are mapped into a semantic space of words that is

learned by a neural network model (Coates & Ng 2011;

Coates et al. 2011)

• By learning an image mapping into this space, the word

vectors get implicitly grounded by the visual modality,

allowing us to give prototypical instances for various word

(Socher et al. 2013, this paper)

Visual-Semantic Word Space

Semantic Space](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-5-320.jpg)

![http://www.socher.org/index.php/Main/ImprovingWordRepresentationsViaGlobalContextAndMultipleWordPrototypes

[Huang et al. 2012]

Semantic Space](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-7-320.jpg)

![[Huang et al. 2012]](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-9-320.jpg)

![[Huang et al. 2012]](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-10-320.jpg)

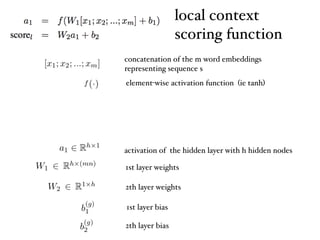

![local context:

global context:

final score:

[Huang et al. 2012]](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-11-320.jpg)

![• Word vectors capture distributional similarities from a

large, unsupervised text corpus. [Word vectors create a

semantic space] (Huang et al. 2012)

• Images are mapped into a semantic space of words

that is learned by a neural network model (Coates &

Ng 2011; Coates et al. 2011)

• By learning an image mapping into this space, the word

vectors get implicitly grounded by the visual modality,

allowing us to give prototypical instances for various word

(Socher et al. 2013, this paper)

Visual-Semantic Word Space

Semantic Space](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-14-320.jpg)

![• Word vectors capture distributional similarities from a

large, unsupervised text corpus. [Word vectors create a

semantic space] (Huang et al. 2012)

• Images are mapped into a semantic space of words that is

learned by a neural network model (Coates & Ng 2011;

Coates et al. 2011)

• By learning an image mapping into this space, the word

vectors get implicitly grounded by the visual modality,

allowing us to give prototypical instances for various word

(Socher et al. 2013, this paper)

Visual-Semantic Word Space

Semantic Space

Image Features](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-15-320.jpg)

![Image Feature Learning

• high level description: extract random patches, extract features

from sub-patches, pool features, train liner classifier to predict

labels

• = fast simple algorithms with the correct parameters work as

well as complex, slow algorithms

[Coates et al. 2011 (used by Coates & Ng 2011)]](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-16-320.jpg)

![• Word vectors capture distributional similarities from a

large, unsupervised text corpus. [Word vectors create a

semantic space] (Huang et al. 2012)

• Images are mapped into a semantic space of words that is

learned by a neural network model (Coates & Ng 2011;

Coates et al. 2011)

• By learning an image mapping into this space, the

word vectors get implicitly grounded by the visual

modality, allowing us to give prototypical instances

for various word (Socher et al. 2013, this paper)

Visual-Semantic Word Space

Semantic Space

Image Features](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-17-320.jpg)

![• Word vectors capture distributional similarities from a

large, unsupervised text corpus. [Word vectors create a

semantic space] (Huang et al. 2012)

• Images are mapped into a semantic space of words that is

learned by a neural network model (Coates & Ng 2011;

Coates et al. 2011)

• By learning an image mapping into this space, the word

vectors get implicitly grounded by the visual modality,

allowing us to give prototypical instances for various word

(Socher et al. 2013, this paper)

Visual-Semantic Word Space

Semantic Space

Visual-Semantic Space

Image Features](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-18-320.jpg)

![[this paper, Socher et al. 2013]

Visual-Semantic Space](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-19-320.jpg)

![Projecting Images into Visual Space

Objective function(s):

[Socher et al. 2013]

training images

set of word vectors seen/unseen visual classes

mapped to the word vector (class name)](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-20-320.jpg)

![Projecting Images into Visual Space

Objective function(s):

[Socher et al. 2013]

training images

set of word vectors seen/unseen visual classes

mapped to the word vector (class name)](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-21-320.jpg)

![T-SNE visualization of the semantic word space [Socher et al. 2013]](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-22-320.jpg)

![[Socher et al. 2013]

Projecting Images into Visual Space

Mapped points of

seen classes:

(Outlier Detection)

Predicting class y:

binary visibility random variable

probability of an image being in an unseen class

Treshold T:](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-23-320.jpg)

![[Socher et al. 2013]

Projecting Images into Visual Space

(Outlier Detection)

binary visibility random variable

probability of an image being in an unseen class

known class prediction:](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-24-320.jpg)

![[Socher et al. 2013][Socher et al. NIPS 2013]

Results](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-25-320.jpg)

![Main Contributions

Zero-shot learning

• Good classification of (pairs of) unseen classes can be

achieved based on learned representations for these

classes

• => as opposed to hand designed representations

• => extends (Lampert 2009; Guo-Jun 2011) [Manual defined

visual/semantic attributes to classify unseen classes]](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-26-320.jpg)

![Main Contributions

“Multi”-shot learning

• Deal with both seen and unseen classes: Allows combining both

zero-shot and seen classification into one framework:

[ Ø-shot + 1-shot = “multi-shot” :) ]

• Assumption: unseen classes as outliers

• Major weakness:

drop from 80% to 70% for 15%-30% accuracy (on particular classes)

• => extends (Lampert 2009; Palatucci 2009) [manual defined

representations, limited to zero-shot classes], using outlier

detection

• => extends (Weston et al. 2010) (joint embedding images and labels

through linear mapping) [linear mapping only, so cant generalise to

new classes: 1-shot], using outlier detection](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-27-320.jpg)

![Main Contributions

Knowledge-Transfer

• Allows transfer of knowledge between modalities, within

multimodal embeddings

• Allows for unsupervised matching

• => extends (Socher & Fei-Fei 2012) (kernelized canonical

correlation analysis) [still require small amount of training

data for each class: 1-shot]

• => extends (Salakhutdinov et al. 2012) (learn low-level image

features followed by a probabilistic model to transfer

knowledge) [also limited to 1-shot classes]](https://image.slidesharecdn.com/zero-shotlearningthroughcross-modaltransfer-150220101019-conversion-gate01/85/Zero-shot-learning-through-cross-modal-transfer-28-320.jpg)