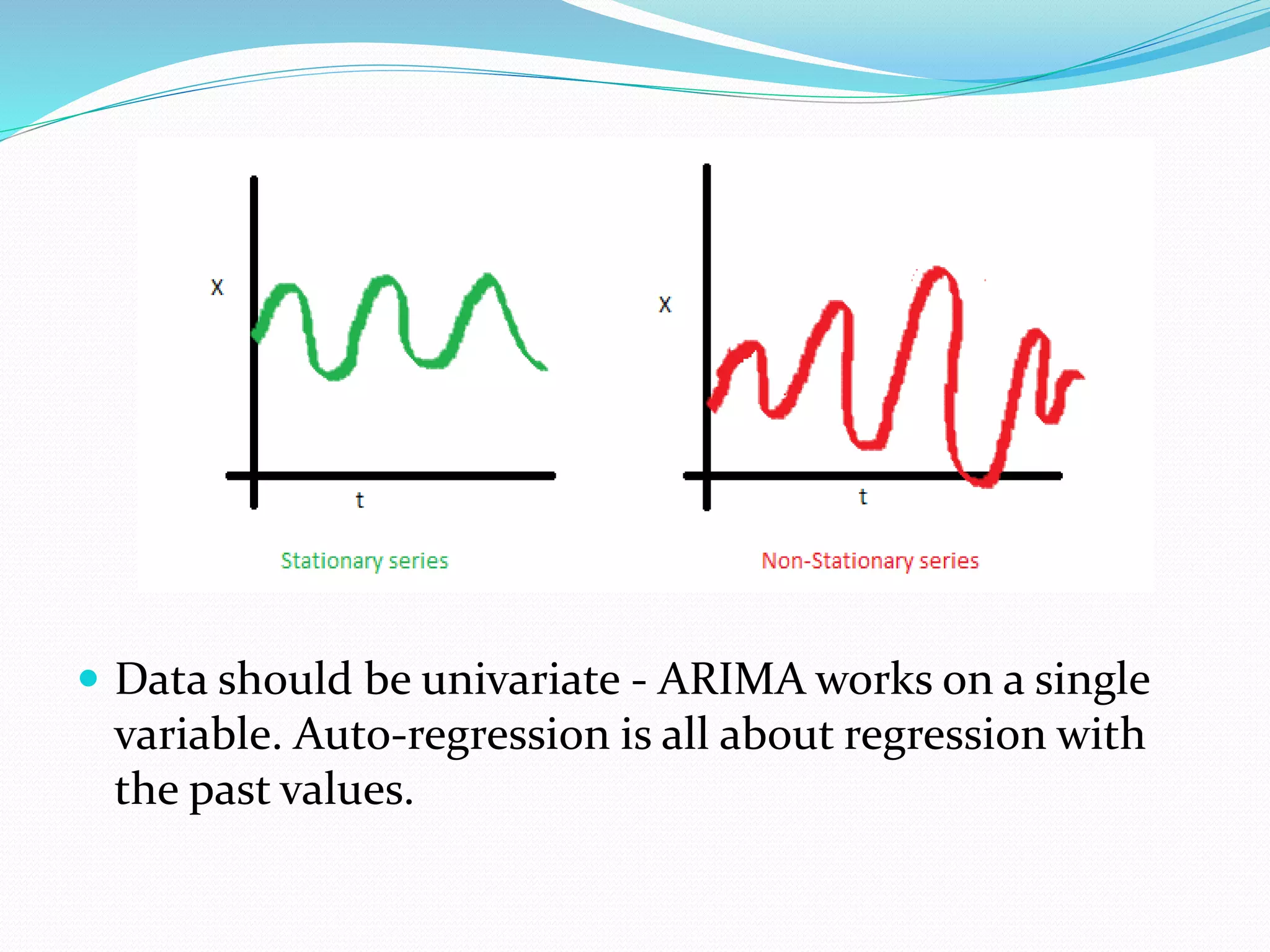

This document introduces ARIMA (AutoRegressive Integrated Moving Average) models and the key assumptions behind them, chiefly stationarity. An ARIMA model combines autoregressive (AR) terms, differencing (the "integrated", or I, component), and moving-average (MA) terms. The document also outlines the Box-Jenkins approach to ARIMA modeling: identifying a candidate model from the correlogram (ACF) and partial correlogram (PACF), estimating its parameters, and running diagnostic checks to validate the model before forecasting.
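For reference, one common way to write an ARIMA(p, d, q) model uses the backshift operator $B$, defined by $B y_t = y_{t-1}$. The symbols here ($\phi_i$ for AR coefficients, $\theta_j$ for MA coefficients, $c$ for a constant, $\varepsilon_t$ for white noise) are notation assumed for illustration and are not taken from the slides; some texts write the MA terms with the opposite sign.

$$
\Bigl(1 - \sum_{i=1}^{p} \phi_i B^i\Bigr)(1 - B)^d \, y_t = c + \Bigl(1 + \sum_{j=1}^{q} \theta_j B^j\Bigr)\varepsilon_t
$$

The factor $(1 - B)^d$ is the differencing step: with $d = 1$ it replaces $y_t$ by $y_t - y_{t-1}$, which is what makes a trending series a candidate for the stationarity assumption.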

![PACF graph: partial autocorrelation (y-axis, -0.50 to 1.00) against lag (x-axis, 0 to 40), with 95% confidence bands, se = 1/sqrt(n)](https://image.slidesharecdn.com/arimamodel-170204090012/75/Arima-model-20-2048.jpg)
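The identification step behind a PACF plot like this one can be sketched in plain Python. This is a minimal illustration, not the method used in the slides: the data are simulated (an AR(1) series with an assumed coefficient of 0.7, accumulated so that one round of differencing is needed), the helper names `difference`, `acf`, and `pacf` are hypothetical, and the partial autocorrelations come from the Durbin-Levinson recursion, which is one of several estimators. The bands use the same standard error as the figure, 1/sqrt(n).

```python
import math
import random


def difference(series, d=1):
    """Apply first differencing d times (the 'I' in ARIMA)."""
    for _ in range(d):
        series = [b - a for a, b in zip(series, series[1:])]
    return series


def acf(series, max_lag):
    """Sample autocorrelations for lags 0..max_lag."""
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    c0 = sum(v * v for v in dev)
    return [sum(dev[t] * dev[t - k] for t in range(k, n)) / c0
            for k in range(max_lag + 1)]


def pacf(series, max_lag):
    """Sample partial autocorrelations via the Durbin-Levinson recursion."""
    r = acf(series, max_lag)
    result = [1.0]
    phi_prev = []  # coefficients of the order k-1 autoregression
    for k in range(1, max_lag + 1):
        num = r[k] - sum(phi_prev[j] * r[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(phi_prev[j] * r[j + 1] for j in range(k - 1))
        phi_kk = num / den
        phi_prev = [phi_prev[j] - phi_kk * phi_prev[k - 2 - j]
                    for j in range(k - 1)] + [phi_kk]
        result.append(phi_kk)
    return result


# Simulated data (an assumption for illustration): AR(1) noise, then
# accumulated so the level series is non-stationary and needs d = 1.
random.seed(0)
ar = [0.0]
for _ in range(500):
    ar.append(0.7 * ar[-1] + random.gauss(0.0, 1.0))
level, total = [], 0.0
for v in ar:
    total += v
    level.append(total)

stationary = difference(level, d=1)
band = 1.96 / math.sqrt(len(stationary))  # 95% band with se = 1/sqrt(n)
values = pacf(stationary, max_lag=10)
significant = [k for k in range(1, 11) if abs(values[k]) > band]

for k in range(1, 11):
    flag = "*" if k in significant else " "
    print(f"lag {k:2d}  pacf {values[k]: .3f} {flag}")
print(f"95% band: +/- {band:.3f}")
```

Lags marked with `*` fall outside the band. In the Box-Jenkins reading, a PACF that cuts off after lag p while the ACF decays gradually points toward an AR(p) term; even for pure noise, roughly one lag in twenty is expected to cross a 95% band by chance.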