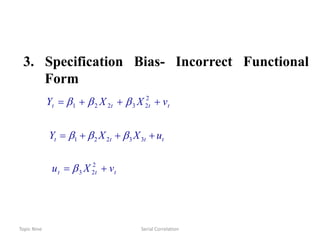

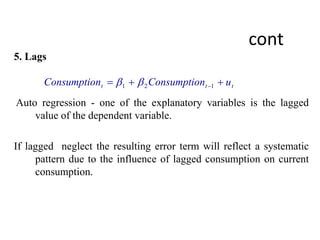

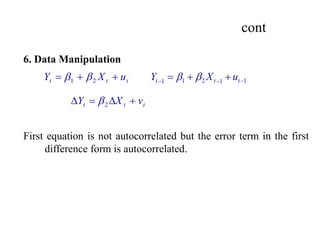

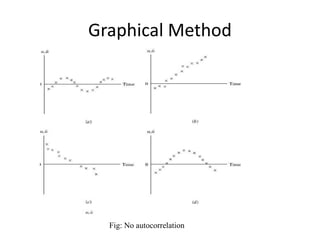

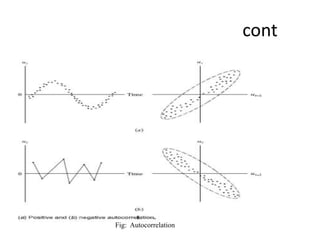

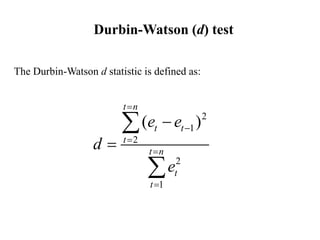

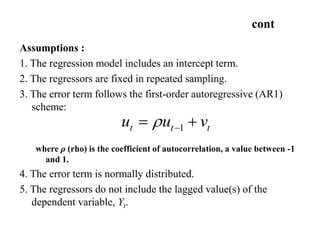

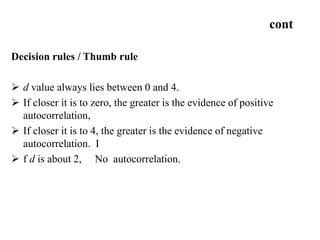

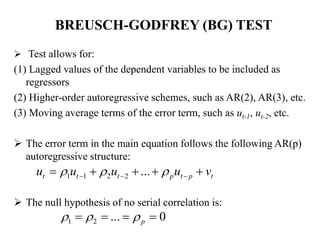

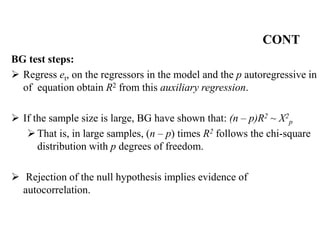

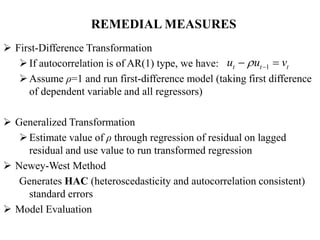

This document discusses autocorrelation: correlation between members of a series of observations ordered in time (as in time-series data) or in space (as in cross-sectional data). When it appears in the error terms of a regression, it violates the classical linear regression model assumption that the error terms are uncorrelated. Causes of autocorrelation include inertia in macroeconomic data, specification bias from excluded or incorrectly specified variables, lagged relationships, data manipulation, and non-stationarity of time series data. Autocorrelation can be detected graphically, by plotting the residuals over time, or formally with the Durbin-Watson and Breusch-Godfrey tests. Remedial measures include the first-difference transformation, the generalized difference transformation, and Newey-West (heteroskedasticity- and autocorrelation-consistent) standard errors.
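The following is a minimal, standard-library-only Python sketch of three of the steps named above, applied to a simple regression with one regressor. It is illustrative, not the author's procedure. The data are simulated (a hypothetical model with AR(1) errors and true ρ = 0.8). The helper names (`ols_simple`, `durbin_watson`, `generalized_difference`, `newey_west_se_slope`) are our own and do not come from any library. The sketch computes the Durbin-Watson statistic d = Σ(e_t − e_{t−1})² / Σe_t², estimates ρ with the rough approximation ρ̂ ≈ 1 − d/2, and applies a single generalized (quasi-) difference step in the style of Cochrane-Orcutt, which drops the first observation. Setting ρ = 1 gives the first-difference transformation. The Newey-West standard error uses Bartlett weights with no small-sample correction, and the lag length of 4 is an arbitrary assumption.

```python
import math
import random


def ols_simple(x, y):
    """Fit y = b0 + b1*x by ordinary least squares and return (b0, b1)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b1 = sxy / sxx
    return my - b1 * mx, b1


def residuals(x, y, b0, b1):
    """OLS residuals e_t = y_t - b0 - b1*x_t."""
    return [yi - b0 - b1 * xi for xi, yi in zip(x, y)]


def durbin_watson(e):
    """d = sum_{t=2..n} (e_t - e_{t-1})^2 / sum_{t=1..n} e_t^2."""
    num = sum((e[t] - e[t - 1]) ** 2 for t in range(1, len(e)))
    return num / sum(et ** 2 for et in e)


def generalized_difference(x, y, rho):
    """Quasi-difference both series: z*_t = z_t - rho * z_{t-1}.

    rho = 1 gives the first-difference transformation. The first
    observation is simply dropped (no Prais-Winsten correction).
    """
    xs = [x[t] - rho * x[t - 1] for t in range(1, len(x))]
    ys = [y[t] - rho * y[t - 1] for t in range(1, len(y))]
    return xs, ys


def newey_west_se_slope(x, e, lags):
    """Newey-West (Bartlett kernel) HAC standard error of the OLS slope."""
    n = len(x)
    mx = sum(x) / n
    v = [(xi - mx) * ei for xi, ei in zip(x, e)]  # per-observation slope scores
    s = sum(vt * vt for vt in v)
    for lag in range(1, lags + 1):
        w = 1 - lag / (lags + 1)  # Bartlett weight keeps s non-negative
        s += 2 * w * sum(v[t] * v[t - lag] for t in range(lag, n))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return math.sqrt(s) / sxx


# Hypothetical data: y_t = 1 + 2*x_t + u_t, with u_t = 0.8*u_{t-1} + eps_t.
random.seed(0)
n, rho_true = 500, 0.8
x = [random.uniform(0, 10) for _ in range(n)]
u = [0.0]
for _ in range(n - 1):
    u.append(rho_true * u[-1] + random.gauss(0, 1))
y = [1 + 2 * xi + ui for xi, ui in zip(x, u)]

# Detection: d far below 2 signals positive first-order autocorrelation.
b0, b1 = ols_simple(x, y)
e = residuals(x, y, b0, b1)
d = durbin_watson(e)
rho_hat = 1 - d / 2  # rough estimate implied by d ≈ 2(1 - rho)

# Remedy 1: generalized difference, then re-run OLS on transformed data.
xs, ys = generalized_difference(x, y, rho_hat)
a0, a1 = ols_simple(xs, ys)  # a0 estimates b0 * (1 - rho)
d_star = durbin_watson(residuals(xs, ys, a0, a1))

# Remedy 2: keep the OLS estimate but use a HAC standard error.
nw = newey_west_se_slope(x, e, lags=4)

print(f"OLS slope {b1:.3f}, DW {d:.2f}, rho_hat {rho_hat:.2f}")
print(f"FGLS slope {a1:.3f}, intercept {a0 / (1 - rho_hat):.3f}, DW after {d_star:.2f}")
print(f"Newey-West SE of OLS slope: {nw:.4f}")
```

In practice, a library such as statsmodels provides tested versions of these tools: `durbin_watson`, `acorr_breusch_godfrey` for the Breusch-Godfrey test, and HAC covariances through `fit(cov_type="HAC", ...)`. The hand-rolled version here is only meant to make each formula visible.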