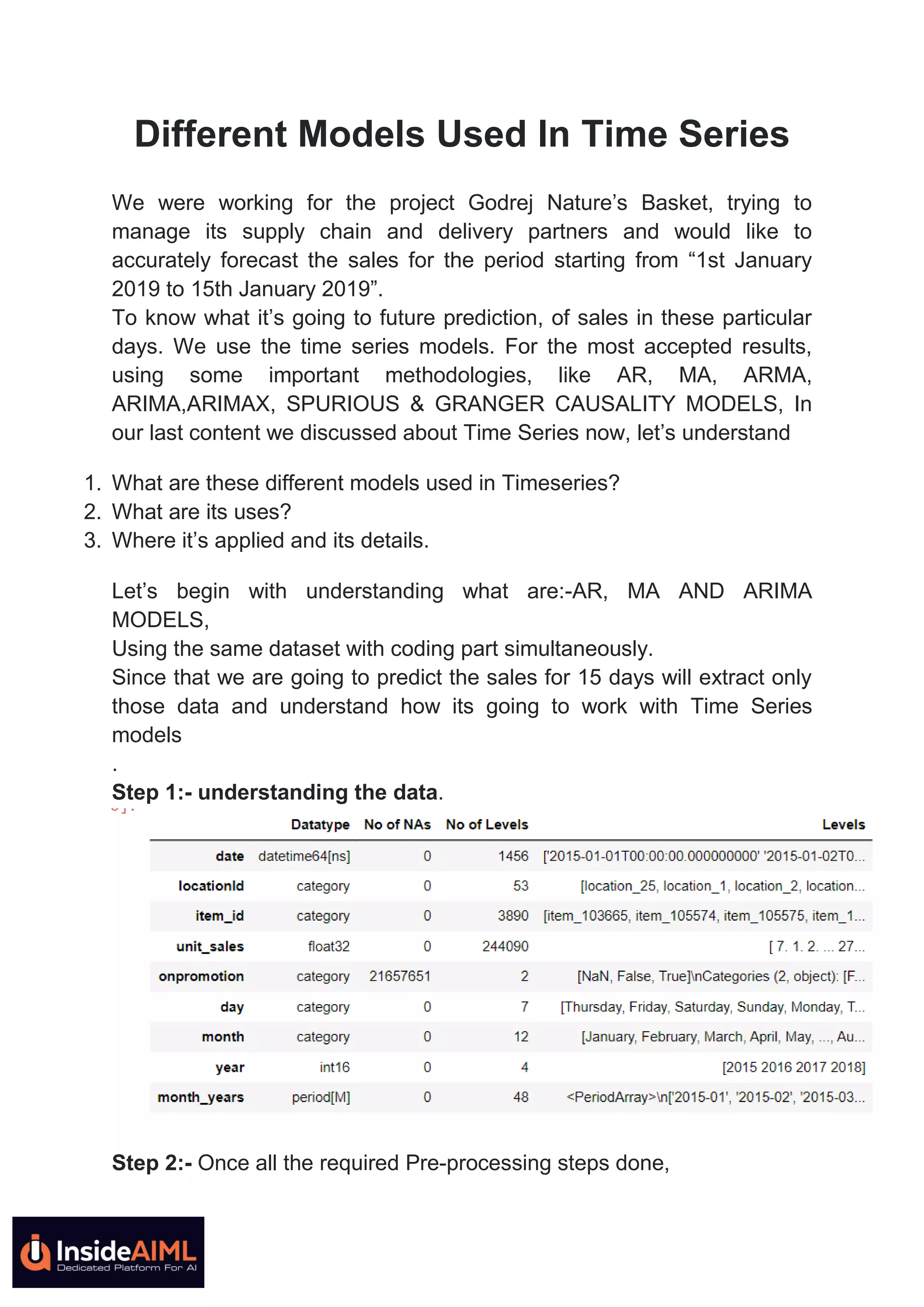

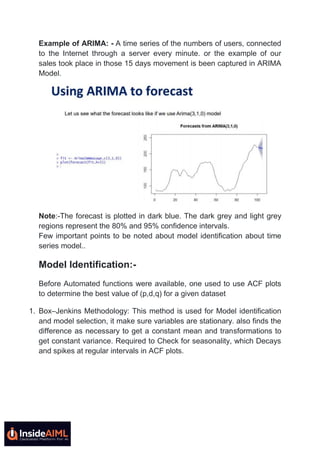

The document discusses the AR, MA, ARMA, ARIMA, and ARIMAX time series models, which are used to forecast sales for Godrej Nature's Basket from January 1 to January 15, 2019. It describes the methodology, key observations, the model identification and evaluation phases, and the importance of handling seasonality and trends in the data. It also covers spurious regression and Granger causality, emphasizing that the data should be made stationary to improve predictive accuracy.
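The role of stationarity can be illustrated with a short sketch. This is not the document's own code: the helper names (`difference`, `ar1_coefficient`, `simulate_random_walk`) are hypothetical, and the data is a simulated random walk rather than actual sales. Differencing is the "I" step in ARIMA. On a trending, non-stationary series the estimated AR(1) coefficient sits near 1, a unit root. After first differencing, the same estimate drops to near 0, which is the behavior AR/MA/ARMA models assume.

```python
import random


def difference(series, lag=1):
    """Return y[t] - y[t - lag]; lag=1 removes a trend, a seasonal lag removes seasonality."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


def ar1_coefficient(series):
    """Simple least-squares estimate of phi in (y[t] - m) = phi * (y[t-1] - m) + e[t],
    where m is the full-sample mean (an approximation to OLS with an intercept)."""
    mean = sum(series) / len(series)
    centered = [y - mean for y in series]
    num = sum(centered[t] * centered[t - 1] for t in range(1, len(centered)))
    den = sum(centered[t - 1] ** 2 for t in range(1, len(centered)))
    return num / den


def simulate_random_walk(n, seed=0):
    """Hypothetical non-stationary series: cumulative sum of N(0, 1) shocks."""
    rng = random.Random(seed)
    level, path = 0.0, []
    for _ in range(n):
        level += rng.gauss(0.0, 1.0)
        path.append(level)
    return path


if __name__ == "__main__":
    walk = simulate_random_walk(2000)
    # Levels: coefficient close to 1 (unit root, non-stationary).
    print("AR(1) on levels:     ", round(ar1_coefficient(walk), 3))
    # First differences: close to 0 (the shocks are white noise, so stationary).
    print("AR(1) on differences:", round(ar1_coefficient(difference(walk)), 3))
```

For daily sales with a weekly pattern, a seasonal difference such as `difference(series, lag=7)` would play the same role for seasonality. The lag of 7 is an illustrative assumption, not a value taken from the document.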