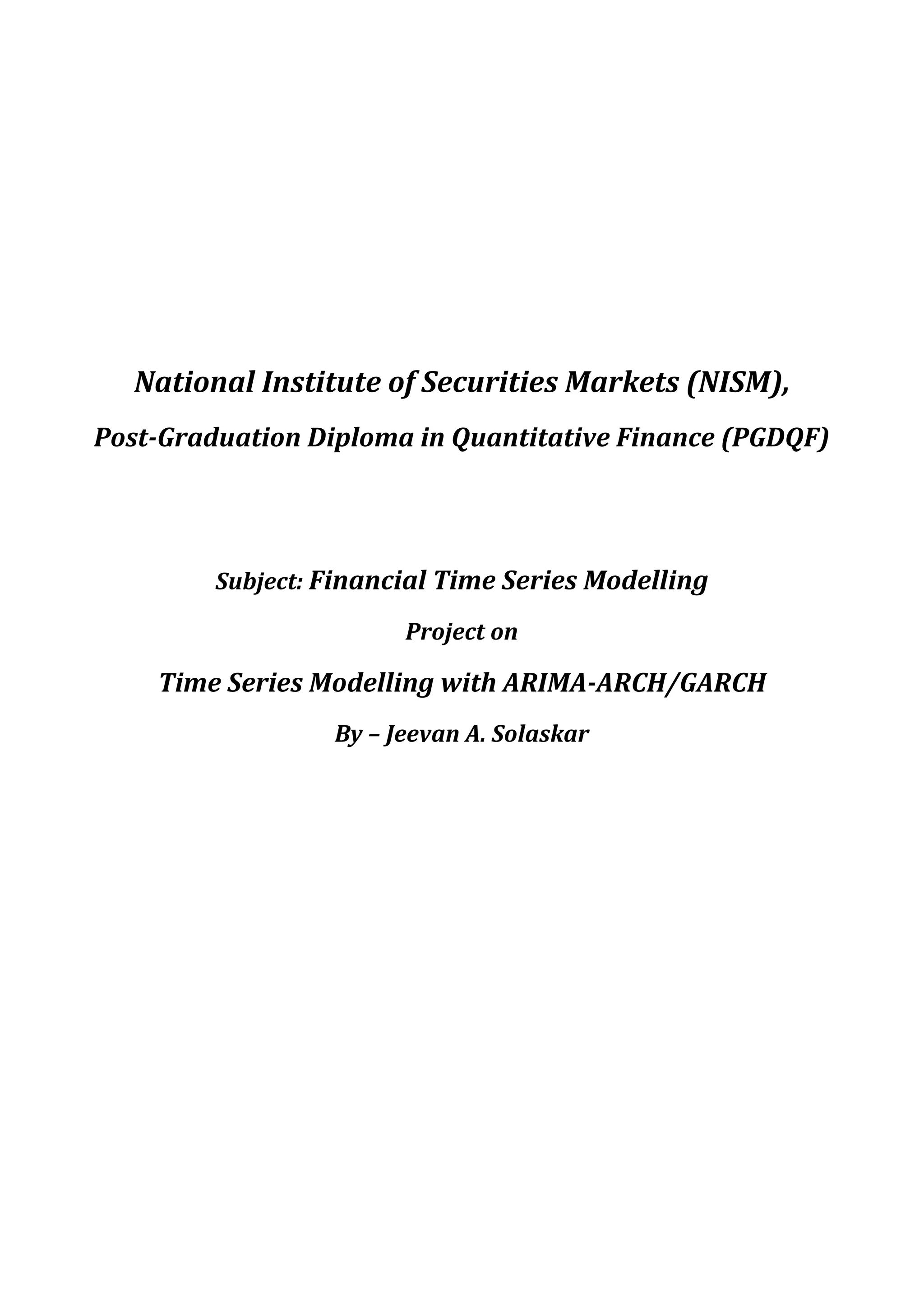

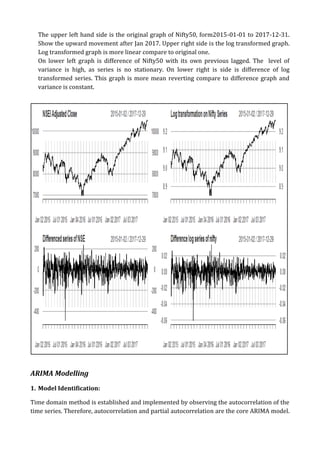

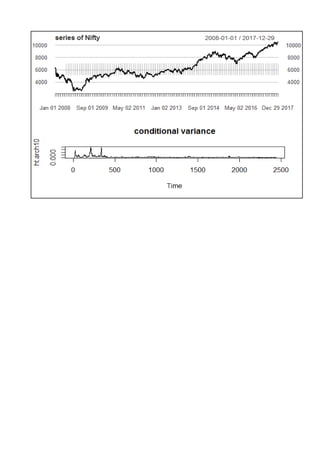

The document summarizes a project that uses ARIMA and ARCH/GARCH time series methods to forecast price movements of India's Nifty 50 index. It details each step: data preparation, achieving stationarity, model identification, parameter estimation, and diagnostic checking. Models are selected using the Akaike Information Criterion (AIC) and residual analysis. The analysis recommends ARIMA(2,1,2) for the mean dynamics of the index and ARCH(10) for modeling its volatility.
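The sketch below is a minimal, dependency-free illustration of two of the steps named above: first differencing followed by choosing a model order by minimum AIC, and then checking residuals for ARCH effects. It rests on several assumptions. It restricts the search to pure AR terms, i.e. ARIMA(p,1,0), so that fitting reduces to ordinary least squares. The project's ARIMA(2,1,2) also has MA terms, which require maximum-likelihood estimation, for example with statsmodels' `ARIMA`. The diagnostic is Engle's ARCH LM test, a standard check for whether ARCH/GARCH modeling is warranted, and it stands in for whatever residual tests the project actually ran. All function names are hypothetical, and the demo uses synthetic data rather than Nifty 50 prices.

```python
import math
import random


def difference(series, d=1):
    """Apply d rounds of first differencing: y_t = x_t - x_{t-1}."""
    for _ in range(d):
        series = [b - a for a, b in zip(series, series[1:])]
    return series


def ols(rows, targets):
    """Least squares via the normal equations (X'X) b = X'y, solved by Gaussian elimination."""
    k = len(rows[0])
    # Augmented matrix [X'X | X'y]
    m = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * t for r, t in zip(rows, targets))] for i in range(k)]
    for col in range(k):
        piv = max(range(col, k), key=lambda i: abs(m[i][col]))  # partial pivoting
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, k):
            f = m[r][col] / m[col][col]
            for c in range(col, k + 1):
                m[r][c] -= f * m[col][c]
    beta = [0.0] * k
    for r in range(k - 1, -1, -1):  # back substitution
        beta[r] = (m[r][k] - sum(m[r][c] * beta[c] for c in range(r + 1, k))) / m[r][r]
    resid = [t - sum(b * v for b, v in zip(beta, row)) for row, t in zip(rows, targets)]
    return beta, resid


def lagged(y, p, start):
    """Design matrix rows [1, y_{t-1}, ..., y_{t-p}] for t = start .. len(y) - 1."""
    return [[1.0] + [y[t - i] for i in range(1, p + 1)] for t in range(start, len(y))]


def ar_aic(y, p, max_p):
    """Fit AR(p) by OLS on a common sample (t >= max_p) and return (beta, AIC)."""
    beta, resid = ols(lagged(y, p, max_p), y[max_p:])
    n = len(resid)
    sigma2 = sum(e * e for e in resid) / n
    # Conditional Gaussian log-likelihood with sigma^2 at its MLE
    loglik = -0.5 * n * (math.log(2 * math.pi * sigma2) + 1)
    return beta, 2 * (p + 2) - 2 * loglik  # parameters: p lags + intercept + variance


def select_arima_p(prices, max_p=5):
    """Choose p for ARIMA(p,1,0) by minimum AIC on the first-differenced series."""
    y = difference(prices, d=1)
    aics = {p: ar_aic(y, p, max_p)[1] for p in range(max_p + 1)}
    return min(aics, key=aics.get), aics


def arch_lm(resid, q):
    """Engle's ARCH LM statistic: n * R^2 from regressing e_t^2 on q of its own lags.
    Under the null of no ARCH effects it is approximately chi-squared with q d.o.f."""
    e2 = [e * e for e in resid]
    targets = e2[q:]
    _, u = ols(lagged(e2, q, q), targets)
    mean = sum(targets) / len(targets)
    ss_tot = sum((t - mean) ** 2 for t in targets)
    r2 = 1 - sum(x * x for x in u) / ss_tot
    return len(targets) * r2


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical synthetic "prices": a random walk whose increments follow AR(1), phi = 0.6
    dy, prices = 0.0, [100.0]
    for _ in range(1000):
        dy = 0.6 * dy + rng.gauss(0, 1)
        prices.append(prices[-1] + dy)
    best_p, _ = select_arima_p(prices)
    _, resid = ols(lagged(difference(prices), best_p, 5), difference(prices)[5:])
    print("selected ARIMA(%d,1,0); ARCH LM(5) = %.2f" % (best_p, arch_lm(resid, 5)))
```

AIC compares models of different orders on the same effective sample, which is why every candidate drops the first `max_p` observations. A large LM statistic relative to its chi-squared reference suggests the residual variance is time-varying. That is the situation in which fitting an ARCH/GARCH volatility model, such as the ARCH(10) recommended above, becomes worthwhile.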