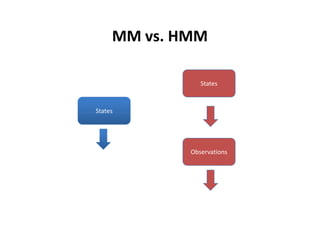

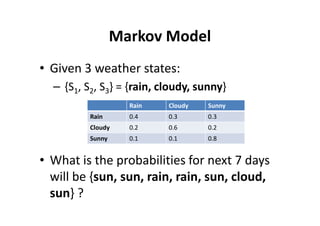

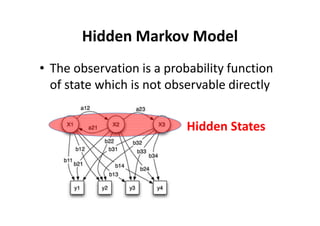

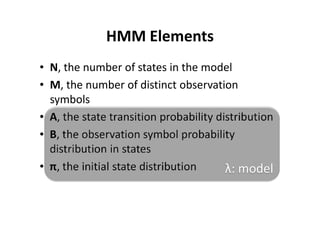

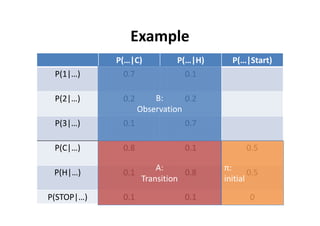

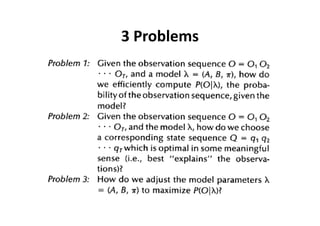

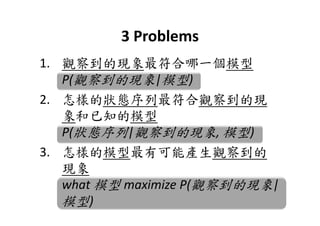

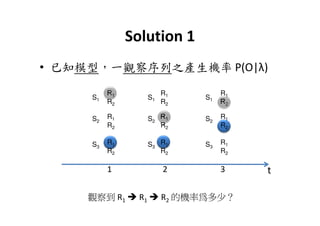

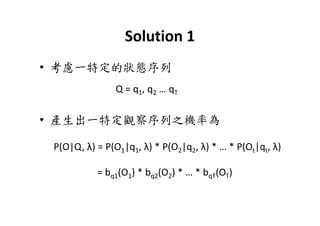

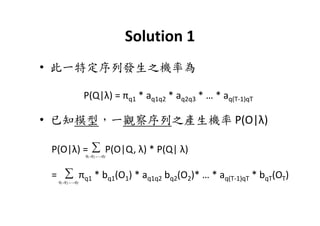

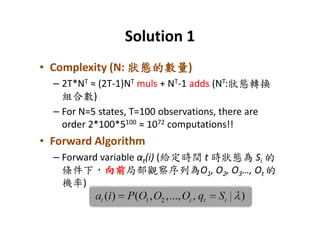

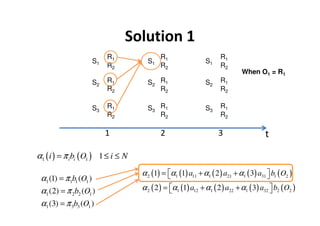

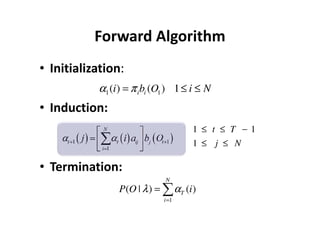

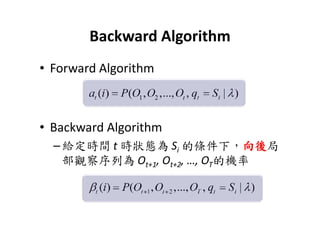

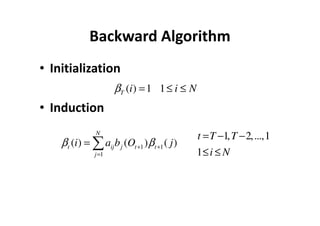

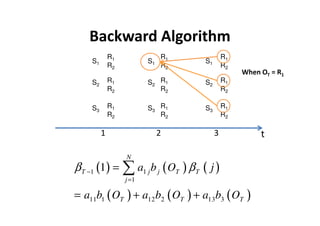

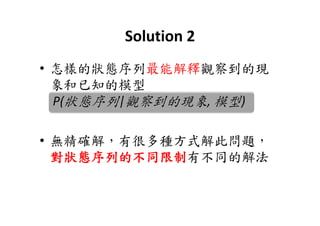

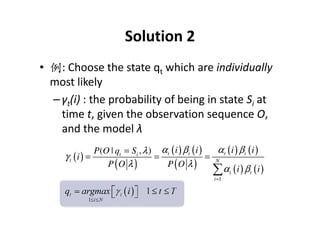

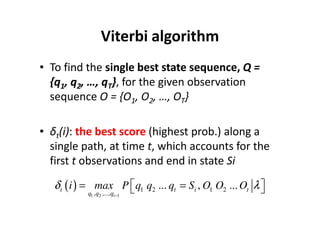

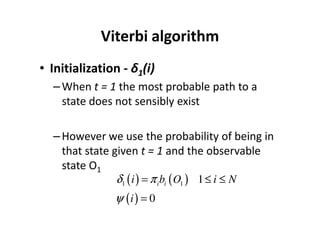

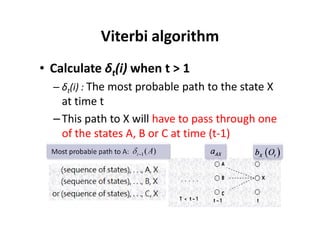

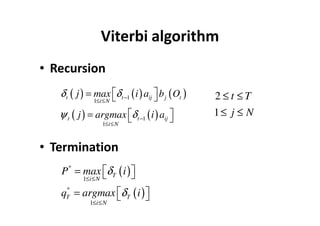

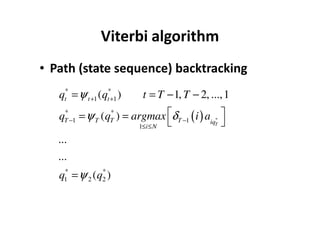

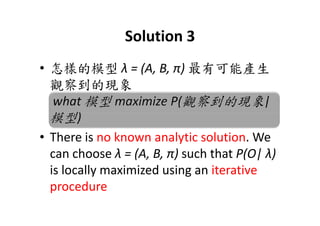

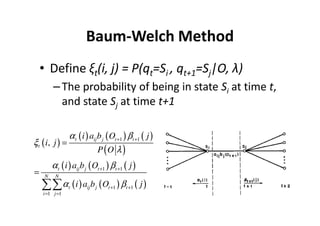

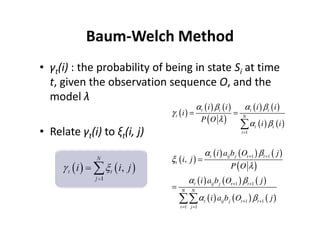

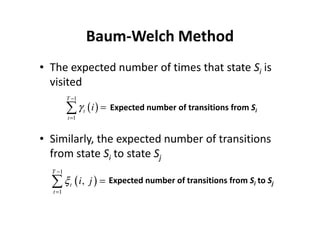

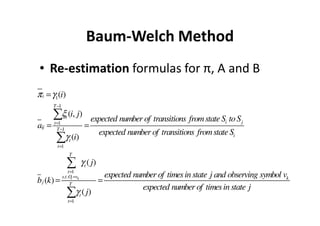

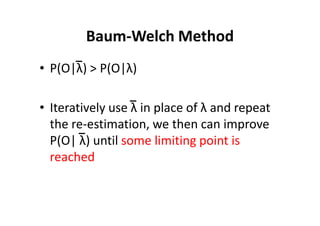

This document introduces Hidden Markov Models (HMMs). It begins with the key difference between Markov models and HMMs: in an HMM the states are hidden and can only be inferred indirectly from the observations they emit. It then outlines the main elements of an HMM: the number of states, the number of observation symbols, the state transition probabilities, the observation probabilities, and the initial state distribution. An example HMM is provided. Finally, it briefly introduces the three common HMM problems: evaluating how likely a given model is to have produced an observation sequence (which allows choosing the most likely among candidate models), determining the most likely hidden state sequence, and estimating the model parameters that are most likely to have generated the observations.
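The elements listed above can be made concrete with a minimal sketch. The model below is hypothetical and is not the example from the slides: the state names, symbol names, and every probability are assumptions chosen only for illustration. `forward` is one standard way to address the first problem (the likelihood of an observation sequence under a model), and `viterbi` is one standard way to address the second (the most likely state sequence). The third problem, parameter estimation, is not sketched here.

```python
# Hypothetical two-state, three-symbol HMM; all names and numbers are illustrative.
states = ["Rainy", "Sunny"]            # N = 2 hidden states
symbols = ["walk", "shop", "clean"]    # M = 3 observation symbols
pi = [0.6, 0.4]                        # initial state distribution
A = [[0.7, 0.3],                       # A[i][j] = P(next state j | current state i)
     [0.4, 0.6]]
B = [[0.1, 0.4, 0.5],                  # B[i][k] = P(symbol k | state i)
     [0.6, 0.3, 0.1]]


def forward(obs):
    """Likelihood of the observation index sequence under the model (forward algorithm)."""
    n = len(pi)
    # alpha[i] = P(observations so far, current state = i)
    alpha = [pi[i] * B[i][obs[0]] for i in range(n)]
    for o in obs[1:]:
        alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][o]
                 for j in range(n)]
    return sum(alpha)


def viterbi(obs):
    """Most likely hidden state index sequence for the observations (Viterbi algorithm)."""
    n = len(pi)
    # delta[i] = probability of the best path that ends in state i
    delta = [pi[i] * B[i][obs[0]] for i in range(n)]
    backptrs = []
    for o in obs[1:]:
        # prev[j] = best predecessor of state j at this step
        prev = [max(range(n), key=lambda i: delta[i] * A[i][j]) for j in range(n)]
        delta = [delta[prev[j]] * A[prev[j]][j] * B[j][o] for j in range(n)]
        backptrs.append(prev)
    # Trace back from the best final state.
    state = max(range(n), key=lambda i: delta[i])
    path = [state]
    for prev in reversed(backptrs):
        state = prev[state]
        path.append(state)
    path.reverse()
    return path


obs = [0, 1, 2]                        # walk, shop, clean
likelihood = forward(obs)              # about 0.033612 for this toy model
best_path = [states[s] for s in viterbi(obs)]   # ['Sunny', 'Rainy', 'Rainy']
```

Both functions work on plain probabilities to keep the sketch short. For long observation sequences these products underflow, so practical implementations usually switch to log probabilities or rescale at each step.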