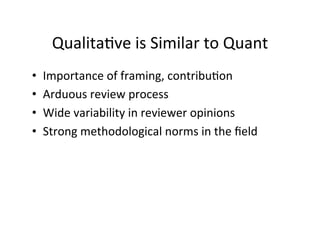

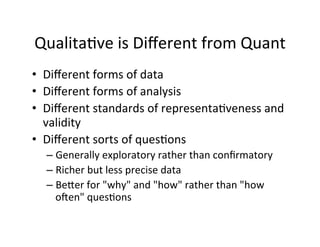

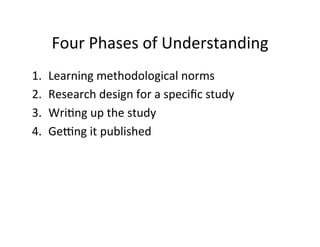

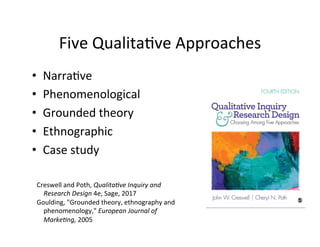

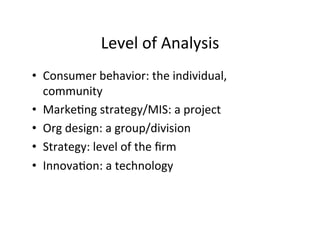

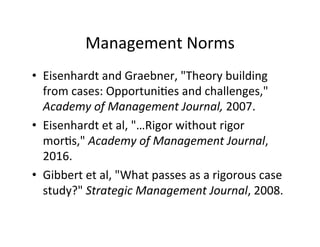

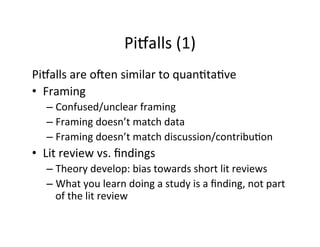

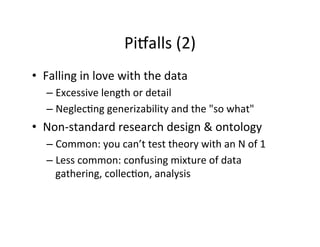

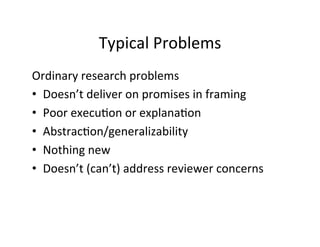

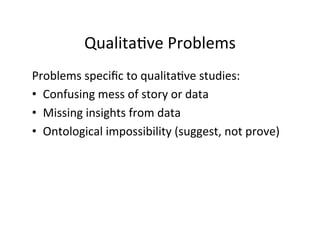

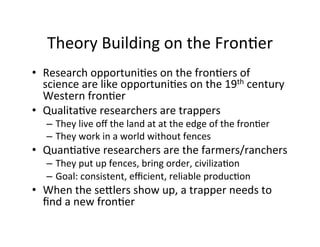

The document discusses the complexities of publishing qualitative research, including the nuances that distinguish qualitative from quantitative approaches, and highlights key methodological norms and phases of research design. It emphasizes the importance of framing research questions, rigorous data collection and analysis, and the challenges faced in getting research published. The text also explores both common problems in qualitative studies and successful strategies for addressing them, providing insights into effective research practices and publication standards.

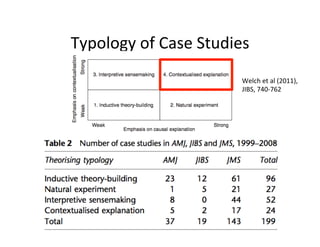

![“Contextualized” Explanation • "Overall, case studies that emphasised causal explanation … were in the minority. … [W]e paid attention to how authors in this quadrant were able to combine the inherent strength of the case study to contextualise with its explanatory potential. … • "In this quadrant, authors were more open about the explanatory aims of their paper … what typifies the authors’ language is a very particular view of causality as a complex and dynamic set of interactions that are treated holistically." Welch et al (2011), JIBS, p. 753-754](https://image.slidesharecdn.com/publishingqualitativeresearch-190101215004/85/Publishing-Qualitative-Research-43-320.jpg)

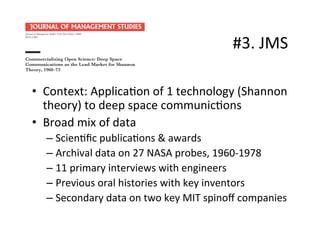

![West (2008): Final Framing • "Technological breakthroughs can [often] be traced back to basic research disseminated through the peer-reviewed process of open science, often from public research institutions such as universities. • "But how does such open science† get commercialized? In particular, absent an explicit policy to align the interests of scientists and firms, how does the knowledge disseminated in open science become incorporated into the offerings of for-profit companies?" † Paul A. David (1998), ‘Common agency contracting and the emergence of open science institutions,’ American Economic Review, 88](https://image.slidesharecdn.com/publishingqualitativeresearch-190101215004/85/Publishing-Qualitative-Research-44-320.jpg)