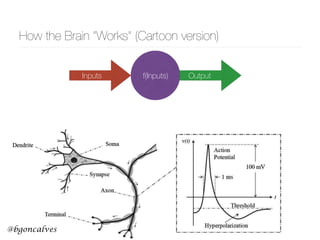

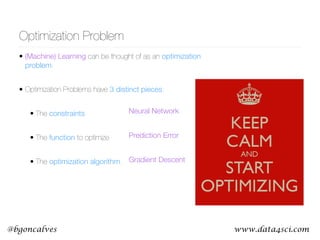

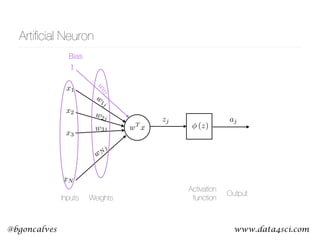

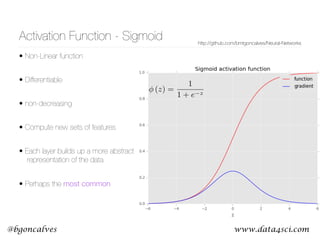

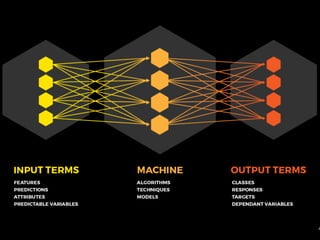

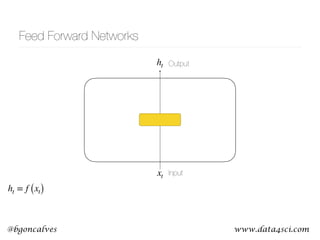

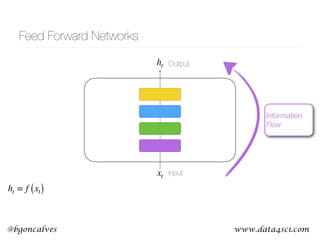

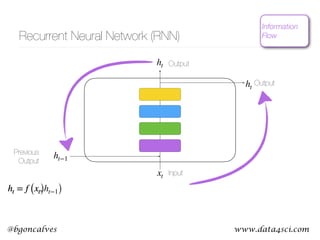

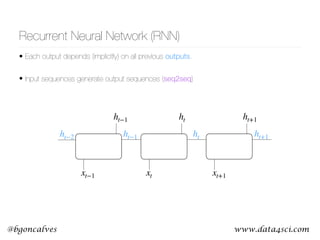

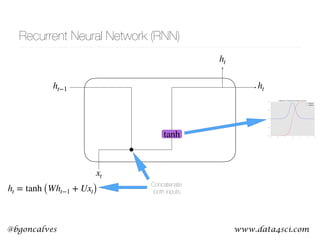

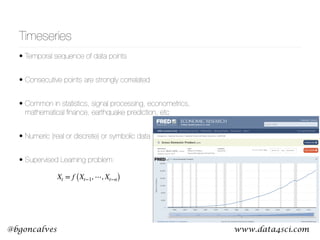

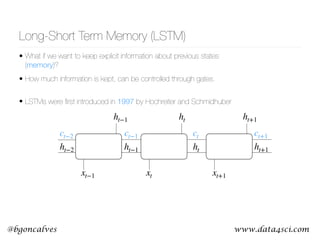

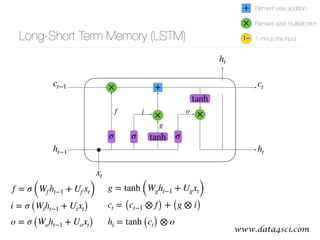

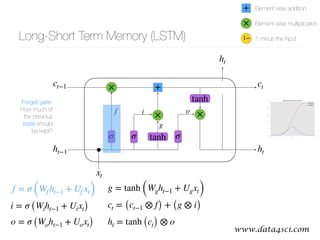

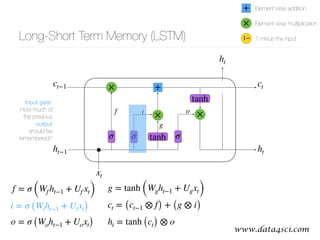

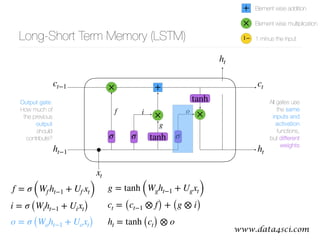

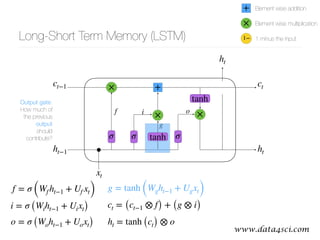

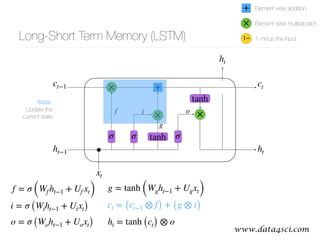

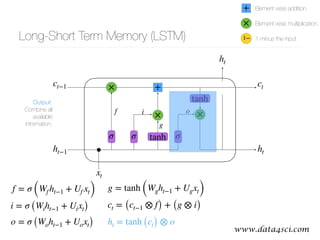

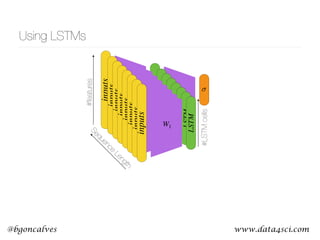

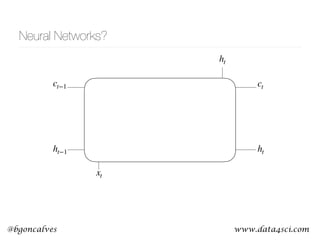

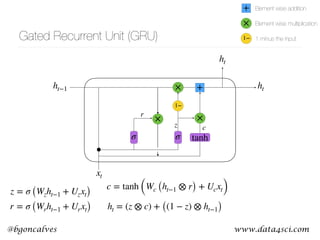

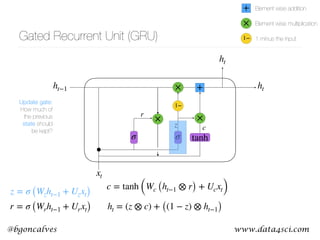

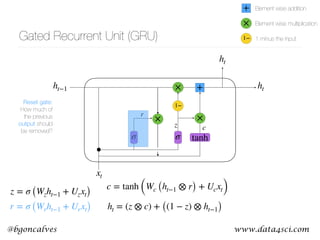

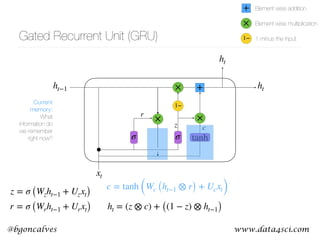

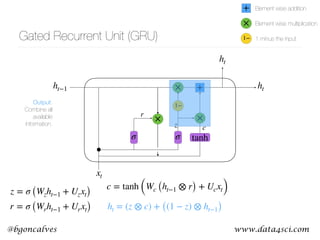

This document gives an overview of recurrent neural networks (RNNs) and their application to time series analysis. It covers the underlying concepts, including feedforward networks, backpropagation, and long short-term memory (LSTM) architectures, and discusses the optimization problems that arise in machine learning. It also introduces gated recurrent units (GRUs) as a simplified alternative to LSTMs and highlights the use of Keras for building neural network models. Applications of these techniques include language modeling, speech recognition, and time series forecasting.
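
One way to make the "simplified alternative" claim concrete is to count trainable parameters. The sketch below assumes the textbook formulations, in which an LSTM layer has four gate-like blocks (input, forget, and output gates plus the candidate cell state) and a GRU layer has three (update gate, reset gate, candidate hidden state), each with input weights, recurrent weights, and one bias vector. The function names are hypothetical and chosen for illustration only. Library implementations may count slightly differently, for example when they use additional bias terms.

```python
def gate_block_params(input_dim: int, units: int) -> int:
    """Parameters in one gate-like block: input weights, recurrent weights, bias."""
    return units * input_dim + units * units + units


def lstm_params(input_dim: int, units: int) -> int:
    # Textbook LSTM: input, forget, and output gates plus the candidate cell state.
    return 4 * gate_block_params(input_dim, units)


def gru_params(input_dim: int, units: int) -> int:
    # Textbook GRU: update gate, reset gate, and the candidate hidden state.
    return 3 * gate_block_params(input_dim, units)


if __name__ == "__main__":
    input_dim, units = 8, 32  # illustrative sizes, not taken from the document
    print("LSTM parameters:", lstm_params(input_dim, units))
    print("GRU parameters: ", gru_params(input_dim, units))
```

Under these assumptions a GRU layer carries three quarters of the parameters of an LSTM layer of the same width, which is one sense in which it is the simpler of the two.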