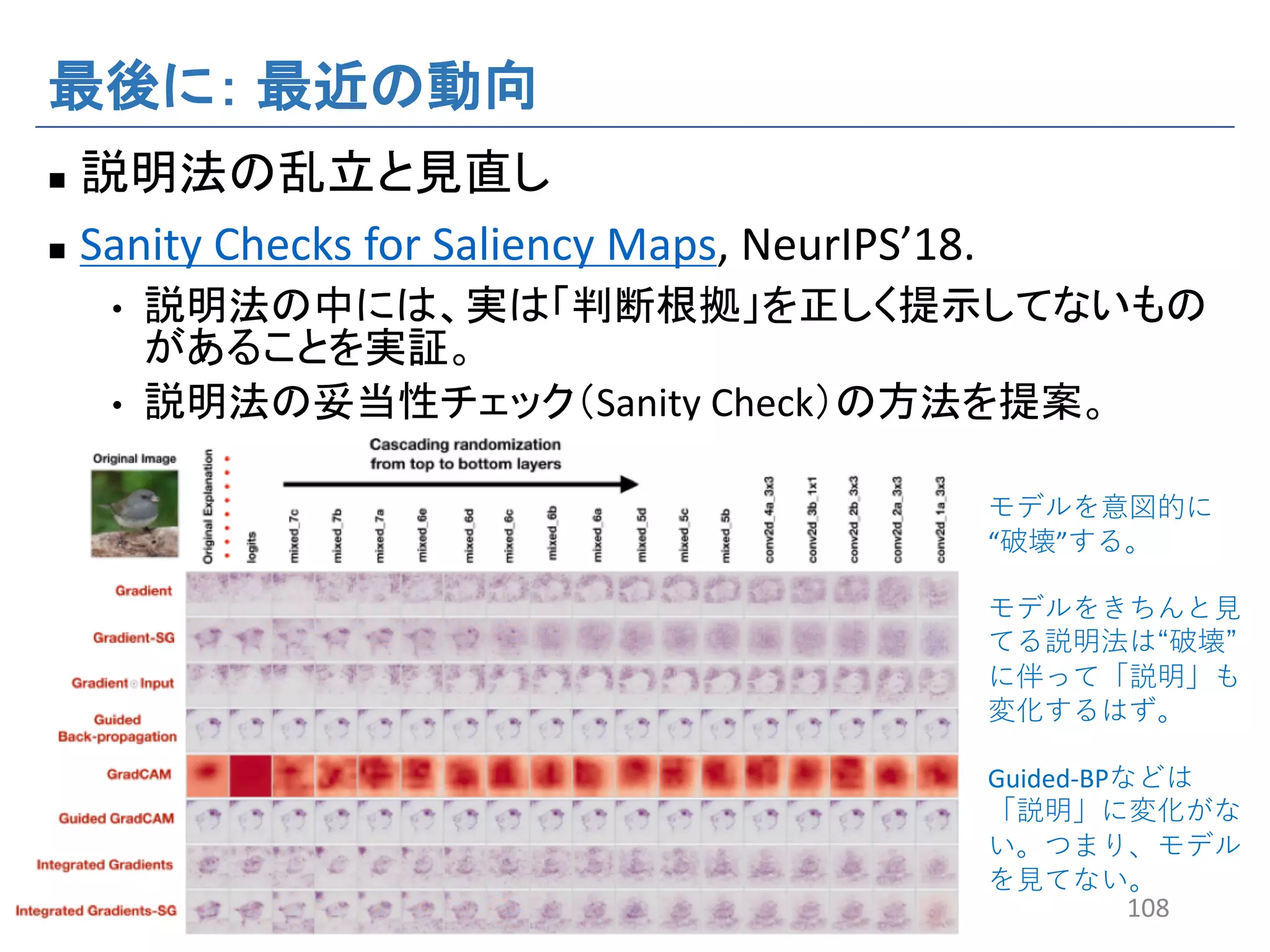

The document discusses the rights of data subjects under the EU GDPR, in particular with respect to automated individual decision-making, including profiling (Article 22). It outlines the conditions under which such decisions may be made and stresses the safeguards needed to protect the data subject's rights, freedoms, and legitimate interests. It also lists references to machine learning interpretability methods, papers, and their implementations.

![Slide 16](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-16-2048.jpg)

- Why Should I Trust You?: Explaining the Predictions of Any Classifier, KDD'16 [Python LIME; R LIME]
- A Unified Approach to Interpreting Model Predictions, NIPS'17 [Python SHAP]
- Anchors: High-Precision Model-Agnostic Explanations, AAAI'18 [Python Anchor]
- Understanding Black-box Predictions via Influence Functions, ICML'17 [Python influence-release]

![Slide 17](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-17-2048.jpg)

- Born Again Trees
- Making Tree Ensembles Interpretable: A Bayesian Model Selection Approach, AISTATS'18 [Python defragTrees]

![Slide 18](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-18-2048.jpg)

[Python+Tensorflow saliency; DeepExplain]

- Striving for Simplicity: The All Convolutional Net (GuidedBackprop)
- On Pixel-Wise Explanations for Non-Linear Classifier Decisions by Layer-Wise Relevance Propagation (Epsilon-LRP)
- Axiomatic Attribution for Deep Networks (IntegratedGrad)
- SmoothGrad: Removing Noise by Adding Noise (SmoothGrad)
- Learning Important Features Through Propagating Activation Differences (DeepLIFT)

![Slide 20](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-20-2048.jpg)

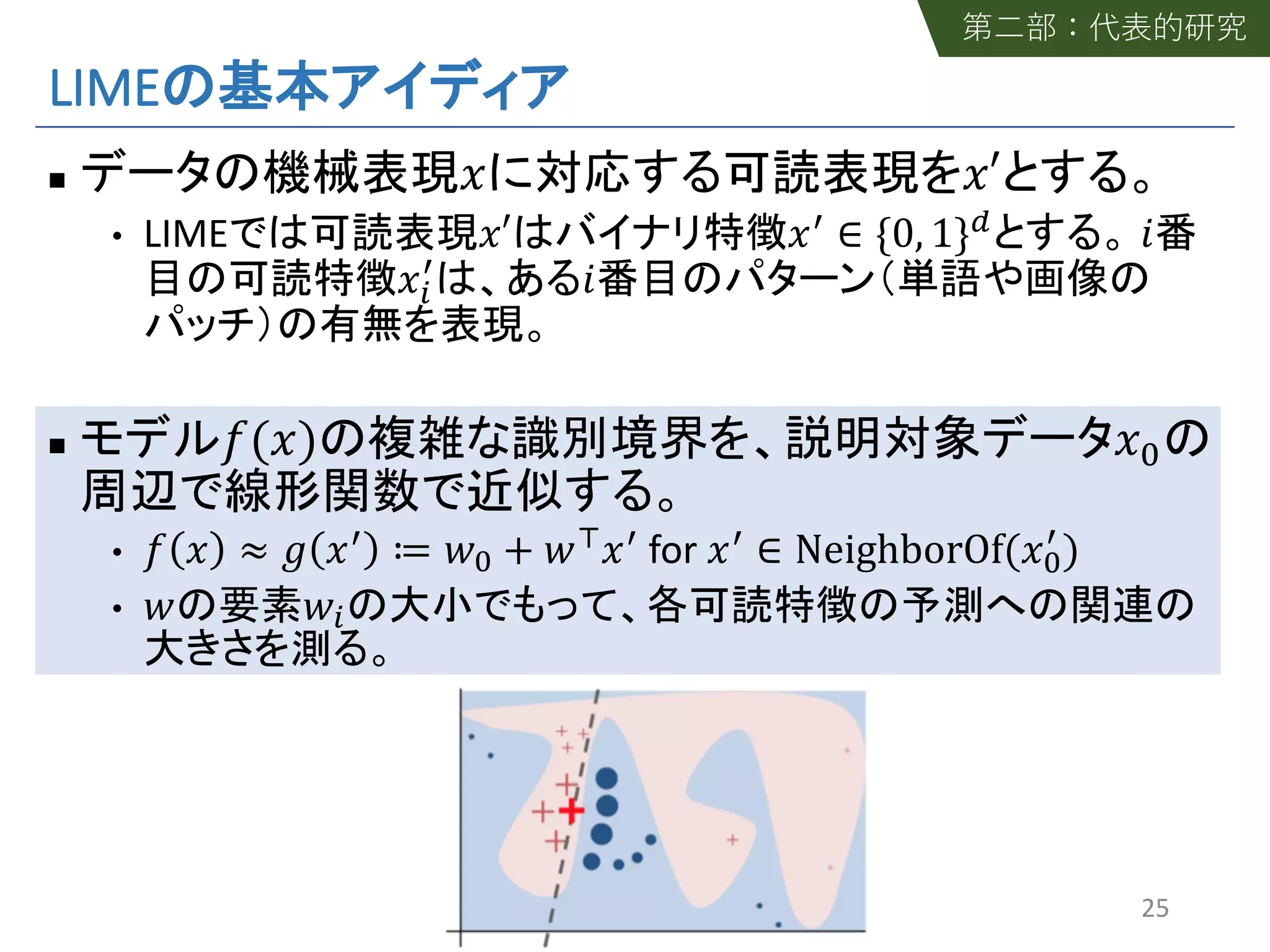

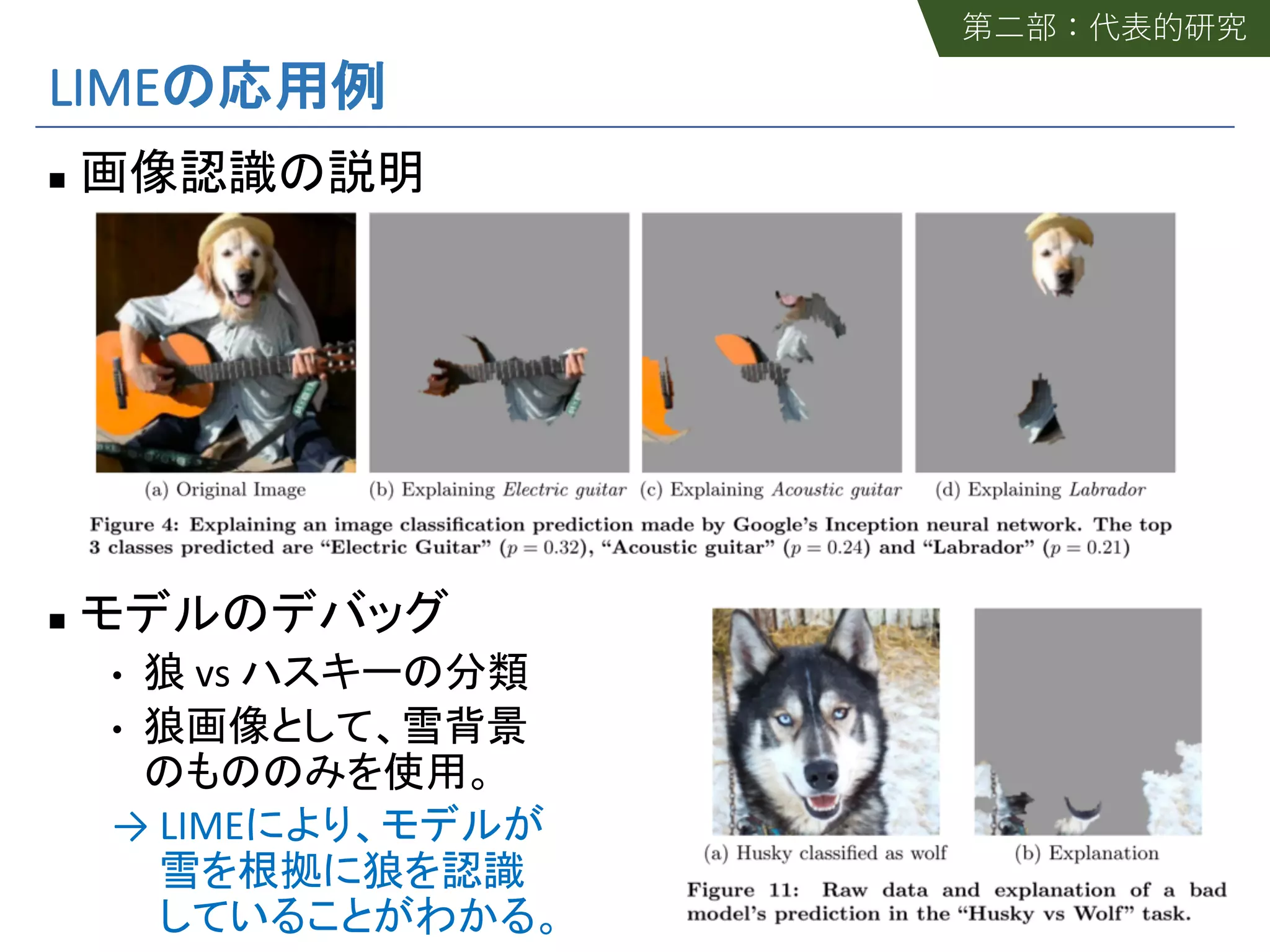

![Slide 22: LIME](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-22-2048.jpg)

- Why Should I Trust You?: Explaining the Predictions of Any Classifier, KDD'16 [Python LIME; R LIME]
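LIME explains one prediction by fitting a simple model that is faithful to the black box in the neighbourhood of the instance. The following is a minimal sketch, assuming numeric features perturbed with Gaussian noise, an exponential proximity kernel, and a weighted linear surrogate fit through the normal equations. The names `lime_explain` and `solve` and all parameter defaults are hypothetical; the actual LIME package also maps inputs to interpretable binary representations and performs feature selection.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a @ w = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_explain(f, x, num_samples=500, scale=0.5, kernel_width=1.0, seed=0):
    """Fit a locally weighted linear surrogate to black-box f around x.

    Returns [intercept, coef_1, ..., coef_d]."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the weighted normal equations (Z^T W Z) w = Z^T W y.
    ztz = [[0.0] * (d + 1) for _ in range(d + 1)]
    zty = [0.0] * (d + 1)
    for _ in range(num_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]  # perturbed neighbour of x
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)  # closer samples count more
        row = [1.0] + z  # leading 1 for the intercept
        y = f(z)
        for i in range(d + 1):
            zty[i] += weight * row[i] * y
            for j in range(d + 1):
                ztz[i][j] += weight * row[i] * row[j]
    return solve(ztz, zty)
```

For a nonlinear model such as `lambda z: z[0] ** 2 + z[1]` explained at `[1.0, 0.0]`, the coefficients should approximate the local slopes (about 2 and 1); for a linear model the surrogate recovers the model exactly.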

![Slide 28: SHAP](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-28-2048.jpg)

- A Unified Approach to Interpreting Model Predictions, NIPS'17 [Python SHAP]
- LIME
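SHAP attributes a prediction to features with Shapley values, which the paper approximates (for example with Kernel SHAP). As a minimal sketch, the values can be computed exactly for a handful of features by enumerating every coalition, assuming that an "absent" feature is replaced by its value in a single baseline vector. The function name `shapley_values` is hypothetical, and the enumeration costs $O(2^d)$ model calls per feature.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x; features outside a coalition take baseline values."""
    d = len(x)

    def value(coalition):
        # Features in the coalition come from x, all others from the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(d)]
        return f(z)

    phi = [0.0] * d
    for i in range(d):
        others = [j for j in range(d) if j != i]
        for size in range(d):
            # Shapley weight |S|! (d - |S| - 1)! / d! for coalitions S not containing i.
            weight = factorial(size) * factorial(d - size - 1) / factorial(d)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi
```

By the efficiency property, the attributions always sum to `f(x) - f(baseline)`; an interaction term such as `2 * z[1] * z[2]` is split equally between the two features involved.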

![Slide 36: Anchor](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-36-2048.jpg)

- Anchors: High-Precision Model-Agnostic Explanations, AAAI'18 [Python Anchor]

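![Slide 42: Anchor](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-42-2048.jpg)

- An anchor for instance $x$ is a rule $A$ (a set of feature predicates) with $A(x) = 1$.
- Anchor search maximizes the coverage of $A$ subject to a probabilistic precision constraint:

$$\max_{A}\ \mathbb{E}_{\mathcal{D}(z)}\left[A(z)\right] \quad \text{s.t.} \quad \Pr\left(\mathbb{E}_{\mathcal{D}(z \mid A)}\left[\mathbb{1}_{f(x) = f(z)}\right] \ge \tau\right) \ge 1 - \delta$$

- The constraint requires the precision of $A$, i.e. the fraction of perturbations $z$ satisfying $A$ that receive the same prediction as $x$, to be at least $\tau$ with probability at least $1 - \delta$.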
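The objective above can be illustrated on a tiny discrete problem. This is a minimal sketch, assuming a small finite domain with a uniform distribution $\mathcal{D}$, so that precision and coverage can be computed exactly rather than estimated; since nothing is sampled, the $1 - \delta$ confidence requirement is trivially met. The paper instead estimates precision from sampled perturbations and searches candidate rules adaptively. An anchor here is a hypothetical representation: a set of feature indices fixed to their values in `x`.

```python
from itertools import combinations, product


def precision_and_coverage(f, x, anchor, domain):
    """Exact precision and coverage of an anchor over a finite, uniformly weighted domain."""
    covered = [z for z in domain if all(z[i] == x[i] for i in anchor)]
    coverage = len(covered) / len(domain)
    # x itself satisfies the anchor, so `covered` is never empty when x is in the domain.
    precision = sum(f(z) == f(x) for z in covered) / len(covered)
    return precision, coverage


def best_anchor(f, x, domain, tau=0.95):
    """Return the feature subset with maximal coverage among those with precision >= tau."""
    best, best_cov = None, -1.0
    for size in range(len(x) + 1):
        for anchor in combinations(range(len(x)), size):
            prec, cov = precision_and_coverage(f, x, anchor, domain)
            if prec >= tau and cov > best_cov:
                best, best_cov = set(anchor), cov
    return best, best_cov


domain = list(product([0, 1], repeat=3))
model = lambda z: int(z[0] == 1 and z[1] == 1)
anchor, coverage = best_anchor(model, (1, 1, 0), domain)
```

For this toy model, fixing features 0 and 1 guarantees the same prediction, so that rule is the anchor, and it covers a quarter of the domain.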

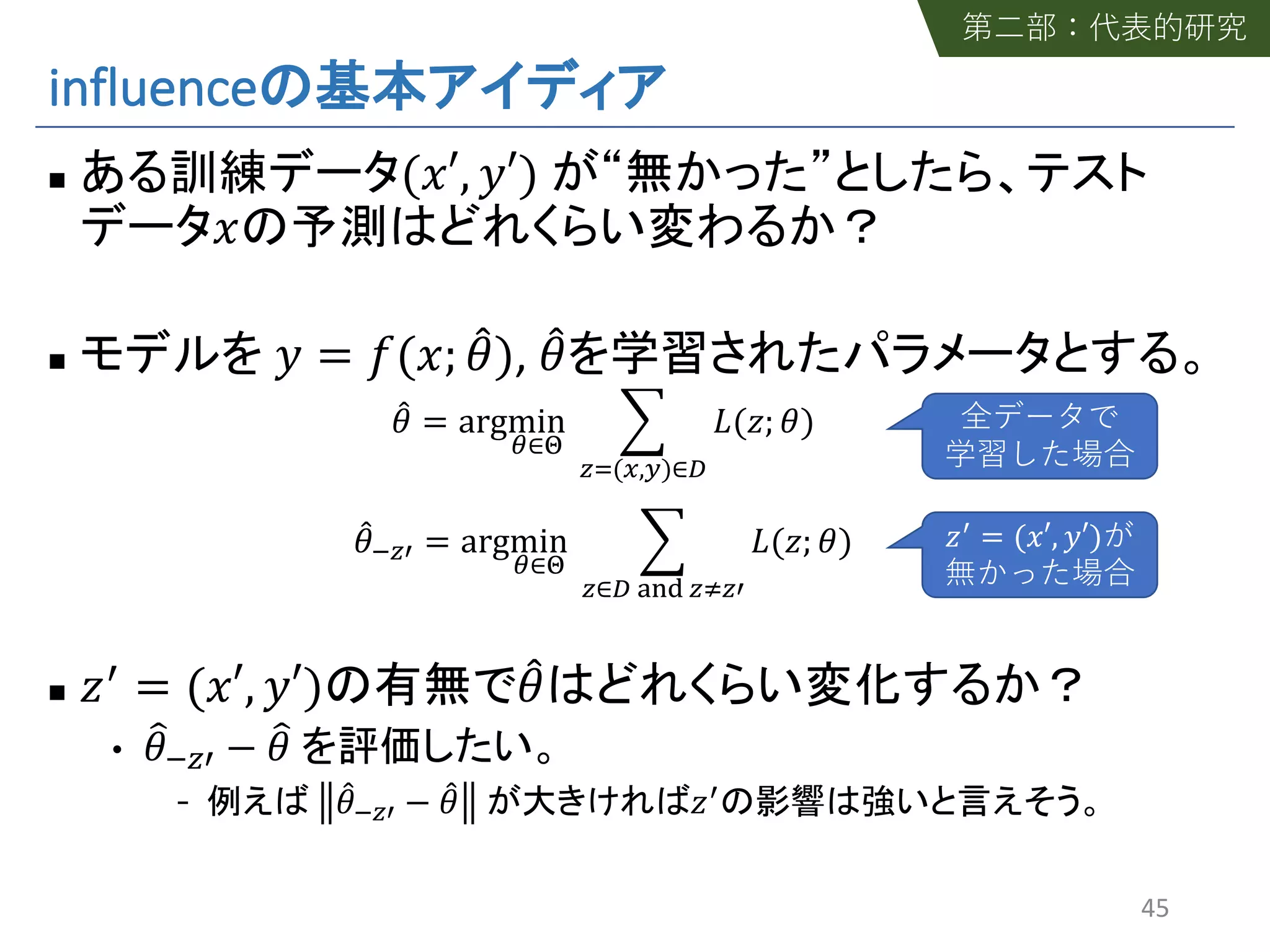

![Slide 44: influence](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-44-2048.jpg)

- Understanding Black-box Predictions via Influence Functions, ICML'17 [Python influence-release]
- For a test point $(x', y')$, find the training points $x$ that most influence its prediction.
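Influence functions estimate how upweighting a training point $z$ changes the loss at a test point: $\mathcal{I}_{\text{up,loss}}(z, z_{\text{test}}) = -\nabla_\theta L(z_{\text{test}}, \hat\theta)^\top H_{\hat\theta}^{-1} \nabla_\theta L(z, \hat\theta)$, where $H_{\hat\theta}$ is the Hessian of the empirical risk. The sketch below assumes a one-parameter least-squares model (a line through the origin), so $H$ is a scalar and no inverse-Hessian approximation is needed; for large models the paper approximates inverse-Hessian-vector products instead. Function names are hypothetical.

```python
def fit(xs, ys):
    """Least-squares slope through the origin, minimizing (1/n) * sum 0.5 * (y - theta * x)^2."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def grad_loss(x, y, theta):
    """Gradient w.r.t. theta of the per-example loss 0.5 * (y - theta * x)^2."""
    return -(y - theta * x) * x


def influence_on_test_loss(xs, ys, x_test, y_test):
    """I_up,loss(z_i, z_test) = -grad L(z_test) * H^-1 * grad L(z_i) for each training point z_i."""
    theta = fit(xs, ys)
    hessian = sum(x * x for x in xs) / len(xs)  # second derivative of the empirical risk
    g_test = grad_loss(x_test, y_test, theta)
    return [-g_test * grad_loss(x, y, theta) / hessian for x, y in zip(xs, ys)]


# Hypothetical data whose last point is an outlier.
influences = influence_on_test_loss([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 10.0], 1.0, 1.0)
```

Removing a training point corresponds to upweighting it by $-1/n$, so the test loss is expected to change by roughly $-\tfrac{1}{n}\mathcal{I}_{\text{up,loss}}$. A positive value, as for the outlier here, flags a point whose removal should lower the test loss. At the optimum the training gradients sum to zero, so the influences do too.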

![Slide 53](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-53-2048.jpg)

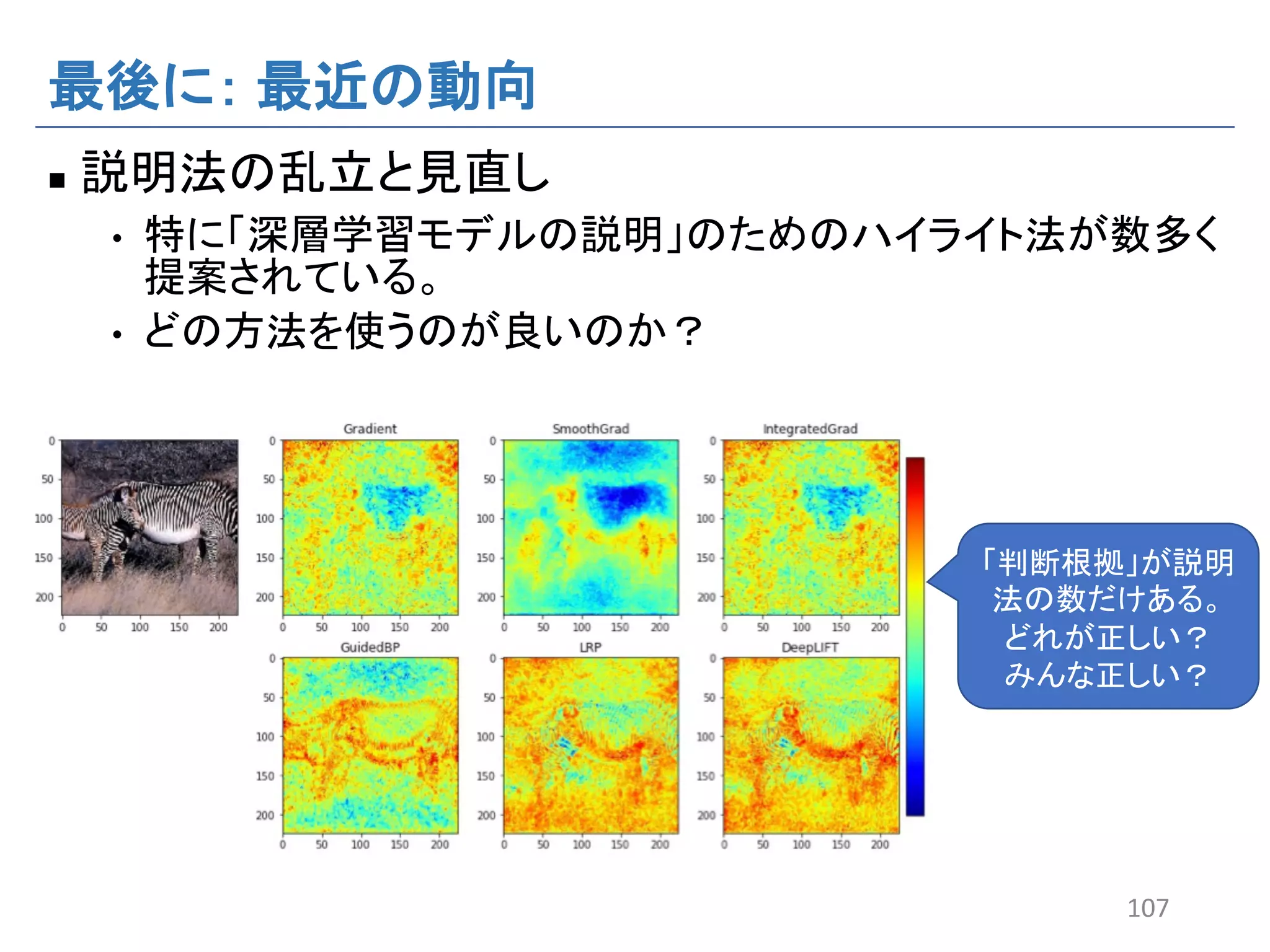

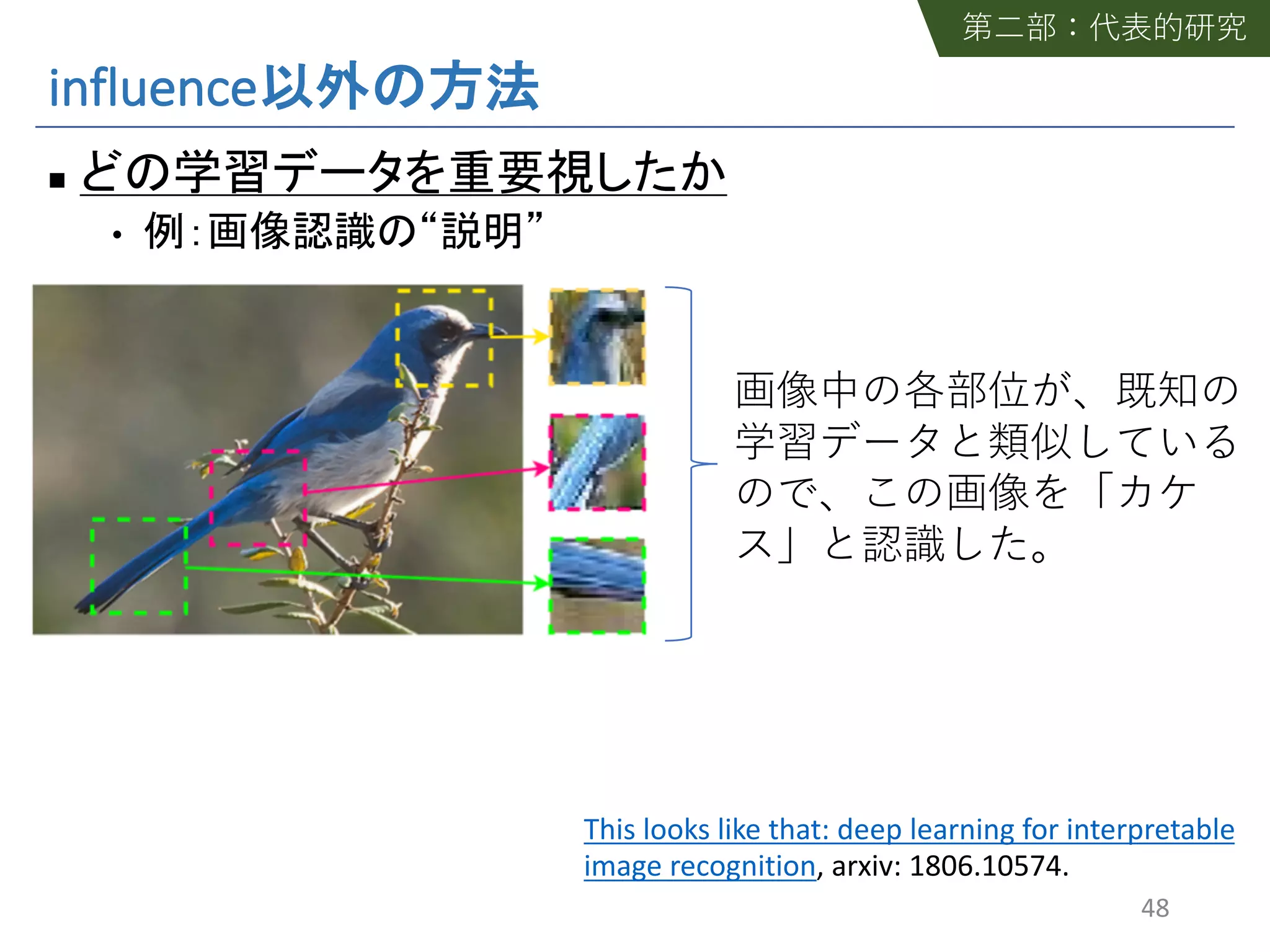

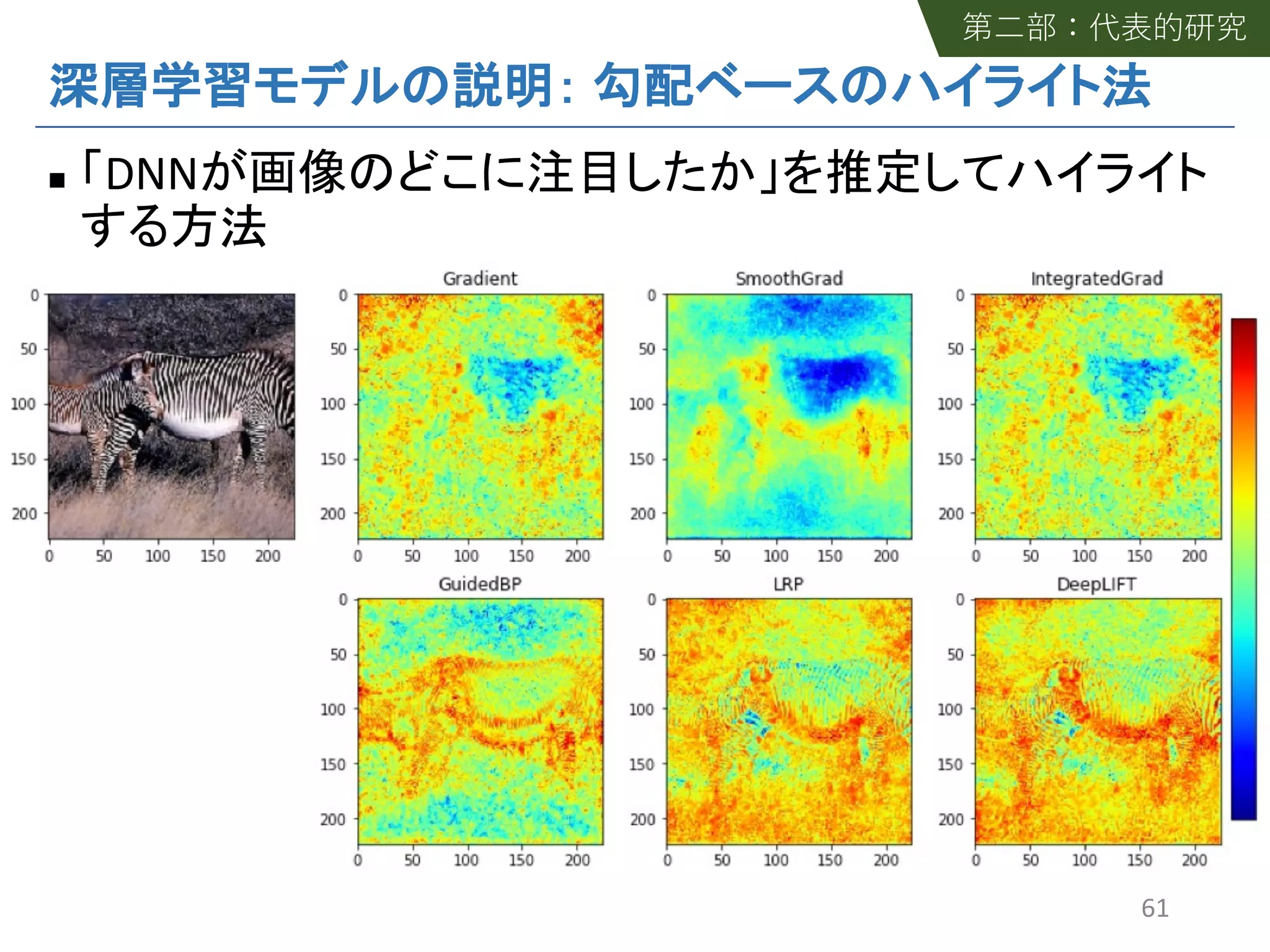

![Slide 57](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-57-2048.jpg)

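![Slide 63](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-63-2048.jpg)

- Model $y = f(x)$ with input $x$.
- Gradient saliency [Simonyan et al., arXiv'14]: the importance of input feature $x_i$ is measured by $\frac{\partial f(x)}{\partial x_i}$.
- If a small change in $x_i$ causes a large change in $f(x)$, then $\frac{\partial f(x)}{\partial x_i}$ is large and $x_i$ is regarded as important; a small derivative marks $x_i$ as unimportant.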
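The saliency $\partial f(x) / \partial x_i$ can be sketched numerically. This minimal example assumes a plain Python function in place of a neural network and approximates each partial derivative with central differences; real implementations obtain the gradient by backpropagation, and Simonyan et al. additionally take its magnitude over colour channels. The function name `saliency` and step size `h` are hypothetical.

```python
def saliency(f, x, h=1e-5):
    """Approximate df(x)/dx_i for every input feature by central differences."""
    grads = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grads.append((f(up) - f(down)) / (2 * h))
    return grads


scores = saliency(lambda z: 3 * z[0] + z[1] ** 2, [1.0, 2.0])
```

Here the second feature receives the larger score (about 4 versus 3), because at `z[1] = 2` a small change in it moves the output more.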

![Slide 64](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-64-2048.jpg)

- Gradient $\frac{\partial f(x)}{\partial x_i}$ [Simonyan et al., arXiv'14]
- GuidedBP [Springenberg et al., arXiv'14]: modified back propagation
- LRP [Bach et al., PLoS ONE'15]
- IntegratedGrad [Sundararajan et al., arXiv'17]
- SmoothGrad [Smilkov et al., arXiv'17]
- DeepLIFT [Shrikumar et al., ICML'17]
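Two of the listed refinements are easy to state in terms of the plain gradient. IntegratedGrad averages the gradient along the straight path from a baseline $x'$ to $x$, $\mathrm{IG}_i(x) = (x_i - x'_i)\int_0^1 \frac{\partial f(x' + \alpha(x - x'))}{\partial x_i}\,d\alpha$, and satisfies completeness, $\sum_i \mathrm{IG}_i(x) = f(x) - f(x')$. SmoothGrad averages gradients taken at copies of $x$ perturbed by Gaussian noise. The sketch below assumes central-difference gradients as a stand-in for backpropagation, a midpoint Riemann sum for the integral, and an absolute noise level `sigma`; all names and defaults are hypothetical.

```python
import random


def grad(f, x, h=1e-5):
    """Central-difference gradient of f at x (stand-in for backpropagation)."""
    g = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        g.append((f(up) - f(down)) / (2 * h))
    return g


def integrated_gradients(f, x, baseline, steps=100):
    """Midpoint Riemann-sum approximation of Integrated Gradients from baseline to x."""
    avg = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, gi in enumerate(grad(f, point)):
            avg[i] += gi / steps  # running average of the path gradient
    return [(xi - b) * a for xi, b, a in zip(x, baseline, avg)]


def smoothgrad(f, x, sigma=0.1, n=50, seed=0):
    """Average the gradient over n copies of x perturbed with N(0, sigma^2) noise."""
    rng = random.Random(seed)
    avg = [0.0] * len(x)
    for _ in range(n):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        for i, gi in enumerate(grad(f, noisy)):
            avg[i] += gi / n
    return avg


ig = integrated_gradients(lambda z: z[0] ** 2 + 3 * z[0] * z[1], [1.0, 2.0], [0.0, 0.0])
sg = smoothgrad(lambda z: 2 * z[0] - z[1], [1.0, 2.0])
```

For the quadratic model above, the attributions are 4 and 3 and add up to $f(x) - f(x') = 7$; for a linear model, SmoothGrad simply returns its weights because the gradient is the same everywhere.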

![Slide 65](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-65-2048.jpg)

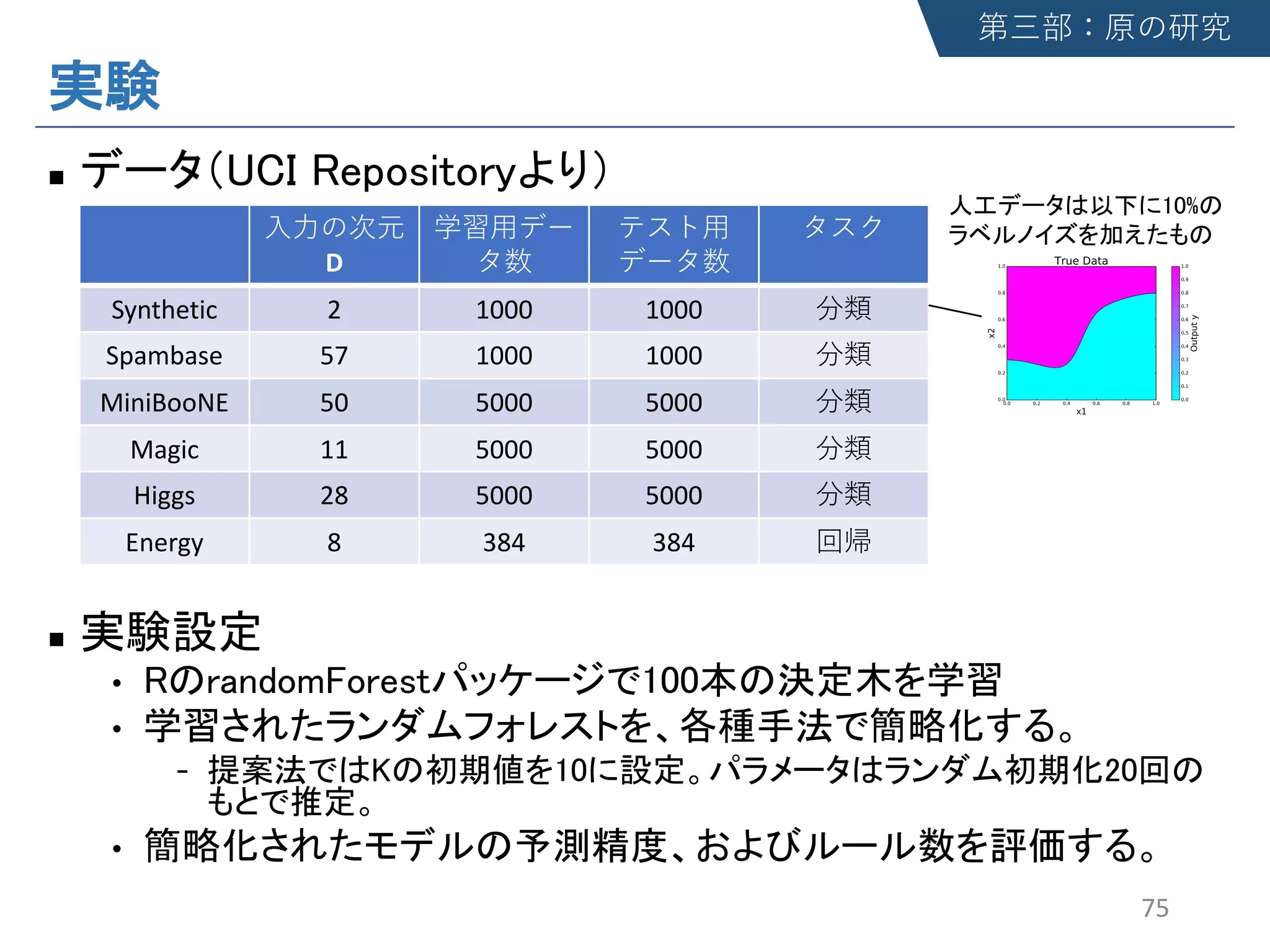

![Slide 69: defragTrees](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-69-2048.jpg)

- Making Tree Ensembles Interpretable: A Bayesian Model Selection Approach, AISTATS'18 [Python defragTrees]

Example rules:

- when Relationship ≠ Not-in-family, Wife; Capital Gain < 7370
- when Relationship ≠ Not-in-family; Capital Gain >= 7370
- when Relationship ≠ Not-in-family, Unmarried; Capital Gain < 5095; Capital Loss < 2114
- when Relationship = Not-in-family; Country ≠ China, Peru; Capital Gain < 5095
- when Relationship ≠ Not-in-family; Country ≠ China; Capital Gain < 5095
- when Relationship ≠ Not-in-family; Capital Gain >= 7370
- …
- …
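Each rule above is a conjunction of simple conditions, where a list after "≠" means the value lies outside that set. As a minimal sketch of how such rules are read, the code below encodes the first four rules and reports which ones a record satisfies. The outcome attached to each rule is not recoverable from the slide, so the sketch only checks which rules fire; the encoding, function names, and example records are hypothetical.

```python
import operator

# Condition operators; "not in" encodes "Relationship ≠ Not-in-family, Wife" etc.
OPS = {
    "<": operator.lt,
    ">=": operator.ge,
    "in": lambda value, allowed: value in allowed,
    "not in": lambda value, excluded: value not in excluded,
}

# The first four rules from the slide, each a list of (feature, operator, threshold).
RULES = [
    [("Relationship", "not in", {"Not-in-family", "Wife"}), ("Capital Gain", "<", 7370)],
    [("Relationship", "not in", {"Not-in-family"}), ("Capital Gain", ">=", 7370)],
    [("Relationship", "not in", {"Not-in-family", "Unmarried"}),
     ("Capital Gain", "<", 5095), ("Capital Loss", "<", 2114)],
    [("Relationship", "in", {"Not-in-family"}),
     ("Country", "not in", {"China", "Peru"}), ("Capital Gain", "<", 5095)],
]


def matches(rule, record):
    """True if the record satisfies every condition of the rule."""
    return all(OPS[op](record[feature], value) for feature, op, value in rule)


def matching_rules(rules, record):
    """Indices of all rules whose conditions the record satisfies."""
    return [i for i, rule in enumerate(rules) if matches(rule, record)]


record = {"Relationship": "Husband", "Capital Gain": 8000, "Capital Loss": 0, "Country": "Japan"}
fired = matching_rules(RULES, record)
```

With a capital gain of 8000 and a relationship other than Not-in-family, only the second rule fires.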

![Slide 81](https://image.slidesharecdn.com/harastairseminar181221v1-181218021738/75/slide-81-2048.jpg)

- Enumerate Lasso Solutions for Feature Selection, AAAI'17 [Python LassoVariants]
- Approximate and Exact Enumeration of Rule Models, AAAI'18