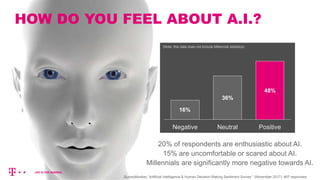

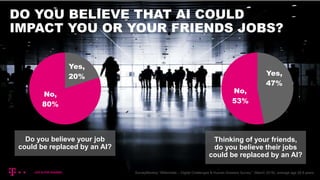

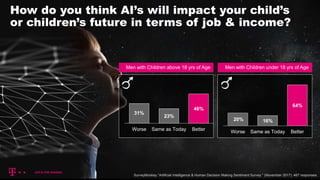

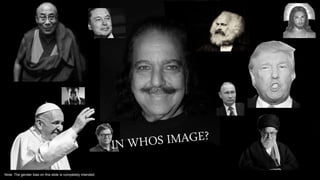

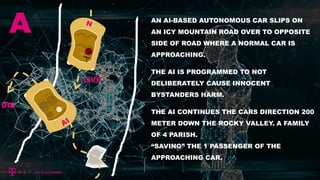

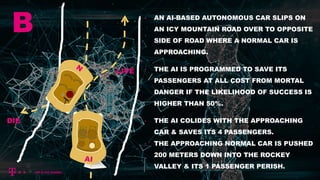

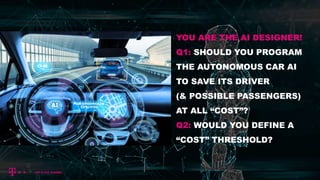

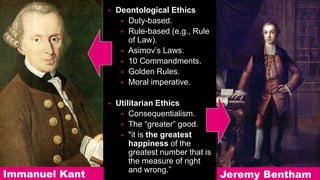

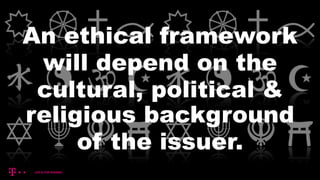

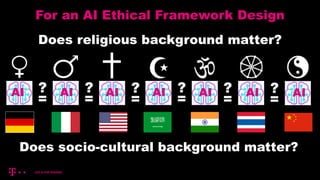

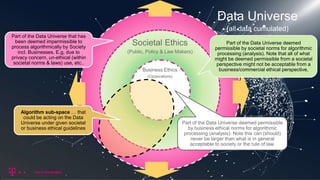

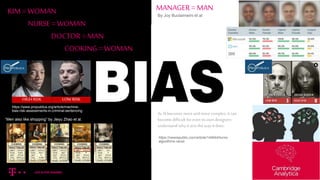

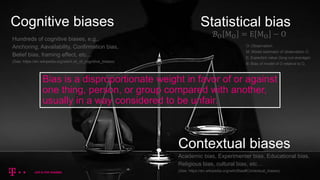

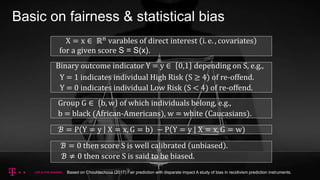

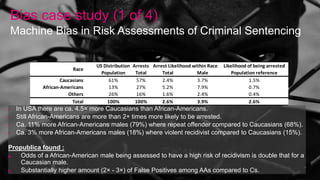

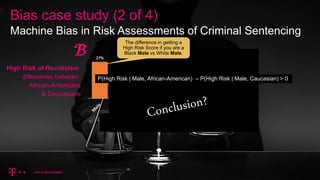

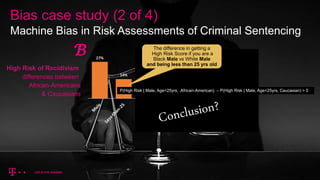

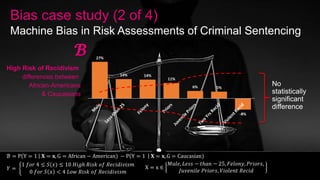

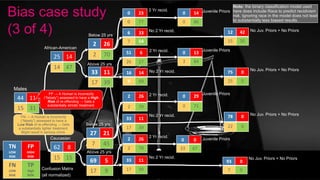

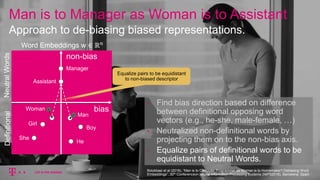

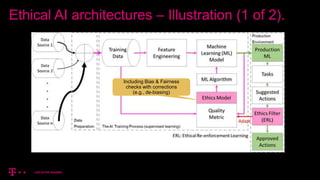

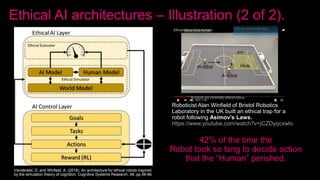

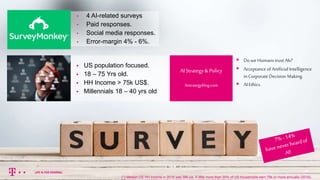

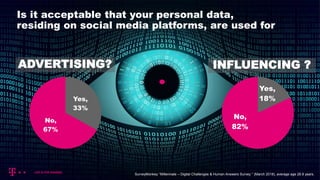

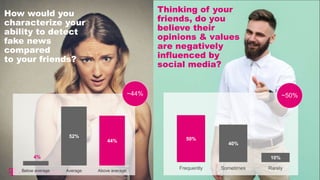

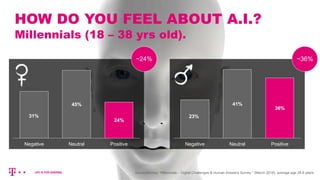

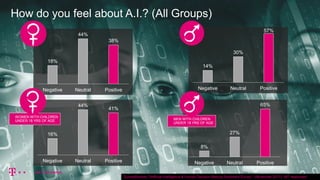

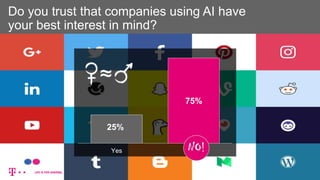

The document discusses ethical concerns related to artificial intelligence (AI), focusing on public sentiment and potential job impacts, as indicated by various surveys. It presents ethical dilemmas faced by AI designers, particularly in programming the decisions of autonomous vehicles, and highlights bias in AI, notably in criminal sentencing algorithms. It emphasizes the need for ethical frameworks in AI development that account for cultural and societal norms when addressing bias and fairness.