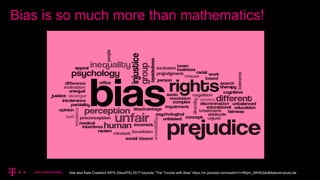

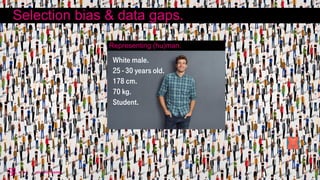

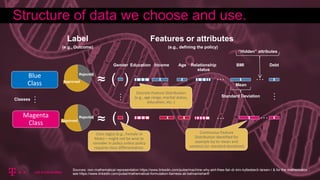

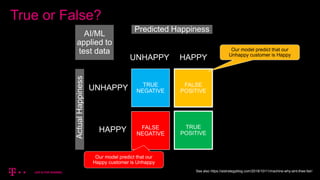

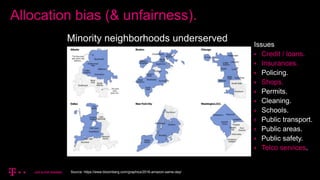

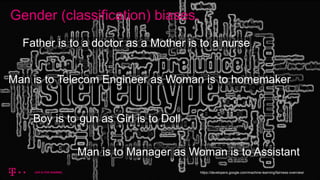

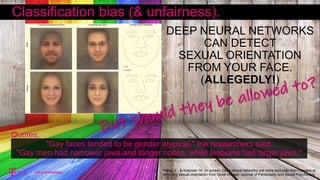

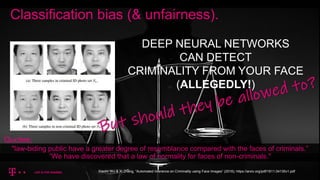

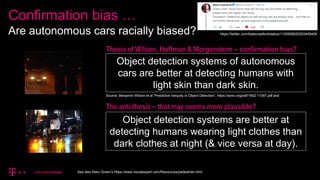

Dr. Kim Kyllesbech Larsen discusses how human bias affects artificial intelligence (AI), highlighting that AI systems reflect the societal biases present in their training data. The document gives examples of classification and allocation bias across sectors including policing, recruitment, and public service. It emphasizes the need for ethical measures to identify and mitigate bias in AI systems to ensure fairness.