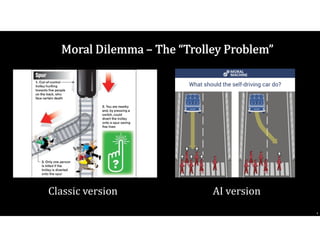

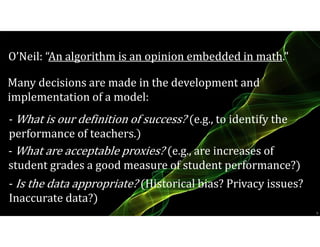

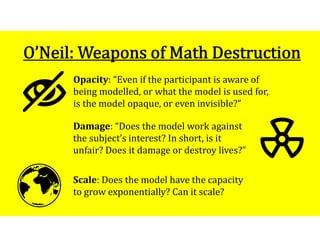

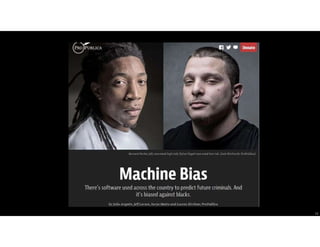

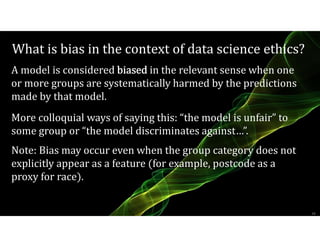

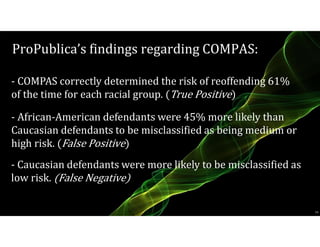

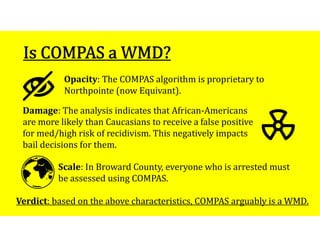

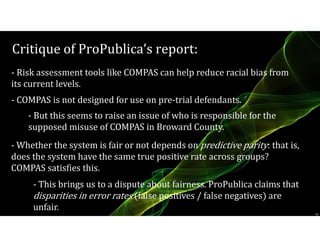

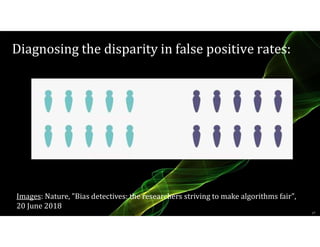

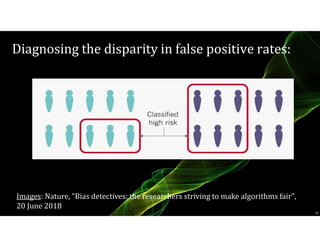

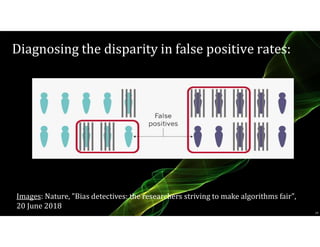

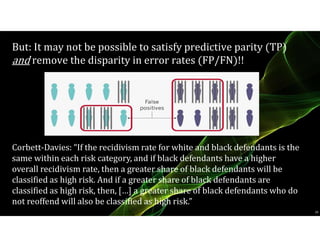

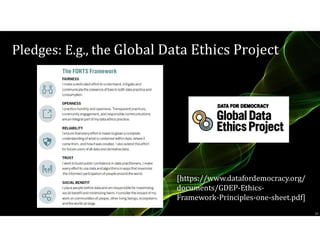

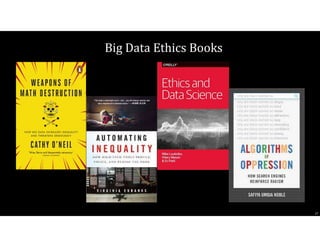

The document discusses big data ethics, data science ethics, and AI ethics, highlighting the importance of transparency, consent, and fairness in algorithms. It examines Cathy O'Neil's analysis of 'weapons of math destruction' and presents a case study of COMPAS, an algorithm used to predict recidivism that disproportionately affects African-American individuals. The ethical concerns it raises include data ownership, the scale and opacity of algorithms, and their potential to damage individuals' lives.