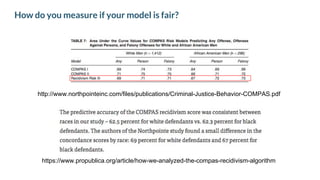

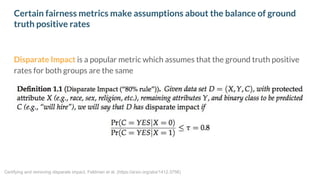

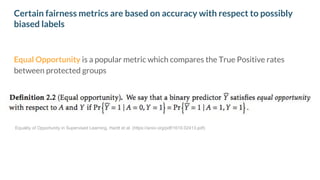

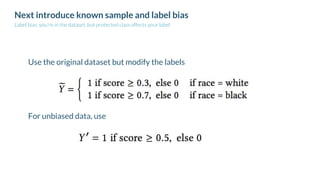

The document discusses the difficulty of measuring model fairness in machine learning, emphasizing that bias often originates in the training data rather than in the model itself. It distinguishes several types of bias. One is label bias, where the recorded labels themselves reflect biased human decisions. Another is sample bias, where the training data does not represent the population the model will be applied to. It also stresses that which fairness metric to use, and what that choice implies, depends on the context in which the model is deployed. Its overall message is that fair modeling requires human oversight and ethical judgment and cannot be achieved through mathematical solutions alone.
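To illustrate why the choice of fairness metric needs careful thought, here is a minimal sketch. It assumes binary labels, binary predictions, and exactly two groups. The function names and the toy data are hypothetical and do not come from the source. The sketch computes two common group-fairness metrics on the same predictions. Demographic parity difference is the gap in positive-prediction rates between groups. Equal opportunity difference is the gap in true positive rates.

```python
from typing import Dict, Hashable, List, Sequence


def selection_rate(y_pred: Sequence[int]) -> float:
    """Fraction of examples predicted positive (prediction == 1)."""
    return sum(y_pred) / len(y_pred)


def true_positive_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of actual positives (label == 1) that were predicted positive."""
    preds_on_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not preds_on_positives:
        raise ValueError("group has no positive labels; TPR is undefined")
    return sum(preds_on_positives) / len(preds_on_positives)


def subset(values: Sequence[int], groups: Sequence[Hashable], g: Hashable) -> List[int]:
    """Keep only the values whose group membership equals g."""
    return [v for v, grp in zip(values, groups) if grp == g]


def fairness_gaps(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    groups: Sequence[Hashable],
    a: Hashable,
    b: Hashable,
) -> Dict[str, float]:
    """Hypothetical helper: metric gaps between group a and group b (a minus b)."""
    yt_a, yp_a = subset(y_true, groups, a), subset(y_pred, groups, a)
    yt_b, yp_b = subset(y_true, groups, b), subset(y_pred, groups, b)
    return {
        # Demographic parity: do both groups receive positive predictions equally often?
        "demographic_parity_diff": selection_rate(yp_a) - selection_rate(yp_b),
        # Equal opportunity: are actual positives in both groups recognized equally often?
        "equal_opportunity_diff": true_positive_rate(yt_a, yp_a)
        - true_positive_rate(yt_b, yp_b),
    }


# Toy data (invented for illustration): four examples per group.
y_true = [1, 1, 0, 0, 1, 1, 1, 0]
y_pred = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["a"] * 4 + ["b"] * 4

gaps = fairness_gaps(y_true, y_pred, groups, "a", "b")
print(gaps)
```

On this toy data both groups are predicted positive at the same rate (0.5), so the demographic parity gap is zero. However, actual positives in group `b` are predicted positive only two times out of three, compared with every time in group `a`, which gives an equal opportunity gap of about 0.33. The same model is therefore "fair" by one metric and "unfair" by the other. Deciding which gap matters in a given setting is a contextual, human judgment.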