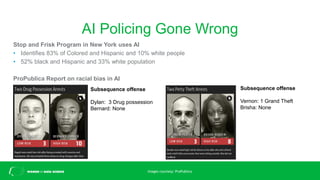

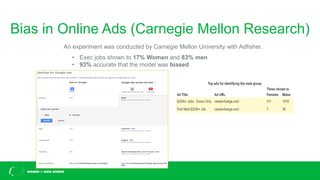

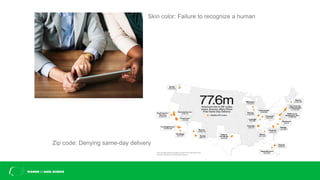

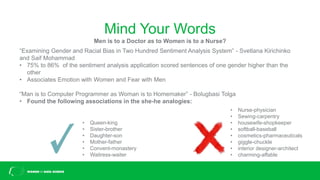

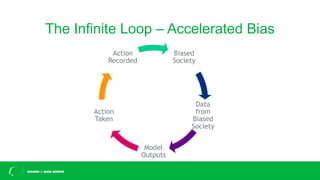

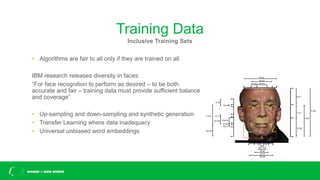

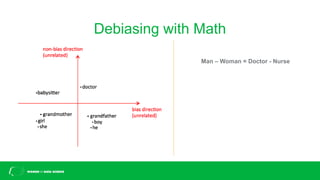

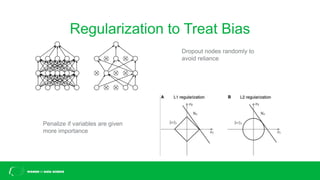

This document discusses the challenges of bias in artificial intelligence systems and potential solutions. It begins with examples of biased AI in recruitment, law enforcement, online advertising, and language. Common sources of bias include biased training data, a lack of diversity in data science teams, and over-reliance on proxy variables. Proposed solutions include algorithmic accountability, more diverse and representative training data, decoupled classifiers to achieve group fairness, mathematical debiasing of models, and introducing randomness into systems. The overall message is that eliminating bias is complex, but approaches like these can make progress.
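The summary mentions group fairness without defining it. As a rough illustration only, the sketch below measures one common notion, demographic parity, by comparing the rate of positive predictions across groups. The choice of metric, the function names `selection_rates` and `demographic_parity_gap`, and the toy data are all assumptions made for this example; they are not taken from the document.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group selection rates.

    A gap of 0 means every group receives positive predictions at the
    same rate; larger gaps suggest the model treats groups differently.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: binary predictions and a group label per person.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(selection_rates(preds, groups))
print(demographic_parity_gap(preds, groups))
```

A large gap does not prove a model is unfair on its own, and demographic parity is only one of several competing fairness definitions, but a check like this is a simple starting point for the kind of algorithmic accountability the document calls for.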