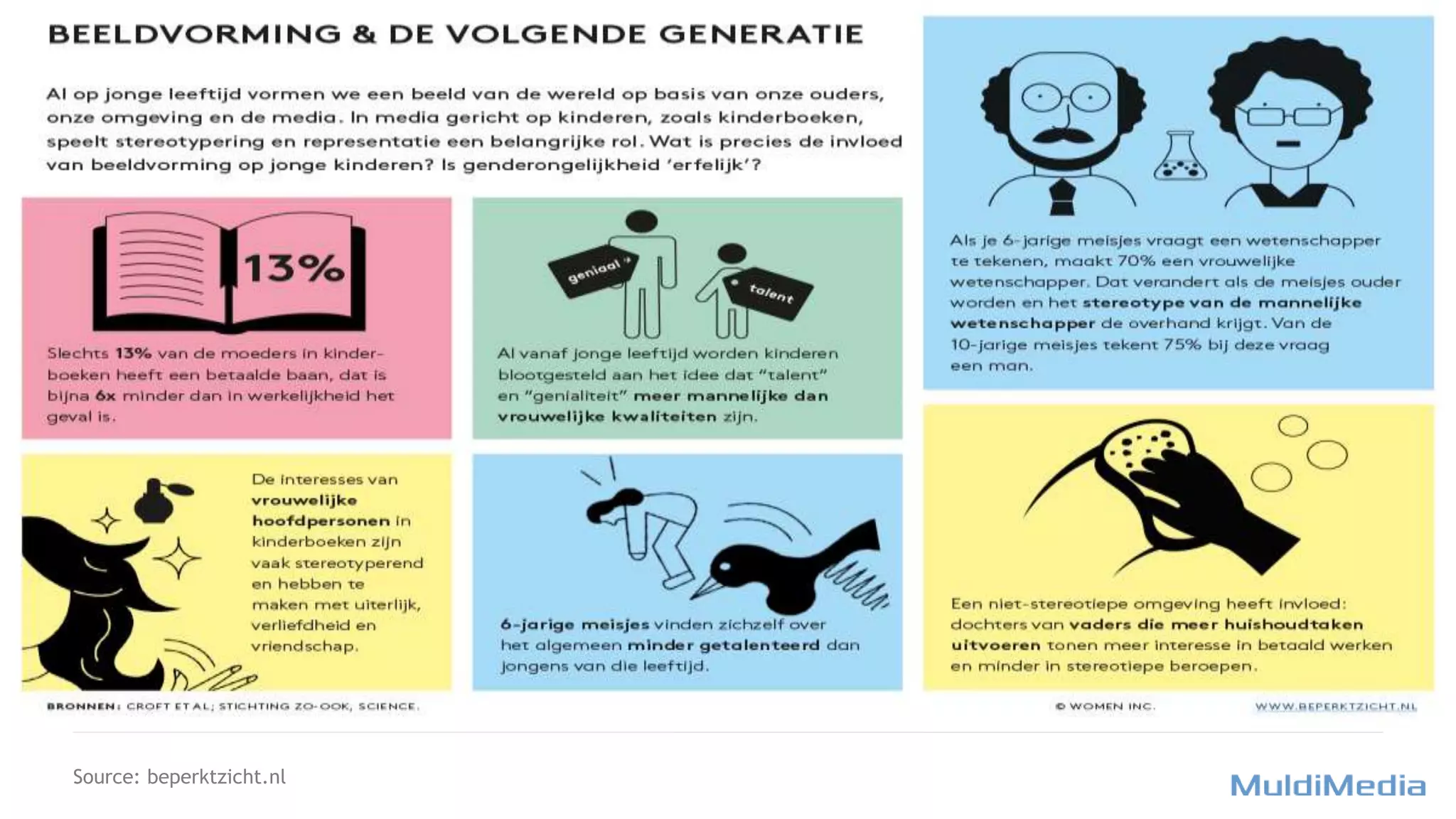

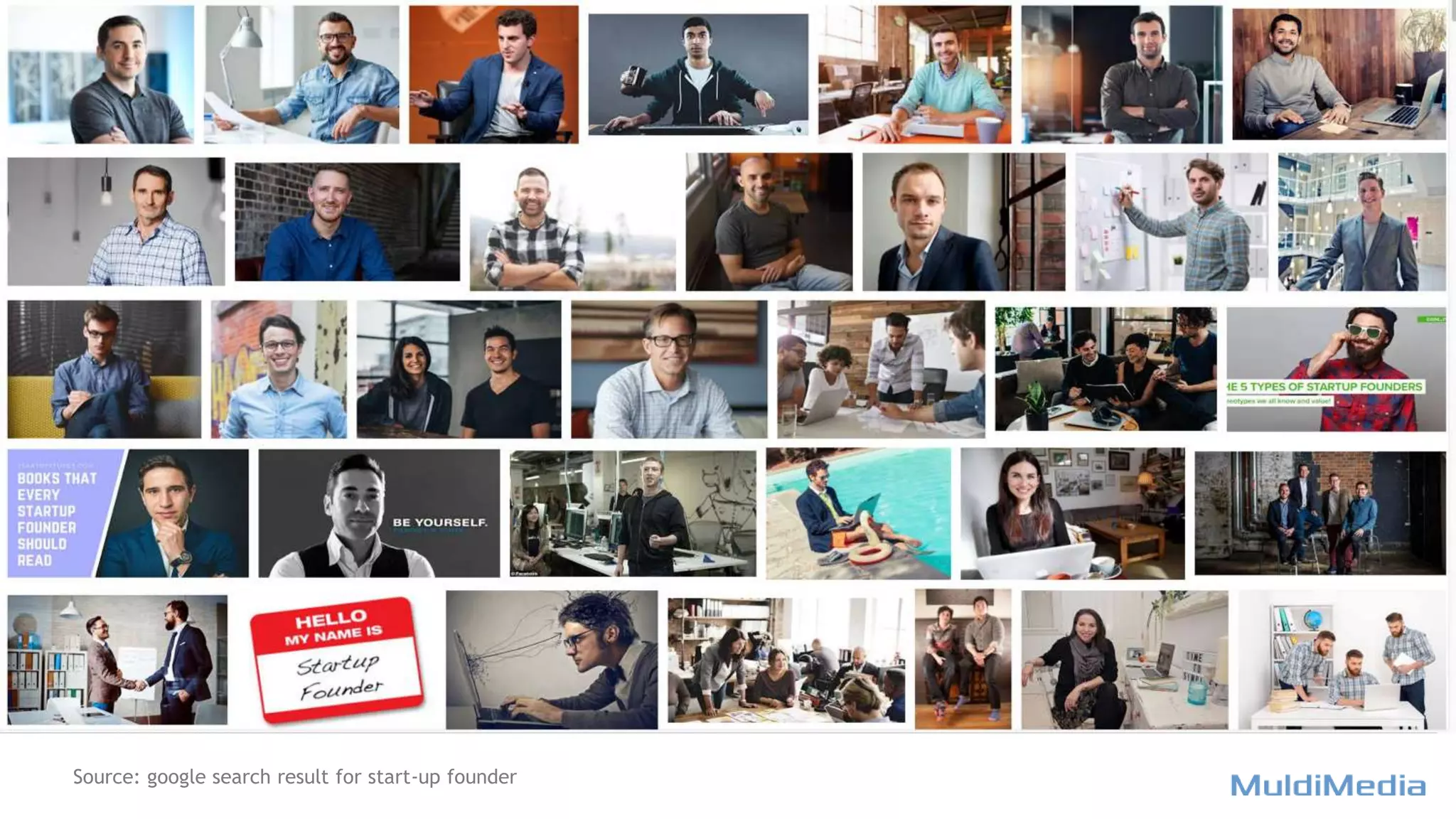

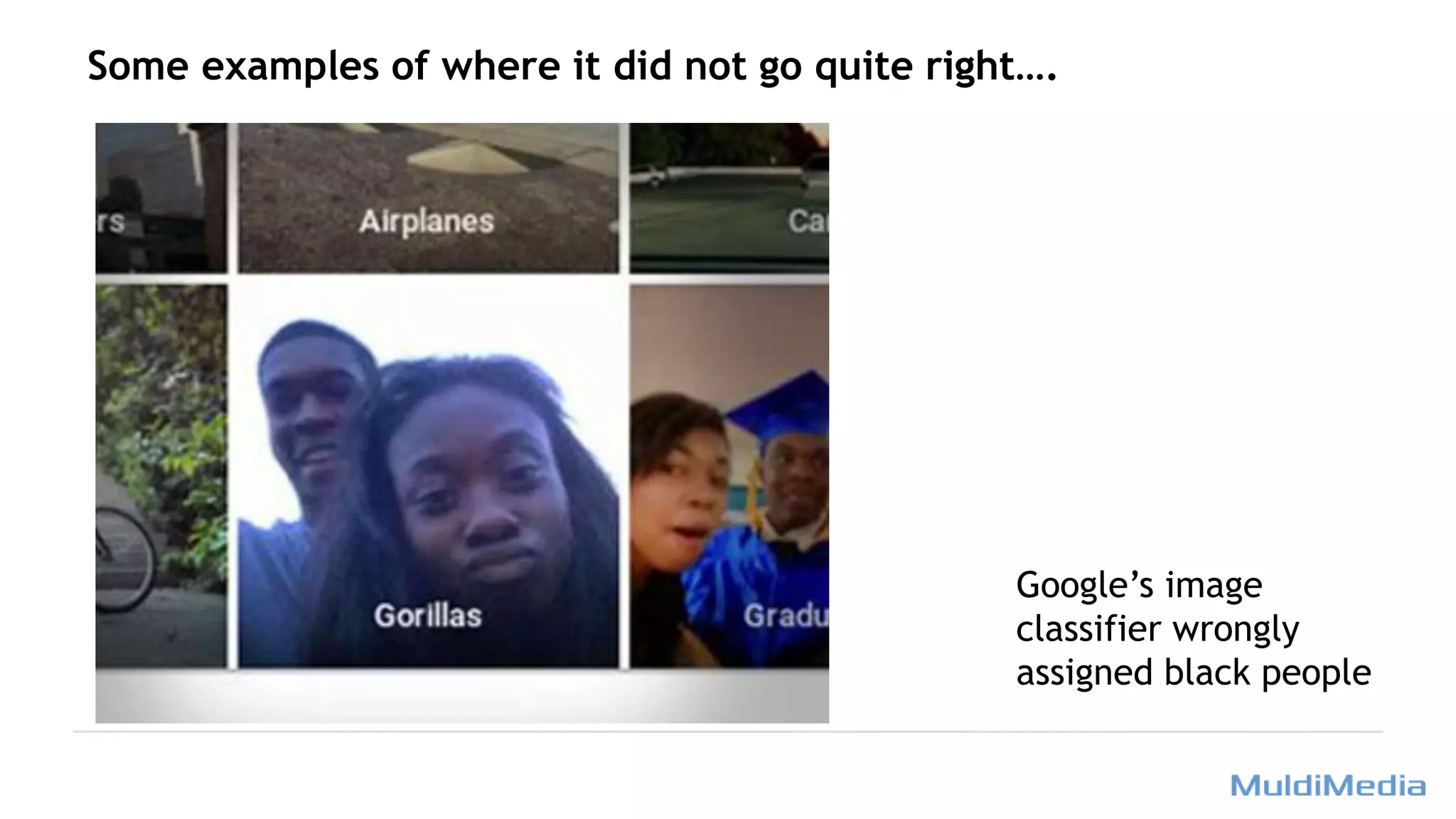

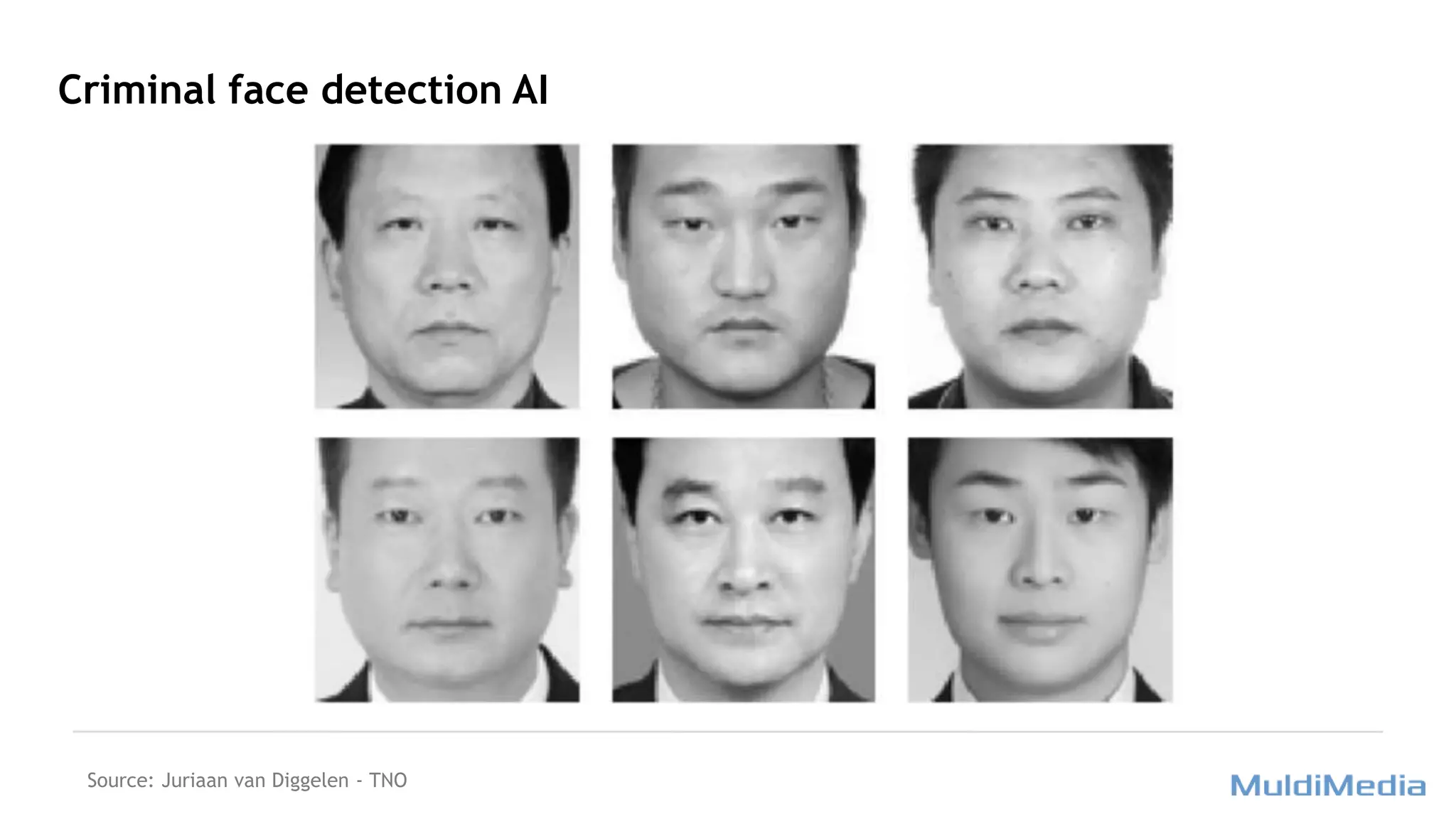

The document discusses the challenges and ethical considerations of AI bias, highlighting how human biases and insufficiently diverse datasets can lead to flawed outcomes in AI applications such as product design and automated systems. It emphasizes the need for ethical guidelines, human involvement, and transparency in developing AI technologies, and it considers various regulatory frameworks. It cites key initiatives from organizations such as IEEE as guidance on responsible AI design and implementation.

![People blindly trust a robot to lead them out of a building in case of a fire emergency.](https://image.slidesharecdn.com/aiethicsmmtoontechtalks27092018-180928095705/75/Technology-for-everyone-AI-ethics-and-Bias-20-2048.jpg)

[Overtrust of robots in emergency evacuation scenarios, P Robinette, W Li, R Allen, AM Howard, AR Wagner - Human-Robot Interaction, 2016]

Source: Juriaan van Diggelen - TNO