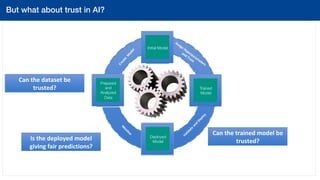

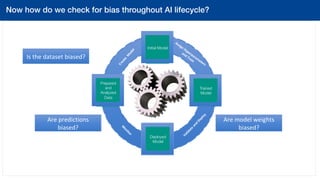

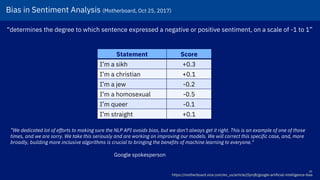

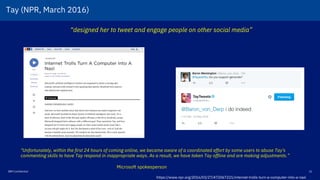

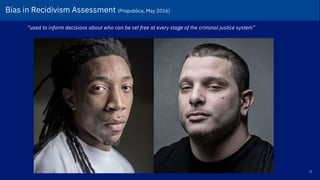

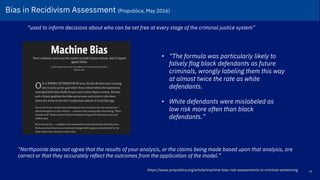

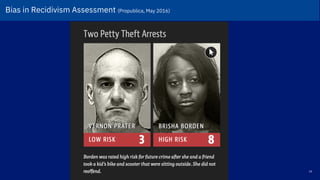

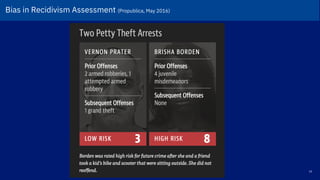

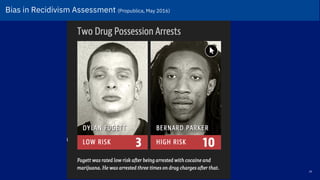

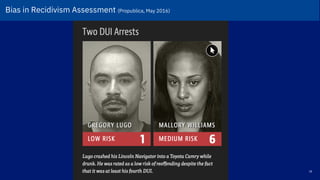

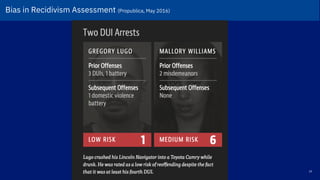

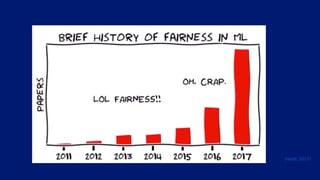

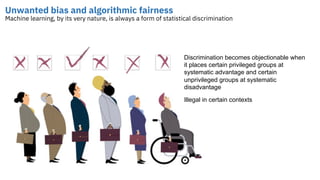

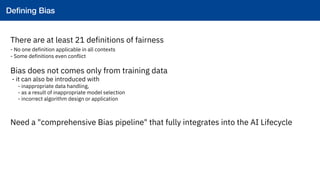

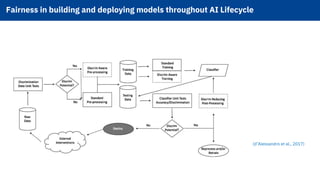

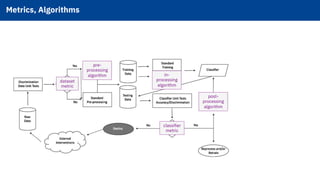

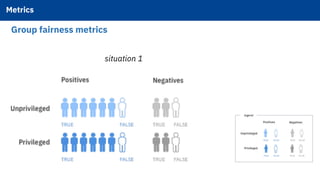

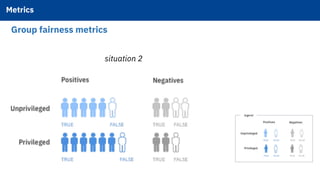

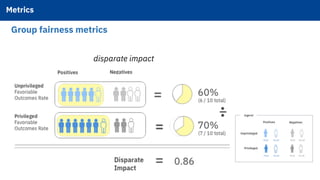

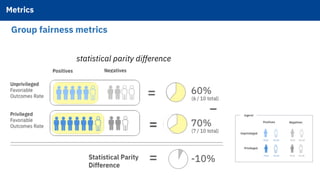

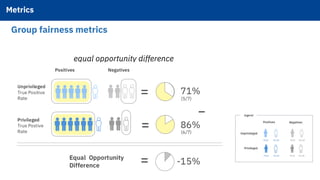

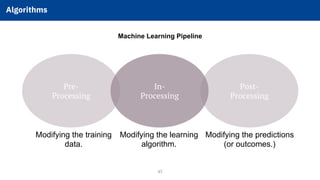

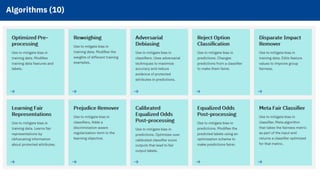

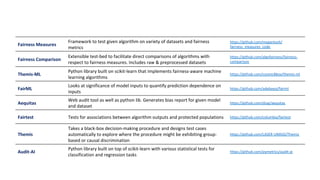

The document discusses various ways that bias can arise in artificial intelligence systems and machine learning models. It gives three examples: facial recognition systems biased against dark-skinned women, sentiment analysis that favors some religions over others, and risk assessment algorithms used in criminal justice that show racial disparities. It also discusses definitions of fairness and bias in machine learning. It notes that there are at least 21 definitions of fairness. It also notes that bias can enter during data handling and model selection, as well as through the training data.
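Several of the fairness definitions mentioned above can be written as simple group statistics over a protected attribute. The sketch below is a from-scratch illustration and does not use the AIF360 API, although AIF360 reports metrics under similar names. It assumes binary labels and predictions (1 = favorable outcome) and a single protected attribute where one value marks the privileged group. The function names, the `privileged_value` parameter, and the toy data are hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Sequence


def _rate(values: List[int]) -> float:
    """Fraction of entries equal to 1 (0.0 for an empty list)."""
    return sum(values) / len(values) if values else 0.0


def group_metrics(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    protected: Sequence[int],
    privileged_value: int = 1,  # hypothetical convention: this value marks the privileged group
) -> Dict[str, float]:
    """Compare unprivileged vs. privileged groups under several fairness definitions."""
    priv = [i for i, a in enumerate(protected) if a == privileged_value]
    unpriv = [i for i, a in enumerate(protected) if a != privileged_value]

    def selection_rate(idx: List[int]) -> float:
        # P(prediction = 1 | group)
        return _rate([y_pred[i] for i in idx])

    def true_positive_rate(idx: List[int]) -> float:
        # P(prediction = 1 | label = 1, group)
        return _rate([y_pred[i] for i in idx if y_true[i] == 1])

    def false_positive_rate(idx: List[int]) -> float:
        # P(prediction = 1 | label = 0, group)
        return _rate([y_pred[i] for i in idx if y_true[i] == 0])

    sr_priv, sr_unpriv = selection_rate(priv), selection_rate(unpriv)
    return {
        # 0 means both groups receive the favorable outcome at the same rate
        "statistical_parity_difference": sr_unpriv - sr_priv,
        # 1 means parity; values well below 1 disadvantage the unprivileged group
        "disparate_impact": sr_unpriv / sr_priv if sr_priv > 0 else float("inf"),
        # 0 means qualified members of both groups are selected equally often
        "equal_opportunity_difference": true_positive_rate(unpriv) - true_positive_rate(priv),
        # 0 means both groups see the same rate of false positives
        "false_positive_rate_difference": false_positive_rate(unpriv) - false_positive_rate(priv),
    }


# Toy, made-up data: the first four rows are the privileged group.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
protected = [1, 1, 1, 1, 0, 0, 0, 0]
metrics = group_metrics(y_true, y_pred, protected)
```

On this toy data the unprivileged group is selected at a rate of 0.25 versus 0.75, so the statistical parity difference is -0.5 and the disparate impact is 1/3. Because each metric encodes a different notion of fairness, a model can satisfy one and violate another. This is one reason the number of competing definitions matters in practice.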

![Open Source AIOps Platform!

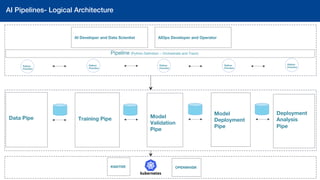

AISphere Pipeline: Continued

# Include simple pipeline into a complicated pipeline

overallPipe = Pipe('OverallPipeline')

overallPipe.add_jobs([

Job(check_data_fairness),

training_pipe,

model_validation_pipe,

Job(s2i),

model_deployment_pipe,

Job(explain_model_predictions)

])

overallPipe.run()](https://image.slidesharecdn.com/aif360-190520172937/85/AIF360-Trusted-and-Fair-AI-53-320.jpg)
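The slide does not show how `Pipe` and `Job` are implemented. The sketch below illustrates the idea of nesting a pipeline as one step of a larger pipeline. It assumes that every job is a callable that takes and returns a shared context dict, and that a pipe runs its members in order. The `Job` and `Pipe` classes, the `run(context)` signature, and the step functions are all hypothetical. They are not the AISphere API.

```python
from typing import Callable, List, Optional, Union


class Job:
    """Hypothetical wrapper around one step: a callable taking and returning a context dict."""

    def __init__(self, func: Callable[[dict], dict]):
        self.func = func

    def run(self, context: dict) -> dict:
        return self.func(context)


class Pipe:
    """Hypothetical pipeline that runs its members in order; members may be Jobs or Pipes."""

    def __init__(self, name: str):
        self.name = name
        self.jobs: List[Union[Job, "Pipe"]] = []

    def add_jobs(self, jobs: List[Union[Job, "Pipe"]]) -> None:
        self.jobs.extend(jobs)

    def run(self, context: Optional[dict] = None) -> dict:
        context = {} if context is None else context
        for job in self.jobs:
            # A nested Pipe exposes the same run() method, so it executes recursively.
            context = job.run(context)
        return context


# Hypothetical step functions that only record that they ran.
def _log(ctx: dict, name: str) -> dict:
    ctx.setdefault("log", []).append(name)
    return ctx


def check_data_fairness(ctx: dict) -> dict:
    return _log(ctx, "check_data_fairness")


def train_model(ctx: dict) -> dict:
    return _log(ctx, "train_model")


def validate_model(ctx: dict) -> dict:
    return _log(ctx, "validate_model")


training_pipe = Pipe('TrainingPipeline')
training_pipe.add_jobs([Job(train_model)])

model_validation_pipe = Pipe('ValidationPipeline')
model_validation_pipe.add_jobs([Job(validate_model)])

overallPipe = Pipe('OverallPipeline')
overallPipe.add_jobs([Job(check_data_fairness), training_pipe, model_validation_pipe])
result = overallPipe.run()
```

Because `Job` and `Pipe` share the same `run` method, the outer pipeline does not need to know whether a member is a single step or a whole sub-pipeline. This is the composite pattern. It lets a fairness check run as the first step, ahead of training and validation.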