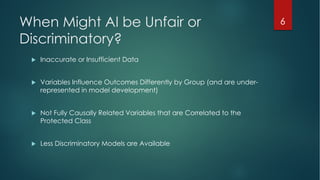

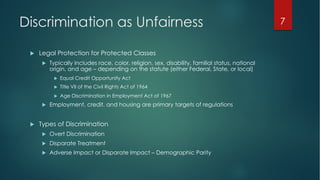

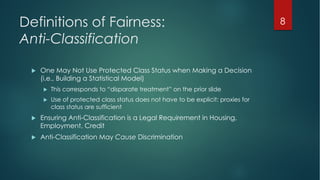

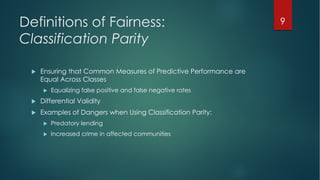

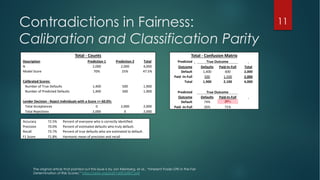

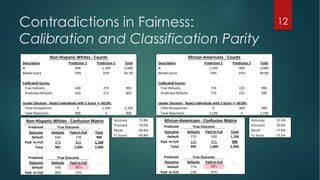

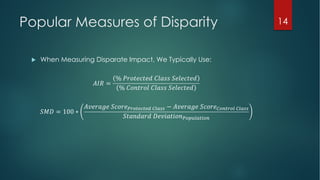

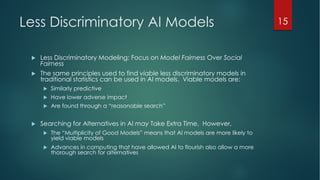

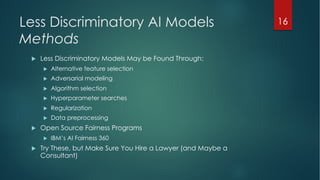

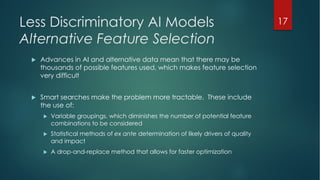

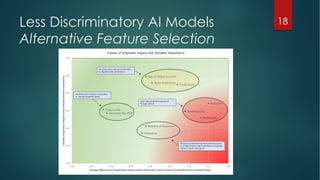

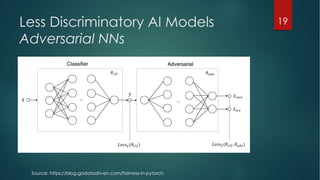

The document discusses the pressing issues of fairness, accountability, and transparency in AI. It argues that addressing them both prevents harmful outcomes and improves model performance. It outlines several definitions of fairness and their mathematical formulations, gives examples of bias in AI systems, and suggests practical methods for building less discriminatory models. The speaker also stresses the moral imperative of fairness, discusses how regulation may affect AI development, and points to resources for further learning.