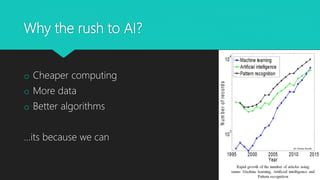

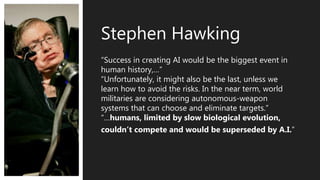

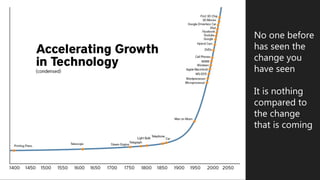

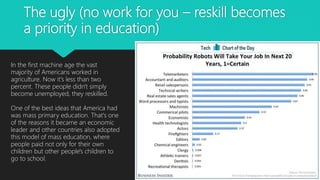

The document discusses the ethics of artificial intelligence and outlines both its benefits and its risks. It begins by introducing the speakers on the topic and defining artificial intelligence, then notes that AI is already widely used to make decisions that affect people's lives. It discusses benefits such as increased precision alongside risks such as job loss, which would require workers to retrain. Bill Gates, Elon Musk, and Stephen Hawking are cited as having raised concerns about potential existential threats from advanced AI. The document calls for AI that is safe and robust, with exploration and oversight used to avoid negative outcomes. It concludes that forward-thinking people are working to ensure AI is developed and applied responsibly.