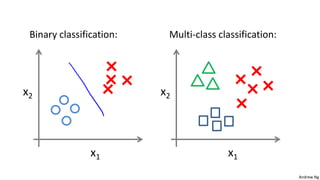

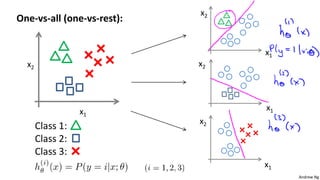

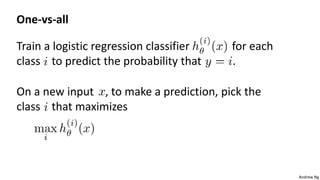

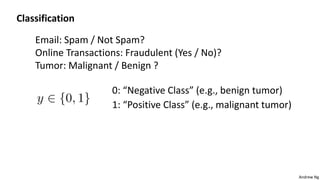

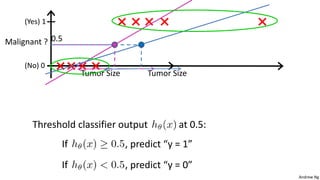

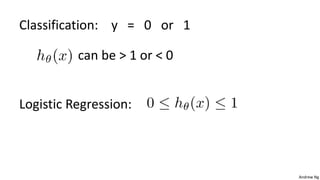

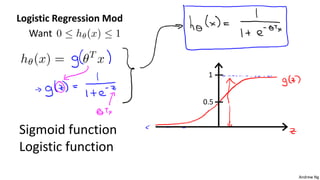

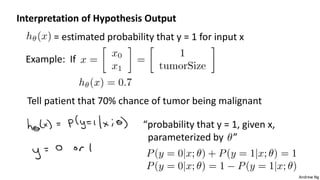

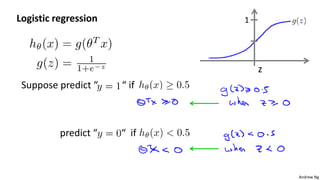

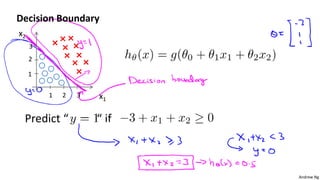

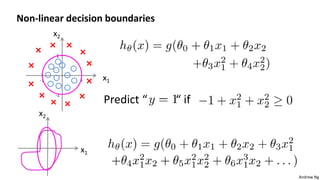

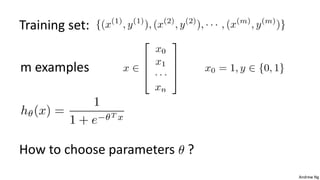

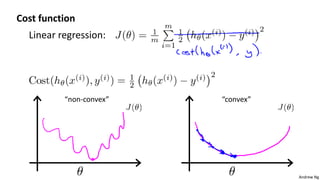

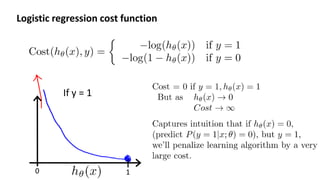

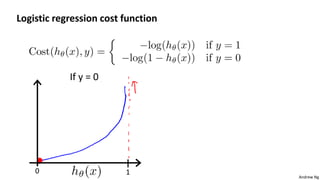

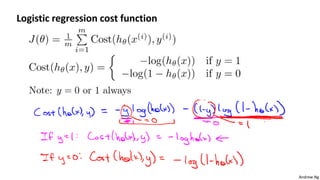

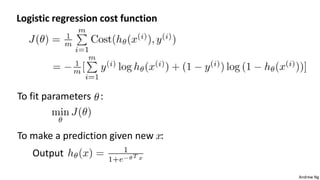

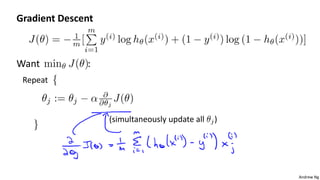

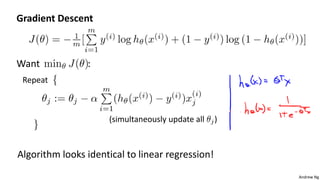

This document discusses logistic regression for classification problems. Logistic regression models the probability of an output belonging to a particular class using a logistic function. The model parameters are estimated by minimizing a cost function using gradient descent or other advanced optimization algorithms. Logistic regression can be extended to multi-class classification problems using a one-vs-all approach that trains a separate binary classifier for each class.
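As a rough illustration of the summary above, the following Octave sketch shows how a logistic function turns a linear score into a probability, and how one-vs-all picks a class. The parameter matrix `allTheta`, the example `x`, and all numeric values are made up for illustration; they are not taken from the slides.

```octave
% Logistic (sigmoid) function: maps any real number into the interval (0, 1).
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Hypothetical parameters for K = 3 one-vs-all classifiers, one row per class.
% Each row is [theta_0, theta_1, theta_2]; the values are invented.
allTheta = [ 1.0, -2.0,  0.5;
            -0.5,  1.5, -1.0;
             0.0,  0.5,  2.0];

% One example with the intercept term x_0 = 1 prepended.
x = [1; 0.2; 1.5];

% Each binary classifier estimates the probability that x belongs to its class.
probs = sigmoid(allTheta * x);

% One-vs-all prediction: choose the class whose classifier is most confident.
[~, predictedClass] = max(probs);
disp(predictedClass);
```

Each row of `allTheta` would normally come from training one binary classifier against "all other classes"; here the rows are fixed by hand only to show the prediction step.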

![Andrew Ng

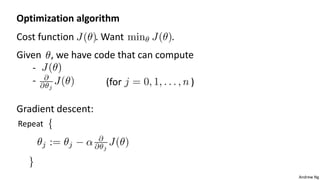

Example:

function [jVal, gradient]

= costFunction(theta)

jVal = (theta(1)-5)^2 + ...

(theta(2)-5)^2;

gradient = zeros(2,1);

gradient(1) = 2*(theta(1)-5);

gradient(2) = 2*(theta(2)-5);

options = optimset(‘GradObj’, ‘on’, ‘MaxIter’, ‘100’);

initialTheta = zeros(2,1);

[optTheta, functionVal, exitFlag] ...

= fminunc(@costFunction, initialTheta, options);](https://image.slidesharecdn.com/copyoflecture4logisticregressio-200812164711/85/Machine-Learning-lecture4-logistic-regression-25-320.jpg)
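For comparison with the `fminunc` call, here is a minimal sketch of plain gradient descent on the same example cost, (theta(1)-5)^2 + (theta(2)-5)^2. The learning rate `alpha` and the iteration count are illustrative choices, not values from the slides.

```octave
% Gradient of J(theta) = (theta(1)-5)^2 + (theta(2)-5)^2, as a column vector.
gradJ = @(theta) 2 * (theta - 5);

alpha = 0.1;            % learning rate (assumed value for illustration)
theta = zeros(2, 1);    % same starting point as initialTheta in the example

for iter = 1:100
  % Simultaneous update of both parameters.
  theta = theta - alpha * gradJ(theta);
end

disp(theta);            % approaches the minimizer [5; 5]
```

Unlike `fminunc`, this loop needs a hand-picked learning rate; a value that is too large would make the iterates diverge.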

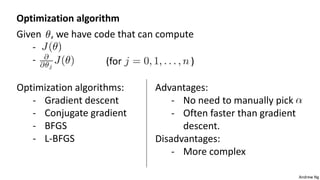

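![Andrew Ng: template for a costFunction that returns the cost and its gradient](https://image.slidesharecdn.com/copyoflecture4logisticregressio-200812164711/85/Machine-Learning-lecture4-logistic-regression-26-320.jpg)

theta = the (n+1)-dimensional parameter vector, stored in Octave as theta(1), theta(2), ..., theta(n+1)

    function [jVal, gradient] = costFunction(theta)
      jVal = [code to compute the cost J(theta)];
      gradient(1) = [code to compute the partial derivative of J with respect to theta(1)];
      gradient(2) = [code to compute the partial derivative of J with respect to theta(2)];
      ...
      gradient(n+1) = [code to compute the partial derivative of J with respect to theta(n+1)];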
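One way the template above could be filled in for logistic regression is sketched below. It assumes the standard cross-entropy cost for logistic regression; the function name `logisticCost`, the extra arguments `X` and `y`, and the tiny data set are hypothetical and chosen only for illustration.

```octave
1;  % Octave idiom: marks this file as a script so a function can be defined inline

function [jVal, gradient] = logisticCost(theta, X, y)
  % X: m-by-(n+1) design matrix with a leading column of ones.
  % y: m-by-1 vector of labels in {0, 1}.
  m = length(y);
  h = 1 ./ (1 + exp(-X * theta));                             % sigmoid hypothesis
  jVal = -(1/m) * sum(y .* log(h) + (1 - y) .* log(1 - h));   % cost J(theta)
  gradient = (1/m) * (X' * (h - y));                          % all n+1 partial derivatives
end

% Made-up, non-separable data: one feature plus the intercept column.
X = [ones(7, 1), [0.5; 1.0; 1.5; 2.0; 2.5; 3.0; 3.5]];
y = [0; 0; 1; 0; 1; 1; 1];

options = optimset('GradObj', 'on', 'MaxIter', 100);
initialTheta = zeros(2, 1);

% The anonymous function fixes X and y so that fminunc sees a function of theta only.
[optTheta, functionVal, exitFlag] = ...
    fminunc(@(t) logisticCost(t, X, y), initialTheta, options);
```

Computing the gradient as a single matrix product fills `gradient(1)` through `gradient(n+1)` in one step, which matches the template without writing each entry separately.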