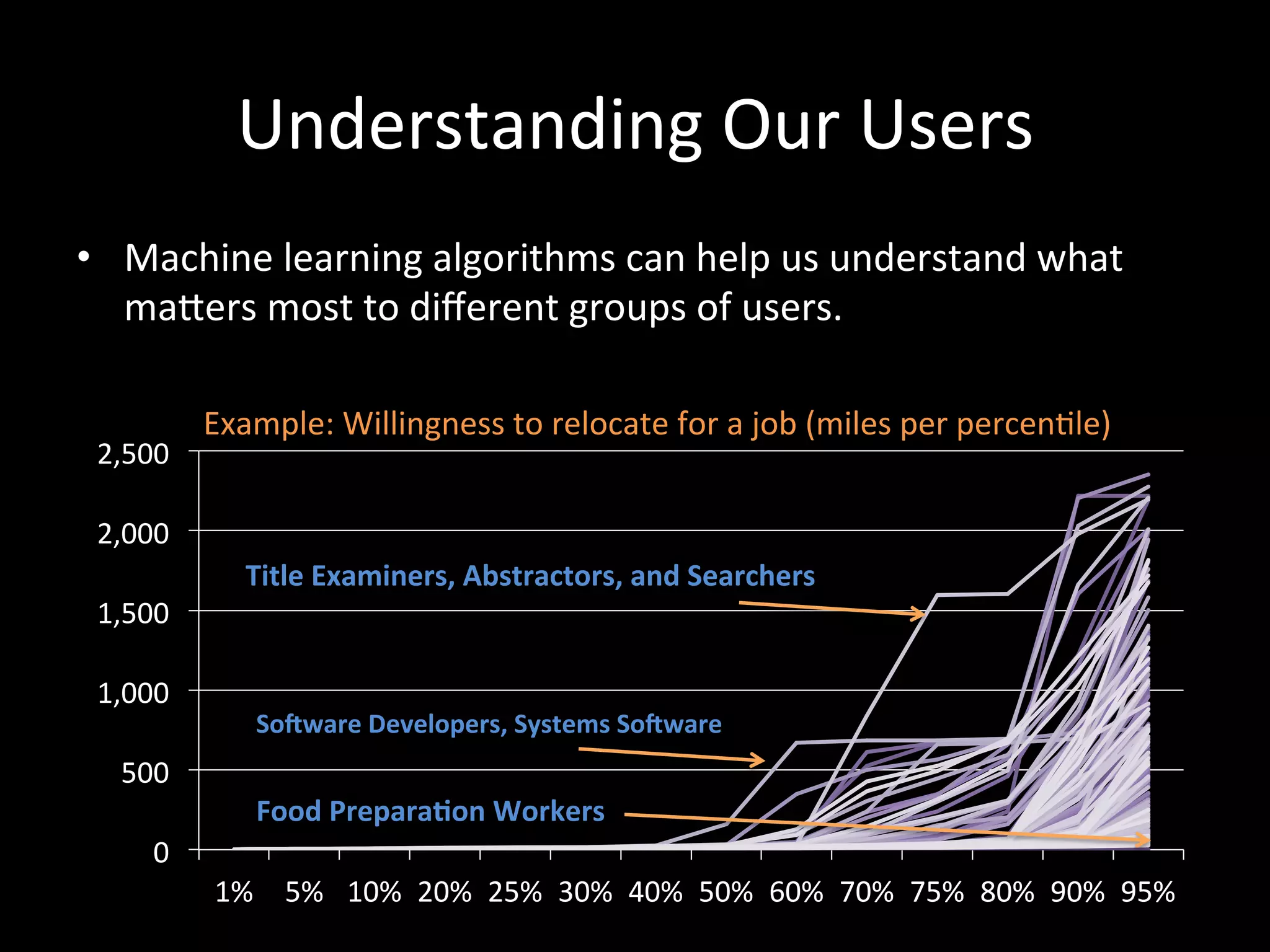

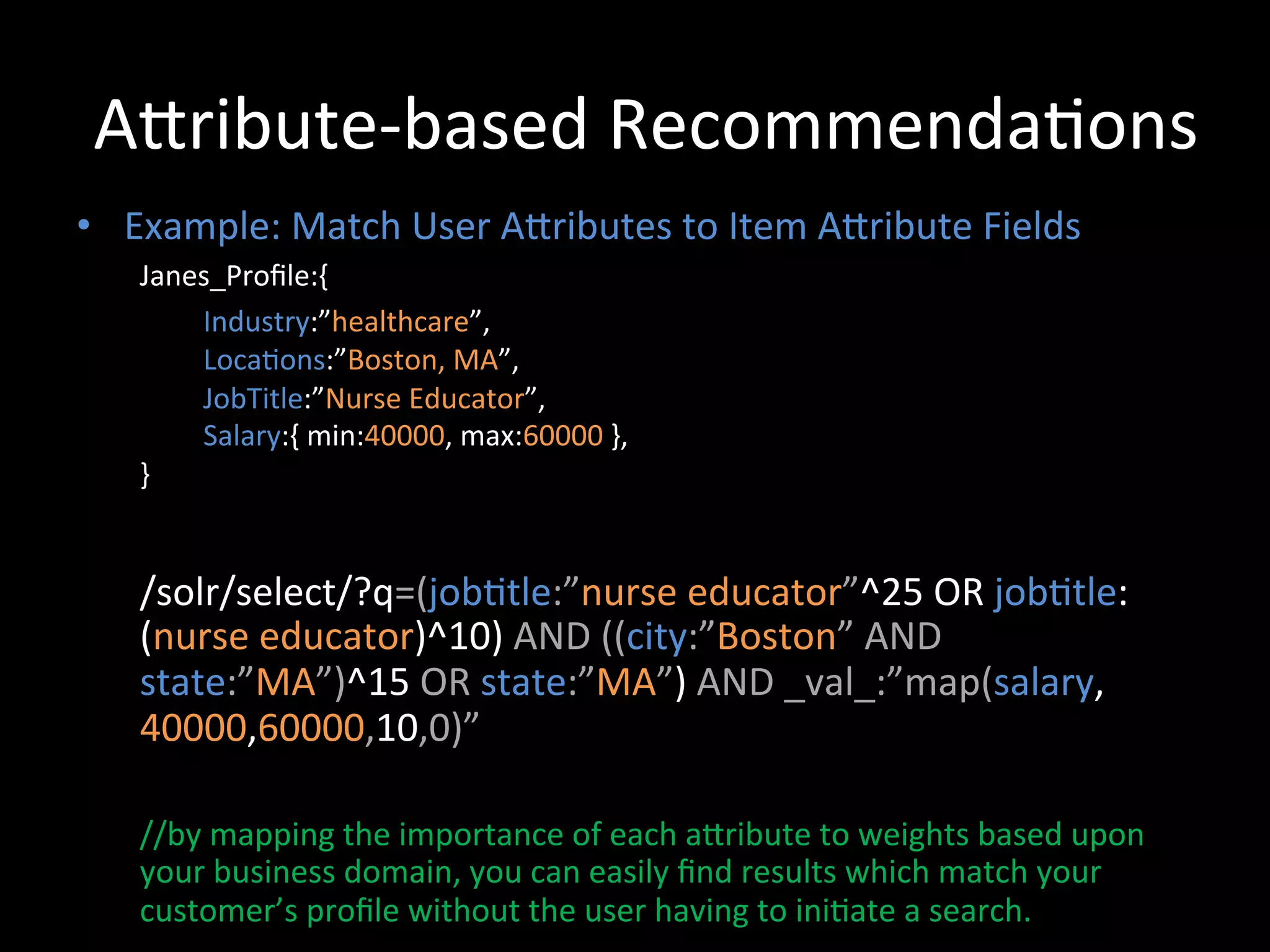

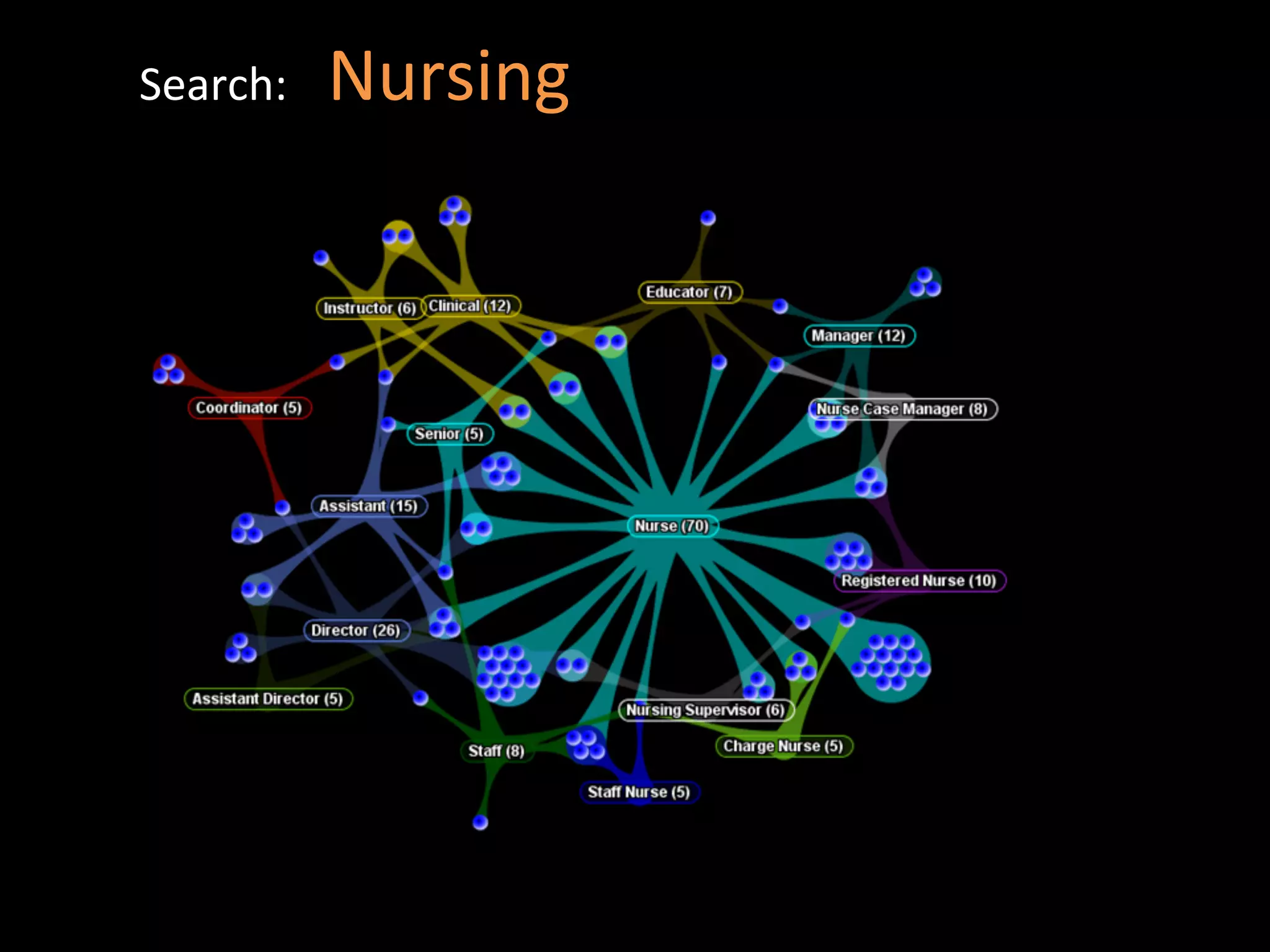

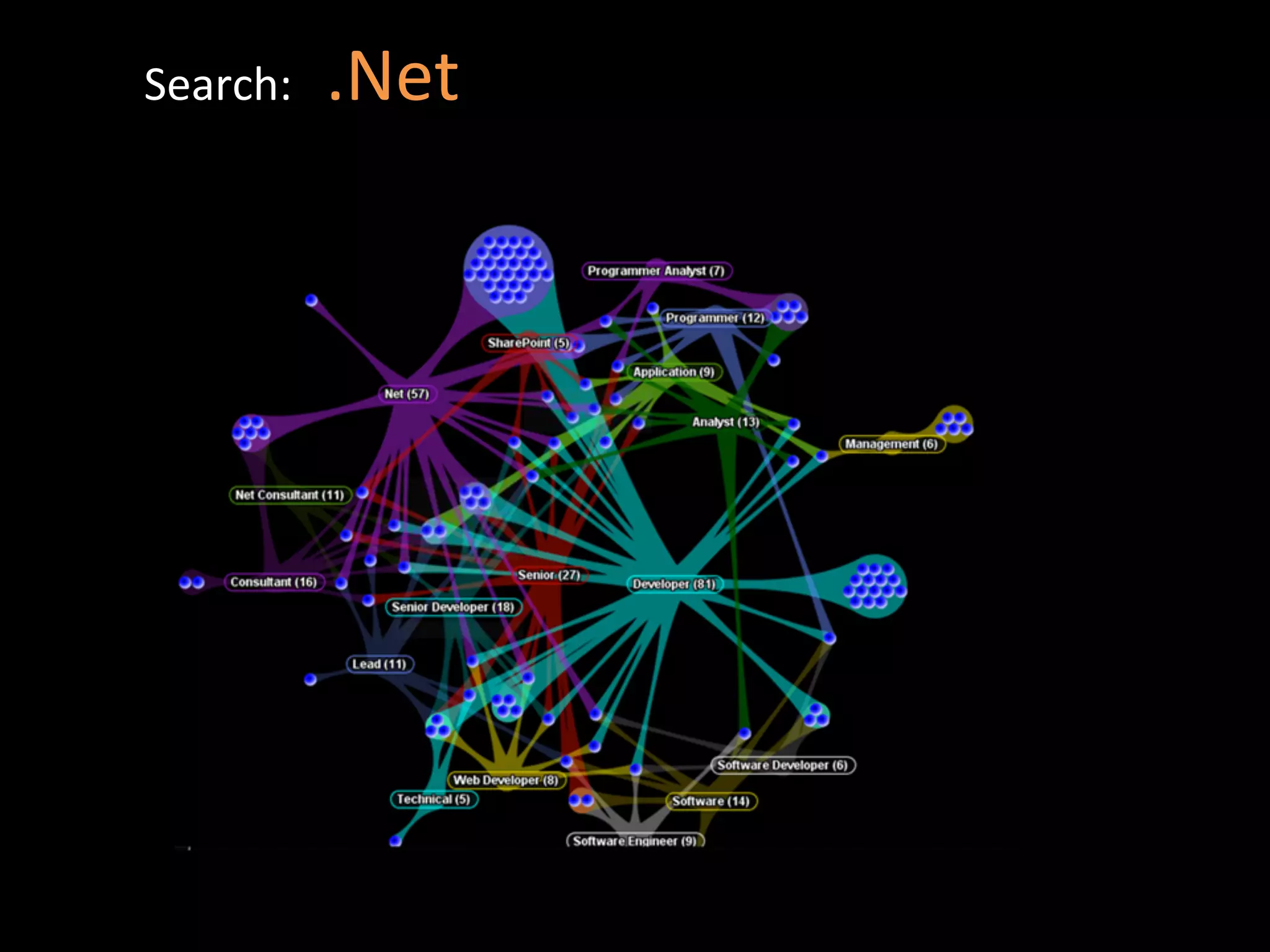

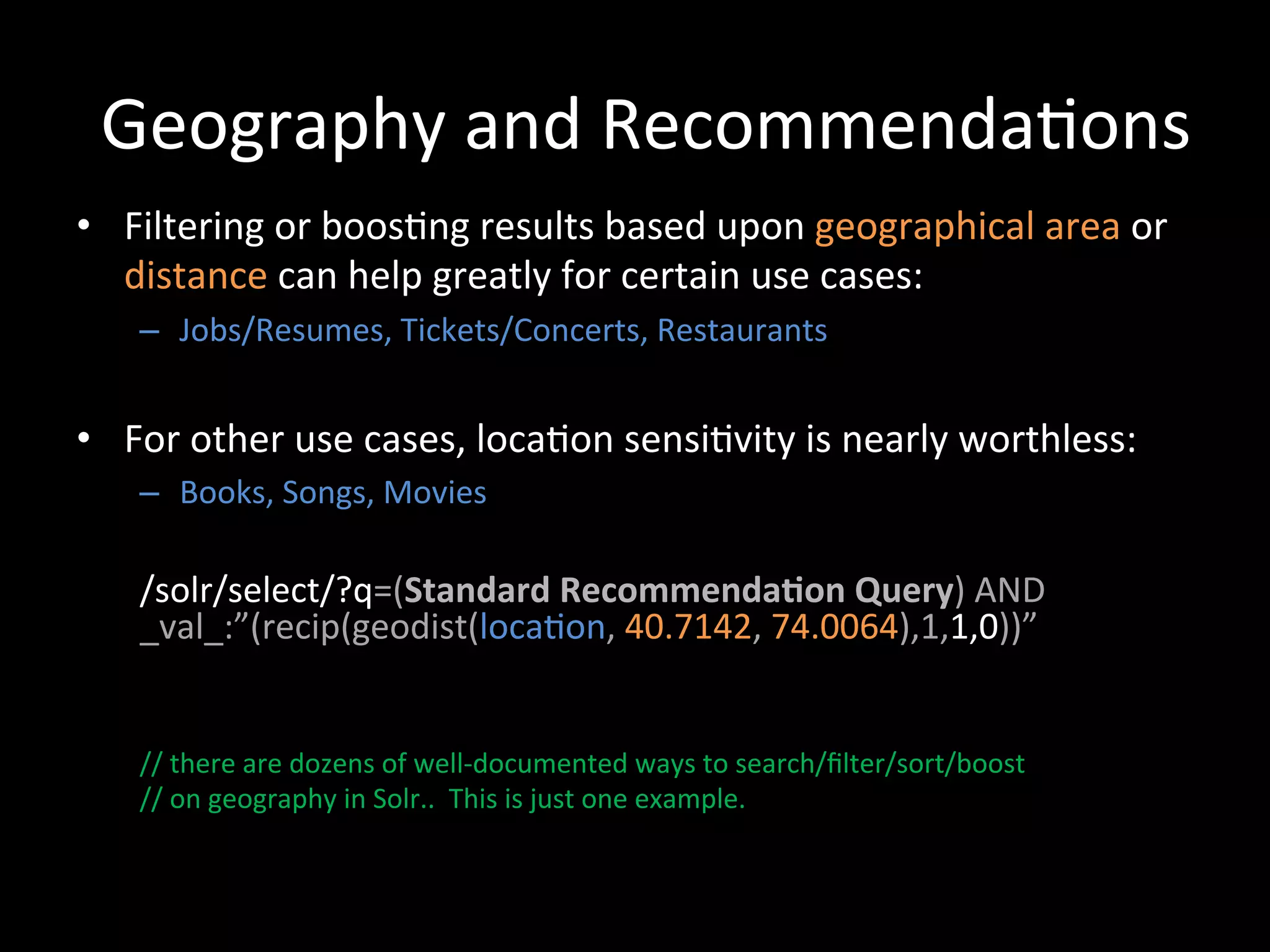

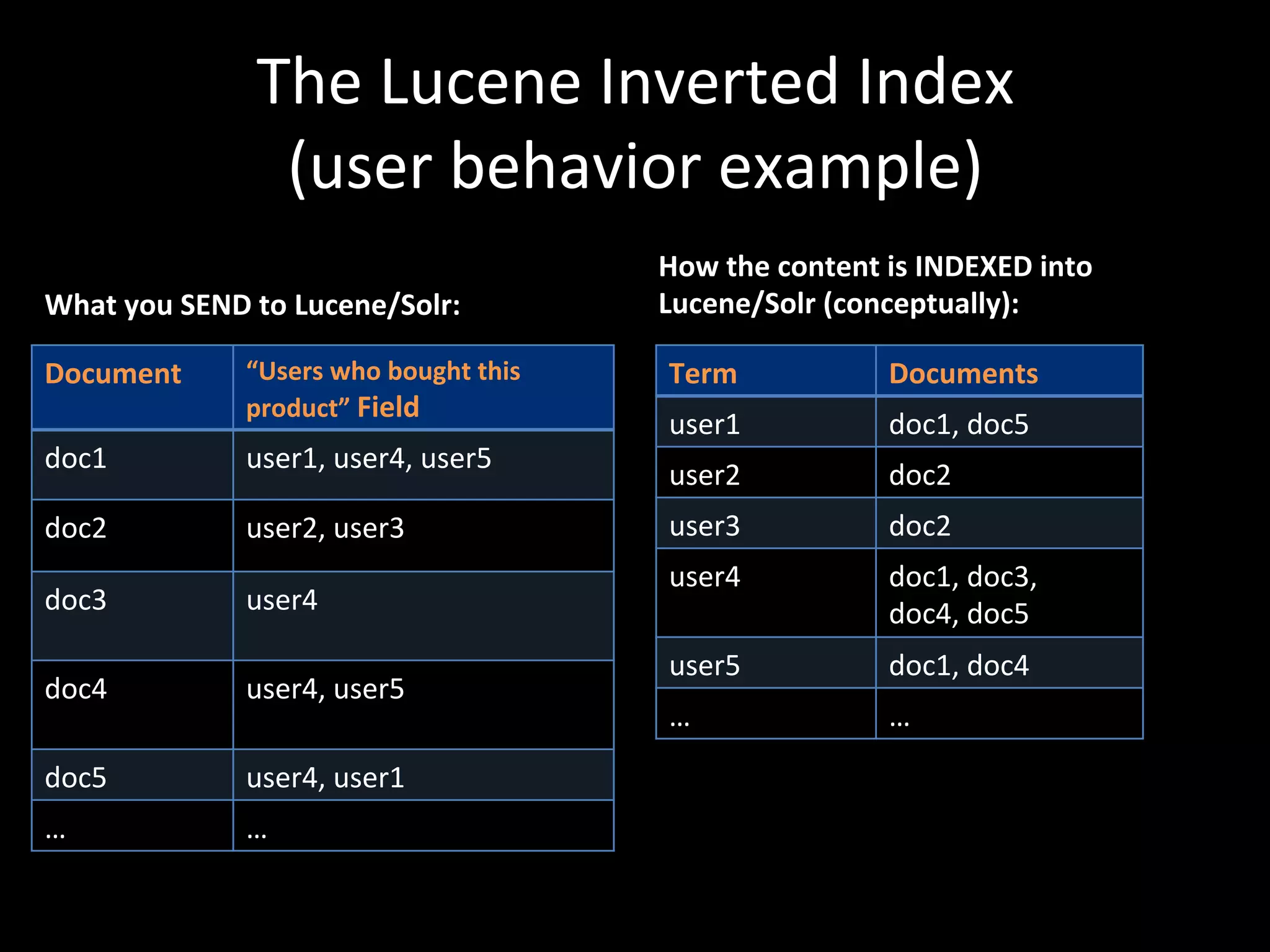

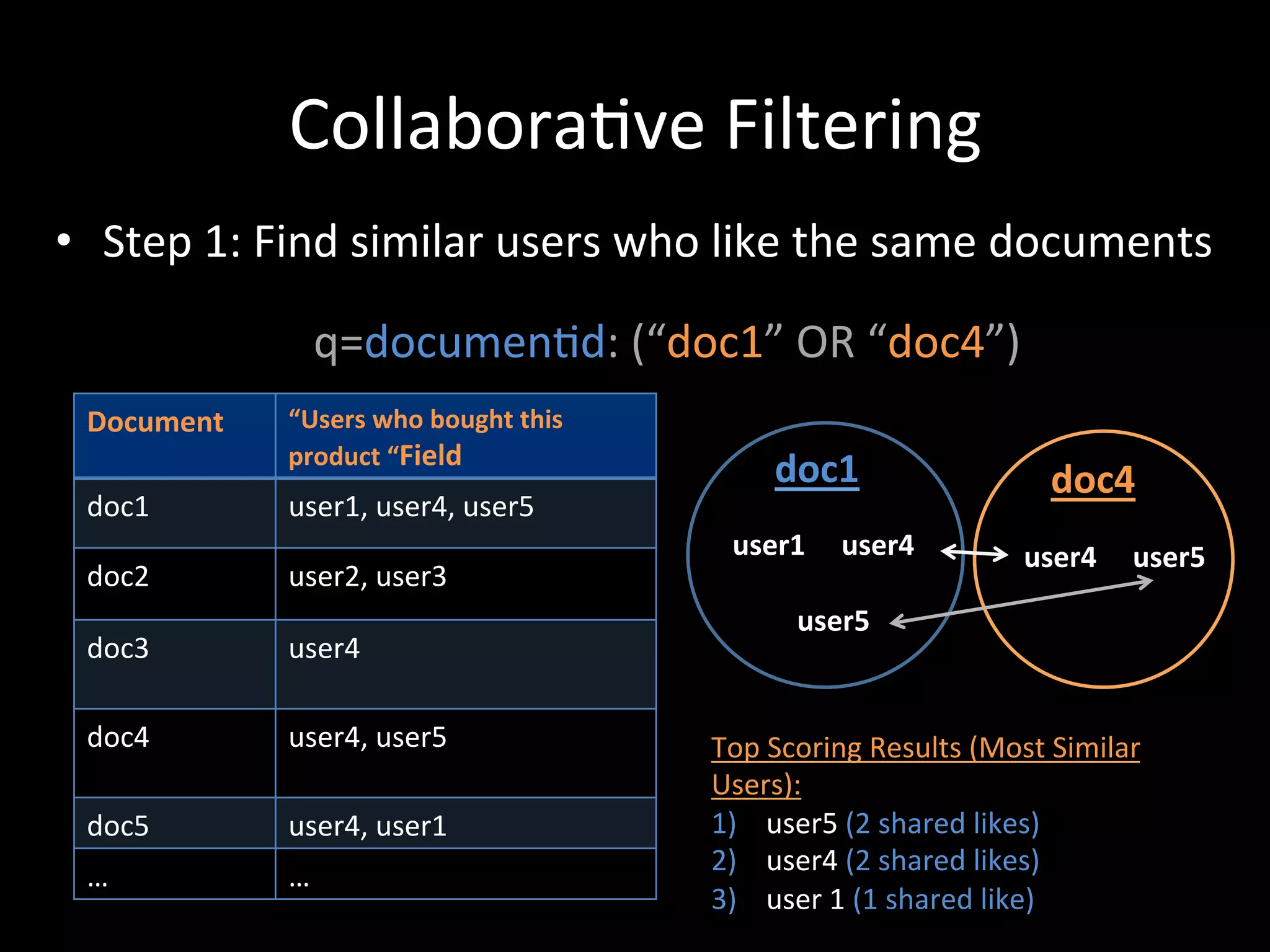

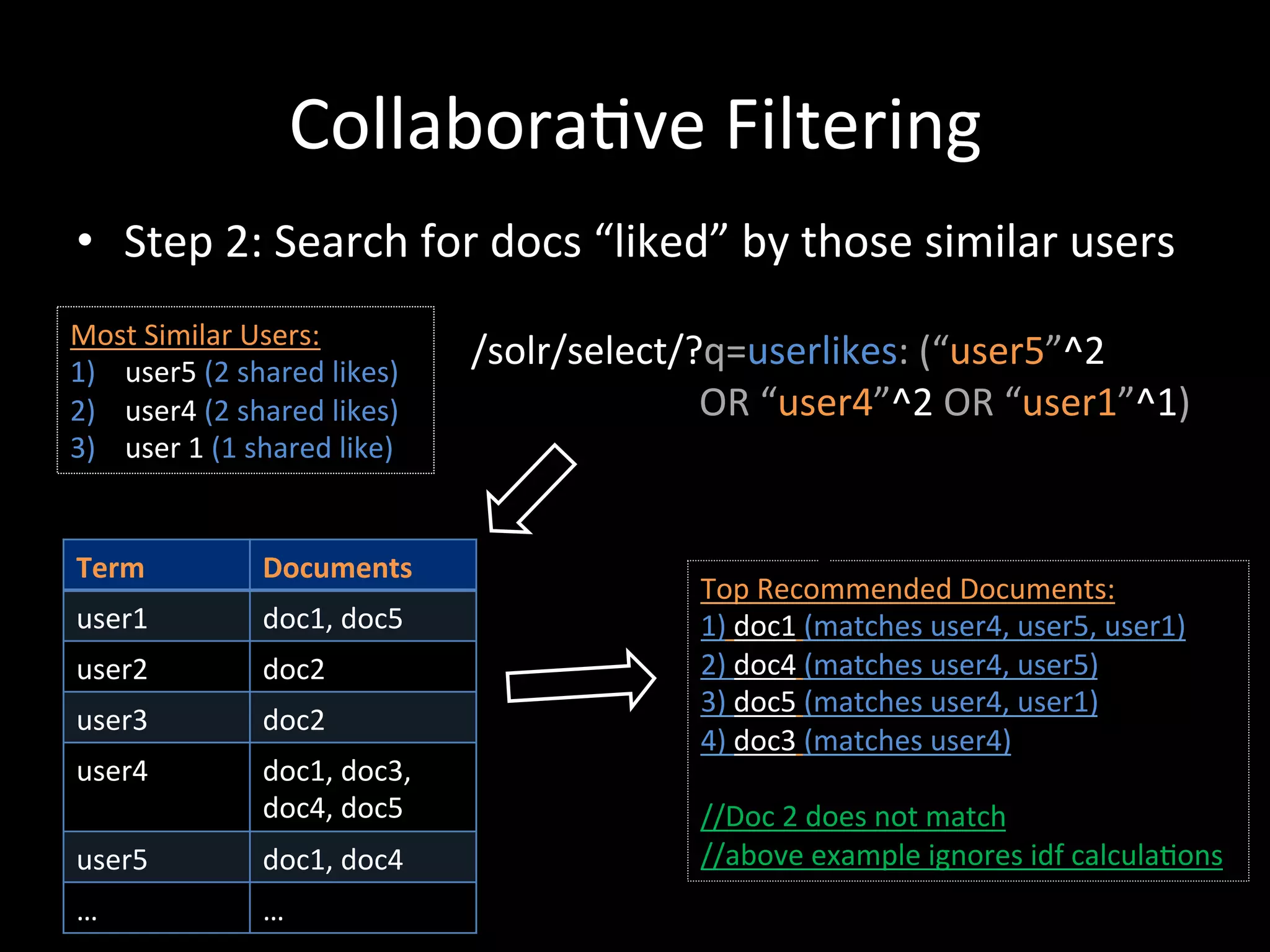

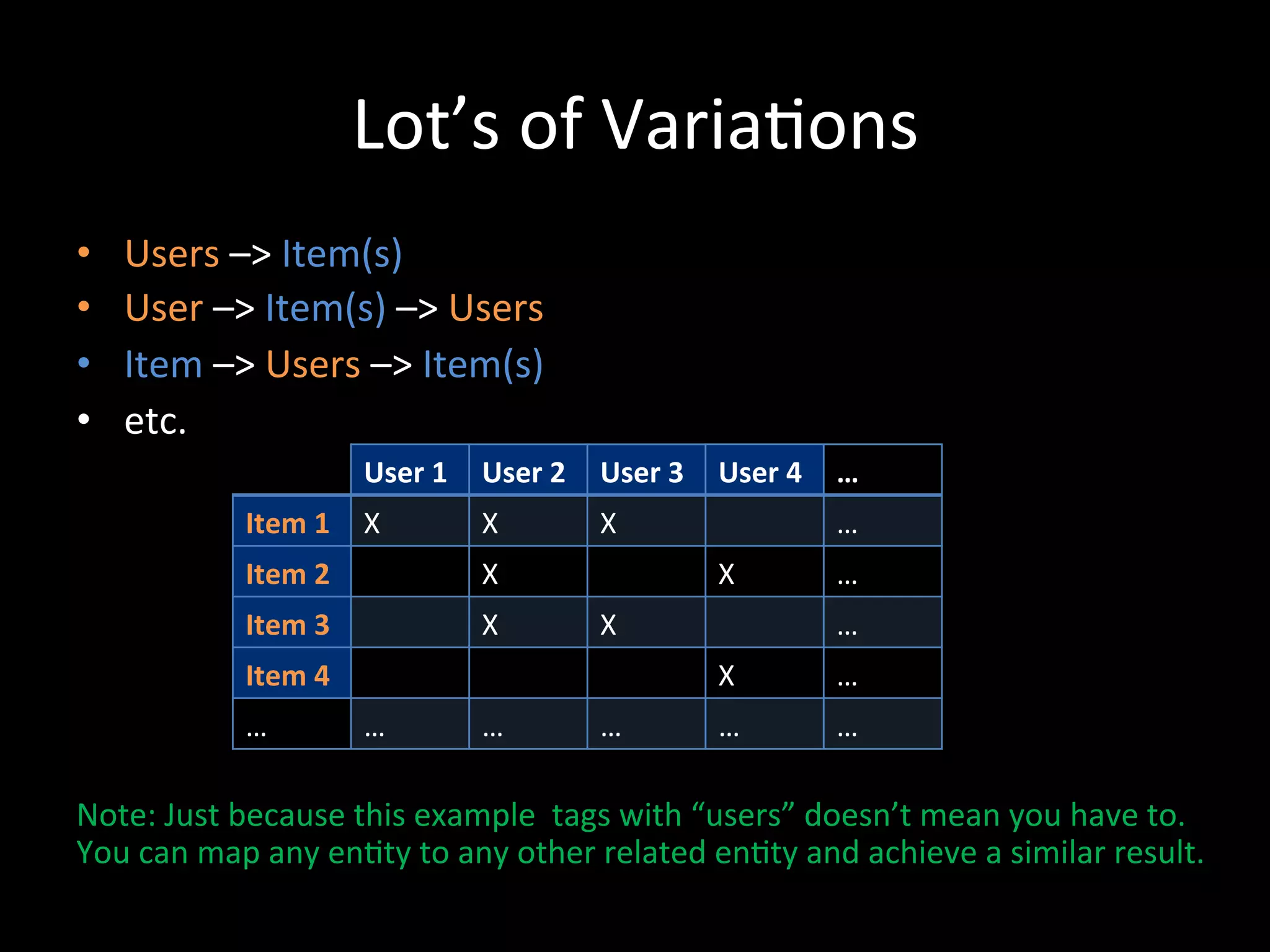

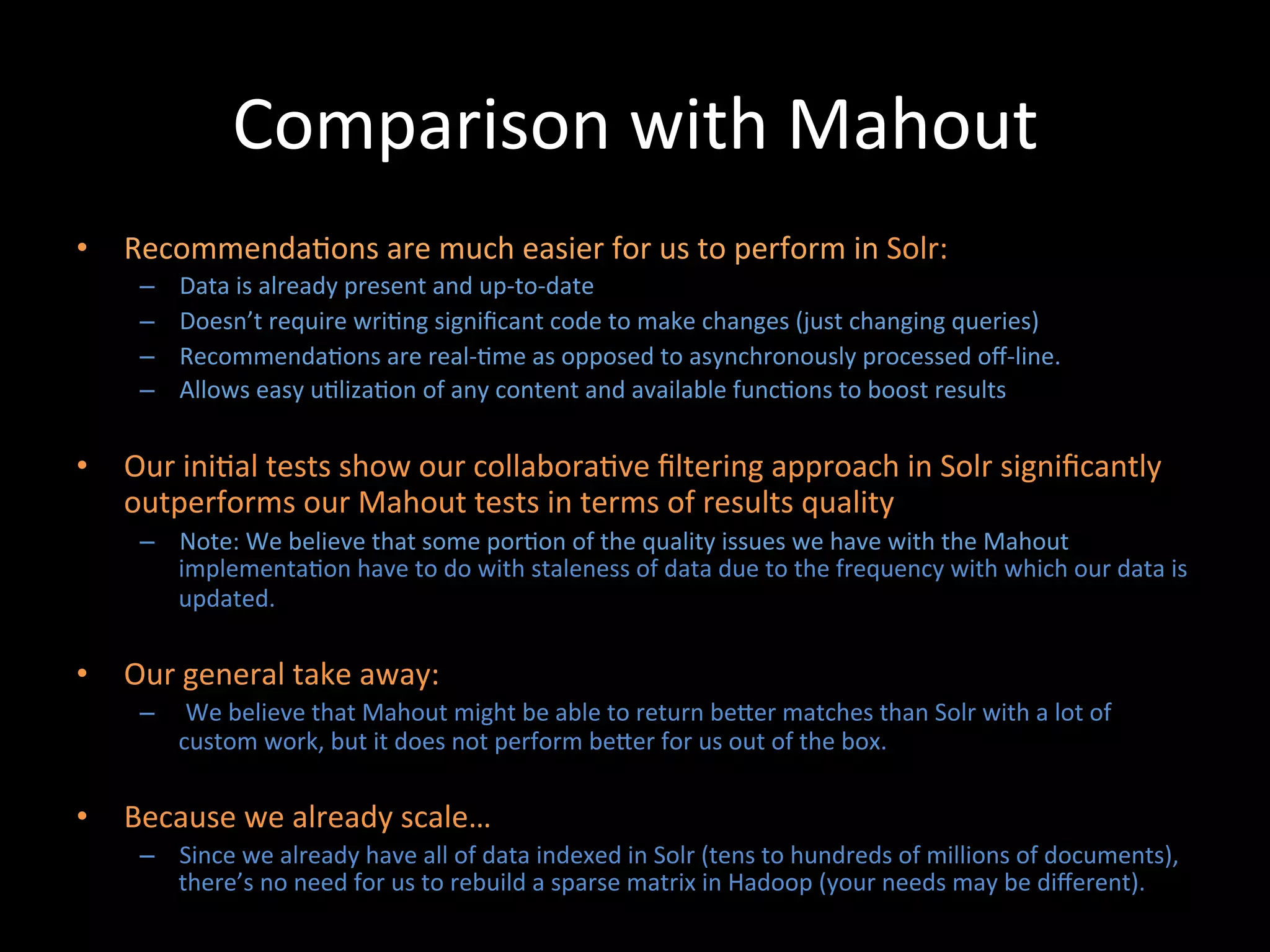

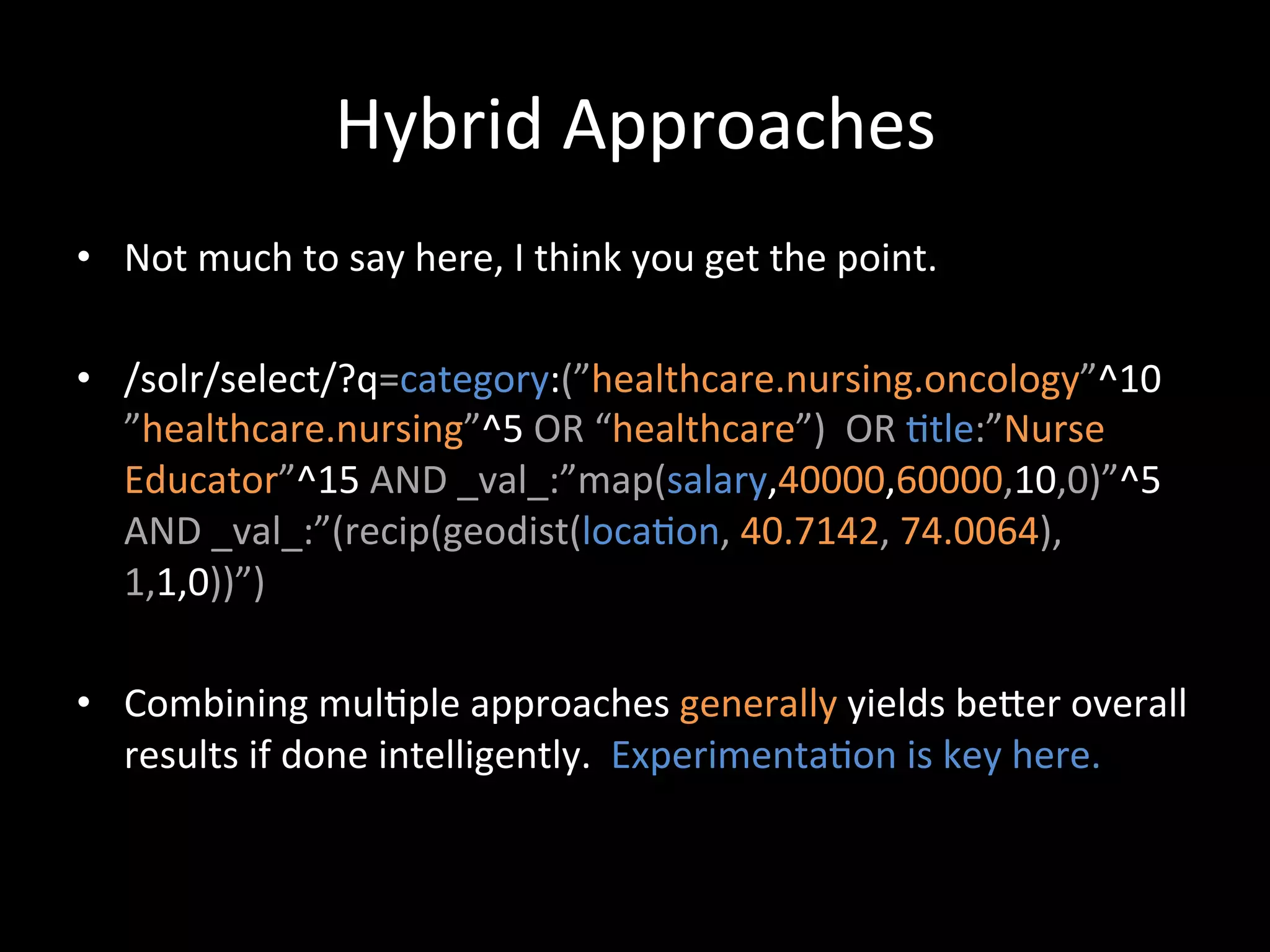

The document presents an overview of building a real-time, Solr-powered recommendation engine, detailing various recommendation approaches such as attribute-based, hierarchical classification, and collaborative filtering. It discusses the importance of recommendations for companies like CareerBuilder in driving user engagement and the integration of machine learning techniques to enhance recommendation systems. Finally, it highlights the differences between Solr and Mahout in terms of real-time processing and the effectiveness of collaborative filtering methods.

![The

Lucene

Inverted

Index

(tradi@onal

text

example)

How

the

content

is

INDEXED

into

What

you

SEND

to

Lucene/Solr:

Lucene/Solr

(conceptually):

Document

Content

Field

Term

Documents

doc1

once

upon

a

@me,

in

a

land

a

doc1

[2x]

far,

far

away

brown

doc3

[1x]

,

doc5

[1x]

doc2

the

cow

jumped

over

the

cat

doc4

[1x]

moon.

cow

doc2

[1x]

,

doc5

[1x]

doc3

the

quick

brown

fox

jumped

over

the

lazy

dog.

…

...

doc4

the

cat

in

the

hat

once

doc1

[1x],

doc5

[1x]

doc5

The

brown

cow

said

“moo”

over

doc2

[1x],

doc3

[1x]

once.

the

doc2

[2x],

doc3

[2x],

doc4[2x],

doc5

[1x]

…

…

…

…](https://image.slidesharecdn.com/buildingareal-timesolr-poweredrecommendationengine-120518121336-phpapp02/75/Building-a-Real-time-Solr-powered-Recommendation-Engine-8-2048.jpg)

![Custom

Scoring

with

Payloads

• In

addi@on

to

boos@ng

search

terms

and

fields,

content

within

the

same

field

can

also

be

boosted

differently

using

Payloads

(requires

a

custom

scoring

implementa@on):

• Content

Field:

design

[1]

/

engineer

[1]

/

really

[

]

/

great

[

]

/

job

[

]

/

ten[3]

/

years[3]

/

experience[3]

/

careerbuilder

[2]

/

design

[2],

…

Payload

Bucket

Mappings:

job@tle:

bucket=[1]

boost=10;

company:

bucket=[2]

boost=4;

jobdescrip@on:

bucket=[]

weight=1;

experience:

bucket=[3]

weight=1.5

We

can

pass

in

a

parameter

to

solr

at

query

@me

specifying

the

boost

to

apply

to

each

bucket

i.e.

…&bucketWeights=1:10;2:4;3:1.5;default:1;

• This

allows

us

to

map

many

relevancy

buckets

to

search

terms

at

index

@me

and

adjust

the

weigh@ng

at

query

@me

without

having

to

search

across

hundreds

of

fields.

• By

making

all

scoring

parameters

overridable

at

query

@me,

we

are

able

to

do

A

/

B

tes@ng

to

consistently

improve

our

relevancy

model](https://image.slidesharecdn.com/buildingareal-timesolr-poweredrecommendationengine-120518121336-phpapp02/75/Building-a-Real-time-Solr-powered-Recommendation-Engine-38-2048.jpg)