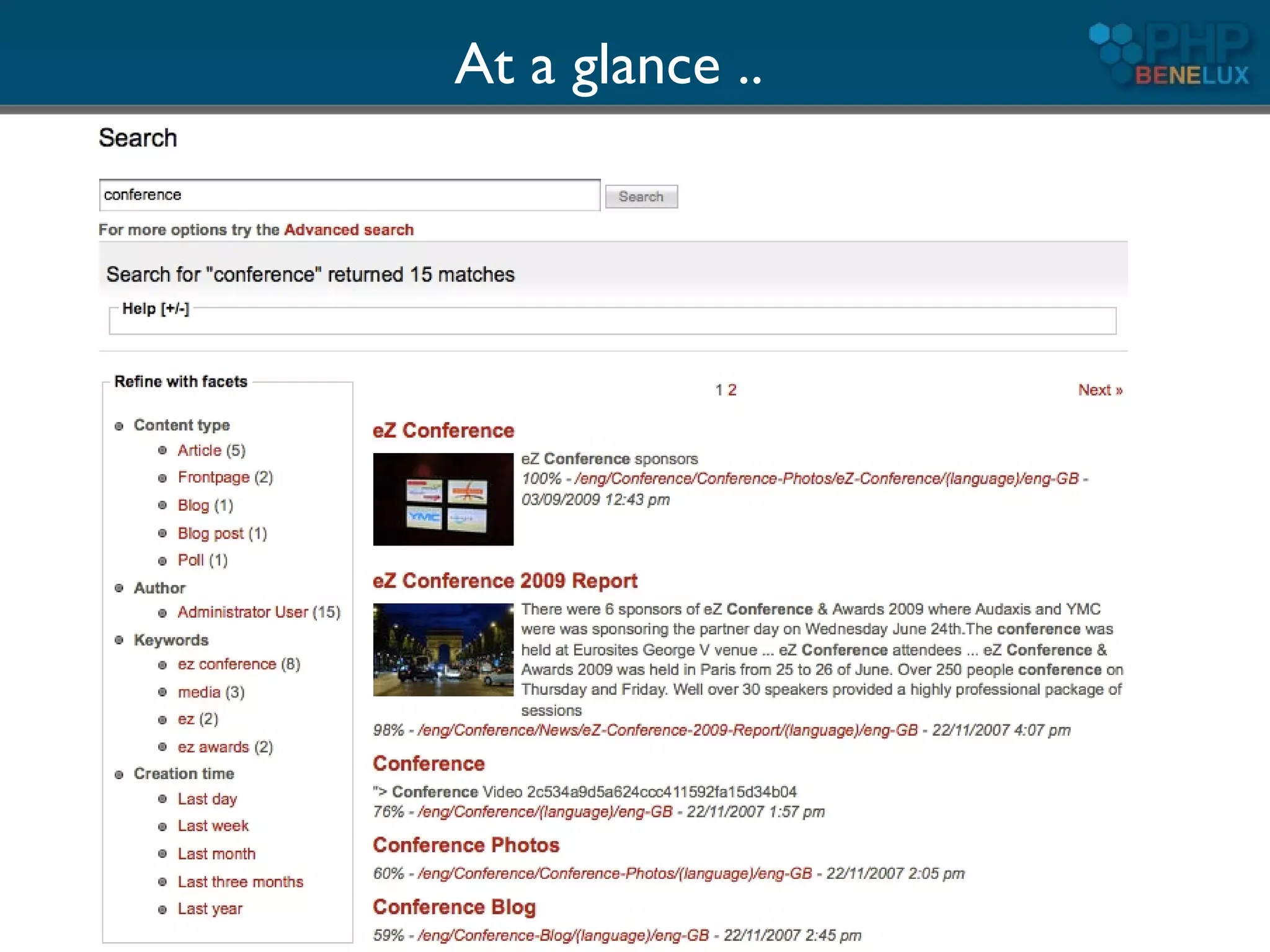

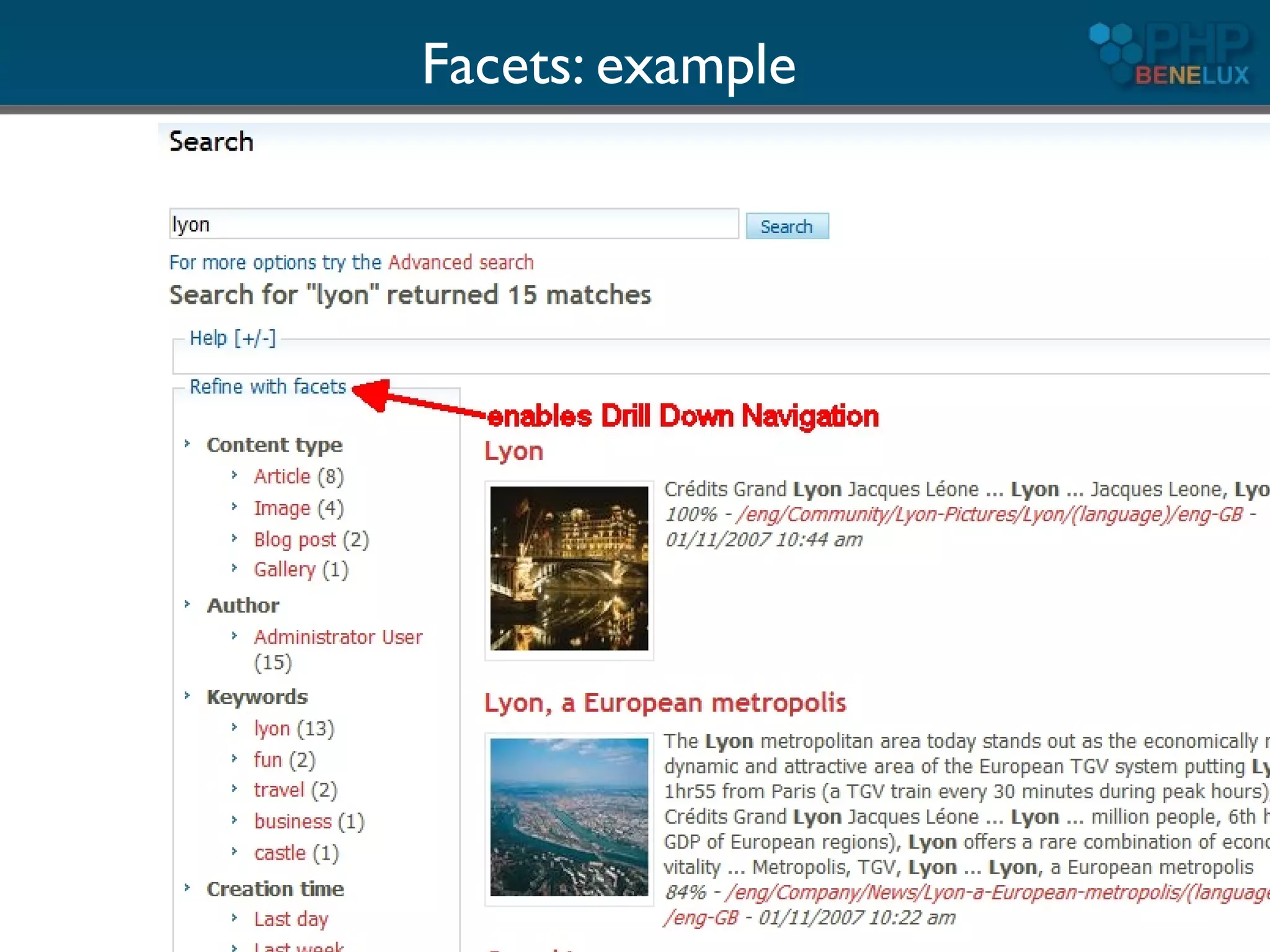

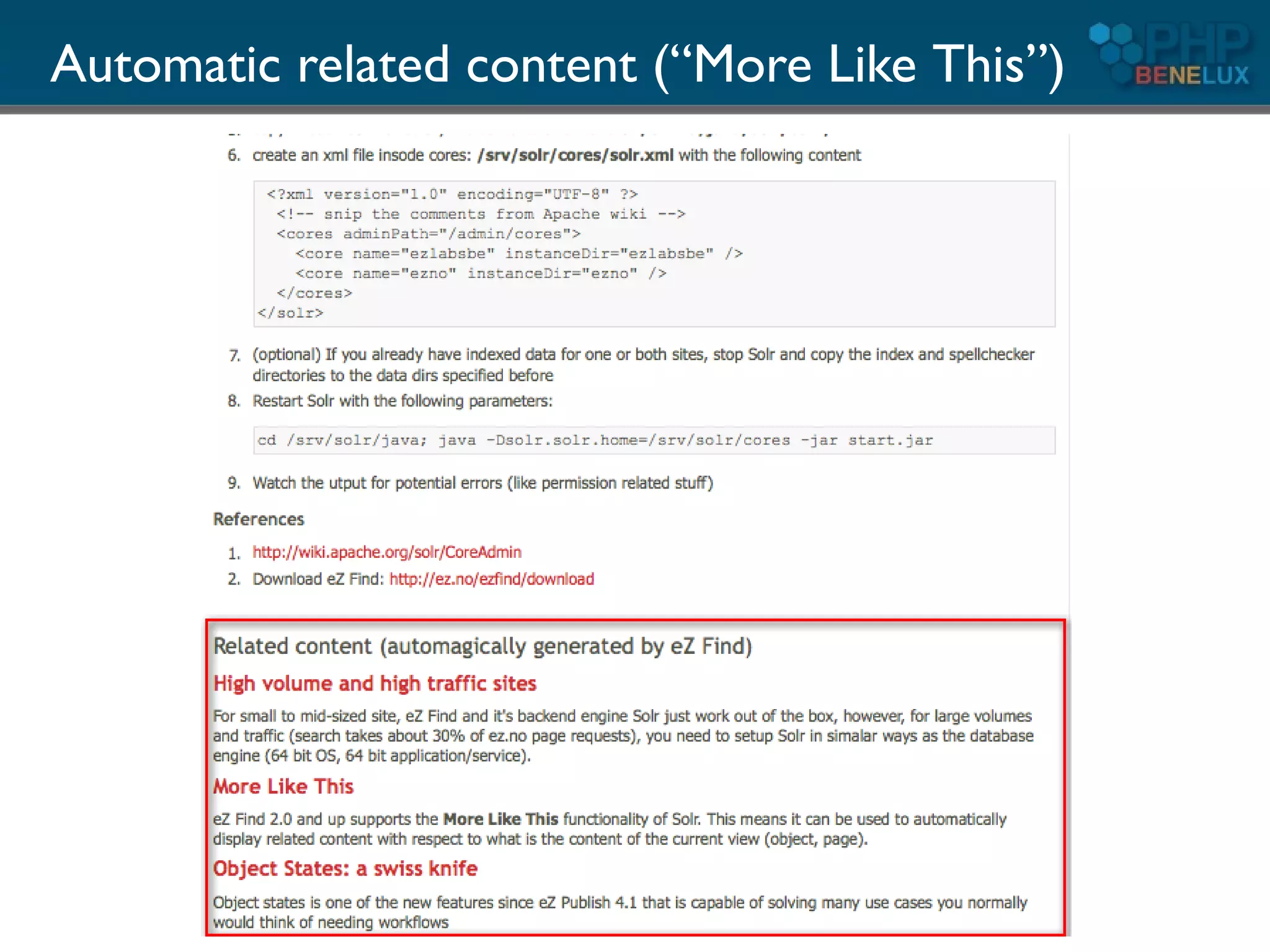

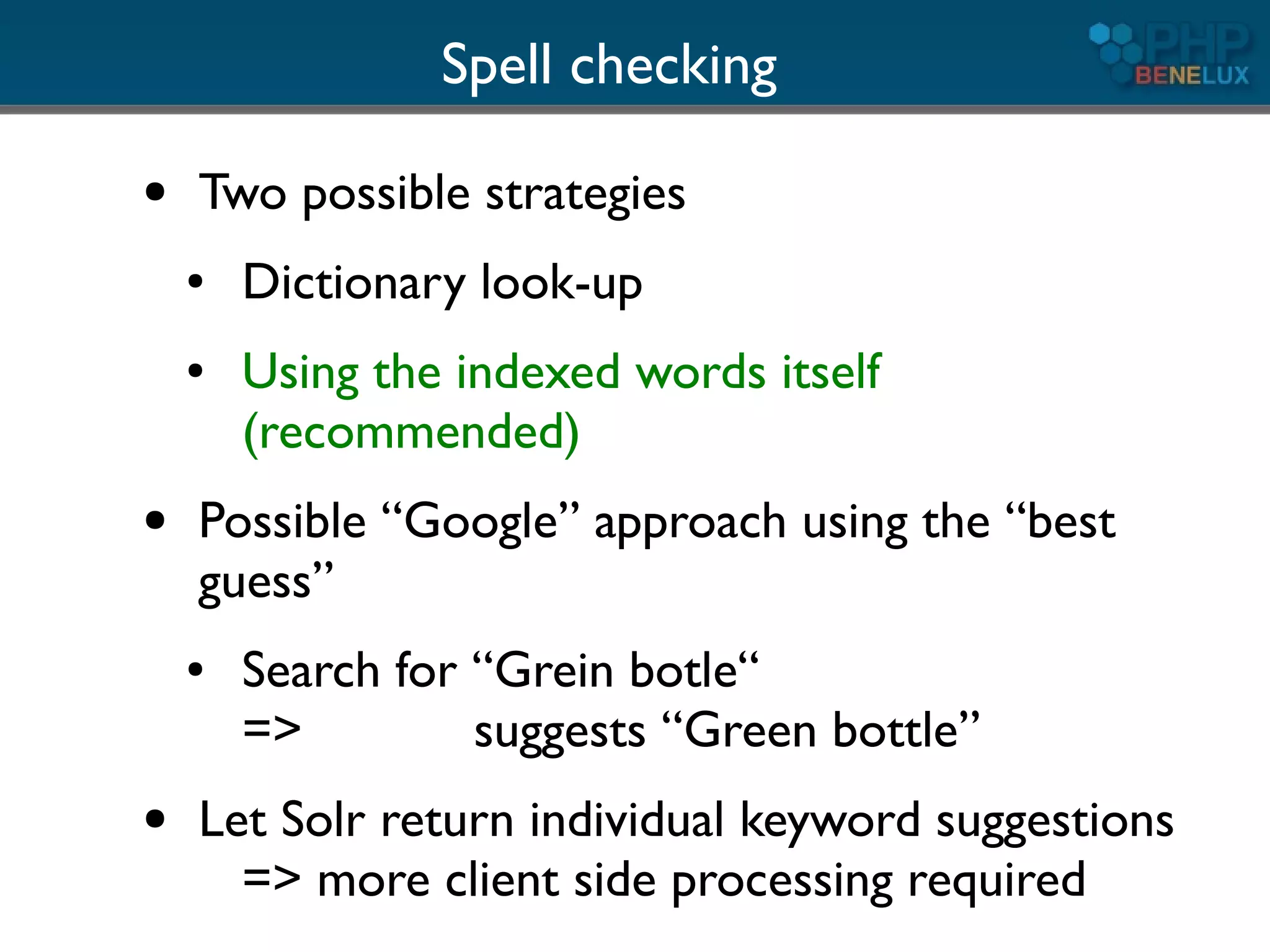

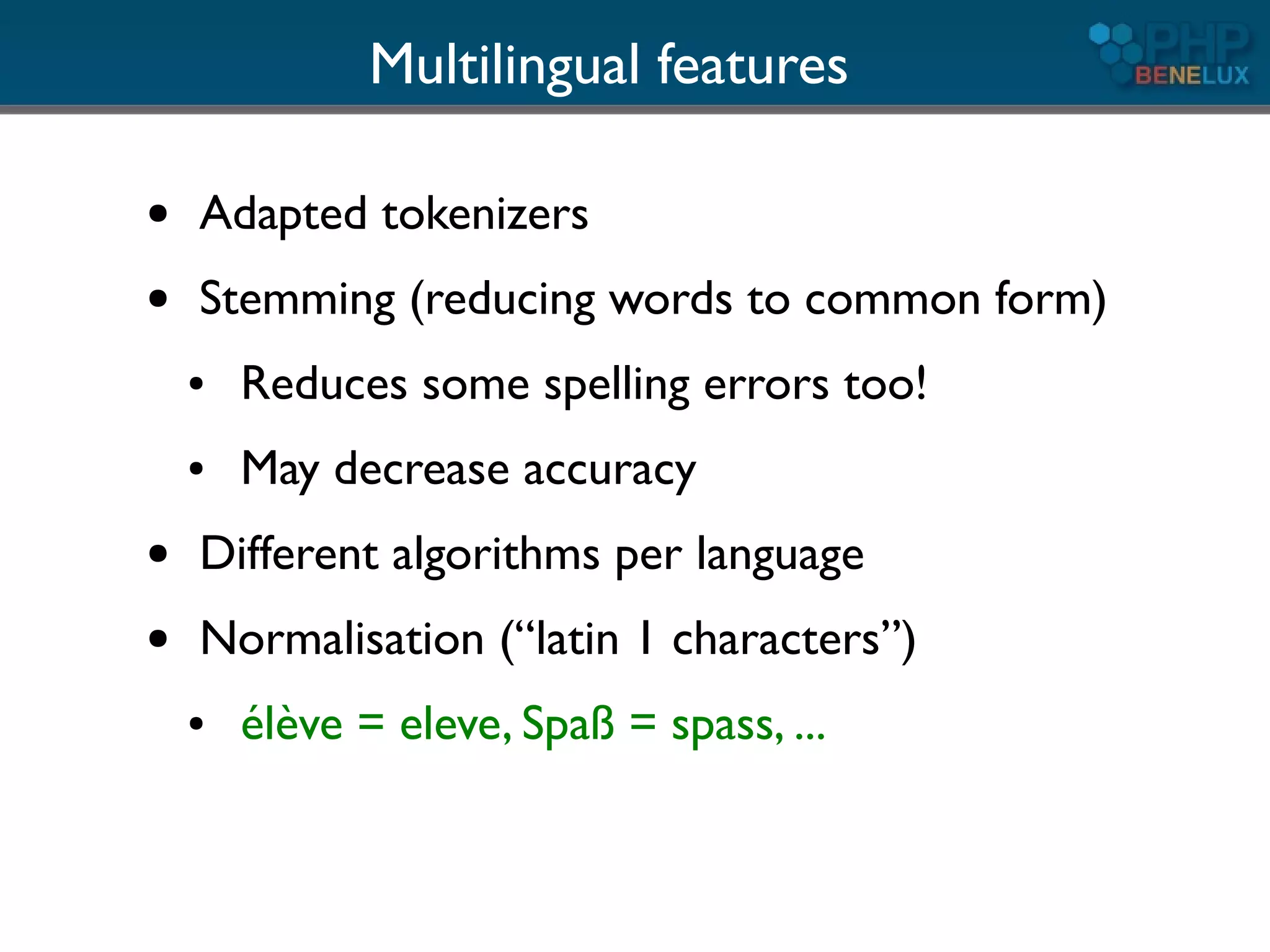

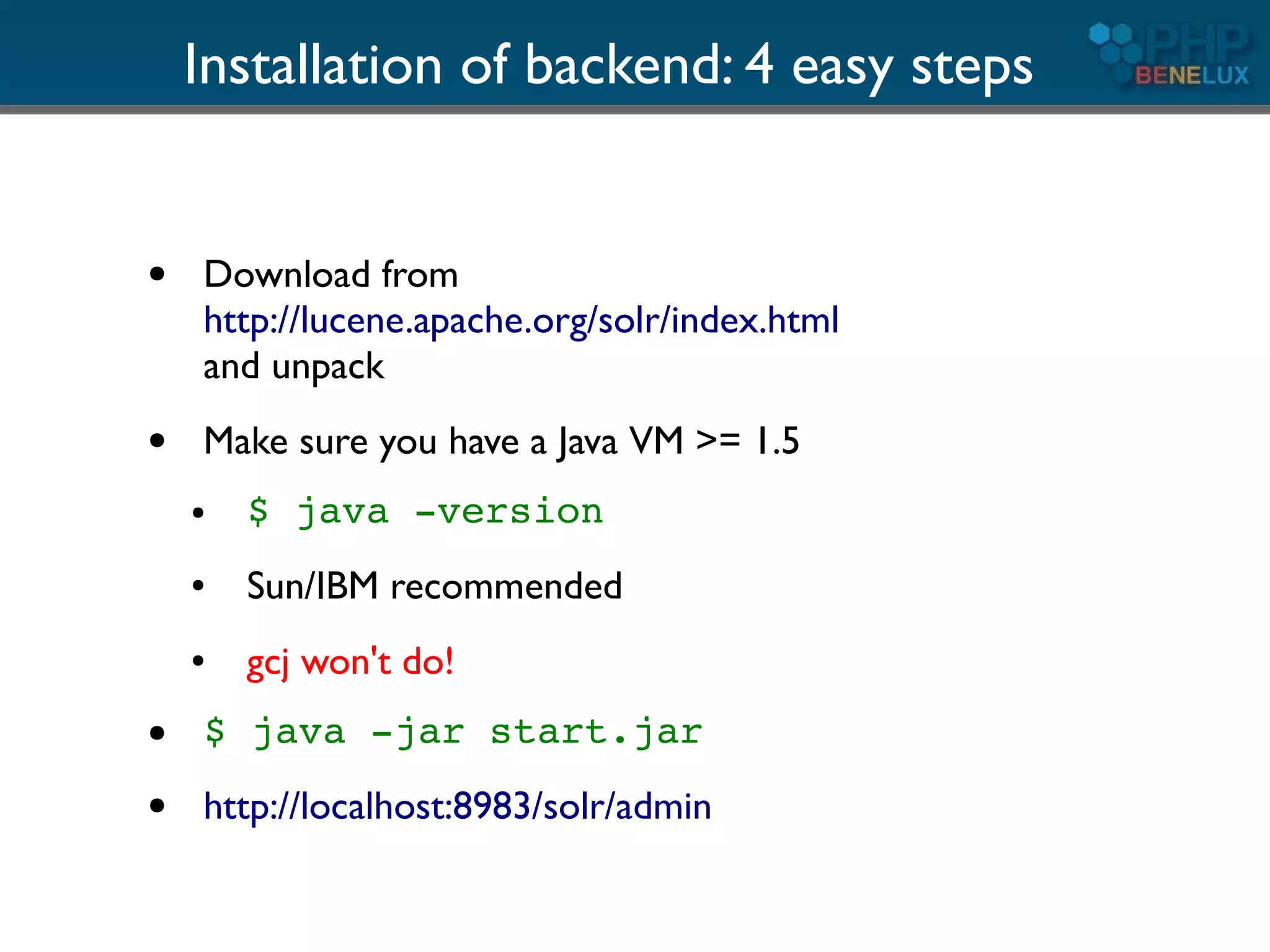

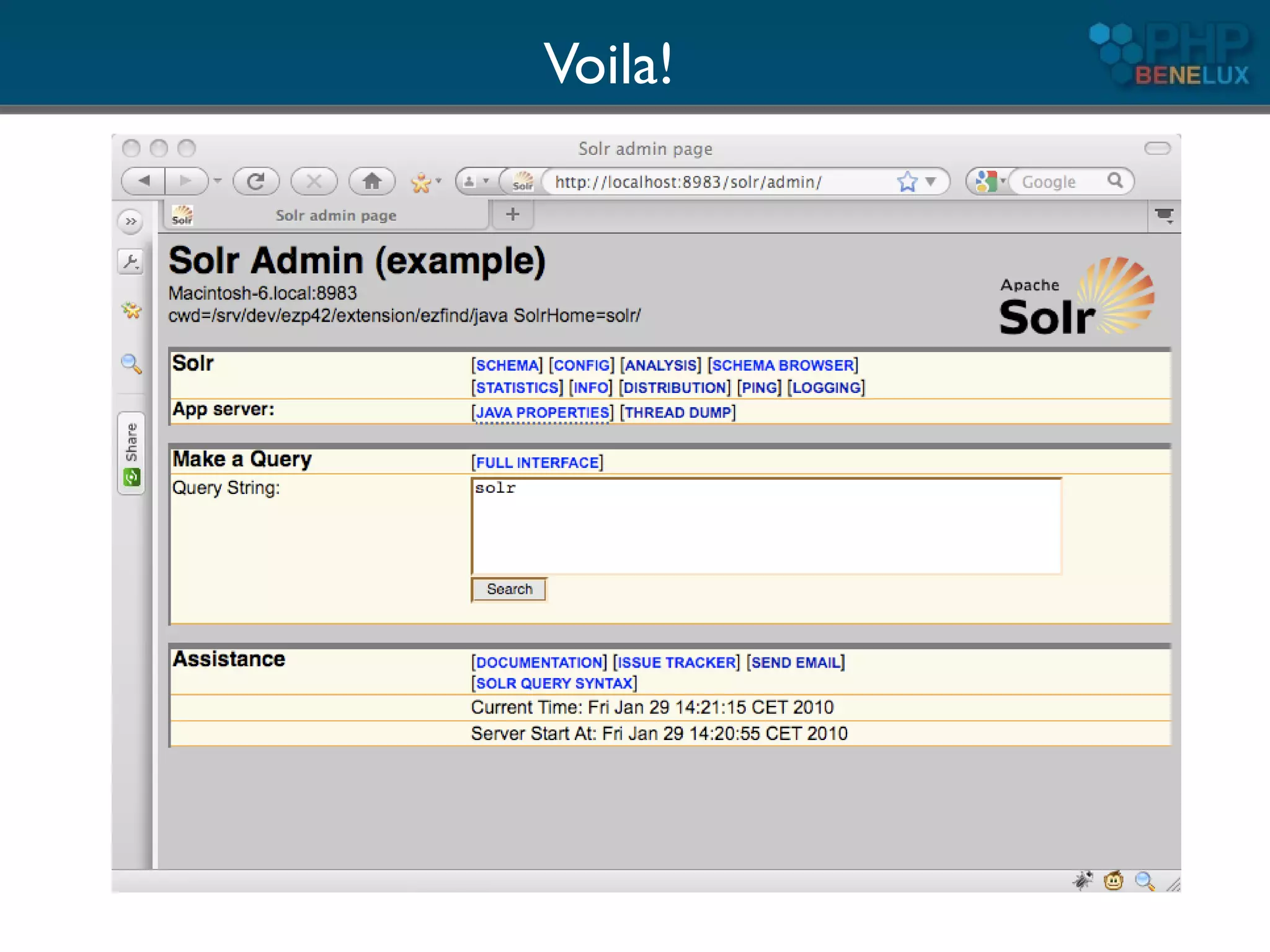

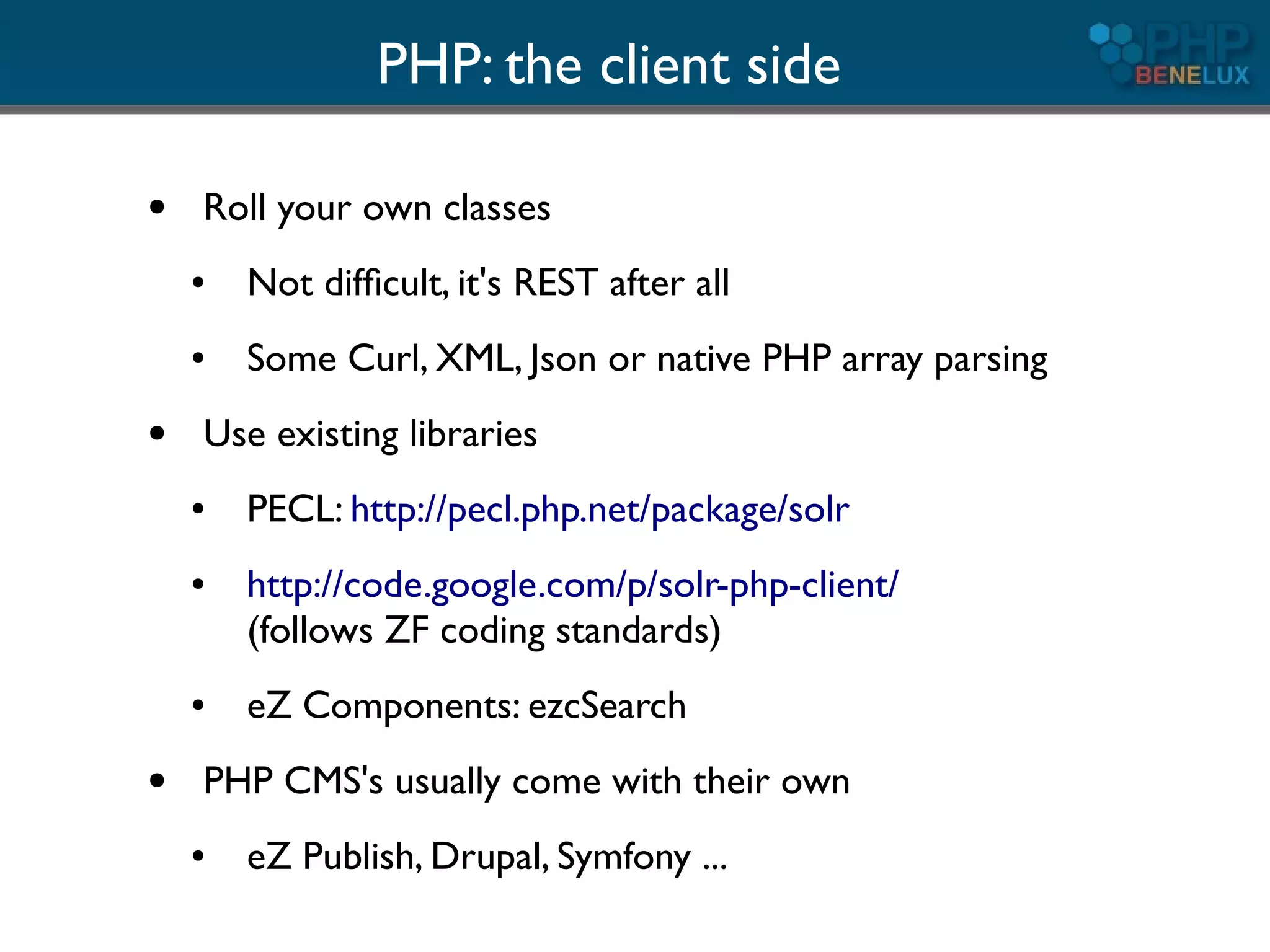

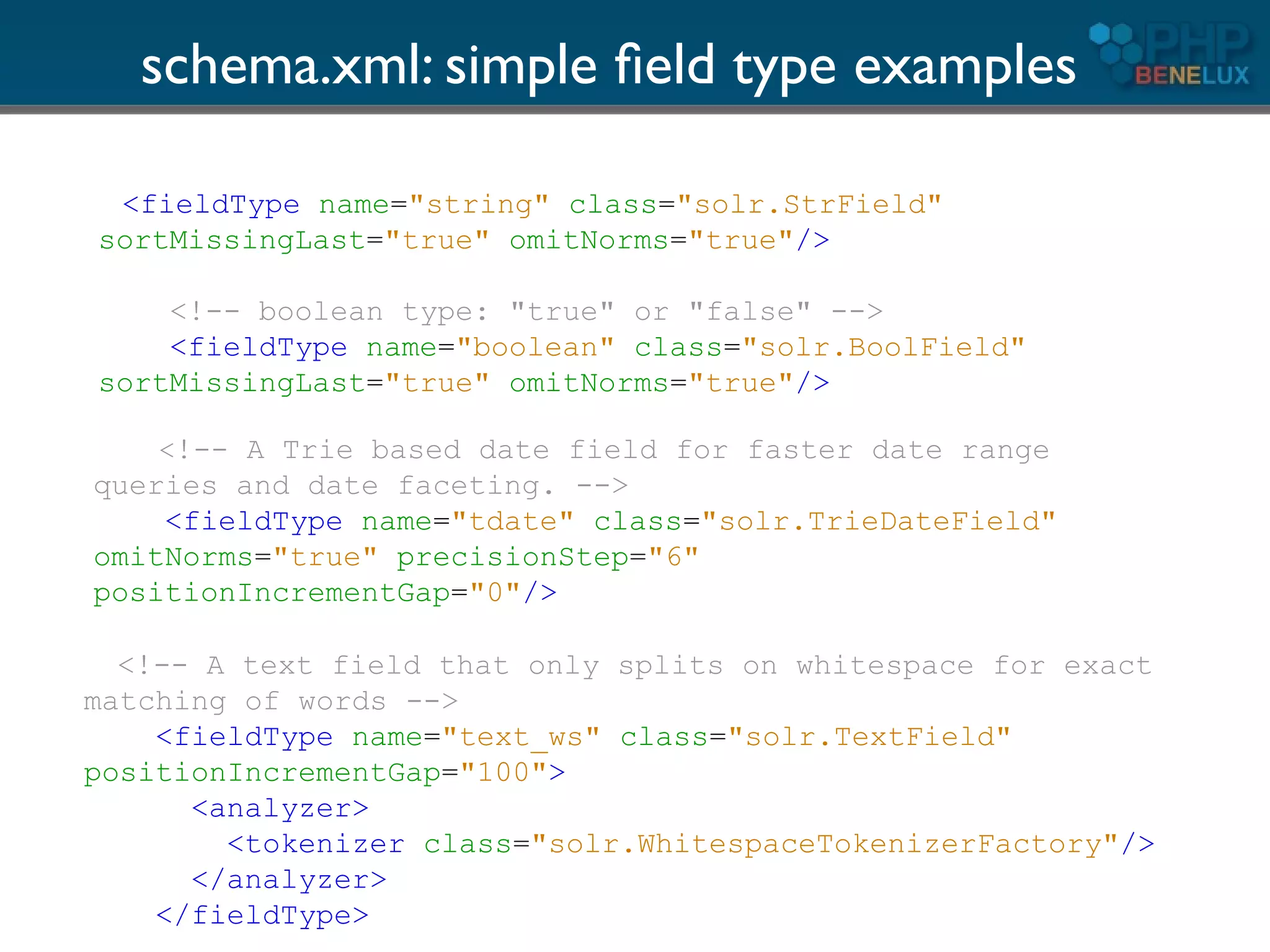

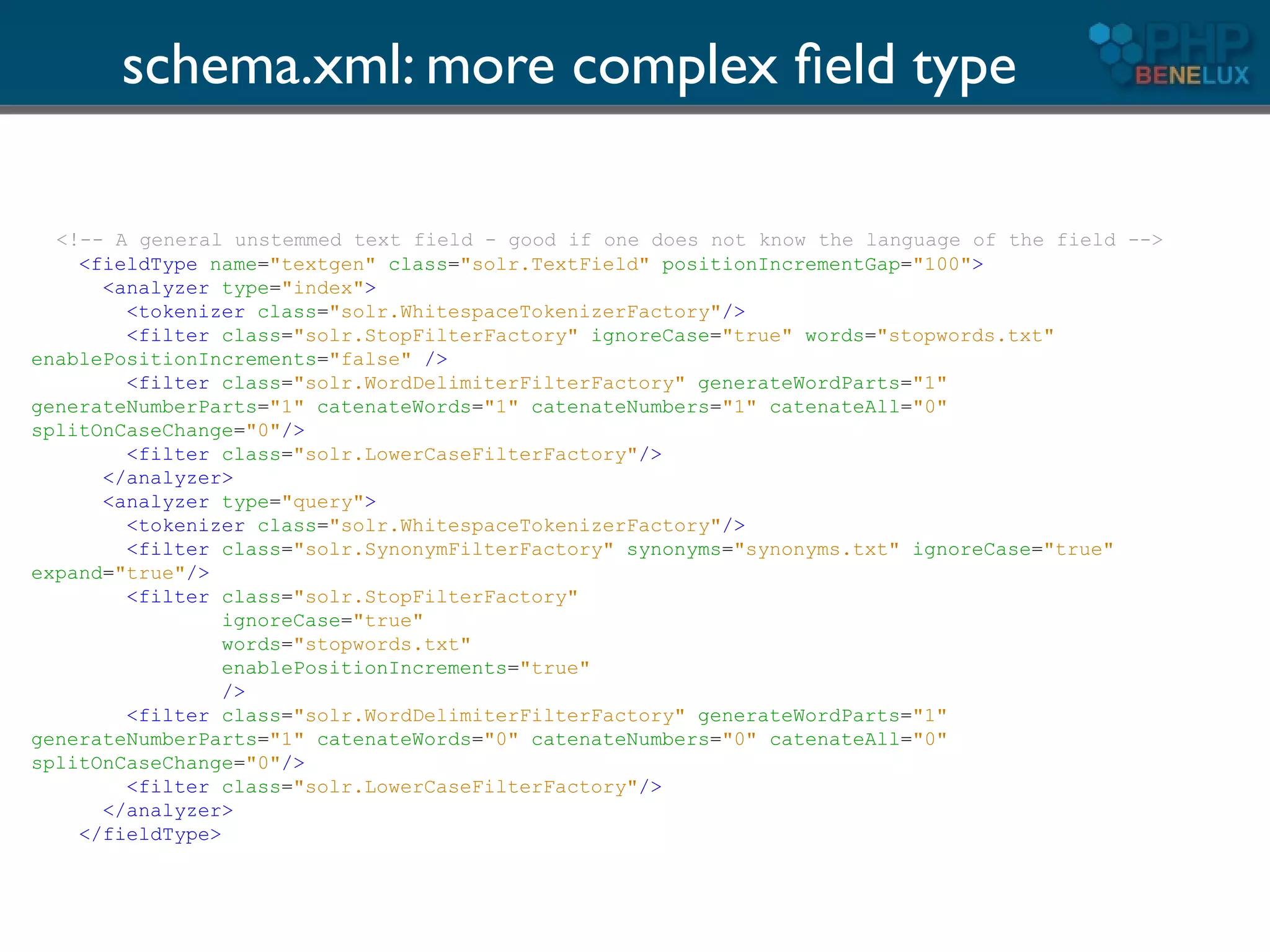

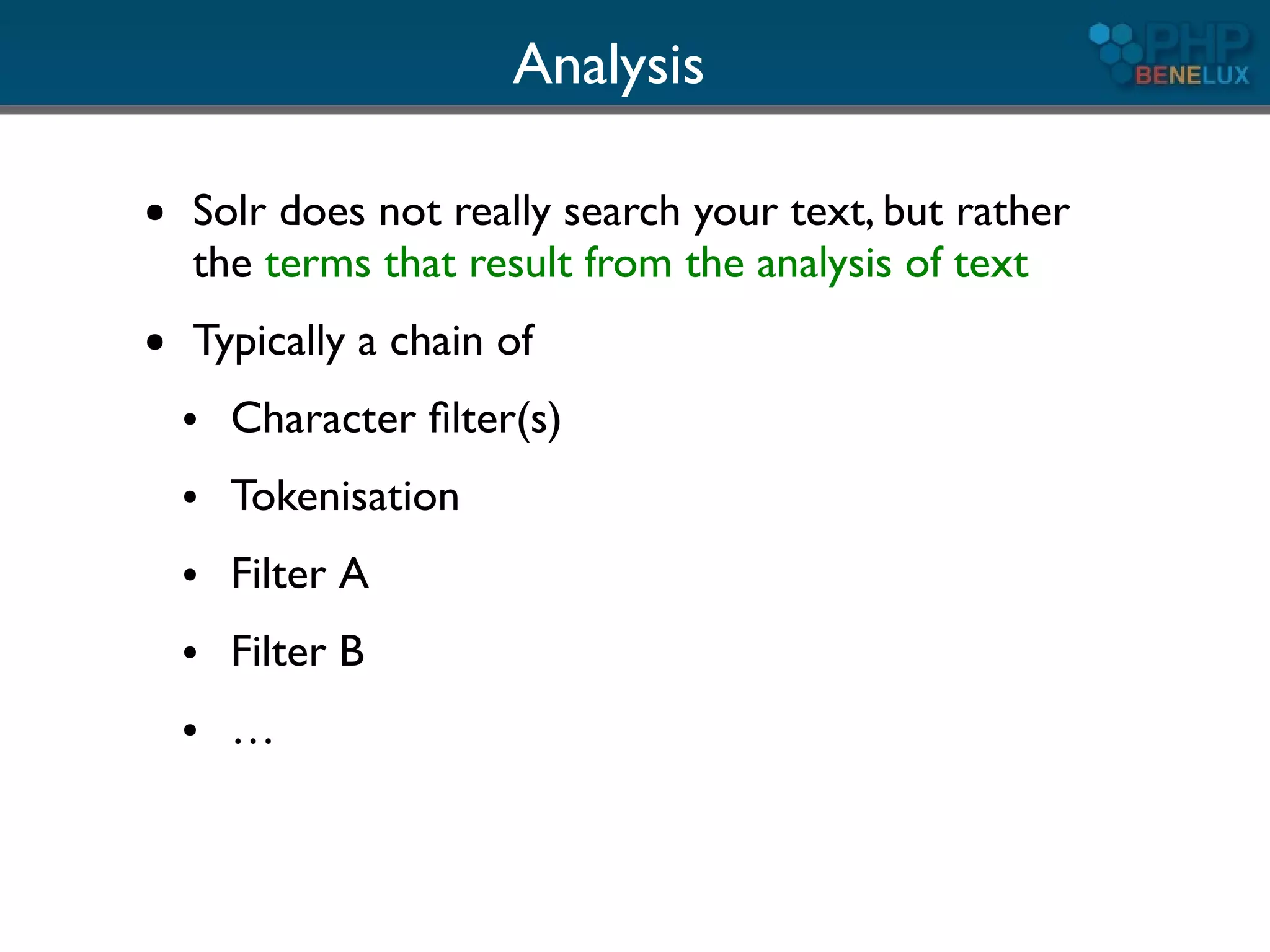

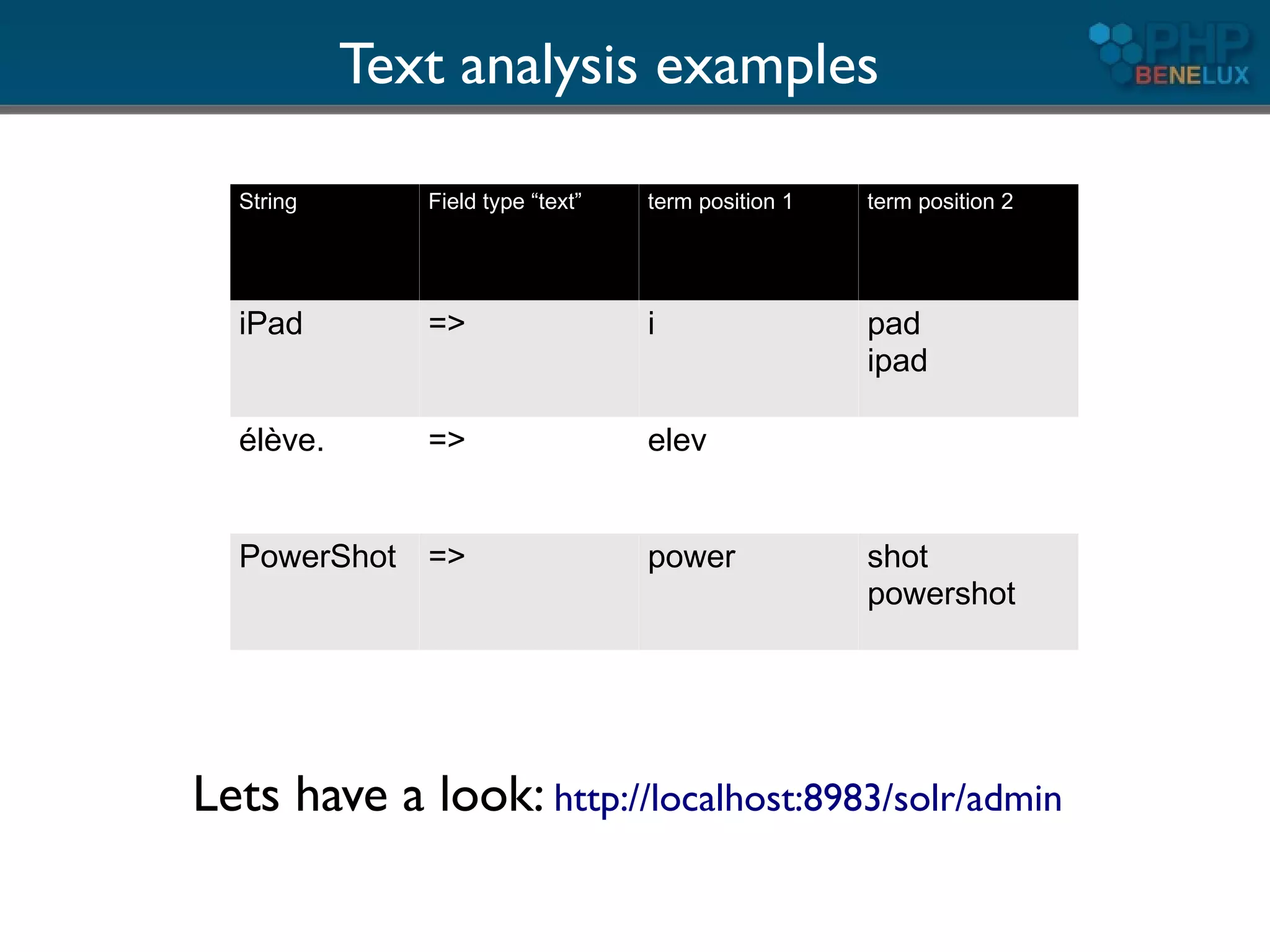

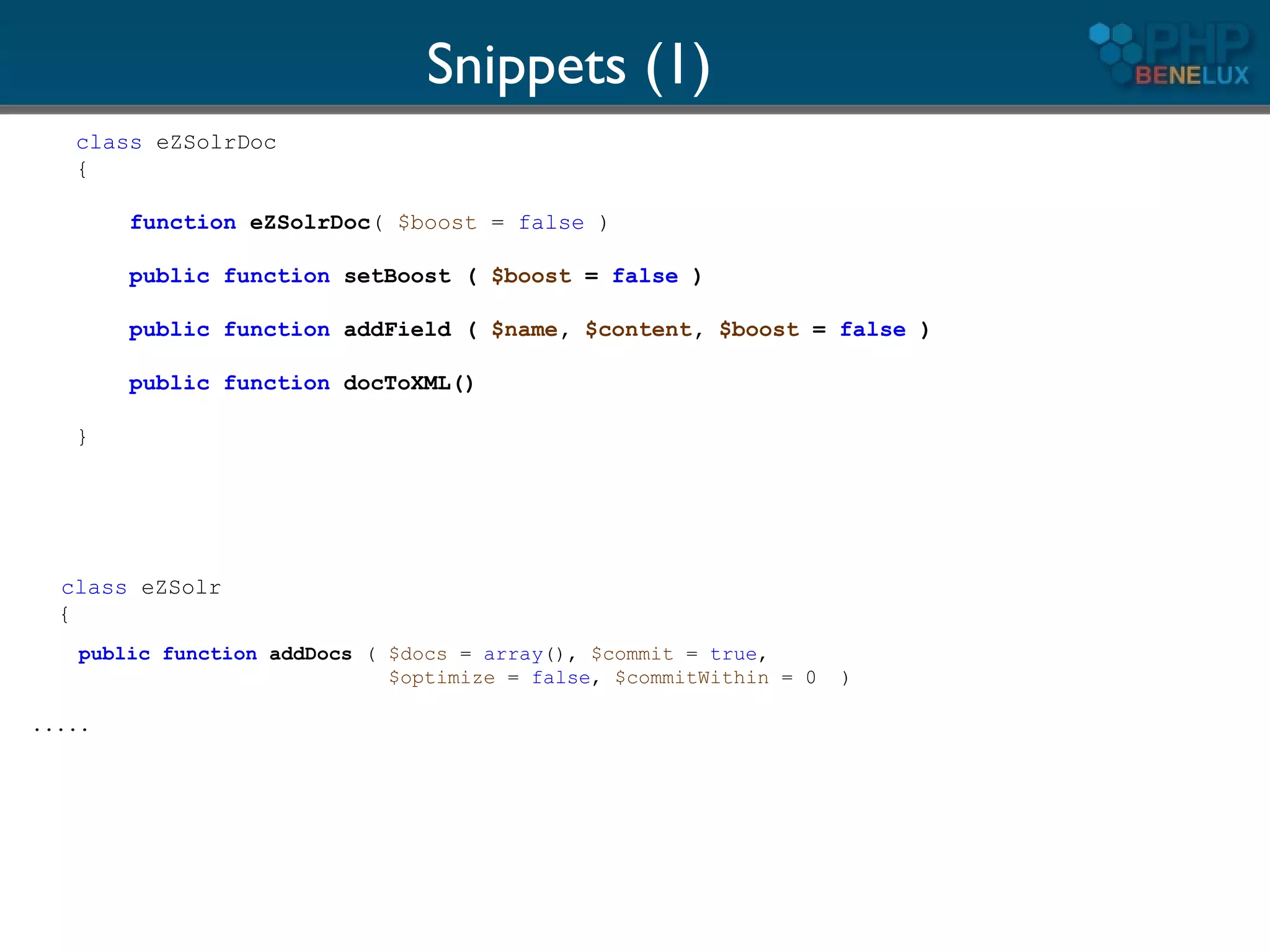

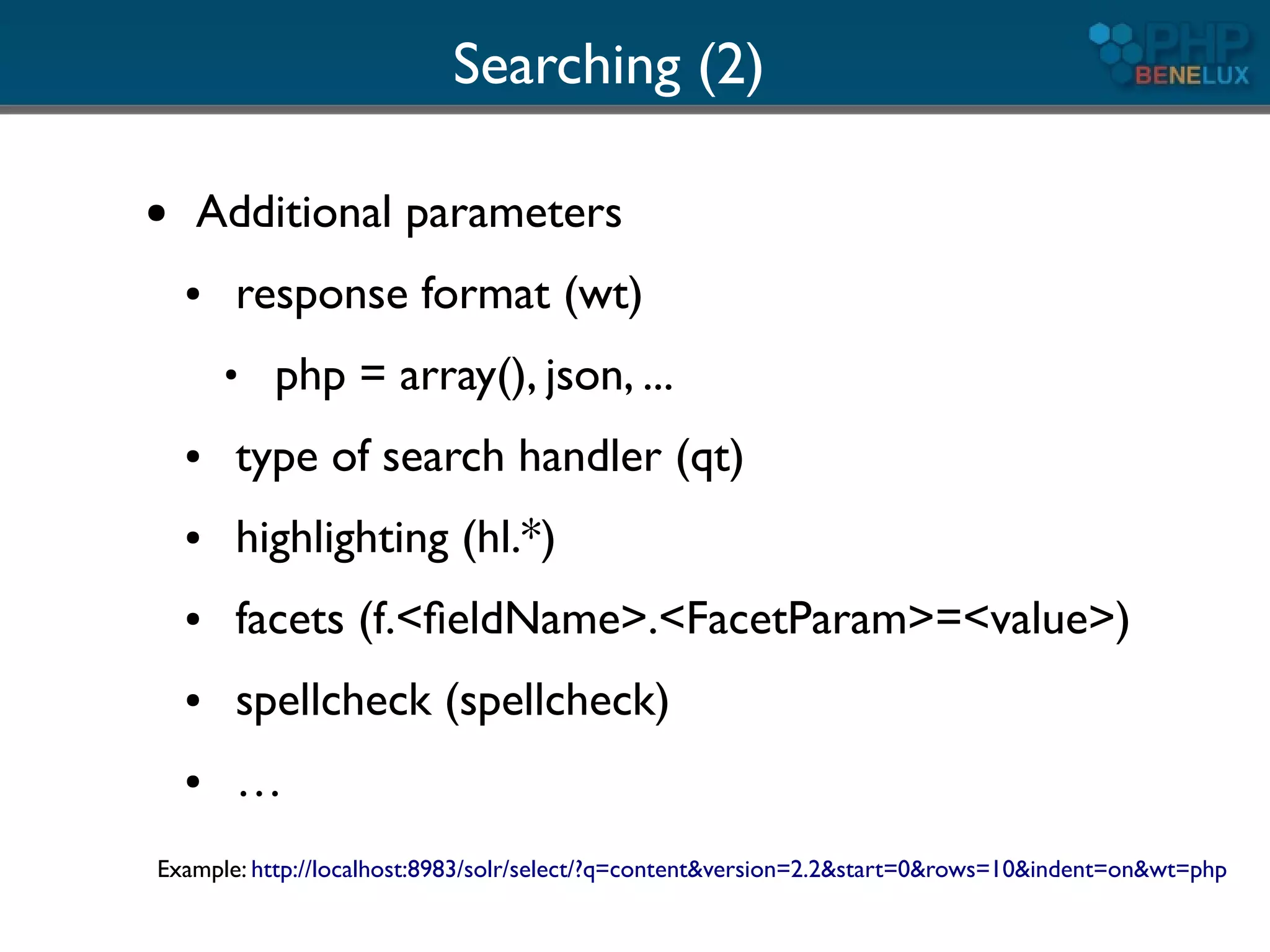

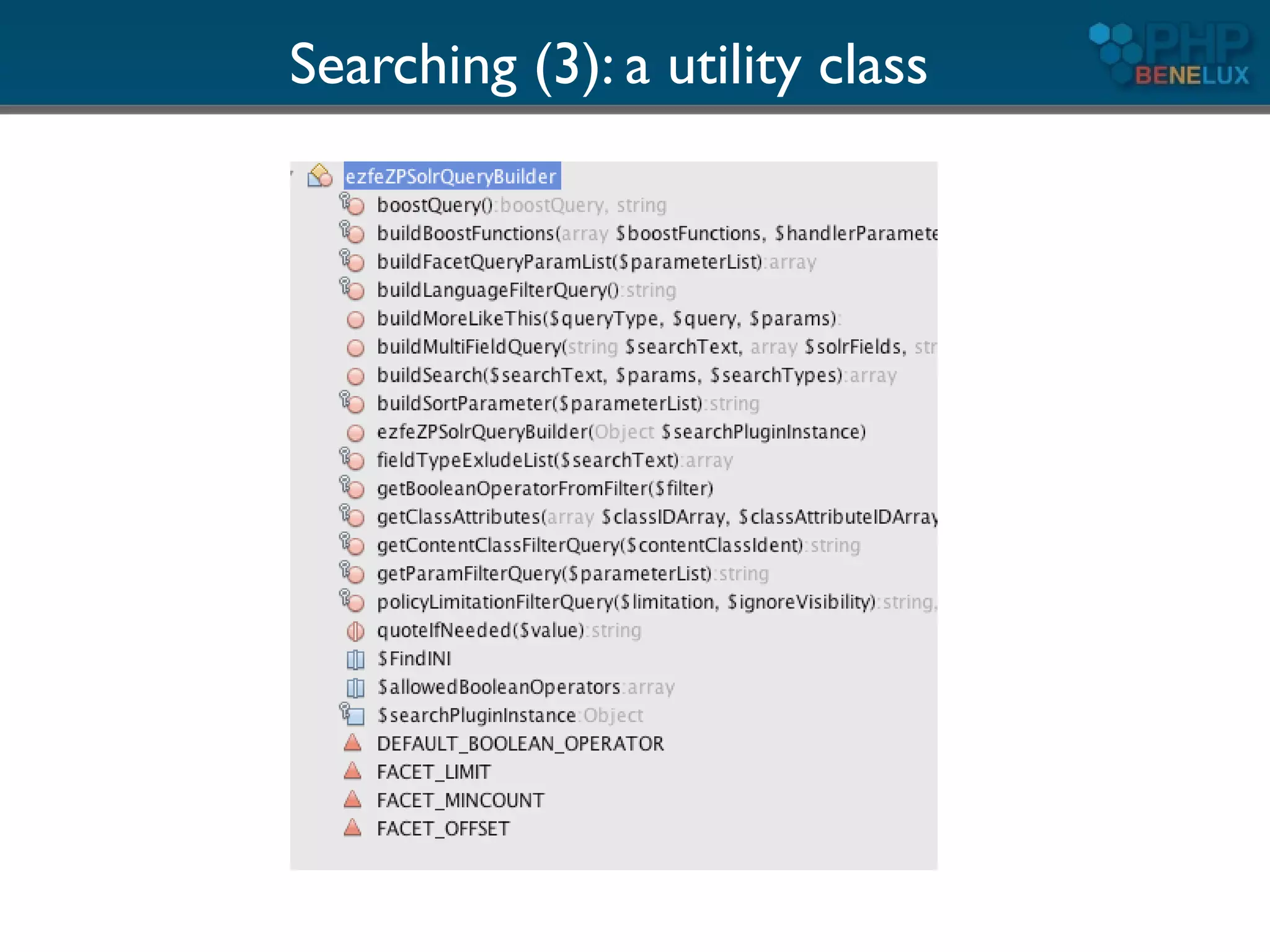

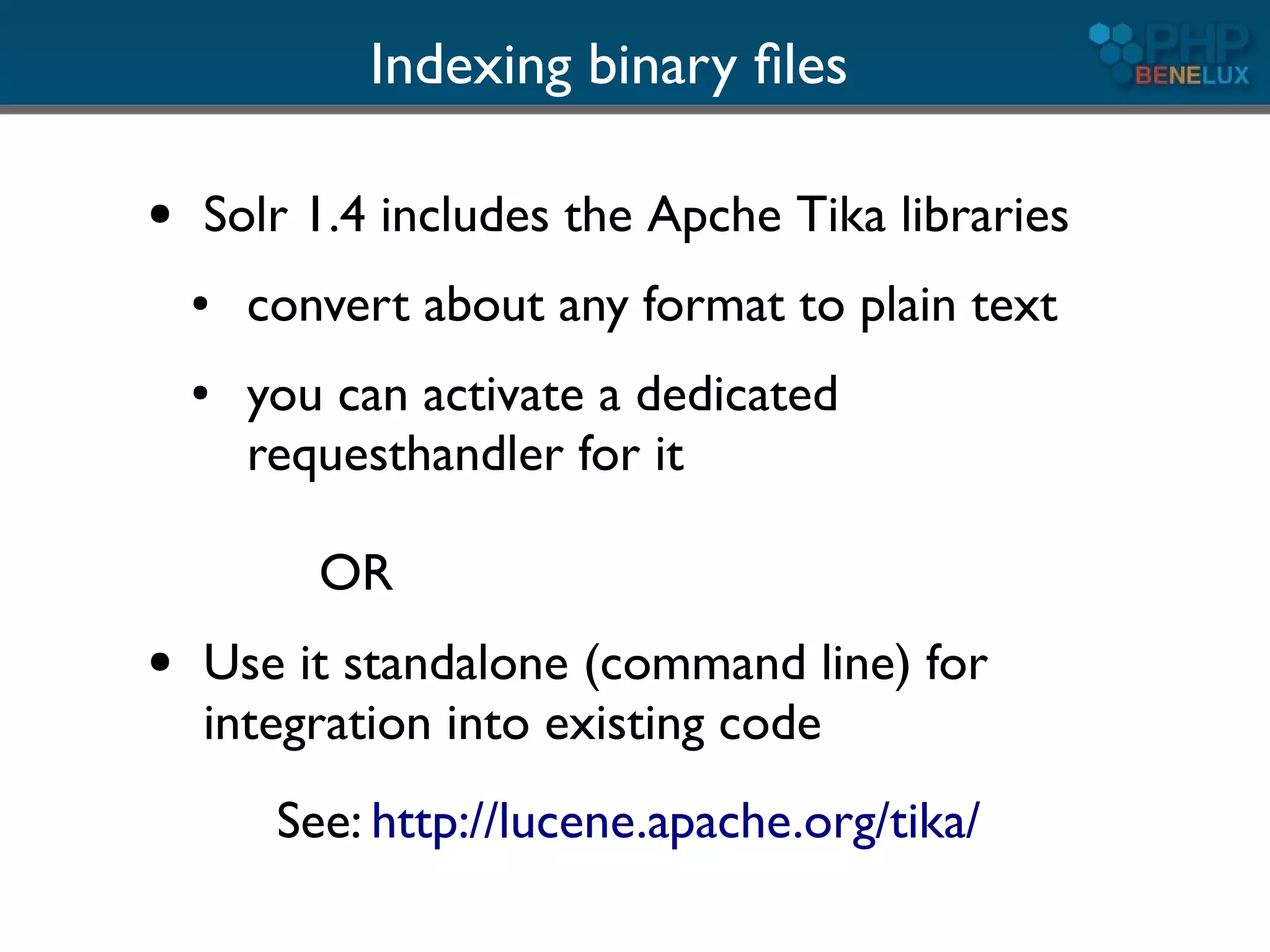

This document gives an overview of using Apache Solr search from PHP: Solr's features, installation steps, and integration with PHP applications. It covers Solr's capabilities, including full-text search, relevancy ranking, and text analysis, along with backend setup and schema configuration, with examples. It also offers tips on improving performance and supporting multiple languages, and lists resources for further exploration.