The document is a research paper that presents a new symmetric block cipher algorithm for image encryption based on the 3D Rossler system, demonstrating its efficacy through experimentation. The algorithm combines chaotic cryptography with a unique key-splitting method applied to the RGB channels of color images, enhancing both the encryption structure and diffusion scheme. Security analyses show promising results, indicating strong performance and resistance to vulnerabilities in comparison to existing methods.

![Table of Contents VOL 14, NO 1 JULY 2014

Symmetric Image Encryption Algorithm Using 3D Rossler System (p. 1)

Vishnu G. Kamat and Madhu Sharma

Node Monitoring with Fellowship Model against Black Hole Attacks in MANET (p. 14)

Rutuja Shah, M.Tech (I.T.-Networking), Lakshmi Rani, M.Tech (I.T.-Networking) and S. Sumathy, AP [SG]

Load Balancing using Peers in an E-Learning Environment (p. 22)

Maria Dominic and Sagayaraj Francis

E-Transparency and Information Sharing in the Public Sector (p. 30)

Edison Lubua (PhD)

A Survey of Frequent Subgraphs and Subtree Mining Methods (p. 39)

Hamed Dinari and Hassan Naderi

A Model for Implementation of IT Service Management in Zimbabwean State Universities (p. 58)

Munyaradzi Zhou, Caroline Ruvinga, Samuel Musungwini and Tinashe Gwendolyn Zhou

Present a Way to Find Frequent Tree Patterns using Inverted Index (p. 66)

Saeid Tajedi and Hasan Naderi

An Approach for Customer Satisfaction: Evaluation and Validation (p. 79)

Amina El Kebbaj and A. Namir

Spam Detection in Twitter – A Review (p. 92)

C. Divya Gowri and Professor V. Mohanraj

IJCSBI.ORG](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-2-320.jpg)

![International Journal of Computer Science and Business Informatics

IJCSBI.ORG

ISSN: 1694-2108 | Vol. 14, No. 1. JUNE-JULY 2014 1

Symmetric Image Encryption Algorithm Using 3D Rossler System

Vishnu G. Kamat

M.Tech student in Information Security and Management

Department of IT, DIT University

Dehradun, India

Madhu Sharma

Assistant Professor

Department of Computer Science, DIT University

Dehradun, India

ABSTRACT

Much recent research on image encryption has used chaotic maps. In this paper, we propose a new symmetric block cipher algorithm based on the 3D Rossler system. The algorithm builds on the approaches of Mohamed Amin et al. [Commun. Nonlinear Sci. Numer. Simulat. (2010)] and Vinod Patidar et al. [Commun. Nonlinear Sci. Numer. Simulat. (2009)]: the encryption structure of the first and the diffusion scheme of the second are combined with a method of splitting the key across the three dimensions of the Rossler system to encrypt color (RGB) images. The experimental results suggest better overall performance of the algorithm.

Keywords

Image Encryption, Rossler System, Block Cipher, Security Analysis.

1. INTRODUCTION

Image encryption differs from text encryption. An image is made up of pixels that are highly correlated with one another, so different approaches are followed for encrypting images [1-12]. One of these approaches is chaotic cryptography, which uses chaotic maps to generate good pseudo-random numbers. The properties of these maps, such as sensitive dependence on initial parameters and ergodic, random-like behavior, make them well suited to designing secure cryptographic algorithms. Many researchers have proposed chaos-based encryption schemes in recent years [4-12].

A scheme proposed by Mohamed Amin et al. [11] uses the tent map as its chaotic map and is implemented for grayscale images. It introduced the approach of treating the plaintext as blocks of bits rather than blocks of pixels. Another scheme, proposed by Vinod Patidar et al. [12], uses the chaotic standard and logistic maps and introduces a way of spreading bits through diffusion to avoid redundancy. In this paper, we propose an algorithm that combines the merits of these schemes. The](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-3-320.jpg)

![algorithm uses the Rossler system for chaotic key generation. We demonstrate a way to split the three dimensions of the key across the three image channels, i.e. red, green and blue. The algorithm in [11] is used as the base structure, and the diffusion concept from [12] is used to spread the effect of adding the key. Together, the symmetric Feistel structure, the diffusion method and the key splitting give the encryption scheme better results.

The rest of the paper is organized as follows: Section 2 gives a brief overview of the Rossler system, Section 3 provides the algorithmic details, Section 4 presents the results of the security analysis, and Section 5 concludes the paper.

2. BRIEF OVERVIEW OF 3D ROSSLER SYSTEM

The Rossler system is a system of nonlinear differential equations with chaotic properties [13], defined by Otto Rossler in 1976. The equations are given below:

$$
\begin{aligned}
X_{n+1} &= -Y_n - Z_n \\
Y_{n+1} &= X_n + \alpha Y_n \\
Z_{n+1} &= \beta + Z_n (X_n - \gamma)
\end{aligned}
\tag{1}
$$

where α, β and γ are real parameters. The behavior of the Rossler system depends on the values of α, β and γ, and changes considerably as they vary: the system may be chaotic, converge toward a fixed point, follow a periodic orbit or escape towards infinity. The Rossler system displays chaotic behavior for α = 0.432, β = 2 and γ = 4.

Chaotic behavior means that, with the parameters held constant, even a slight change in the initial value brings a significant change in the subsequent values. For example, with X0 = -1, the value Z0 = 0.3 gives Z1 = 2 + 0.3(-1 - 4) = 0.5, whereas changing Z0 to 0.6 gives Z1 = 2 + 0.6(-1 - 4) = -1. The same holds for changes in the other two dimensions (X and Y). This is known as deterministic chaos: knowing the initial values and parameter values makes it possible to recreate exactly the same chaotic sequence. Hence the initial conditions must be shared between the parties using the system for encryption and decryption.
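The worked example above can be checked with a minimal Python sketch of one iteration of equation (1), applied as a discrete map exactly as written. X0 = -1 is inferred from the example's numbers, and Y0 = 0 is an assumption (Z1 does not depend on Y0). The names `rossler_map_step` and `iterate` are illustrative only.

```python
# Parameter values given in the text for chaotic behaviour.
ALPHA, BETA, GAMMA = 0.432, 2.0, 4.0


def rossler_map_step(x, y, z):
    """One iteration of equation (1); all three updates use the previous values."""
    return -y - z, x + ALPHA * y, BETA + z * (x - GAMMA)


def iterate(x, y, z, steps):
    """Apply equation (1) the given number of times."""
    for _ in range(steps):
        x, y, z = rossler_map_step(x, y, z)
    return x, y, z


# X0 = -1 is implied by the example's numbers; Y0 = 0 is assumed (Z1 ignores Y0).
print(rossler_map_step(-1.0, 0.0, 0.3)[2])  # 0.5
print(rossler_map_step(-1.0, 0.0, 0.6)[2])  # -1.0
print(abs(iterate(-1.0, 0.0, 0.3, 7)[2]) > 1000)  # True
```

The last line shows that, when iterated literally from this starting point, the map grows without bound within a few steps. |Z| passes 1000 at the seventh iteration. It is therefore worth checking that any chosen initial values keep the sequence bounded.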

3. PROPOSED ALGORITHM

In this section we describe the algorithm, which is designed for color (RGB) images. The plaintext (image) is treated as blocks of bits. The block size is 8w bits, where the word size w is 32 bits, so each block is 256 bits long. Each block is divided into eight w-bit registers, on which the operations are performed. The key length](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-4-320.jpg)

![For example, if the image is 252 x 252 pixels, 4 zero bytes of padding are appended to the end of each row, making each row 256 bytes long. The last byte of the image then stores the number of padding bytes as a pixel value (4 in this case), and this value is used to remove the padding after decryption. After retrieving the number of padding bytes ‘n’, the algorithm checks that the last ‘n’ bytes of every row are zero and that the last row holds ‘n-1’ zero bytes followed by the count byte. The padding is then removed to recover the original image.
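The padding step can be sketched in Python as below. The sketch operates on one channel stored as a list of rows of 0-255 integers. It assumes rows are padded to a multiple of the 32-byte (256-bit) block, which matches the 252-to-256 example. Several details are assumptions and do not come from the text: how rows that are already aligned are handled, the big-endian word order in `to_words`, and all function names.

```python
BLOCK_BYTES = 32  # block size 8w bits with w = 32 -> 256 bits = 32 bytes


def pad_channel(rows):
    """Zero-pad every row of one channel (list of lists of 0-255 ints) to a
    multiple of BLOCK_BYTES and store the pad count in the image's last byte."""
    width = len(rows[0])
    n = (-width) % BLOCK_BYTES
    if n == 0:
        # Hypothetical choice: the text does not cover already-aligned rows,
        # so add one full block to leave room for the count byte.
        n = BLOCK_BYTES
    padded = [list(row) + [0] * n for row in rows]
    padded[-1][-1] = n  # last byte of the image holds the padding count
    return padded


def unpad_channel(rows):
    """Check and strip the padding added by pad_channel."""
    n = rows[-1][-1]
    # Every row but the last must end in n zeros; the last row ends in
    # n-1 zeros followed by the count byte itself.
    if any(any(row[-n:]) for row in rows[:-1]) or any(rows[-1][-n:-1]):
        raise ValueError("padding check failed")
    return [row[:-n] for row in rows]


def to_words(block):
    """Split one 32-byte block into eight 32-bit words (big-endian assumed)."""
    return [int.from_bytes(bytes(block[i:i + 4]), "big") for i in range(0, BLOCK_BYTES, 4)]
```

For a 252 x 252 channel, `pad_channel` produces 256-byte rows whose final byte is 4, and `unpad_channel` restores the original rows.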

3.2 Key Generation

The key is generated by the 3D chaotic Rossler system shown in (1). The number of key bytes ‘t’ depends on the number of rounds ‘r’: t = 4r + 8. The three equations are used separately. The sequence generated by each equation is used as the key for the red, green and blue channels of the image respectively. Key generation proceeds as follows, and the steps are repeated ‘t’ times to produce the required key bytes.

a. Iterate the Rossler system of equations (1) ‘r’ times, where ‘r’ is the number of rounds.

b. Use the fractional (decimal) part of the X, Y and Z values to generate the key bytes.

Xn = abs(Xn - integer part of Xn); // fractional part of X

Yn = abs(Yn - integer part of Yn); // fractional part of Y

Zn = abs(Zn - integer part of Zn); // fractional part of Z

c. The key byte for each channel (R, G, B) is obtained from the X, Y and Z value respectively by mapping it to a value between 0 and 255.

d. For the next set of key bytes, the number of iterations is changed to the exclusive-or of the current set of key bytes.

Iterations for next key bytes = XOR(key byte R, key byte G, key byte B);
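The key-generation steps can be sketched in Python as follows. This is not the authors' implementation, and it makes one deliberate substitution. Iterating the right-hand sides of (1) as a discrete map can grow without bound for these parameters, as it does from the starting point (-1, 0, 0.3). The sketch instead integrates Rossler's continuous equations dx/dt = -y - z, dy/dt = x + αy, dz/dt = β + z(x - γ) with a classical fourth-order Runge-Kutta step, and one "iteration" means one step of size h = 0.01. The following are all assumptions: the step size, the fractional-part-to-byte mapping floor(256 * frac), the guard that keeps the iteration count at least 1 when the XOR is zero, the example initial conditions, and the function names.

```python
import math

# Parameters from the text for chaotic behaviour of the Rossler system.
ALPHA, BETA, GAMMA = 0.432, 2.0, 4.0
H = 0.01  # assumed integration step; the text does not specify one


def rossler(state):
    """Right-hand side of the continuous Rossler equations."""
    x, y, z = state
    return (-y - z, x + ALPHA * y, BETA + z * (x - GAMMA))


def rk4_step(state, h=H):
    """Advance the state by one classical fourth-order Runge-Kutta step."""
    def shifted(k, c):
        return tuple(s + c * ki for s, ki in zip(state, k))
    k1 = rossler(state)
    k2 = rossler(shifted(k1, h / 2))
    k3 = rossler(shifted(k2, h / 2))
    k4 = rossler(shifted(k3, h))
    return tuple(s + h / 6 * (d1 + 2 * d2 + 2 * d3 + d4)
                 for s, d1, d2, d3, d4 in zip(state, k1, k2, k3, k4))


def to_key_byte(value):
    """Steps b and c: fractional part |v - trunc(v)| mapped to 0..255 (mapping assumed)."""
    frac = abs(value - math.trunc(value))
    return int(frac * 256)


def generate_keys(x0, y0, z0, rounds):
    """Return (red, green, blue) key-byte lists, each of length t = 4r + 8."""
    t = 4 * rounds + 8
    state = (x0, y0, z0)
    iterations = rounds  # step a: the first set of key bytes uses r iterations
    red, green, blue = [], [], []
    for _ in range(t):
        for _step in range(iterations):
            state = rk4_step(state)
        kr, kg, kb = (to_key_byte(v) for v in state)
        red.append(kr)
        green.append(kg)
        blue.append(kb)
        # Step d: the next iteration count is the XOR of the current key bytes.
        # max(1, ...) is a hypothetical guard so a zero XOR cannot freeze the state.
        iterations = max(1, kr ^ kg ^ kb)
    return red, green, blue


red, green, blue = generate_keys(-1.0, 0.0, 0.3, rounds=20)
```

With 20 rounds, each channel receives t = 88 key bytes. The initial values (x0, y0, z0) play the role of the shared secret described in Section 2.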

3.3 Vertical and Horizontal Diffusion

The diffusion process explained in [12] is used in the algorithm, with one difference in the horizontal diffusion: it is performed separately on each channel after that channel is encrypted, rather than on the entire image. The diffusion spreads the effect of the key additions across the channel. The horizontal diffusion moves forward from the first pixel of a channel to the last: the second pixel becomes the exclusive-or of the first and second pixels of the channel, the third pixel is the](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-6-320.jpg)
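The forward horizontal diffusion can be sketched in Python as below, for one channel flattened into a row-major list. The text breaks off after the second pixel, so the chaining rule is an assumption: each output pixel is the XOR of the previous output pixel and the current input pixel. The vertical diffusion is not covered, and the function names are illustrative.

```python
def diffuse_forward(pixels):
    """Forward horizontal diffusion over one channel (row-major list of 0-255 ints).
    Assumed rule: out[i] = out[i - 1] XOR pixels[i], with out[0] = pixels[0]."""
    out = list(pixels)
    for i in range(1, len(out)):
        out[i] = out[i - 1] ^ out[i]
    return out


def undiffuse_forward(cipher):
    """Inverse used during decryption: p[i] = c[i - 1] XOR c[i]."""
    return cipher[:1] + [cipher[i - 1] ^ cipher[i] for i in range(1, len(cipher))]


print(diffuse_forward([10, 20, 30, 40]))  # [10, 30, 0, 40]
```

Each output depends on all earlier inputs, so changing one pixel changes every output after it. A single forward pass, however, leaves the pixels before the change untouched.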

![4. EXPERIMENTATION RESULTS

We performed the security analysis on six 256 x 256 color (RGB) images, shown in Fig. 4. The statistical and differential analysis tests give favorable results, indicating the strength and security of the algorithm. Results demonstrating how the vulnerability of [11] is overcome are given in [14].

Figure 4. Plain images (clockwise from top left): Lena, Bridge, Lake, Plane, Peppers and Mandrill

4.1 Statistical Analysis

Statistical analysis is performed to determine the correlation between the

plain image and the cipher image. For an encryption system to be strong the

cipher image should not be correlated to the plain image and the cipher

image pixels should not have correlation among them. In this section we

provide the histogram and correlation analysis.
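The correlation analysis itself falls on pages not reproduced here, so the following is only a sketch of the usual measure. It computes the Pearson correlation coefficient between horizontally adjacent pixel pairs of one channel. Plain images typically give values close to 1, and good cipher images give values close to 0. The pairing direction, the use of population (1/N) moments and the function names are assumptions.

```python
import math


def adjacent_pairs(channel):
    """Horizontally adjacent pixel pairs (p(i, j), p(i, j + 1)) of one channel."""
    xs, ys = [], []
    for row in channel:
        for a, b in zip(row, row[1:]):
            xs.append(a)
            ys.append(b)
    return xs, ys


def correlation(xs, ys):
    """Pearson correlation coefficient; undefined if either sequence is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    vx = sum((x - mx) ** 2 for x in xs) / n
    vy = sum((y - my) ** 2 for y in ys) / n
    return cov / math.sqrt(vx * vy)


print(correlation(*adjacent_pairs([[0, 1, 2, 3], [4, 5, 6, 7]])))
```

The toy ramp above yields a coefficient of (essentially) 1, the perfectly correlated case that a cipher image should avoid.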

4.1.1 Histogram Analysis

When the encrypted image and the plain image do not show a high degree of correlation, the encryption can be considered secure from information leakage. Histograms plot the number of pixels at each intensity level, i.e. pixel values 0-255, showing how the pixel values are distributed.
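Computing the histogram of one channel is straightforward. The sketch below also reports a chi-square statistic against a flat distribution, a common way to put a number on how uniform a cipher-image histogram is. The chi-square measure is an addition for illustration; the paper itself presents the histograms as plots. The function names are illustrative.

```python
def histogram(channel):
    """Count of pixels at each intensity level 0-255 in one channel."""
    counts = [0] * 256
    for row in channel:
        for value in row:
            counts[value] += 1
    return counts


def chi_square_uniform(counts):
    """Chi-square statistic of the counts against a uniform distribution
    (0 for a perfectly flat histogram, larger for more concentrated ones)."""
    expected = sum(counts) / len(counts)
    return sum((c - expected) ** 2 / expected for c in counts)


print(chi_square_uniform(histogram([list(range(256))])))  # 0.0
```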

Fig. 5 shows the histograms of the red, green and blue channels of the plain image ‘lena’ on the left (top to bottom) and the histograms of the same three channels after encryption on the right. They show that the encryption leaves no concentration at any single pixel value.](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-9-320.jpg)

![Values for NPCR and UACI are calculated as given in equations (7) and (8). Here x1 and x2 are the cipher images of a plain image and of the same image with one pixel changed, and B(i,j) is 1 if the two cipher images differ at pixel (i,j) and 0 otherwise. W and H denote the width and height of the cipher images, T denotes the largest supported pixel value in the cipher images (255 in our case), and abs() computes the absolute value. The NPCR and UACI values in Table 4 indicate that the encryption algorithm resists differential attacks.

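$$\mathrm{NPCR} = \frac{\sum_{i,j} B(i,j)}{W \times H} \times 100\% \tag{7}$$

$$\mathrm{UACI} = \frac{1}{W \times H} \sum_{i,j} \frac{\operatorname{abs}\left(x_1(i,j) - x_2(i,j)\right)}{T} \times 100\% \tag{8}$$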

Table 4. NPCR and UACI Values Obtained for Encryption of 6 Plain Images and the Same Images with 1 Pixel Changed

| Plain image | NPCR (%) | UACI (%) |
|---|---|---|
| Lena | 99.6333 | 33.4706 |
| Bridge | 99.5722 | 33.4403 |
| Lake | 99.5900 | 33.5313 |
| Mandrill | 99.6089 | 33.4595 |
| Peppers | 99.6185 | 33.4657 |
| Plane | 99.6206 | 33.4539 |
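Equations (7) and (8) translate directly into code. The Python sketch below computes both measures for two cipher images of one channel, given as equal-sized lists of rows. T = 255 follows the text; the function name is illustrative.

```python
def npcr_uaci(c1, c2, t=255):
    """Return (NPCR, UACI) in percent for two equal-sized cipher images."""
    height, width = len(c1), len(c1[0])
    changed = 0        # running sum of B(i, j)
    intensity = 0.0    # running sum of abs(x1(i, j) - x2(i, j)) / T
    for row1, row2 in zip(c1, c2):
        for a, b in zip(row1, row2):
            changed += a != b
            intensity += abs(a - b) / t
    n = width * height
    return 100.0 * changed / n, 100.0 * intensity / n


print(npcr_uaci([[0, 0], [0, 0]], [[255, 0], [0, 0]]))  # (25.0, 25.0)
```

For two independent, uniformly random 8-bit images, the expected values are about 99.61% for NPCR (that is, 1 - 1/256) and about 33.46% for UACI. These are the usual yardstick for values such as those in Table 4.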

5. CONCLUSION

In this paper we proposed a new image encryption algorithm. Based on their reported results, the merits of recent schemes [11, 12] were combined with a symmetric approach to encryption to provide a secure algorithm. The diffusion mechanism, together with the Feistel structure, strengthens the algorithm. The 3D Rossler system of equations is used for random key generation, and splitting the three dimensions of the key across the three channels makes cryptanalysis aimed at recovering the key more difficult. The experiments performed indicate that the algorithm produces favorable results.

REFERENCES

[1] Chang, C.-C., Hwang, M.-S. and Chen, T.-S., 2001. A New Encryption Algorithm for

Image Cryptosystems. Journal of Systems and Software, Vol. 58, No. 2, pp. 83-91.

[2] Yano, K. and Tanaka, K., 2002. Image Encryption Scheme Based on a Truncated

Baker Transformation. IEICE Transactions on Fundamentals of Electronics,

Communications and Computer Sciences, Vol. E85-A, No. 9, pp. 2025-2035.

[3] Gao, T. and Chen, Z., 2008. Image Encryption Based on a New Total Shuffling

Algorithm. Chaos, Solitons and Fractals, Vol. 38, No. 1, pp. 213-220.](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-14-320.jpg)

![[4] Chen, G., Mao, Y. and Chui, C.K., 2004. A Symmetric Image Encryption Scheme Based on 3D

Chaotic Cat Maps. Chaos, Solitons and Fractals, Vol. 21, pp. 749-761.

[5] Mao, Y., Chen, G. and Lian, S., 2004. A Novel Fast Image Encryption Scheme Based

on 3D Chaotic Baker Maps. International Journal of Bifurcation and Chaos, Vol. 14,

No. 10, pp. 3613-3624.

[6] Guan, Z.-H., Huang, F. and Guan, W., 2005. Chaos Based Image Encryption

Algorithm. Physics Letters A, Vol. 346, pp. 153-157.

[7] Zhang, L., Liao, X. and Wang, X., 2005. An Image Encryption Approach Based on

Chaotic Maps. Chaos, Solitons and Fractals, Vol. 24, pp. 759-765.

[8] Gao, H., Zhang, Y., Liang, S. and Li, D., 2006. A New Chaotic Algorithm for Image

Encryption. Chaos, Solitons and Fractals, Vol. 29, pp. 393-399.

[9] Pareek, N.K., Patidar, V. and Sud, K.K., 2006. Image Encryption Using Chaotic

Logistic Map. Image and Vision Computing, Vol. 24, pp. 926-934.

[10] Wong, K.-W., Kwok, B.S.-H. and Law, W.-S., 2008. A Fast Image Encryption Scheme

Based on Chaotic Standard Map. Physics Letters A, Vol. 372, pp. 2645-2652.

[11] Amin, M., Faragallah, O.S. and Abd El-Latif, A.A., 2010. A Chaotic Block Cipher

Algorithm for Image Cryptosystems. Communications in Nonlinear Science and

Numerical Simulation, Vol. 15, pp. 3484-3497.

[12] Patidar, V., Pareek, N.K. and Sud, K.K., 2009. A New Substitution-Diffusion Based

Image Cipher Using Chaotic Standard and Logistic Maps. Communications in

Nonlinear Science and Numerical Simulation, Vol. 14, pp. 3056-3075.

[13] Rossler, O.E., 1976. An Equation for Continuous Chaos. Physics Letters A, Vol. 57,

No. 5, pp. 397-398.

[14] Kamat, V.G. and Sharma, M., 2014. Enhanced Chaotic Block Cipher Algorithm for

Image Cryptosystems. International Journal of Computer Science Engineering, Vol. 3,

No. 2, pp. 117-124.

This paper may be cited as:

Kamat V. G. and Sharma M., 2014. Symmetric Image Encryption

Algorithm Using 3D Rossler System. International Journal of Computer

Science and Business Informatics, Vol. 14, No. 1, pp. 1-13.](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-15-320.jpg)

![Node Monitoring with Fellowship Model against Black Hole Attacks in MANET

Rutuja Shah, M.Tech (I.T.-Networking)

School of Information Technology & Engineering, VIT University

Lakshmi Rani, M.Tech (I.T.-Networking)

School of Information Technology & Engineering, VIT University

S. Sumathy, AP [SG]

School of Information Technology & Engineering, VIT University

Abstract

Security issues in mobile ad-hoc networks have increased considerably. Because there is no centralized controller, detecting problems and recovering from them is difficult. Packet drop attacks are among the attacks that degrade network performance. In this paper, we propose an effective node monitoring mechanism with a fellowship model against packet drop attacks. The mechanism sets up an observance zone in which suspected nodes are observed for their performance and behavior. Threshold limits are set to monitor the equivalence ratio between the number of packets received at a node and the number transmitted by it inside the mobile ad hoc network. The fellowship model obliges nodes to deliver essential services in order to receive services from neighboring nodes, thus improving overall network performance.

Keywords: Black-hole attack, equivalence ratio, fair-chance scheme, observance zone, fellowship

model.

1. INTRODUCTION

Mobile ad-hoc networks are infrastructure-less, self-organized (self-configured) networks of mobile devices connected by radio signals. There is no centralized controller for networking activities such as monitoring, modifying and updating the nodes inside the network, as shown in figure 1. Each node is free to move in any direction and hence may change its links to other nodes frequently. MANETs have faced serious security threats in recent years. These usually lead to performance degradation, lower throughput, congestion, delayed response time, buffer overflow, etc. A well-known example is the black-hole attack on packets, which is a form of DoS (denial of service) attack. In this attack, a router relays packets to different nodes, but due to the presence of malicious nodes](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-16-320.jpg)

![these packets are susceptible to packet drop attacks. This hinders secure and reliable communication inside the network.

Figure 1. MANET Scenario

Section 2 addresses the seriousness of packet drop attacks and related work in this area. Section 3 describes our proposed scheme for defending against packet drop attacks. Section 4 provides concluding remarks.

2. LITERATURE SURVEY

Packet drop loss in ad-hoc networks gained importance because of self-serving nodes that fail to provide the basic service of forwarding packets to neighboring nodes, which impairs the functioning of the network. Generally, there are two types of misbehaving nodes: selfish and malicious. Selfish nodes act to enhance their own performance, while malicious nodes degrade the functions of the network through their continual activity. WATCHERS [1], from UC Davis, was presented to detect and remove routers that maliciously drop or misroute packets. WATCHERS was based on the “principle of packet flow conservation”, but it could not reliably differentiate between malicious and genuine nodes. Although it was robust against Byzantine faults, it was not very effective at reducing packet loss in today’s Internet.

The basic mechanism of packet drop loss is that nodes, selfishly or maliciously, do not forward packets to other nodes. Packet drop loss can occur due to a black hole attack. When routers behave maliciously only some of the time, dropping some packets while forwarding others, the attack is known as a “Grey Hole Attack”. In the case of routers, such attacks can be traced quickly, while in the case of nodes tracing them is a cumbersome task. Many researchers have worked in this field and have tried to find](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-17-320.jpg)

![solutions to this attack [2-6]. Energy level is one of the parameters on which researchers have based their results. This idea relies on the ratio of the energy committed by a node to the overall energy contributed towards the network. A node is retained in the network on the basis of its energy level, which is determined from the node's activeness in the network through mathematical computations. These computations [7] are complicated to grasp, and their results are sometimes catastrophic: they may be accurate, but they are very prone to ambiguity in ad-hoc networks. Some techniques use routing table information, which is modified after detecting the MAC address of a malicious node that uses a jamming-style DoS attack, in order to stop its activities [8]. Another approach to reducing attacks uses a historical-evidence-based trust management strategy [9]. In this approach, a direct trust value (DTV) is used among neighboring nodes to monitor their behavior against black hole attacks, based on their past activity. However, the trust values may well be compromised by malicious nodes, and the third party used for setting the trust values is itself vulnerable to attacks. A more recent method [10] introduced a new protocol called RAEED (Robust formally Analyzed protocol for wirEless sEnsor networks Deployment), which reduces this attack, but not by a considerable percentage. Given the issues faced in implementing these strategies, an effective mechanism is needed to curb these attacks and make networks more secure.

3. PROPOSED APPROACH

In this paper, we put forth a mechanism to reduce these packet drop attacks by implementing a “node monitoring with fellowship” technique. We introduce an obligation on the nodes inside a particular network to render services to the network. If a node does not render services, it is expelled from the network. However, we keep a “fair-chance” scheme for all nodes, which helps determine whether a node is genuine or malicious.

3.1 Fellowship of Network

The primary parameter we use to address packet drop attacks is the “equivalence ratio”. Under this rule, the count of incoming packets at a node, excluding those destined for that node, should equal the count of outgoing packets, excluding those originated at that node. If the counts are the same, packets are being distributed and forwarded uniformly among the nodes inside the network. If they differ, the node is kept in the “observance zone” so that its suspicious behavior can be monitored. We suggest that all nodes periodically report their equivalence ratio to neighboring nodes inside the network.
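The equivalence-ratio check can be sketched in Python as below. The counting rule follows the text. The following are hypothetical, since this section does not give the threshold limits: the threshold value of 0.9, the treatment of a node with no transit traffic, and the names `NodeReport` and `observance_zone`.

```python
from dataclasses import dataclass

# Hypothetical threshold; the threshold limits are not given in this section.
THRESHOLD = 0.9


@dataclass
class NodeReport:
    transit_in: int   # packets received, excluding those destined for this node
    transit_out: int  # packets sent, excluding those this node originated


def equivalence_ratio(report):
    """Forwarded / received transit packets; 1.0 means every packet was passed on."""
    if report.transit_in == 0:
        return 1.0  # assumption: a node with nothing to forward is not suspicious
    return report.transit_out / report.transit_in


def observance_zone(reports, threshold=THRESHOLD):
    """Names of nodes whose periodic report falls below the threshold."""
    return {name for name, r in reports.items() if equivalence_ratio(r) < threshold}


reports = {"A": NodeReport(120, 120), "B": NodeReport(80, 78), "C": NodeReport(100, 12)}
print(sorted(observance_zone(reports)))  # ['C']
```

A threshold rather than strict equality is one way to tolerate a few packets lost for ordinary reasons. Node B is not flagged, while C, which forwards only 12 of 100 transit packets, is.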

This reporting helps decide, possibly through polling among the nodes, whether to keep a particular node in the “observance zone”. Inside, observance](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-18-320.jpg)

![3.3 Advantages

1. A fair-chance scheme that lets innocent nodes establish that they are genuine.

2. No complex mathematical computation of energy levels at each node.

3. Periodic reporting that removes both underperforming and malicious nodes from the network.

4. Improved network performance in MANETs.

3.4 Disadvantages

However, there is the overhead of exchanging a larger number of messages among neighboring nodes. Reducing the number of messages exchanged during communication is left for future research.

4. CONCLUSION

In this paper, we have proposed a novel scheme to reduce packet drop attacks and enhance network performance. We anticipate that our “node-monitoring with fellowship” model may increase the number of messages exchanged among neighboring nodes during the agreement protocols inside the network. At the same time, it will be robust against attacks and thus increase the availability of nodes in mobile ad-hoc networks. Minimizing packet drop loss improves the utilization of the channel and resources and helps guarantee QoS. This enables productive priority management and considerably better-controlled traffic through periodic surveillance of nodes. Future research will aim to reduce the exchange of messages among nodes, minimize the overhead and achieve optimization inside mobile ad-hoc networks.

5. REFERENCES

[1] K. A. Bradley, S. Cheung, N. Puketza, B. Mukherjee and R. A. Olsson, Detecting Disruptive

Routers: A Distributed Network Monitoring Approach, in the 1998 IEEE Symposium on Security and

Privacy, May 1998.

[2] Y.C. Hu, A. Perrig and D. B. Johnson, Ariadne: A Secure On-demand Routing Protocol for Ad

Hoc Networks, presented at International Conference on Mobile Computing and Networking, Atlanta,

Georgia, USA, pp. 12 - 23, 2002.

[3] P. Papadimitratos and Z. J. Haas, Secure Routing for Mobile Ad hoc Networks, presented at SCS

Communication Networks and Distributed Systems Modeling and Simulation Conference, San

Antonio, TX, January 2002.

[4] K. Sanzgiri, B. Dahill, B. N. Levine, C. Shields and E. M. Belding-Royer, A Secure Routing

Protocol for Ad Hoc Networks, presented at 10th IEEE International Conference on Network

Protocols (ICNP'02), Paris, pp. 78 - 89, 2002.

[5] V. Balakrishnan and V. Varadharajan, Designing Secure Wireless Mobile Ad hoc Networks,

presented at Proceedings of the 19th IEEE International Conference on advanced information

Networking and Applications (AINA 2005). Taiwan, pp. 5-8, March 2005.](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-22-320.jpg)

![[6] V. Balakrishnan and V. Varadharajan, Packet Drop Attack: A Serious Threat to Operational

Mobile Ad hoc Networks, presented at Proceedings of the International Conference on Networks and

Communication Systems (NCS 2005), Krabi, pp. 89-95, April 2005.

[7] Venkatesan Balakrishnan and Vijay Varadharajan, Short Paper: Fellowship in Mobile Ad hoc

Networks presented at Proceedings of the First International Conference on Security and Privacy for

Emerging Areas in Communications Networks (SECURECOMM’05) IEEE.

[8] Raza, M., and Hyder, S.I. A forced routing information modification model for preventing black

hole attacks in wireless Ad Hoc network presented at Applied Sciences and Technology (IBCAST),

2012, 9th International Bhurban Conference, Islamabad, pp. 418-422, January 2012.

[9] Bo Yang, Yamamoto, R., Tanaka, Y. Historical evidence based trust management strategy

against black hole attacks in MANET, published in 14th International Conference on Advanced Communication

Technology (ICACT), 2012, pp. 394-399.

[10] Saghar, K., Kendall, D. and Bouridane, A. Application of formal modeling to detect black hole

attacks in wireless sensor network routing protocols. Applied Sciences and Technology (IBCAST),

2014, 11th International Bhurban Conference, Islamabad, pp. 191-194, January 2014.

This paper may be cited as:

Shah, R., Rani, L. and Sumathy, S. 2014. Node Monitoring with Fellowship Model

against Black Hole Attacks in MANET. International Journal of Computer Science

and Business Informatics, Vol. 14, No. 1, pp. 14-21.](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-23-320.jpg)

![Load Balancing using Peers in an E-Learning Environment

Maria Dominic

Department of Computer Science,

Sacred Heart College, India

Sagayaraj Francis

Department of Computer Science and Engineering,

Pondicherry Engineering College, India

ABSTRACT

When an e-Learning system is installed on a server, numerous learners use it and download various learning objects from the server. Most requests are for the same learning objects, so the server repeatedly performs the same task of locating a file and sending it to the requesting client. This wastes the server's CPU time on work it has already performed. This paper provides a novel structure and an algorithm that store the details of the clients that have already downloaded learning objects in a dynamic hash table. The algorithm looks up that table when a new request arrives and sends the learning object from one of those clients to the requestor. This saves the server's CPU time by harnessing the computing power of the clients.

Keywords

Learning Objects, e-Learning, Load Distribution, Load Balancing, Data Structure, Peer-to-Peer Distribution.

1. INTRODUCTION

1.1 e-Learning

Education is defined as the conscious attempt to promote learning in others so that they acquire knowledge, skills and character [1]. To achieve this mission, different pedagogies were used. Later, new information and communication technology tools and the popularity of the internet were used to enhance the teaching-learning process, giving birth to e-learning [2]. E-learning lets learners overcome time and geographical barriers and allows them to follow individualized learning paths [3]. e-Learning, or electronic learning, is perceived as a combination of the internet, electronic media and networks used to disseminate knowledge. The key factors of e-learning are reuse, resource sharing and interoperability [4]. At present there are various organizations](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-24-320.jpg)

![providing e-learning tools with multiple functionalities. One such tool is MOODLE (Modular Object Oriented Dynamic Learning Environment) [5], which is used on our campus. This variety of tools made it difficult to share learning objects between heterogeneous sites, so standards such as SCORM & SCORM LOM [6], IMS & IMS DRI [7] and AICC [8] were proposed by different organizations. In Berners-Lee’s famous architecture for the Semantic Web, ontologies are used for sharing and interoperability, and they can be used to build better e-learning systems [9]. To define components for e-learning systems, the principle of composability in Service Oriented Architecture [10] is used, since it enables us to define the inter-relations between the different e-learning components. The

most popular model used nowadays in the teaching-learning process is the Felder-Silverman learning style model [11]. The e-Learning components are based on key topics, topic types, associations and occurrences. A VLE (Virtual Learning Environment) is the software that handles all learning activities. Learning Objects are learning materials that promote visual, verbal, logical and musical intelligence [12] through presentations, tutorials, problem solving and projects. Multimedia, gaming and simulation promote kinesthetic intelligence. Interpersonal, intrapersonal and naturalistic intelligence are promoted by means of chat, SMS, e-mail, forums, video and audio conferences, surveys, voting and search. Finally, assessment is used to test the knowledge acquired by the learner, and the repository holds all the learning materials.

This algorithm is useful when learners access the learning objects stored in the repository. It reduces the number of requests the server must answer by directing a client that has already downloaded a file from the server to serve that file to the requester.
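The redirection idea can be sketched as follows. This is a minimal illustration under our own assumptions, not the authors' algorithm: the `PeerRedirector` class, the round-robin choice among peers and the `"server"` fallback are hypothetical, and the actual file transfer is not modelled.

```python
class PeerRedirector:
    """Hypothetical sketch: serve a repeated request from a peer that has
    already downloaded the file, falling back to the server otherwise."""

    SERVER = "server"

    def __init__(self):
        self.holders = {}   # file name -> clients known to hold a copy
        self.next_idx = {}  # file name -> round-robin counter (assumption)

    def request(self, client, filename):
        # Candidate sources: every holder of the file except the requester.
        peers = [p for p in self.holders.get(filename, []) if p != client]
        if peers:
            # Rotate among peers so no single peer becomes a bottleneck.
            i = self.next_idx.get(filename, 0) % len(peers)
            self.next_idx[filename] = i + 1
            source = peers[i]
        else:
            source = self.SERVER
        # After the download, the requester can serve this file to others.
        holders = self.holders.setdefault(filename, [])
        if client not in holders:
            holders.append(client)
        return source


r = PeerRedirector()
print([r.request(c, "unit1.ppt") for c in ["A", "B", "C", "D"]])
# ['server', 'A', 'B', 'C']
```

Only the first request reaches the server; rotating among holders is one simple way to keep a popular file from turning a single peer into a new bottleneck.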

1.2 Load Balancing

The emergence of large, fast networks with thousands of connected computers created the challenge of sharing resources effectively across the computers in the network. Load balancing is a critical issue in peer-to-peer (P2P) networks [14]. The existing load balancing algorithms for heterogeneous P2P networks are organized in a hierarchical fashion. As P2P networks have gained popularity, it has become necessary to manage huge volumes of data while keeping the response time acceptable to users. Simultaneous requests for the same data from multiple clients may cause some peers to become bottlenecks, creating severe load imbalance and increasing the response time for the user. To reduce these bottlenecks and the overhead on the server, the computing power of the peers needs to be harnessed [15]. Although much work has been done on harnessing the computing power of networked computers for high performance computing and scientific applications, faster access to data and reduced computing time are still to be explored. In a P2P network the data is de-clustered across the peers. When popular data is requested across the peers, a bottleneck occurs and the system response degrades. To handle this, a new data structure and an algorithm are proposed in this paper.

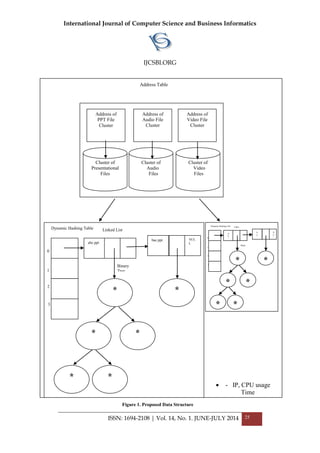

2. PROPOSED DATA STRUCTURE AND THE ALGORITHM

The objective of this architecture is to harness the computational power of the clients in the e-learning network. The network comprises Master of Computer Applications (MCA) students accessing learning materials for their course. Since the degree is a three-year programme, the clients are categorized into three clusters, namely I MCA, II MCA and III MCA; we call each of these a class cluster. Every class cluster contains several clusters, which we call file clusters, one for each type of file, since learning objects can be made up of presentations, video, audio, pictures, animations, etc. [13]. An address table, called the file address table, holds the address of each file cluster in the class cluster. When a request for a file is received, the corresponding file cluster is identified by reading its address from the file address table. The following algorithm represents the working logic of the concept, and the data structure is represented in Figure 1. Every file cluster holds a Dynamic Hash Table (DHT), a linked list and a binary tree. The DHT holds the address of the linked list, which holds the names of the files already downloaded from the server. The hashing function used to identify an index in the DHT is as follows:

1. Represent every character in the file name by its position in the alphabet followed by its position in the file name. For example, the file name abc.ppt is encoded as 112233: the value for a is 11, since its position in the alphabet is 1 and its position in the file name is 1.

2. Sum all the digits obtained in step 1. For example, for 112233 the sum is 1 + 1 + 2 + 2 + 3 + 3 = 12.

3. Divide the sum by the length of the file name (as the example shows, the extension is not counted), so 12/3 = 4, which becomes the index of the file in the DHT.

The above three steps are formulated mathematically in equation 1.
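The three hashing steps can be sketched in Python as below. This is an illustrative sketch, not the authors' code: the name `dht_index`, case-insensitive matching, skipping non-letter characters, dropping the extension and using integer (floor) division are all our assumptions, since the text only works through the `abc.ppt` example.

```python
def digit_sum(n: int) -> int:
    """Sum of the decimal digits of a non-negative integer."""
    return sum(int(d) for d in str(n))


def dht_index(filename: str) -> int:
    """Hypothetical sketch of the three-step DHT hash."""
    # Assumption: the extension is ignored ("abc.ppt" -> "abc"), as in the example.
    base = filename.rsplit(".", 1)[0].lower()
    if not base:
        raise ValueError("file name has no base part")
    total = 0
    for pos, ch in enumerate(base, start=1):
        if "a" <= ch <= "z":  # assumption: non-letters contribute no digits
            alpha = ord(ch) - ord("a") + 1  # position in the alphabet
            # Step 1 writes alpha followed by pos; step 2 sums those digits.
            total += digit_sum(alpha) + digit_sum(pos)
    # Step 3: divide by the length of the name (floor division assumed).
    return total // len(base)


print(dht_index("abc.ppt"))  # 4
```

Under these assumptions the position digits depend only on the length of the name, so anagrams such as `abc.ppt` and `cab.ppt` always land on the same index; such collisions would have to be resolved through the linked list that the DHT entry points to.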

REFERENCES

[1] Lavanya Rajendran, Ramachandran Veilumuthu, 2011. A Cost Effective Cloud Service for E-Learning Video on Demand, European Journal of Scientific Research, pp. 569-579.

[2] Maria Dominic, Sagayaraj Francis, Philomenraj, 2013. A Study on Users on Moodle Through Sarasin Model, International Journal of Computer Engineering and Technology, Volume 4, Issue 1, pp. 71-79.

[3] Maria Dominic, Sagayaraj Francis, 2013. Assessment of Popular E-Learning Systems via Felder-Silverman Model and a Comprehensive E-Learning System, International Journal of Modern Education and Computer Science, Hong Kong, Volume 5, Issue 11, pp. 1-10.

[4] Zhang Guoli, Liu Wanjun, 2010. The Applied Research of Cloud Computing Platform Architecture in the E-Learning Area, IEEE.

[5] www.moodle.org

[6] SCORM (Sharable Content Object Reference Model), http://www.adlnet.org

[7] IMS Global Learning Consortium, Inc., "Instructional Management System (IMS)", http://www.imsglobal.org.

[8] http://www.aicc.org

[9] Uschold, Gruninger, 1996. Ontologies: Principles, Methods and Applications, Knowledge Engineering Review, Volume 11, Issue 2.

[10] Papazoglou, Heuvel, 2007. Service Oriented Architectures: Approaches, Technologies and Research Issues, The VLDB Journal, Volume 16, Issue 3, pp. 389-415.

[11] Graf, Viola, Kinshuk, 2006. Representative Characteristics of Felder-Silverman Learning Styles: an Empirical Model, IADIS, pp. 235-242.

[12] Lorna Uden, Ernesto Damiani, 2007. The Future of E-Learning: E-Learning Ecosystem, Proceedings of the IEEE Conference on Digital Ecosystems and Technologies, Australia, pp. 113-117.

[13] Maria Dominic, Sagayaraj Francis, 2012. Mapping E-Learning System to Cloud Computing, International Journal of Engineering Research and Technology, India, Volume 1, Issue 6.

[14] Chyouhwa Chen, Kun-Cheng Tsai, 2008. The Server Reassignment Problem for Load Balancing in Structured P2P Systems, IEEE Transactions on Parallel and Distributed Systems, Volume 19, Issue 2.

[15] A. Rao, K. Lakshminarayanan, S. Surana, R. Karp, I. Stoica, 2003. Load Balancing in Structured P2P Systems, Proc. Second Int'l Workshop on Peer-to-Peer Systems (IPTPS '03).

This paper may be cited as:

Dominic, M. and Francis, S. 2014. Load Balancing using Peers in an E-

Learning Environment. International Journal of Computer Science and

Business Informatics, Vol. 14, No. 1, pp. 22-29.


This paper may be cited as:

Lubua, E. 2014. E-Transparency and Information Sharing in the Public Sector.

International Journal of Computer Science and Business Informatics, Vol. 14,

No. 1, pp. 30-38.

A Survey of Frequent Subgraphs and Subtree Mining Methods

Hamed Dinari and Hassan Naderi

Department of Computer Engineering

Iran University of Science and Technology

Tehran, Iran

ABSTRACT

A graph is a basic data structure that can be used to model complex structures and the relationships between them, such as XML documents, social networks, communication networks, chemical informatics, biological networks, and the structure of web pages. Frequent subgraph pattern mining is one of the most important fields in graph mining. Given its many applications, there is extensive research in this area, including the analysis and processing of XML documents, document clustering and classification, image and video indexing, graph indexing for graph querying, routing in computer networks, web link analysis, drug design, and carcinogenesis. Several frequent pattern mining algorithms have been proposed in recent years, and new ones are introduced continually. Because these algorithms use various methods on different datasets, pattern mining types, and graph and tree representations, it is not easy to compare them in terms of features and performance. This paper presents a brief report of an extensive investigation of current frequent subgraph and subtree mining algorithms. The algorithms are also categorized based on different features.

Keywords

Graph Mining, Subgraph, Frequent Pattern, Graph indexing.

1. INTRODUCTION

Today we are faced with ever-increasing volumes of data, much of which naturally has a graph or tree structure. The process of extracting new and useful knowledge from graph data is known as graph mining [1] [2]. Frequent subgraph pattern mining [3] is an important part of graph mining. It is defined as the process of extracting, from a database, the patterns whose frequency is greater than or equal to a user-defined threshold. Because of its wide use in various fields, including social network analysis [4] [5] [6], XML document clustering and classification [7] [8], network intrusion detection [9] [10], VLSI reverse engineering [11], behavioral modeling [12], the semantic web [13], graph indexing [14] [15] [16] [17] [18], web log analysis [19], link analysis [20], drug design [21] [22] [23], and classification of chemical compounds [24] [25] [26], this field has been the subject of many studies.

The present paper surveys subtree and subgraph mining algorithms and compares and classifies them according to their features. The next section reviews the related literature, and section three presents the basic concepts of graphs and trees. Frequent subgraph mining algorithms are then discussed from different viewpoints, such as graph representation (adjacency matrix and adjacency list), subgraph generation, frequency counting, pattern growth-based and apriori-based classification, classification by search method, classification by input (transactional or single graph), classification by type of output, and logic-based mining. Section six examines frequent subtree mining algorithms from different angles, such as tree representation method, type of algorithm input, tree-based mining, and mining based on output constraints.

2. RELATED WORKS

H. J. Patel, R. Prajapati, et al. [27] classified graph mining algorithms into two types: apriori-based and pattern growth-based. K. Lakshmi and T. Meyyappan [28] studied apriori-based and pattern growth-based algorithms, taking into account aspects such as input/output type, graph representation, candidate generation, and frequency counting (how many times a candidate occurs in the graph dataset). In [29], D. Kavitha, B. V. Manikyala Rao, et al. suggested a third type of graph mining algorithm, based on inductive logic programming. The following sections present a survey of graph mining concepts, together with examples to ease their understanding.

3. BASIC CONCEPTS

3.1 Graph

A graph G(V, E) is composed of a set of vertices V connected to each other by a set of edges E.

3.2 Tree

A tree T is a connected graph that has no cycle. In other words, there is exactly one path between any two vertices.
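The definition can be checked mechanically. A minimal sketch, assuming an undirected graph given as a list of vertices and a list of edges; the union-find method is our choice of technique, not one prescribed by the surveyed algorithms:

```python
def is_tree(vertices, edges):
    """Return True if the undirected graph is a tree: connected and acyclic,
    so that exactly one path joins any two vertices."""
    parent = {v: v for v in vertices}

    def find(v):
        # Union-find root lookup with path halving.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for u, v in edges:
        ru, rv = find(u), find(v)
        if ru == rv:  # u and v are already connected: this edge closes a cycle
            return False
        parent[ru] = rv
    # An acyclic graph on n vertices is connected iff it has n - 1 edges.
    return len(edges) == len(vertices) - 1


print(is_tree(["a", "b", "c", "d"], [("a", "b"), ("b", "c"), ("b", "d")]))  # True
```

Adding the edge `("c", "d")` to the example creates a cycle, and dropping `("b", "c")` disconnects the graph; both make the function return False.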

3.3 Subgraph

A graph G'(V', E') is a subgraph of G(V, E) if its vertices and edges are subsets of V and E, respectively:

$$V' \subseteq V, \qquad E' \subseteq E$$

length of k. However, in this method every candidate subgraph that is generated must be considered. Maintaining and processing these candidates requires a great deal of time and memory, which degrades performance [30] [2].

5.1.2 Pattern Growth-Based

In pattern growth-based (FP-growth-style) methods, a candidate subgraph of length k+1 is obtained by extending a frequent pattern of length k. Since extending a frequent subgraph of length k may generate several candidates of length k+1, the way a frequent subgraph is extended is critical to reducing the generation of duplicate subgraphs. Table 1 lists apriori-based and pattern growth-based algorithms [2].

Table 1. Frequent Subgraph Mining Algorithms

Apriori | Pattern Growth

FARMER [31]

FSG [3]

HSIGRAM

GREW [32]

FFSM [4]

ISG

SPIN [33]

Dynamic GREW [34]

AGM [35]

MUSE [36]

SUBDUE [37]

AcGM [38]

DPMine

gFSG [39]

MARGIN [40]

gSpan [41]

CloseGraph [42]

Gaston [43]

TSP [44]

MoFa [45]

RP-FP [46]

RP-GD [46]

JPMiner [47]

MSPAN

VSIGRAM [48]

FPF [49]

Gapprox [50]

HybridGMiner

FCPMiner [51]

RING [52]

SCMiner [53]

GraphSig [54]

FP-GraphMiner [55]

gPrune [56]

CLOSECUT [57]

FSMA [58]

5.2 Classification Based on Search Strategy

There are two search strategies for finding frequent subgraphs: breadth-first search (BFS) and depth-first search (DFS).

5.3 Classification Based on Nature of the Input

Depending on their input type, the algorithms can be divided into two categories:

5.3.1 Single Graph Database

The database consists of a single large graph.

5.3.2 Transactional Graph Database

The database consists of a large number of small graphs. Figure 3 shows a transactional graph database of three graphs, g1, g2 and g3 (left side), and the frequencies of two frequent subgraphs (right side).

Figure 3. A database consisting of three graphs g1, g2, g3, and two subgraphs with the frequency of each
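Support counting over a transactional database can be sketched as below: the support of a pattern is the number of database graphs that contain it. This is a brute-force illustration only. The graphs are hypothetical (Figure 3's graphs are not reproduced here), containment is tested by an exhaustive search for a label-preserving mapping, and practical miners replace that search with far cheaper isomorphism tests and canonical codes.

```python
from itertools import permutations


def make_graph(labels, edges):
    """A graph as (vertex -> label dict, set of undirected edges)."""
    return labels, {frozenset(e) for e in edges}


def contains(graph, pattern):
    """Brute force: is there an injective, label-preserving map from the
    pattern's vertices into the graph under which every pattern edge is a
    graph edge? (Non-induced subgraph isomorphism; tiny inputs only.)"""
    g_labels, g_edges = graph
    p_labels, p_edges = pattern
    p_vertices = list(p_labels)
    for image in permutations(g_labels, len(p_vertices)):
        m = dict(zip(p_vertices, image))
        if all(p_labels[v] == g_labels[m[v]] for v in p_vertices) and all(
            frozenset(m[x] for x in e) in g_edges for e in p_edges
        ):
            return True
    return False


def support(database, pattern):
    """Number of graphs in a transactional database that contain the pattern."""
    return sum(contains(g, pattern) for g in database)


# Hypothetical three-graph database with carbon (C) and oxygen (O) vertices.
db = [
    make_graph({1: "C", 2: "C", 3: "O"}, [(1, 2), (2, 3)]),
    make_graph({1: "C", 2: "O"}, [(1, 2)]),
    make_graph({1: "C", 2: "C"}, [(1, 2)]),
]
c_o = make_graph({1: "C", 2: "O"}, [(1, 2)])
c_c_o = make_graph({1: "C", 2: "C", 3: "O"}, [(1, 2), (2, 3)])
print(support(db, c_o), support(db, c_c_o))  # 2 1
```

With a user-defined threshold of 2, the C-O pattern would be frequent and the C-C-O pattern would not.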

5.4 Classification Based on Nature of the Output

5.4.1 Completeness of the Output

While some algorithms find all frequent patterns, others mine only part of them. The completeness of the output is closely tied to performance: when the dataset is very large, it may be better to use faster algorithms to avoid performance degradation, even though not all frequent patterns are mined. Table 2 lists algorithms by the completeness of their output [29].

Table 2. Completeness of Output

Complete Output | Incomplete Output

FARMER

gSpan

FFSM

Gaston

FSG

HSIGRAM

SUBDUE

GREW

CloseGraph

ISG

5.4.2 Constraint-Based

As the database grows, the number of frequent patterns increases. This makes maintaining and analyzing them more difficult, since more memory space is needed. The number of frequent patterns can be reduced without losing data by mining and keeping only the more comprehensive patterns. Since every subpattern of a frequent pattern is also frequent, the following types of pattern can be used to obtain more comprehensive results:

5.4.2.1 Maximal Pattern

A subgraph g1 is a maximal pattern if it is frequent and has no frequent super-pattern g2 with g2 ⊃ g1.

5.4.2.2 Closed Pattern

A subgraph g1 is closed if it is frequent and has no super-pattern g2 ⊃ g1 with the same support. Table 3 lists maximal and closed subgraph mining algorithms.

Table 3. Frequent Subgraph Mining (Constrained)

Maximal | Closed

SPIN

MARGIN

ISG

GREW

CloseGraph

CLOSECUT

TSP

RP-FP

RP-GD
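Both definitions reduce to simple filters once the frequent patterns and their supports are known. In the sketch below, patterns are modelled as sets of items, with set inclusion standing in for the sub-pattern relation; for graphs that relation would be a subgraph isomorphism test instead. The supports are made-up values.

```python
def maximal_and_closed(frequent):
    """Split frequent patterns into maximal and closed ones.

    `frequent` maps each frequent pattern (a frozenset; set inclusion stands
    in for the sub-pattern relation) to its support."""
    maximal, closed = set(), set()
    for p, sup in frequent.items():
        supers = [q for q in frequent if p < q]  # frequent proper super-patterns
        if not supers:
            maximal.add(p)  # no frequent super-pattern at all
        # Infrequent super-patterns have lower support than any frequent
        # pattern, so only frequent ones can share p's support.
        if all(frequent[q] != sup for q in supers):
            closed.add(p)
    return maximal, closed


fs = frozenset
frequent = {fs("x"): 4, fs("y"): 3, fs("xy"): 3, fs("z"): 2, fs("xz"): 2}
maximal, closed = maximal_and_closed(frequent)
print(sorted("".join(sorted(p)) for p in maximal))  # ['xy', 'xz']
print(sorted("".join(sorted(p)) for p in closed))   # ['x', 'xy', 'xz']
```

Every maximal pattern is also closed, but not vice versa: x is closed because no super-pattern shares its support of 4, yet it is not maximal because xy and xz are frequent.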

5.5 Logic-Based Mining

Logic-based mining, also known as inductive logic programming (ILP), is an area of machine learning applied mainly in biology. This method uses inductive logic to represent structured data. At its core, ILP uses logic to represent both the search and the basic assumptions about the structure (e.g. WARMR, FOIL, and C-PROGOL), which are derived from background knowledge [29]. Table 4 lists the pattern growth-based algorithms and Table 5 the apriori-based algorithms, categorized from different aspects [59] [27] [60] [61] [62] [28] [30] [63].

Table 4. Frequent Subgraph Mining Algorithms (Pattern Growth-based)

Frequency Counting | Subgraph Generation | Graph Representation | Input Type | Algorithms

2. Using a new tree representation method and a lookup table that allow quick access to node information in the candidate generation phase without having to re-read the trees of the database.

3. Using rightmost expansion for candidate generation, which guarantees that no duplicate candidates are generated.

This algorithm uses a lookup table, implemented as a hash table, to store information about the input trees. Its key is the pair (T, pos), where T identifies the input tree and pos is the node's number in preorder traversal; its value is the pair (l, s), where l is the node's label and s is its scope. A new candidate is generated using the scope of each node: a node added to an existing pattern must be added along the rightmost path and must lie within the scope of the node it extends. Repeating this process finds further frequent patterns [64].
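The lookup table can be sketched as follows. The text does not define "scope" precisely; we assume, following a common convention, that the scope of a node is the preorder number of its last descendant, so a node lies inside another node's subtree exactly when its preorder number falls in that range. The function names and the `(label, children)` tree layout are hypothetical.

```python
def build_lookup(trees):
    """Map (T, pos) -> (label, scope) for every node of every input tree.

    pos is the node's preorder number; scope is taken here to be the preorder
    number of the node's last descendant (an assumption about 'scope')."""
    table = {}

    def visit(tid, node, pos):
        label, children = node
        last = pos  # last preorder number used inside this subtree
        for child in children:
            last = visit(tid, child, last + 1)
        table[(tid, pos)] = (label, last)
        return last

    for tid, tree in enumerate(trees):
        visit(tid, tree, 0)
    return table


def in_scope(table, tid, anchor, pos):
    """True if node `pos` lies inside the subtree rooted at node `anchor`."""
    _, scope = table[(tid, anchor)]
    return anchor < pos <= scope


# Tree 0: a(b(c), d) in (label, children) form; preorder a=0, b=1, c=2, d=3.
trees = [("a", [("b", [("c", [])]), ("d", [])])]
lookup = build_lookup(trees)
print(lookup[(0, 1)])             # ('b', 2)
print(in_scope(lookup, 0, 1, 3))  # False: d is not below b
```

Because each entry is found by a dictionary lookup on (T, pos), candidate generation can check scopes without re-reading the input trees.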

FP-GraphMiner Algorithm

This algorithm uses the FP-growth method to find frequent subgraphs. Its input is a set of graphs (a transactional database). First, a BitCode is defined for each edge: the bit for a graph is '1' if the edge occurs in that graph and '0' otherwise. Then a frequency table is sorted in ascending order of the decimal equivalent of each edge's BitCode; afterwards, an FP-tree is constructed and frequent subgraphs are obtained through depth-first traversal [55].
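The BitCode and frequency-table steps can be sketched as below. This is a hypothetical illustration: identifying an edge only by its pair of endpoint labels, ordering the bits by database position and the minimum-support filter are our assumptions, and the FP-tree construction and traversal are not shown.

```python
def edge_bitcodes(database):
    """BitCode per distinct labeled edge: bit i is '1' if the edge occurs in
    graph i of the transactional database, '0' otherwise."""
    edges = sorted(set().union(*database))
    return {e: "".join("1" if e in g else "0" for g in database) for e in edges}


def frequency_table(database, min_support):
    """Frequent edges with their BitCode and support, in ascending order of
    the BitCode's decimal value."""
    rows = []
    for edge, code in edge_bitcodes(database).items():
        count = code.count("1")  # support = number of graphs containing the edge
        if count >= min_support:
            rows.append((edge, code, count))
    return sorted(rows, key=lambda row: int(row[1], 2))


# Hypothetical database: each graph is a set of (label, label) edges.
db = [{("C", "C"), ("C", "O")}, {("C", "O")}, {("C", "C"), ("C", "N")}]
for row in frequency_table(db, min_support=2):
    print(row)
# (('C', 'C'), '101', 2)
# (('C', 'O'), '110', 2)
```

The support of an edge is simply the number of 1-bits in its BitCode, so the table can be filtered and sorted without rescanning the graphs.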

6. FREQUENT SUBTREES MINING ALGORITHMS CLASSIFICATION

6.1 Trees Representation

A tree can be encoded as a sequence of nodes and edges. Some of the most important tree encodings are introduced below:

6.1.1 DLS (Depth Label Sequence)

Let T be a labeled ordered tree. For each node $v_i \in V$, the depth-label pair $(d(v_i), l(v_i))$ consists of its depth and its label; these pairs are appended to a string s during a DFS (preorder) traversal of T. The depth-label sequence of T is thus $\{(d(v_1), l(v_1)), \ldots, (d(v_k), l(v_k))\}$. For instance, the DLS for the tree in Figure 4 is:

{(0,a),(1,b),(2,e),(3,a),(1,c),(2,f),(3,b),(3,d),(2,a),(1,d),(2,f),(3,c)}
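The DLS can be reproduced mechanically. The sketch below rebuilds the tree of Figure 4 from the DLS given above (the `(label, children)` tuple layout is our own choice) and recomputes the sequence by a preorder traversal; it prints the same twelve pairs.

```python
# Tree of Figure 4 as (label, children), rebuilt from the DLS in the text.
FIG4 = ("a", [
    ("b", [("e", [("a", [])])]),
    ("c", [("f", [("b", []), ("d", [])]), ("a", [])]),
    ("d", [("f", [("c", [])])]),
])


def dls(node, depth=0):
    """Depth-label sequence: (depth, label) pairs in DFS preorder."""
    label, children = node
    seq = [(depth, label)]
    for child in children:
        seq.extend(dls(child, depth + 1))
    return seq


print(dls(FIG4))
```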

6.1.2 DFS – LS (Depth First Sequence)-(Label Sequence)

Given a labeled ordered tree T, node labels are added to a string s during the DFS traversal of T, and on each backtrack a special symbol ('-1', '$' or '/') is added to the string. The DFS-LS code for the tree in Figure 4 is:

{abea$$$cfb$d$$a$$dfc$$$}
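Likewise, the DFS-LS string follows from one recursive traversal. A minimal sketch using '$' as the backtrack symbol and the same hypothetical `(label, children)` layout for the tree of Figure 4:

```python
# Tree of Figure 4 as (label, children).
FIG4 = ("a", [
    ("b", [("e", [("a", [])])]),
    ("c", [("f", [("b", []), ("d", [])]), ("a", [])]),
    ("d", [("f", [("c", [])])]),
])


def dfs_ls(node, backtrack="$"):
    """Labels in DFS order, with `backtrack` emitted each time the traversal
    returns from a child to its parent."""
    label, children = node
    out = label
    for child in children:
        out += dfs_ls(child, backtrack) + backtrack
    return out


print(dfs_ls(FIG4))  # abea$$$cfb$d$$a$$dfc$$$
```

The root emits no trailing symbol because the traversal never backtracks out of it, which matches the string given above.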

6.1.3 BFCS (Breadth First Canonical String)

Let T be an unordered tree. By changing the order of the children of a node, several different encoded strings can be generated with BFS. The BFCS of T is the smallest of these encoded strings in lexicographic order. The breadth-first encoding of the tree in Figure 4 is:

{a$bcd$e$fa$f$a$bd$$c#}

6.1.4 CPS (Consolidate Prufer Sequence)

Let T be a labeled tree. The CPS encoding consists of two parts: NPS, an extended Prüfer sequence obtained by using the vertex numbers of a traversal as a set of unique labels, and LS (Label Sequence), the sequence of node labels in postfix (postorder) traversal. Together, NPS and LS give a unique encoding of a labeled tree. For the tree in Figure 4, NPS and LS are {ebaffccafda-} and {aebbdfaccfda}, respectively. To obtain NPS, at each step the leaf with the smallest number is removed from the tree and its parent is output; this is repeated until only the root remains, and '-' is appended to mark the end of the string. LS is obtained from the same postfix traversal of the tree. Table 6 lists the tree representation used by each algorithm [65].

Figure 4. A Tree Example
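Both CPS parts of the example can be computed with a single postorder traversal. With vertices numbered in postorder, the smallest-numbered remaining vertex is always a leaf, so repeatedly removing it simply emits the parents in postorder. The sketch below uses the same hypothetical `(label, children)` layout for the tree of Figure 4 and outputs parent labels, as the example does, rather than parent numbers.

```python
# Tree of Figure 4 as (label, children).
FIG4 = ("a", [
    ("b", [("e", [("a", [])])]),
    ("c", [("f", [("b", []), ("d", [])]), ("a", [])]),
    ("d", [("f", [("c", [])])]),
])


def cps(tree):
    """Return (NPS, LS) for a rooted labeled tree given as (label, children).

    NPS lists parent labels here and ends with '-'."""
    post = []  # [label, parent index] per node, in postorder

    def visit(node):
        label, children = node
        child_ids = [visit(c) for c in children]
        post.append([label, None])
        me = len(post) - 1  # this node's postorder number (0-based)
        for cid in child_ids:
            post[cid][1] = me
        return me

    visit(tree)
    ls = "".join(label for label, _ in post)
    # Removing the smallest-numbered leaf each time deletes vertices in
    # postorder, so NPS is every non-root vertex's parent, in postorder.
    nps = "".join(post[parent][0] for _, parent in post[:-1]) + "-"
    return nps, ls


print(cps(FIG4))  # ('ebaffccafda-', 'aebbdfaccfda')
```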

Table 6. Frequent subtree Mining Algorithms (Tree Representation)

| Tree Representation | Algorithm |
|---|---|
| DLS | uFreqt |
| DFS-LS | SLEUTH |
| DLS | Unot |
| FST-Forest | Path Join |
| BFCS | RootedTreeMiner [66] |
| DLS | FREQT |
| DFS-LS | TreeMiner |
| DLS | Chopper |
| DLS | XSPanner |
| DFS string | AMIOT |
| DFS-LS | IMB3Miner |
| CPS | TRIPS |
| BFCS | FreeTreeMiner |
| DFS-LS | CMTreeMiner |
| BFCS | HybridTreeMiner [67] |
| DFS-LS | GP-Growth |

6.2 Input Types

6.2.1 Rooted Ordered Trees

A rooted ordered subtree is a tree in which a single node is designated as the root and the children of each node are ordered: each child is greater than or equal to its siblings on its left and less than or equal to its siblings on its right. If we relax this definition so that the order among siblings need not be considered, we obtain a rooted unordered subtree. Table 7 lists rooted ordered tree mining algorithms.

Table 7. Rooted Ordered Tree mining Algorithms

| Induced | Embedded |
|---|---|
| FREQT [68] | TreeMiner [71] |
| AMIOT [69] | Chopper [72] |
| IMB3Miner [70] | XSPanner [72] |
| TRIPS [65] | IMB3-Miner |
| TIDES [65] | |

6.2.2 Rooted Unordered Trees

In this type of tree, a node is designated as the root; however, there is no particular order among the children of each node. Table 8 lists rooted unordered tree mining algorithms.

Table 8. Rooted unordered Tree mining Algorithms

| Induced | Embedded |
|---|---|
| uFreqT [73] | TreeFinder |
| Unot [74] | Cousin Pair [77] |
| PathJoin [65] | SLEUTH [78] |
| Rooted TreeMiner [75] | |
| TreeFinder [76] | |

6.3 Tree-Based Data Mining

Frequent subtree mining algorithms can be divided into two major categories: apriori-based and pattern growth-based. Table 9 lists the apriori-based and pattern growth-based tree mining algorithms [79] [76] [80].

Table 9. Frequent Subtree Mining Algorithms

Apriori | Pattern Growth

TreeFinder

AMIOT

FreeTreeMiner

TreeMine [81]

SLEUTH

CMTreeMiner [82]

Pattern Matcher [71]

W3Miner [83]

FTMiner [84]

CFFTree [85]

IMB3-Miner

uFreqt

Unot

FREQT

TRIPS

TIDES

Path Join

XSPanner

Chopper

PrefixTreeISpan [86]

PCITMiner [87]

F3TM [88]

GP-Growth [64]

7. CONCLUSIONS AND FUTURE WORKS

Frequent subgraph mining algorithms were first examined from different viewpoints, such as ways of representing a graph (e.g. adjacency matrix and adjacency list), subgraph generation, frequency counting, pattern growth-based and apriori-based classification, search-based classification, input-based classification (single graph or transactional), and output-based classification. Logic-based mining was also discussed. Afterwards, frequent subtree mining algorithms were examined from different viewpoints, such as tree representation methods, input types, tree-based mining, and mining based on output constraints. Based on these results, we conclude that pattern growth-based algorithms, because they do not generate candidate patterns, require less computation and less memory. Moreover, these algorithms are specifically designed for trees and graphs and cannot be used for other purposes. On the other hand, since they are evaluated on a variety of datasets, it is not easy to establish the tradeoffs between them. In future studies, the frequent patterns can be used for similarity search, indexing, and classification of graphs and documents. Parallel methods and technologies such as Hadoop may also be needed when working with very large data volumes.

8. ACKNOWLEDGMENTS

The authors are thankful to Mohammad Reza Abbasifard for supporting the investigations.

REFERENCES

[1] A. Rajaraman, J. D. Ullman, 2012. Mining of Massive Datasets, 2nd ed.

[2] J. Han, M. Kamber, 2006. Data Mining: Concepts and Techniques. USA: Diane Cerra.

[3] M. Kuramochi, G. Karypis, 2004. An efficient algorithm for discovering frequent subgraphs, IEEE Transactions on Knowledge and Data Engineering, pp. 1038-1051.

[4] J. Huan, W. Wang, J. Prins, 2003. Efficient mining of frequent subgraphs in the presence of isomorphism, in Third IEEE International Conference on Data Mining (ICDM).

[5] Trust Network Datasets - TrustLet. [Online]. http://www.trustlet.org (accessed Dec. 2013).

[6] L. Yan, J. Wang, 2011. Extracting regular behaviors from social media networks, in Third International Conference on Multimedia Information Networking and Security.

[7] R. Ivancsy, I. Vajk, 2009. Clustering XML documents using frequent subtrees, Advances in Focused Retrieval, Vol. 3, pp. 436-445.

[8] J. Yuan, X. Li, L. Ma, 2008. An improved XML document clustering using path features, in Fifth International Conference on Fuzzy Systems and Knowledge Discovery, Vol. 2.

[9] W. Lee, S. J. Stolfo, 2000. A framework for constructing features and models for intrusion detection systems, ACM Transactions on Information and System Security (TISSEC), pp. 227-261.

[10] C. Ko, 2000. Logic induction of valid behavior specifications for intrusion detection, in IEEE Symposium on Security and Privacy (S&P), pp. 142-155.

[11] K. Yoshida, H. Motoda, 1995. CLIP: Concept learning from inference patterns, Artificial Intelligence, pp. 63-92.

[12] S. Wasserman, K. Faust, D. Iacobucci, 1994. Social Network Analysis: Methods and Applications. Cambridge University Press.

[13] B. Berendt, A. Hotho, G. Stumme, 2002. Semantic web mining, in International Semantic Web Conference (ISWC), pp. 264-278.

[14] S. C. Manekar, M. Narnaware, May 2013. Indexing frequent subgraphs in large graph database using parallelization, International Journal of Science and Research (IJSR), Vol. 2, No. 5.

[15] T. Peng et al., 2010. A graph indexing approach for content-based recommendation system, in IEEE Second International Conference on Multimedia and Information Technology (MMIT), pp. 93-97.

[16] S. Sakr, E. Pardede, 2011. Graph Data Management: Techniques and Applications. Information Science Reference, USA.

[17] Y. Xiaogang, T. Ye, P. Tao, C. Canfeng, M. Jian, 2010. Semantic-based graph index for mobile photo search, in Second International Workshop on Education Technology and Computer Science, pp. 193-197.

[18] H. Yildirim, M. J. Zaki, 2010. Graph indexing for reachability queries, in 26th International Conference on Data Engineering Workshops (ICDEW), IEEE, pp. 321-324.

[19] R. Ivancsy, I. Vajk, 2006. Frequent pattern mining in web log data, Acta Polytechnica Hungarica, pp. 77-90.

[20] G. Xu, Y. Zhang, L. Li, 2010. Web Mining and Social Networking. Melbourne: Springer.

[21] S. Ranu, A. K. Singh, 2010. Indexing and mining topological patterns for drug, in ACM, Data Mining and Knowledge Discovery, Berlin, Germany.

[22] Drug Information Portal. [Online]. http://druginfo.nlm.nih.gov (accessed Dec. 2013).

[23] DrugBank. [Online]. http://www.drugbank.ca (accessed Dec. 2013).

[24] L. Dehaspe, H. Toivonen, R. D. King, 1998. Finding frequent substructures in chemical compounds, in Proc. of the 4th ACM International Conference on Knowledge Discovery and Data Mining, pp. 30-36.

[25] S. Kramer, L. De Raedt, C. Helma, 2001. Molecular feature mining in HIV data, in Proc. of the 7th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD-01), pp. 136-143.

[26] J. Gonzalez, L. B. Holder, D. J. Cook, 2001. Application of graph-based concept learning to the predictive toxicology domain, in Proc. of the Predictive Toxicology Challenge Workshop.

[27] H.J. Patel, R. Prajapati, M. Panchal, M. Patel, Jan. 2013. A Survey of Graph Pattern Mining Algorithm and Techniques, International Journal of Application or Innovation in Engineering & Management (IJAIEM), Vol. 2, No. 1.

[28] K. Lakshmi, T. Meyyappan, 2012. Frequent Subgraph Mining Algorithms - A Survey and Framework for Classification, Computer Science and Information Technology, pp. 189-202.

[29] D. Kavitha, B.V. Manikyala Rao and V. Kishore Babu, 2011. A Survey on Assorted Approaches to Graph Data Mining, in International Journal of Computer Applications, pp. 43-46.

[30] C.C. Aggarwal, H. Wang, 2010. Managing and Mining Graph Data. Springer.

[31] Wackersreuther, Bianca, et al., 2010. Frequent subgraph discovery in dynamic networks, in Proceedings of the Eighth Workshop on Mining and Learning with Graphs, ACM, Washington DC, USA, pp. 155-162.

[32] Kuramochi, Michihiro, and G. Karypis, 2004. GREW: a scalable frequent subgraph discovery algorithm, in Fourth IEEE International Conference on Data Mining (ICDM), pp. 439-442.

[33] Huan, Jun, 2004. SPIN: mining maximal frequent subgraphs from graph databases, in Proceedings of the Tenth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining.

[34] Borgwardt, Karsten M., H.-P. Kriegel, and P. Wackersreuther, 2006. Pattern mining in frequent dynamic subgraphs, in Sixth International Conference on Data Mining (ICDM), pp. 818-822.

[35] Inokuchi, Akihiro, T. Washio, and H. Motoda, 2000. An apriori-based algorithm for mining frequent substructures from graph data, in Principles of Data Mining and Knowledge Discovery, pp. 13-23, Springer Berlin Heidelberg.

[36] Zou, Zhaonian, et al., 2009. Frequent subgraph pattern mining on uncertain graph data, in Proceedings of the 18th ACM Conference on Information and Knowledge Management, pp. 583-592.

[37] Ketkar, N.S., Lawrence B. Holder, and D.J. Cook, 2005. Subdue: compression-based frequent pattern discovery in graph data, in Proceedings of the 1st International Workshop on Open Source Data Mining: Frequent Pattern Mining Implementations, ACM, pp. 71-76.

[38] A. Inokuchi, T. Washio, and H. Motoda, 2003. Complete mining of frequent patterns from graphs: Mining graph data, in Machine Learning, pp. 321-354.](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-55-320.jpg)

![

[39] Kuramochi, Michihiro, and G. Karypis, 2007. Discovering frequent geometric subgraphs, in Information Systems, pp. 1101-1120.

[40] Thomas, Lini T., Satyanarayana R. Valluri, and K. Karlapalem, 2006. MARGIN: Maximal frequent subgraph mining, in IEEE Sixth International Conference on Data Mining (ICDM), pp. 1097-1101.

[41] Yan, Xifeng, and J. Han, 2002. gSpan: Graph-based substructure pattern mining, in Proceedings of the IEEE International Conference on Data Mining, pp. 721-724.

[42] Yan, Xifeng, and Jiawei Han, 2003. CloseGraph: mining closed frequent graph patterns, in Proceedings of the Ninth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 286-295.

[43] Nijssen, Siegfried, and J.N. Kok, 2005. The Gaston tool for frequent subgraph mining, in Electronic Notes in Theoretical Computer Science, pp. 77-87.

[44] Hsieh, Hsun-Ping, and Cheng-Te Li, 2010. Mining temporal subgraph patterns in heterogeneous information networks, in IEEE Second International Conference on Social Computing (SocialCom), pp. 282-287.

[45] Wörlein, Marc, et al., 2005. A quantitative comparison of the subgraph miners MoFa, gSpan, FFSM, and Gaston, in Knowledge Discovery in Databases: PKDD, Springer Berlin Heidelberg, pp. 392-403.

[46] S.J. Suryawanshi, S.M. Kamalapur, Mar. 2013. Algorithms for Frequent Subgraph Mining, International Journal of Advanced Research in Computer and Communication Engineering, Vol. 2, No. 3.

[47] Liu, Yong, Jianzhong Li, and Hong Gao, 2009. JPMiner: mining frequent jump patterns from graph databases, in Sixth International Conference on Fuzzy Systems and Knowledge Discovery, IEEE, pp. 114-118.

[48] Reinhardt, Steve, and G. Karypis, 2007. A multi-level parallel implementation of a program for finding frequent patterns in a large sparse graph, in IEEE International Parallel and Distributed Processing Symposium (IPDPS), pp. 1-8.

[49] Schreiber, Falk, and H. Schwobbermeyer, 2005. Frequency concepts and pattern detection for the analysis of motifs in networks, in Transactions on Computational Systems Biology III, pp. 89-104, Springer Berlin Heidelberg.

[50] Chen, Chen, et al., 2007. gApprox: Mining frequent approximate patterns from a massive network, in Seventh IEEE International Conference on Data Mining (ICDM), pp. 445-450.

[51] Ke, Yiping, J. Cheng, and Jeffrey Xu Yu, 2009. Efficient discovery of frequent correlated subgraph pairs, in Ninth IEEE International Conference on Data Mining (ICDM), pp. 239-248.](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-56-320.jpg)

![

[52] Zhang, Shijie, J. Yang, and Shirong Li, 2009. RING: An integrated method for frequent representative subgraph mining, in Ninth IEEE International Conference on Data Mining (ICDM), pp. 1082-1087.

[53] Fromont, Elisa, Céline Robardet, and A. Prado, 2009. Constraint-based subspace clustering, in International Conference on Data Mining, pp. 26-37.

[54] Ranu, Sayan, and Ambuj K. Singh, 2009. GraphSig: A scalable approach to mining significant subgraphs in large graph databases, in IEEE 25th International Conference on Data Engineering (ICDE), pp. 844-855.

[55] R. Vijayalakshmi, R. Nadarajan, J.F. Roddick, M. Thilaga, 2011. FP-GraphMiner, A Fast Frequent Pattern Mining Algorithm for Network Graphs, Journal of Graph Algorithms and Applications, Vol. 15, pp. 753-776.

[56] Zhu, Feida, et al., 2007. gPrune: a constraint pushing framework for graph pattern mining, in Advances in Knowledge Discovery and Data Mining, pp. 388-400, Springer Berlin Heidelberg.

[57] Yan, Xifeng, X. Zhou, and Jiawei Han, 2005. Mining closed relational graphs with connectivity constraints, in Proceedings of the Eleventh ACM SIGKDD International Conference on Knowledge Discovery in Data Mining, pp. 324-333.

[58] Wu, Jia, and Ling Chen, 2008. A fast frequent subgraph mining algorithm, in The 9th International Conference for Young Computer Scientists (ICYCS), pp. 82-87.

[59] Krishna, Varun, N. N. R. R. Suri, G. Athithan, 2011. A comparative survey of algorithms for frequent subgraph discovery, Current Science (Bangalore), pp. 1980-1988.

[60] K. Lakshmi, T. Meyyappan, Apr. 2012. A Comparative Study of Frequent Subgraph Mining Algorithms, International Journal of Information Technology Convergence and Services (IJITCS), Vol. 2, No. 2.

[61] C. Jiang, F. Coenen, M. Zito, 2004. A Survey of Frequent Subgraph Mining Algorithms, The Knowledge Engineering Review, pp. 1-31.

[62] M. Gholami, A. Salajegheh, Sep. 2012. A Survey on Algorithms of Mining Frequent Subgraphs, International Journal of Engineering Inventions, Vol. 1, No. 5, pp. 60-63.

[63] V. Singh, D. Garg, Jul. 2011. Survey of Finding Frequent Patterns in Graph Mining: Algorithms and Techniques, International Journal of Soft Computing and Engineering (IJSCE), Vol. 1, No. 3.

[64] Hussein, M.MA, T.H. Soliman, O.H. Karam, 2007. GP-Growth: A New Algorithm for Mining Frequent Embedded Subtrees, in 12th IEEE Symposium on Computers and Communications.](https://image.slidesharecdn.com/vol14no1-july2014-171208072343/85/Vol-14-No-1-July-2014-57-320.jpg)

![

[65] Tatikonda, Shirish, S. Parthasarathy, T. Kurc, 2006. TRIPS and TIDES: new algorithms for tree mining, in Proceedings of the 15th ACM International Conference on Information and Knowledge Management.

[66] Tung, Jiun-Hung, 2006. MINT: Mining Frequent Rooted Induced Unordered Tree without Candidate Generation.

[67] Chi, Yun, Y. Yang, and Richard R. Muntz, 2004. HybridTreeMiner: An efficient algorithm for mining frequent rooted trees and free trees using canonical forms, in Proceedings of the 16th International Conference on Scientific and Statistical Database Management.

[68] T. Asai, H. Arimura, T. Uno, S. Nakano and K. Satoh, 2008. Efficient tree mining using reverse search.

[69] S. Hido and H. Kawano, 2005. AMIOT: Induced Ordered Tree Mining in Tree-structured Databases, in Proceedings of the Fifth IEEE International Conference on Data Mining (ICDM’05).

[70] H. Tan, T.S. Dillon, F. Hadzic, E. Chang, and L. Feng, 2006. IMB3-Miner: Mining Induced/Embedded Subtrees by Constraining the Level of Embedding, in Advances in Knowledge Discovery and Data Mining, Springer Berlin Heidelberg, pp. 450-461.

[71] M.J. Zaki, 2002. Efficiently mining frequent trees in a forest, in Proceedings of the 8th International Conference on Knowledge Discovery and Data Mining (ACM SIGKDD), pp. 71-80.

[72] C. Wang, M. Hong, J. Pei, H. Zhou, W. Wang, 2004. Efficient pattern-growth methods for frequent tree pattern mining, in Advances in Knowledge