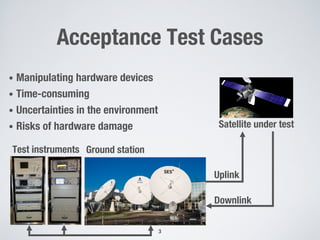

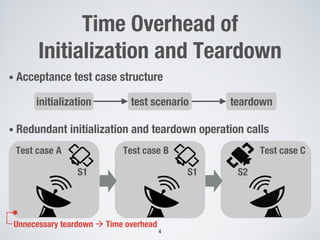

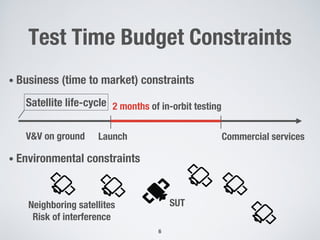

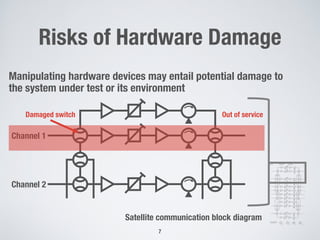

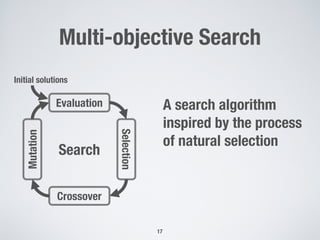

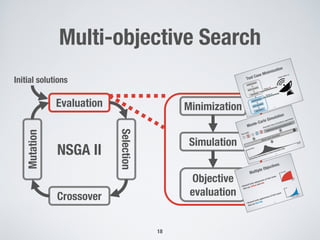

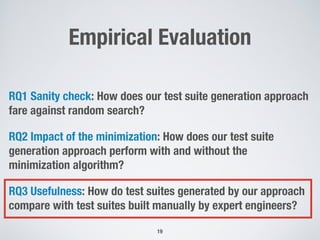

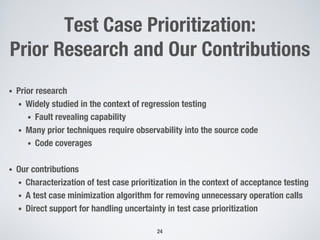

1) The document describes a multi-objective, search-based approach for minimizing and prioritizing acceptance test cases for cyber-physical systems (CPS). It addresses three challenges: the time overhead of running acceptance tests, uncertainty in test execution times, and the risk of damaging hardware during testing.
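
"Multi-objective" here means that candidate test suites are compared on several objectives at once rather than on a single score. The sketch below is a minimal illustration of Pareto dominance, the comparison that multi-objective search algorithms such as NSGA-II build on. It is not the authors' implementation. The three objectives (test cases covered, total criticality, hardware risk) are assumptions chosen to echo the goals named above, and `dominates` and `pareto_front` are hypothetical helper names.

```python
from typing import List, Sequence, Tuple

# Hypothetical objective vector for one candidate test suite:
# (test cases covered, total criticality, hardware risk).
# The first two are maximized; risk is minimized.
Objectives = Tuple[float, float, float]


def dominates(a: Objectives, b: Objectives) -> bool:
    """True if a is no worse than b on every objective and strictly better on at least one."""
    # Negate risk so that "higher is better" holds for all three objectives.
    a_norm = (a[0], a[1], -a[2])
    b_norm = (b[0], b[1], -b[2])
    no_worse = all(x >= y for x, y in zip(a_norm, b_norm))
    strictly_better = any(x > y for x, y in zip(a_norm, b_norm))
    return no_worse and strictly_better


def pareto_front(candidates: Sequence[Objectives]) -> List[Objectives]:
    """Return the candidates that no other candidate dominates (the Pareto front)."""
    # A candidate never dominates itself, so it need not be excluded from the inner scan.
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


suites = [(10, 40.0, 0.3), (12, 35.0, 0.2), (8, 30.0, 0.5), (9, 45.0, 0.4)]
print(pareto_front(suites))  # (8, 30.0, 0.5) is dropped: (10, 40.0, 0.3) beats it everywhere
```

The remaining three suites are mutual trade-offs. Each is best on at least one objective, so choosing among them is left to the tester or to a further criterion.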

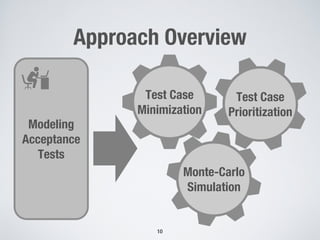

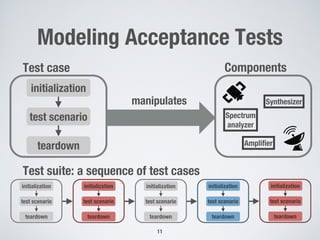

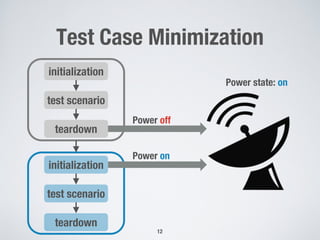

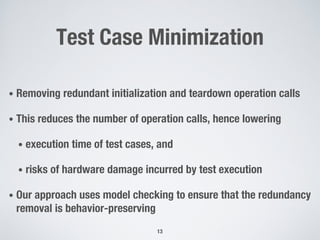

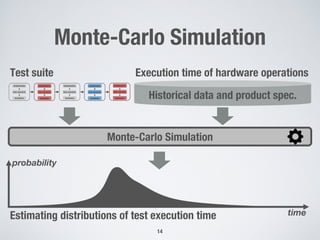

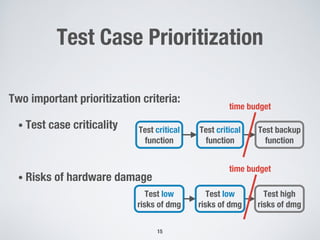

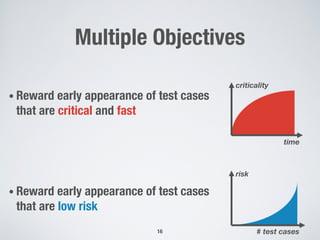

2) The approach models acceptance tests, minimizes test cases by removing redundant operations, and then prioritizes the remaining test cases. Prioritization uses Monte Carlo simulation to estimate execution times under uncertainty while optimizing for criticality and risk.
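
Monte Carlo simulation lets a prioritization be scored even when execution times are uncertain. The idea is to sample durations many times, run the ordering against the time budget, and average the outcome. The sketch below estimates how much criticality a given ordering is expected to complete within a budget. It is a simplified illustration under stated assumptions, not the method from the slides. The `TestCase` fields, the uniform duration distribution, and the function names are all hypothetical, and the sketch ignores the risk objective.

```python
import random
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class TestCase:
    name: str
    min_time: float     # hypothetical lower bound on execution time (minutes)
    max_time: float     # hypothetical upper bound on execution time (minutes)
    criticality: float  # hypothetical importance weight


def simulate_once(order: Sequence[TestCase], budget: float, rng: random.Random) -> float:
    """One simulated run: execute tests in priority order and sum the criticality finished within budget."""
    elapsed = 0.0
    covered = 0.0
    for tc in order:
        # Assumption: execution time is uniformly distributed in [min_time, max_time].
        elapsed += rng.uniform(tc.min_time, tc.max_time)
        if elapsed > budget:
            break  # this test and every later one miss the budget
        covered += tc.criticality
    return covered


def expected_criticality(order: Sequence[TestCase], budget: float,
                         runs: int = 10_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the criticality an ordering completes within the budget."""
    rng = random.Random(seed)  # fixed seed keeps the estimate reproducible
    return sum(simulate_once(order, budget, rng) for _ in range(runs)) / runs


a = TestCase("A", 2.0, 4.0, 5.0)
b = TestCase("B", 1.0, 2.0, 1.0)
c = TestCase("C", 3.0, 6.0, 8.0)
print(expected_criticality([c, a, b], budget=8.0))  # critical test first
print(expected_criticality([b, a, c], budget=8.0))  # critical test last
```

Putting the most critical test first scores higher here because it always completes. When it runs last, it finishes only in the minority of samples where the earlier tests run fast. A search algorithm could use such an estimate as one fitness value when comparing candidate orderings.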

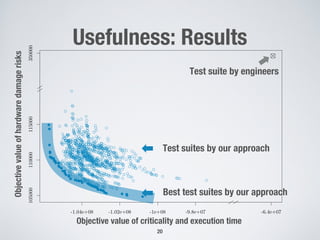

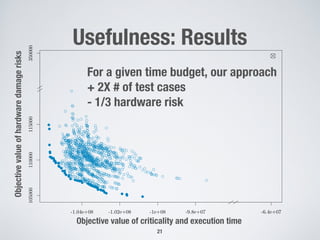

3) An empirical evaluation on an industrial case study of in-orbit satellite testing shows that, within the given time budgets, the approach generates test suites that cover more test cases and carry a lower risk of hardware damage than manually created test suites.