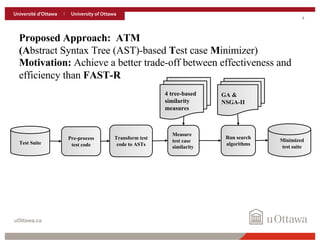

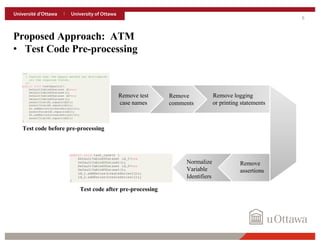

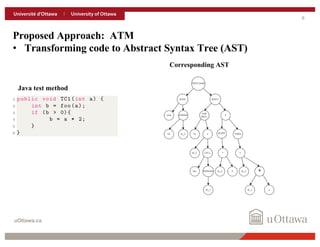

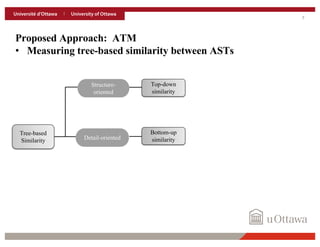

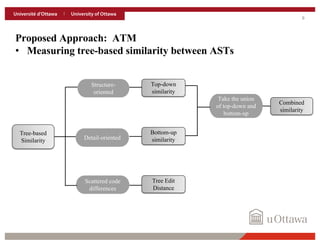

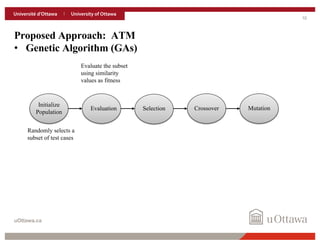

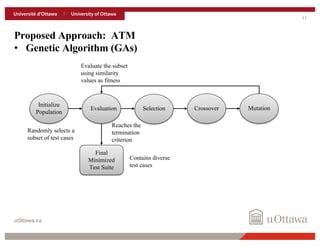

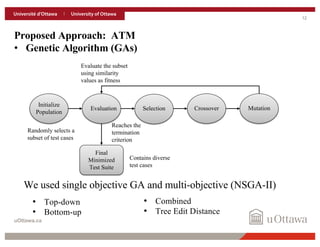

1. The document presents ATM, a new black-box test case minimization approach that transforms test code into abstract syntax trees (ASTs) and combines tree-based similarity measures with genetic algorithms to minimize test suites.
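The tree-based similarity idea can be made concrete with a minimal sketch. ATM itself works on Java test code, and its exact similarity measures are not described here, so this example swaps in Python's standard `ast` module and a simplified, hypothetical top-down measure. Two trees match node by node from the root as long as node types agree, and children are paired by position; a full top-down tree matching would align children optimally instead. Identifiers and literal values are ignored, so tests that differ only in names and constants score as identical.

```python
import ast


def tree_size(tree: ast.AST) -> int:
    """Number of nodes in the tree."""
    return sum(1 for _ in ast.walk(tree))


def top_down_match(a: ast.AST, b: ast.AST) -> int:
    """Count nodes matched from the roots down; children are paired by position."""
    if type(a) is not type(b):
        return 0
    children_a = list(ast.iter_child_nodes(a))
    children_b = list(ast.iter_child_nodes(b))
    return 1 + sum(top_down_match(x, y) for x, y in zip(children_a, children_b))


def top_down_similarity(src_a: str, src_b: str) -> float:
    """Dice-style score in [0, 1]: 2 * matched / (|A| + |B|)."""
    tree_a, tree_b = ast.parse(src_a), ast.parse(src_b)
    return 2 * top_down_match(tree_a, tree_b) / (tree_size(tree_a) + tree_size(tree_b))


# Hypothetical test sources used only for illustration.
T1 = "def test_add():\n    assert add(1, 2) == 3\n"
T2 = "def test_sub():\n    assert sub(3, 1) == 2\n"
T3 = "def test_loop():\n    for i in range(3):\n        print(i)\n"

print(top_down_similarity(T1, T2))  # 1.0: same structure, different names and constants
print(top_down_similarity(T1, T3))  # below 1.0: the function bodies differ
```

Ignoring identifiers is a deliberate choice in this sketch: it lets structurally redundant tests be detected even when they exercise differently named methods.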

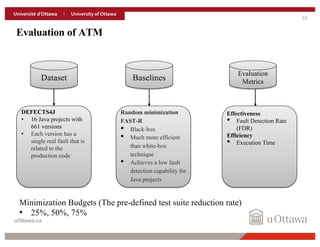

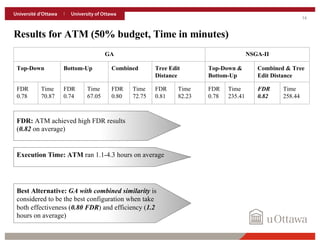

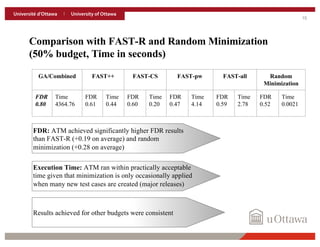

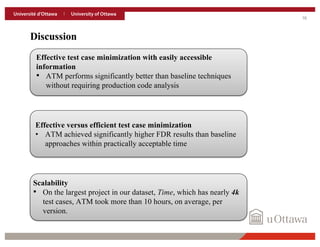

2. ATM was evaluated on the Defects4J dataset, where it achieved an average fault detection rate of 0.82, significantly outperforming existing techniques while keeping execution times practical.
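The fault detection rate (FDR) can be illustrated with one common definition, which is an assumption here: the fraction of faults for which the minimized suite still contains at least one test that reveals the fault. The paper's exact definition (for example, how results are averaged across budgets or repeated runs) may differ, and the fault and test names below are hypothetical.

```python
def fault_detection_rate(minimized: set[str], revealing: dict[str, set[str]]) -> float:
    """Fraction of faults with at least one fault-revealing test kept in the minimized suite."""
    if not revealing:
        raise ValueError("at least one fault is required")
    detected = sum(1 for tests in revealing.values() if tests & minimized)
    return detected / len(revealing)


# Hypothetical faults, each mapped to the tests that fail on it.
faults = {
    "fault_A": {"t1", "t4"},
    "fault_B": {"t7"},
    "fault_C": {"t2", "t9"},
    "fault_D": {"t5"},
}
print(fault_detection_rate({"t1", "t2", "t5"}, faults))  # 0.75 (3 of 4 faults detected)
```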

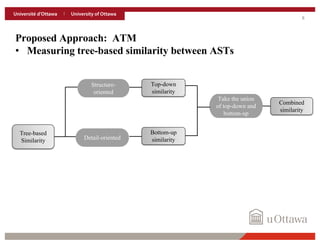

3. The best configuration of ATM, a genetic algorithm with a combined similarity measure, achieved an average fault detection rate of 0.80 with an average execution time of 1.2 hours.
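The following sketch shows how a genetic algorithm might search for a fixed-size subset of tests using a combined similarity measure. Everything here is an assumption for illustration, not ATM's actual design: the combined measure is taken as the element-wise mean of two precomputed pairwise similarity matrices, the fitness favors diverse subsets by minimizing mean pairwise similarity, and the operators (truncation selection, union-sampling crossover, swap mutation), the helper `pair_matrix`, and all parameter values are hypothetical.

```python
import random
from itertools import combinations


def combine(sim_a, sim_b):
    """Hypothetical combined measure: element-wise mean of two similarity matrices."""
    n = len(sim_a)
    return [[(sim_a[i][j] + sim_b[i][j]) / 2 for j in range(n)] for i in range(n)]


def fitness(subset, sim):
    """Mean pairwise similarity among the selected tests (lower = more diverse)."""
    pairs = list(combinations(subset, 2))
    return sum(sim[i][j] for i, j in pairs) / len(pairs) if pairs else 0.0


def crossover(p1, p2, k, rng):
    """Draw k distinct tests from the union of both parents."""
    pool = sorted(set(p1) | set(p2))
    return sorted(rng.sample(pool, k))


def mutate(subset, n, rng):
    """Swap one selected test for one unselected test, keeping the size fixed."""
    chosen = set(subset)
    out = rng.choice(sorted(chosen))
    new = rng.choice([t for t in range(n) if t not in chosen])
    return sorted((chosen - {out}) | {new})


def minimize(sim, k, pop_size=30, generations=100, mutation_rate=0.3, seed=0):
    """Return indices of k tests with low mean pairwise similarity."""
    n = len(sim)
    if k <= 0:
        return []
    if k >= n:
        return list(range(n))  # budget covers the whole suite
    rng = random.Random(seed)
    pop = [sorted(rng.sample(range(n), k)) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda s: fitness(s, sim))
        elite = pop[: pop_size // 2]  # truncation selection keeps the best half
        children = []
        while len(elite) + len(children) < pop_size:
            p1, p2 = rng.sample(elite, 2)
            child = crossover(p1, p2, k, rng)
            if rng.random() < mutation_rate:
                child = mutate(child, n, rng)
            children.append(child)
        pop = elite + children
    return min(pop, key=lambda s: fitness(s, sim))


def pair_matrix(n, same, diff):
    """Toy similarity matrix: tests 2i and 2i+1 are near-duplicates."""
    return [[1.0 if i == j else (same if i // 2 == j // 2 else diff)
             for j in range(n)] for i in range(n)]


# Usage: six hypothetical tests forming three near-duplicate pairs.
sim = combine(pair_matrix(6, same=0.9, diff=0.1), pair_matrix(6, same=0.7, diff=0.3))
best = minimize(sim, k=3)
print(best)  # should contain one test from each pair
```

Fixing the subset size `k` models a minimization budget. Both the crossover and the swap mutation preserve that size, so every candidate stays feasible without a repair step, and keeping the elite half each generation guarantees the best subset found so far is never lost.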