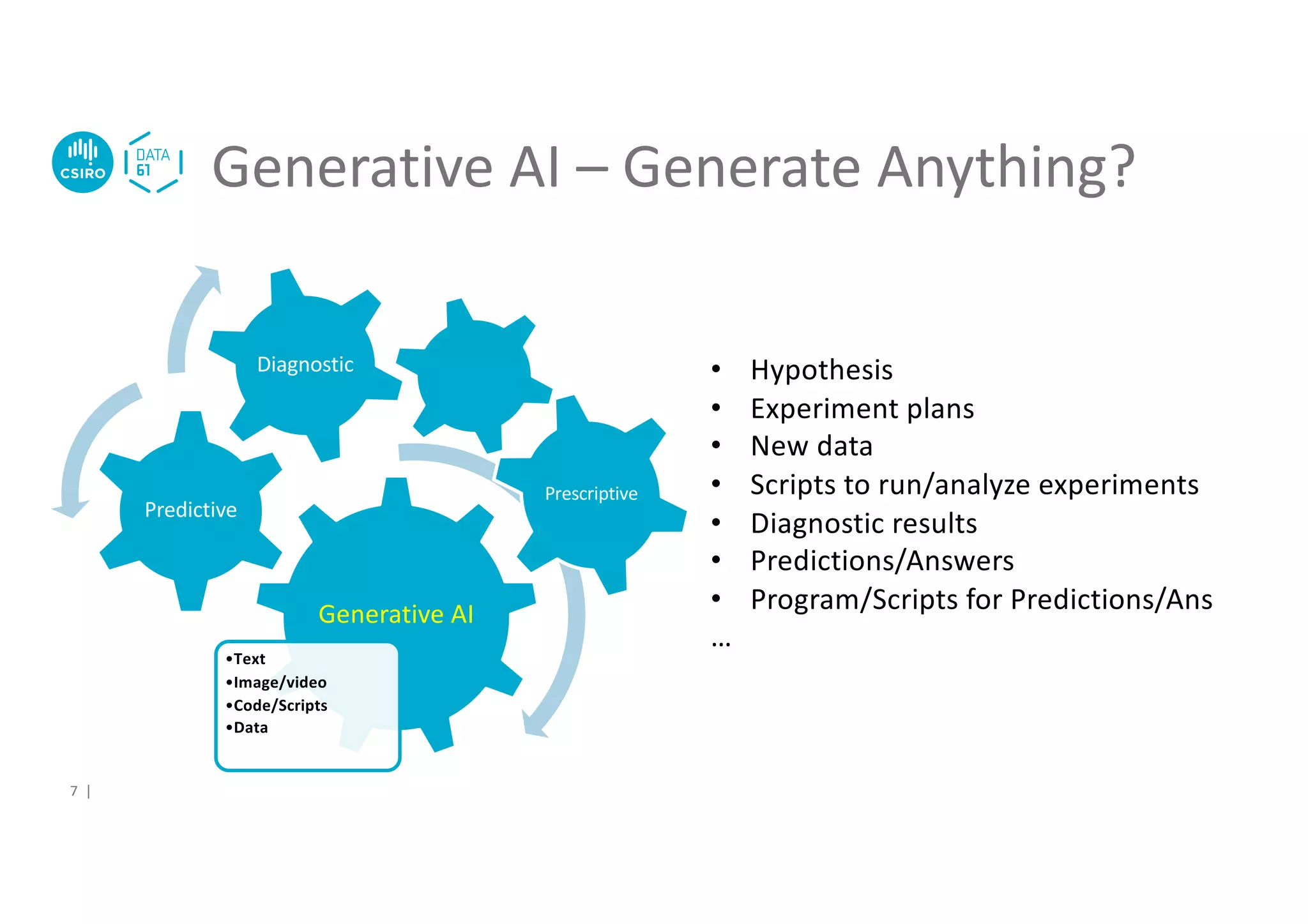

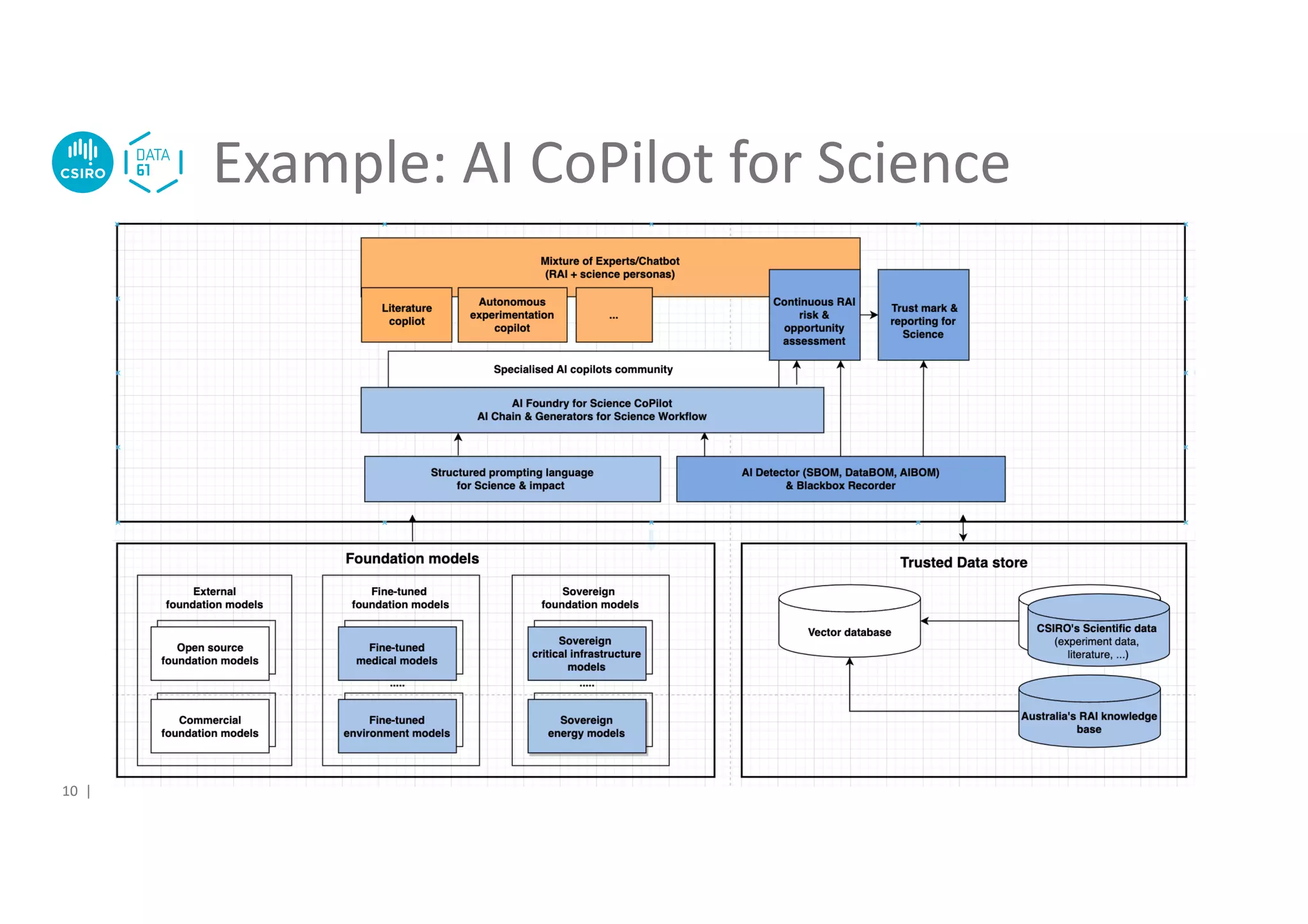

1) Dr. Liming Zhu from CSIRO's Data61 discusses using generative AI such as ChatGPT to assist scientific discovery, with the AI acting as a smart intern or tool that can supply low-cost experiment ideas and analyses.

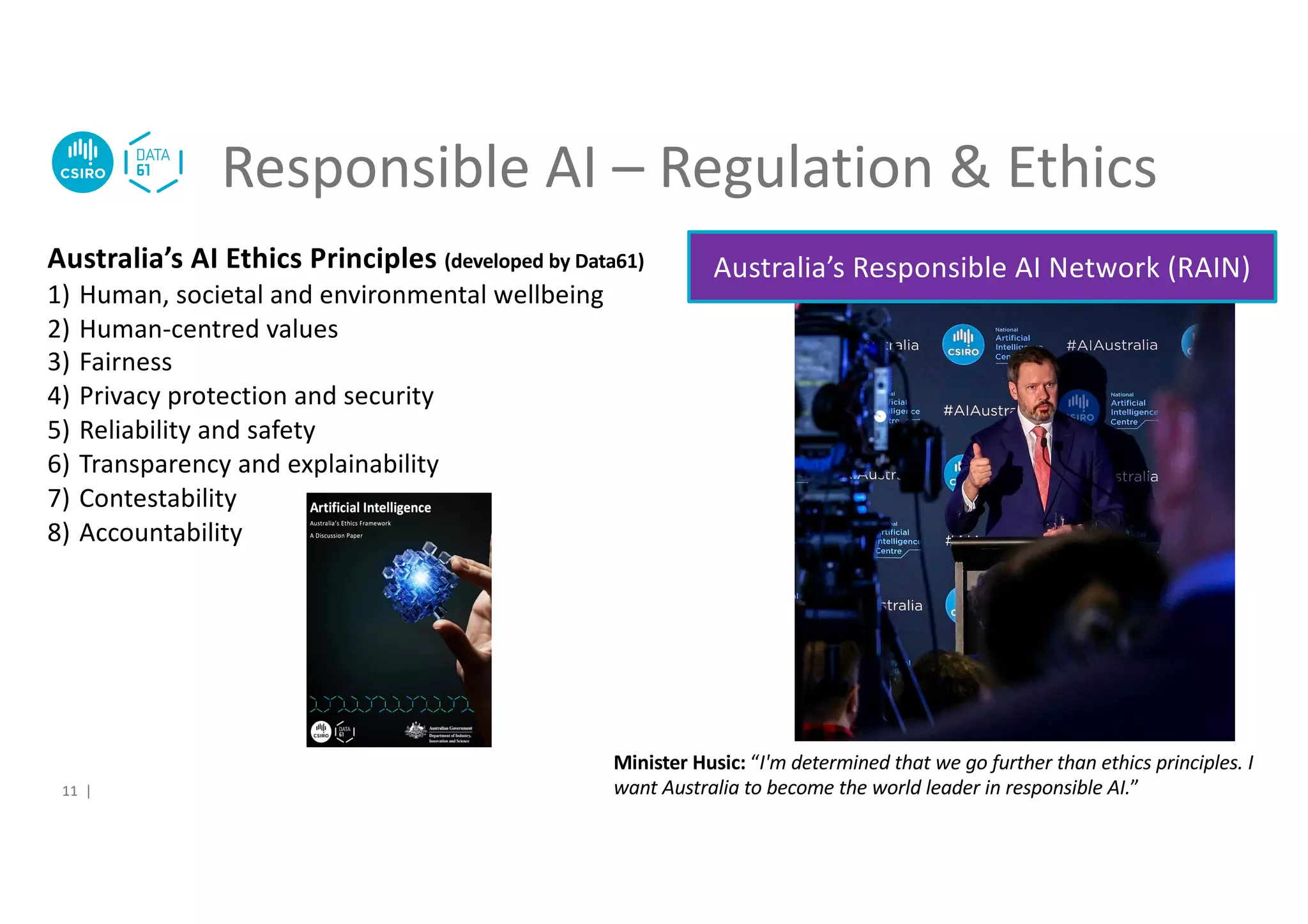

2) This creates opportunities to change the role of scientific expertise and to enable new cross-disciplinary collaborations, but it also raises the challenge of ensuring that AI systems are developed and used responsibly and can be trusted.

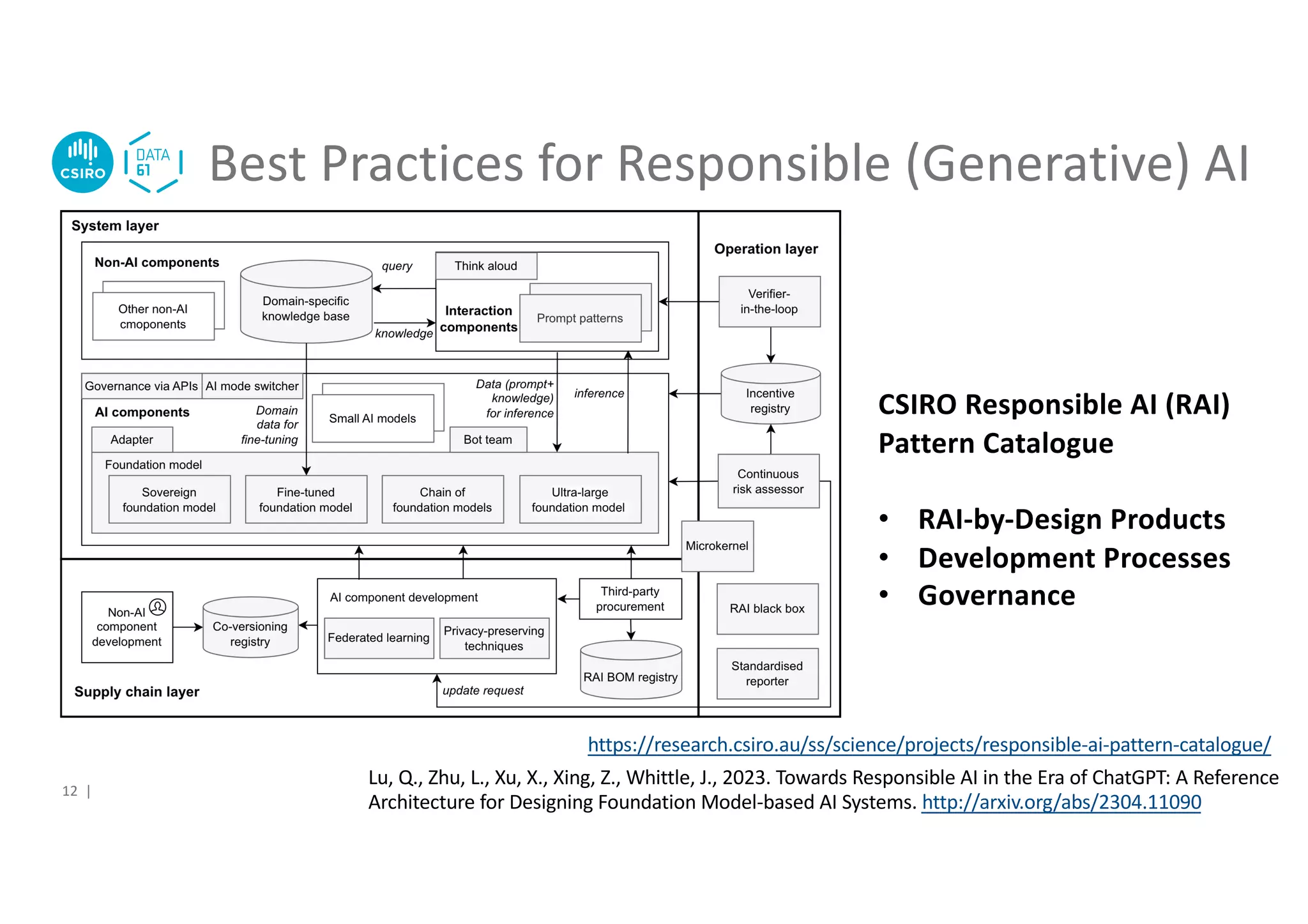

3) CSIRO is developing best practices for responsible generative AI, including through its Responsible AI Pattern Catalogue, to help address issues of trust, transparency, and accountability as general AI capabilities are applied to science.