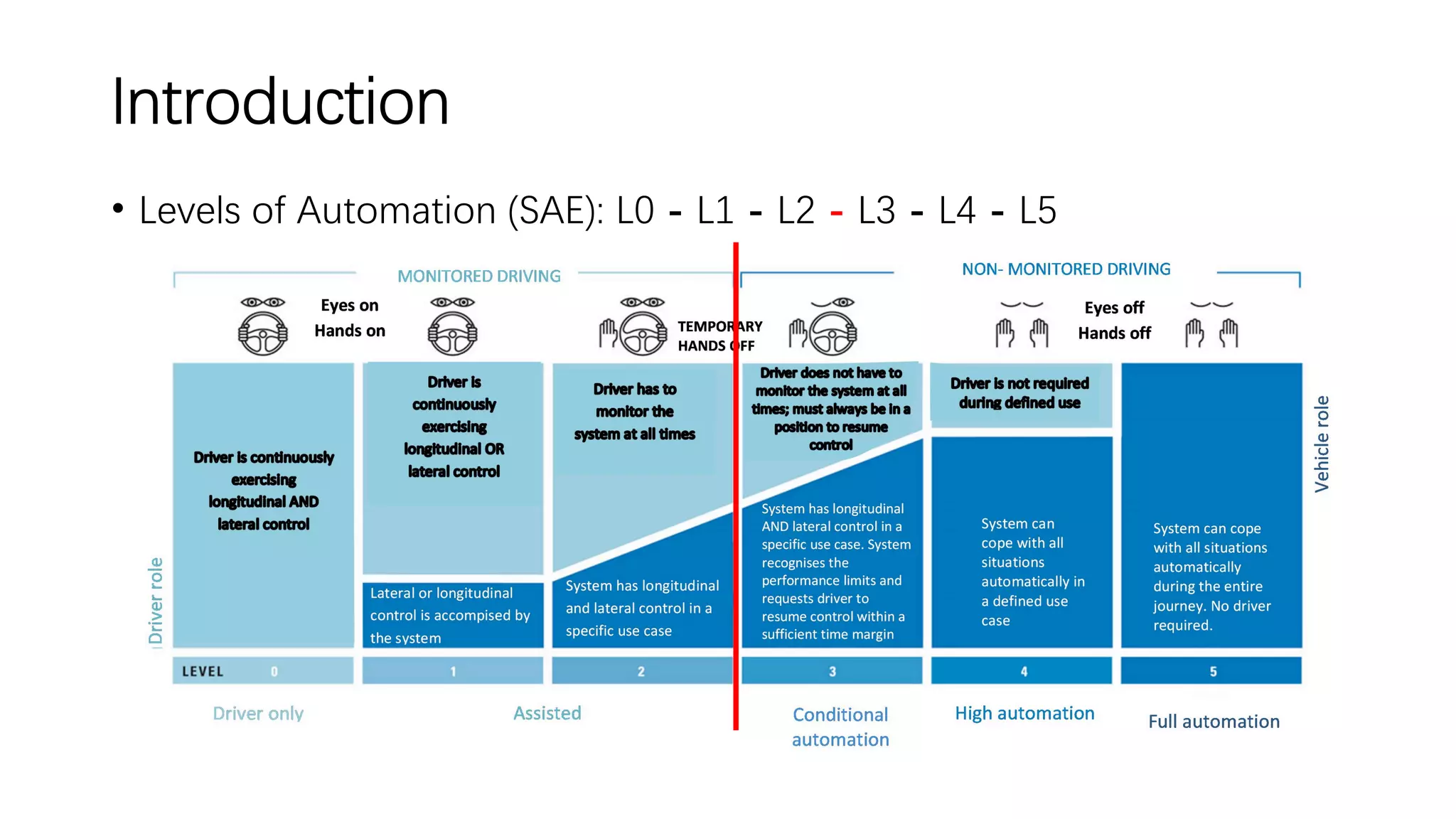

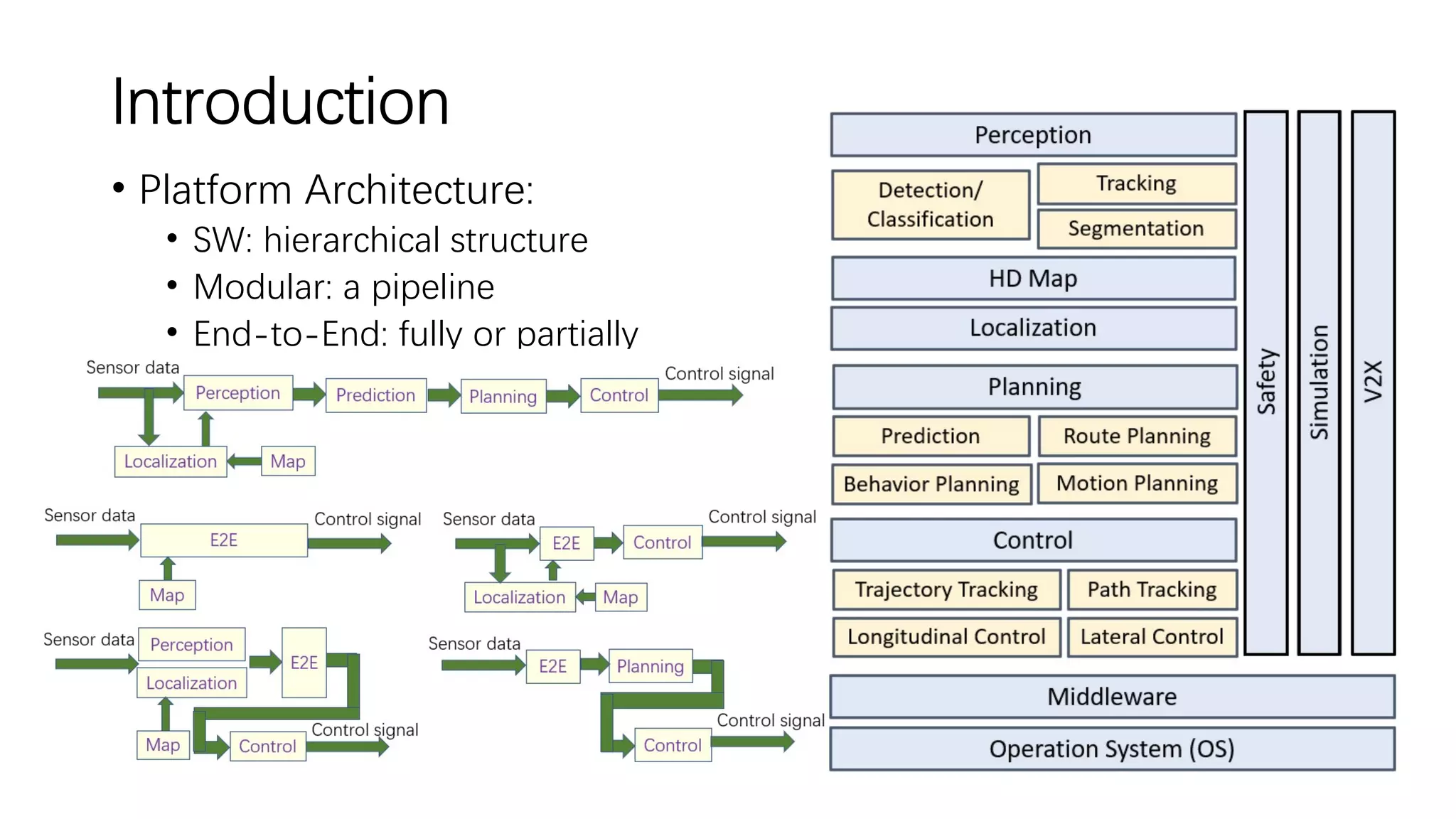

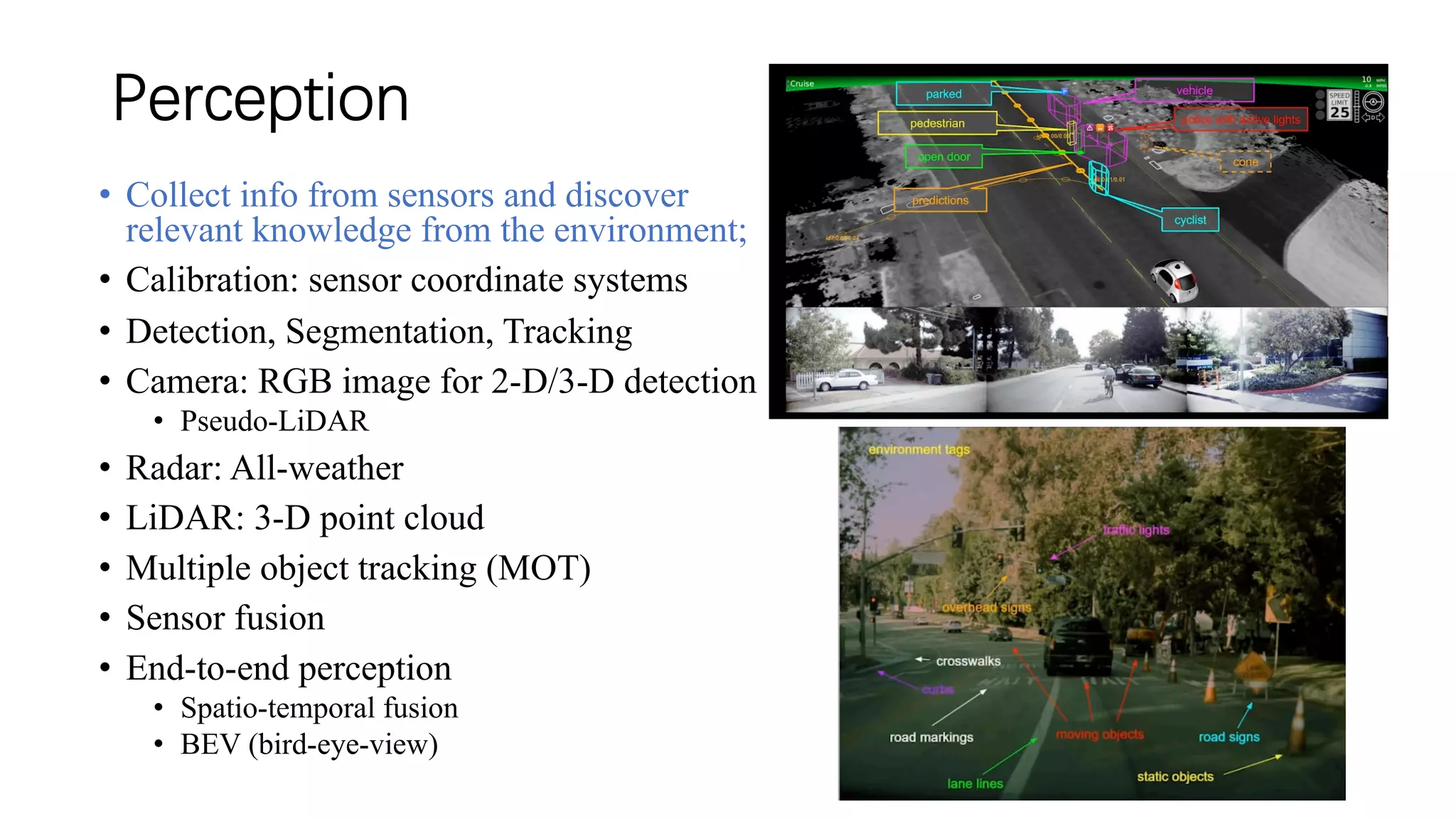

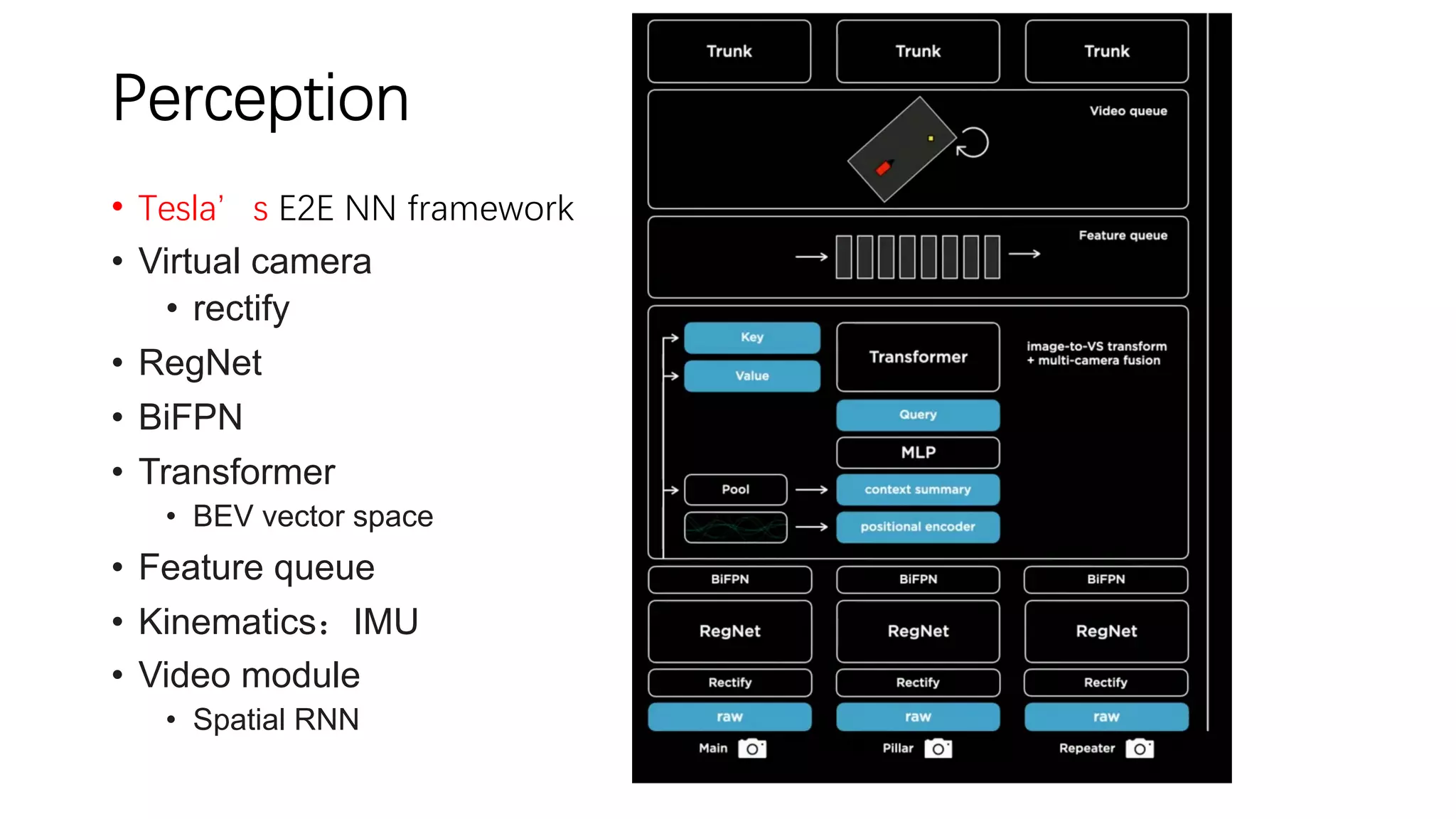

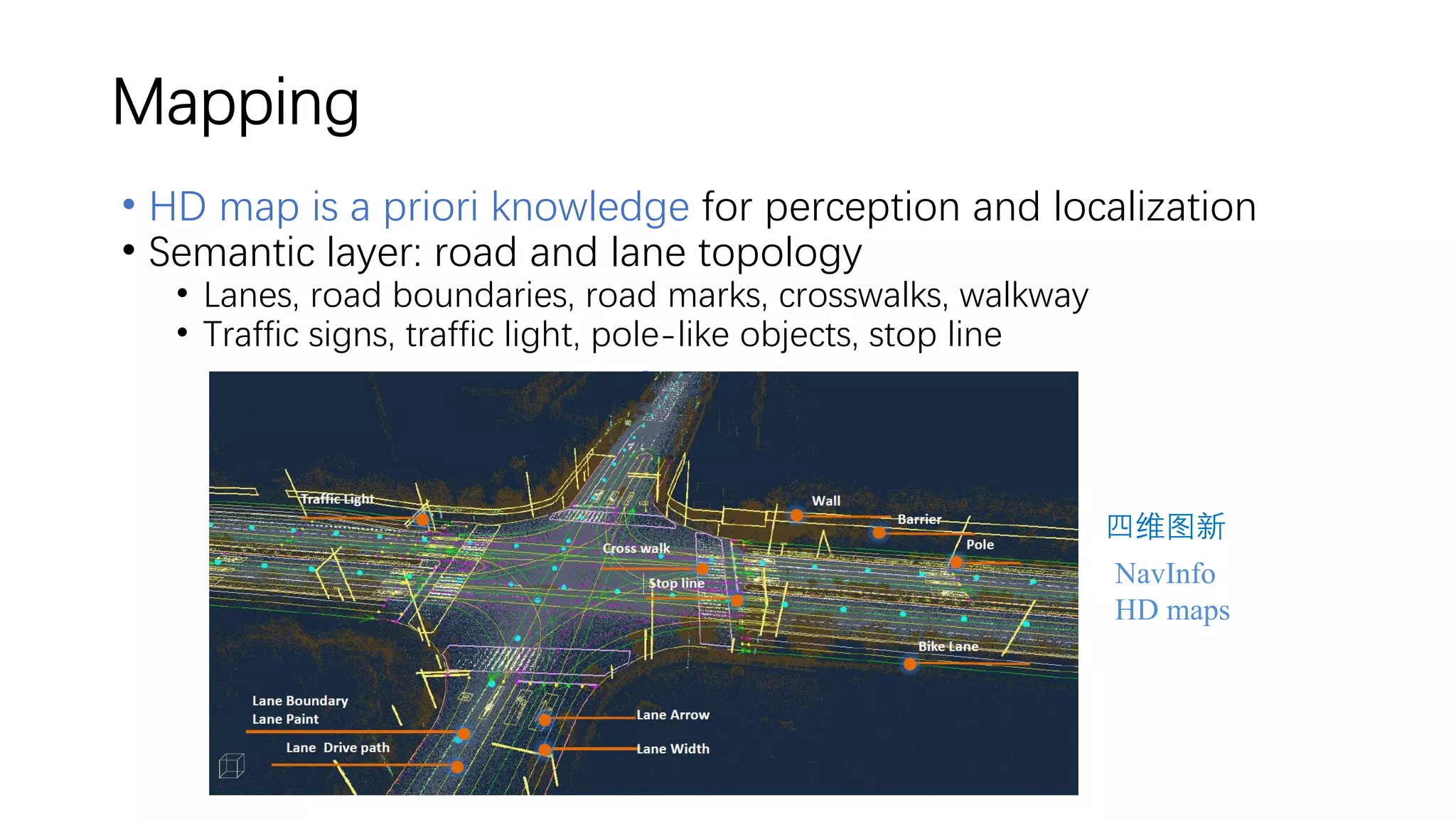

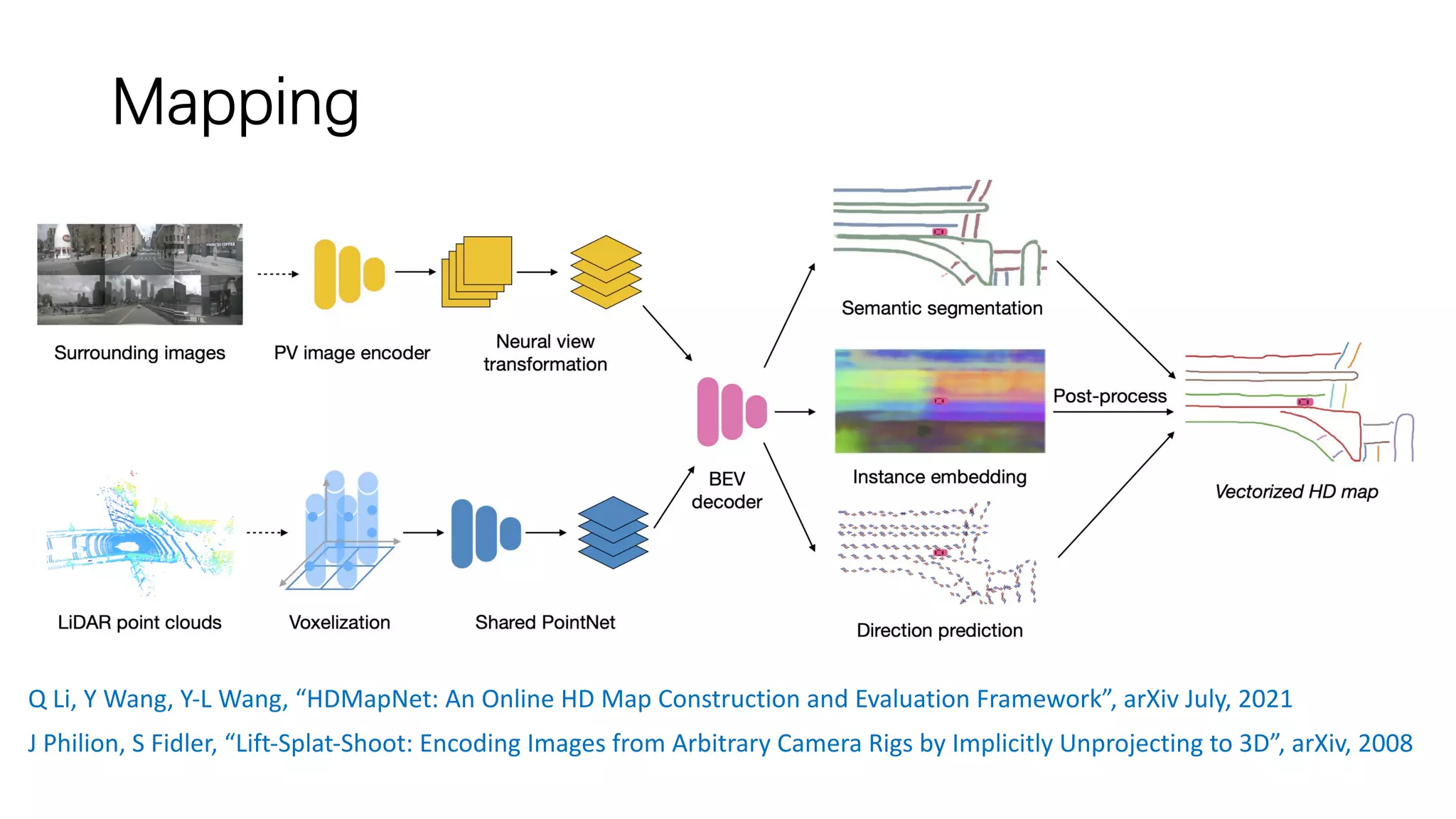

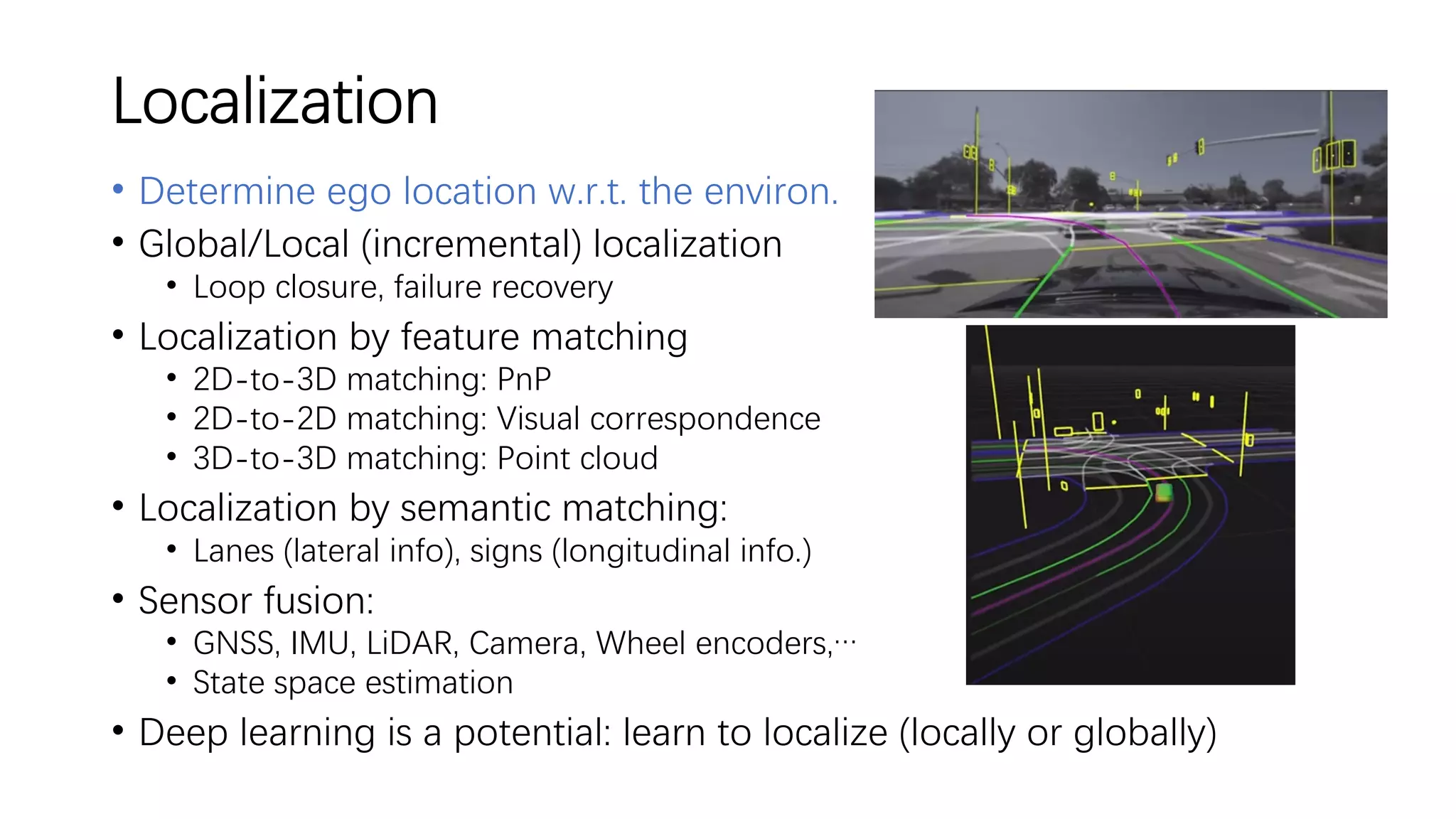

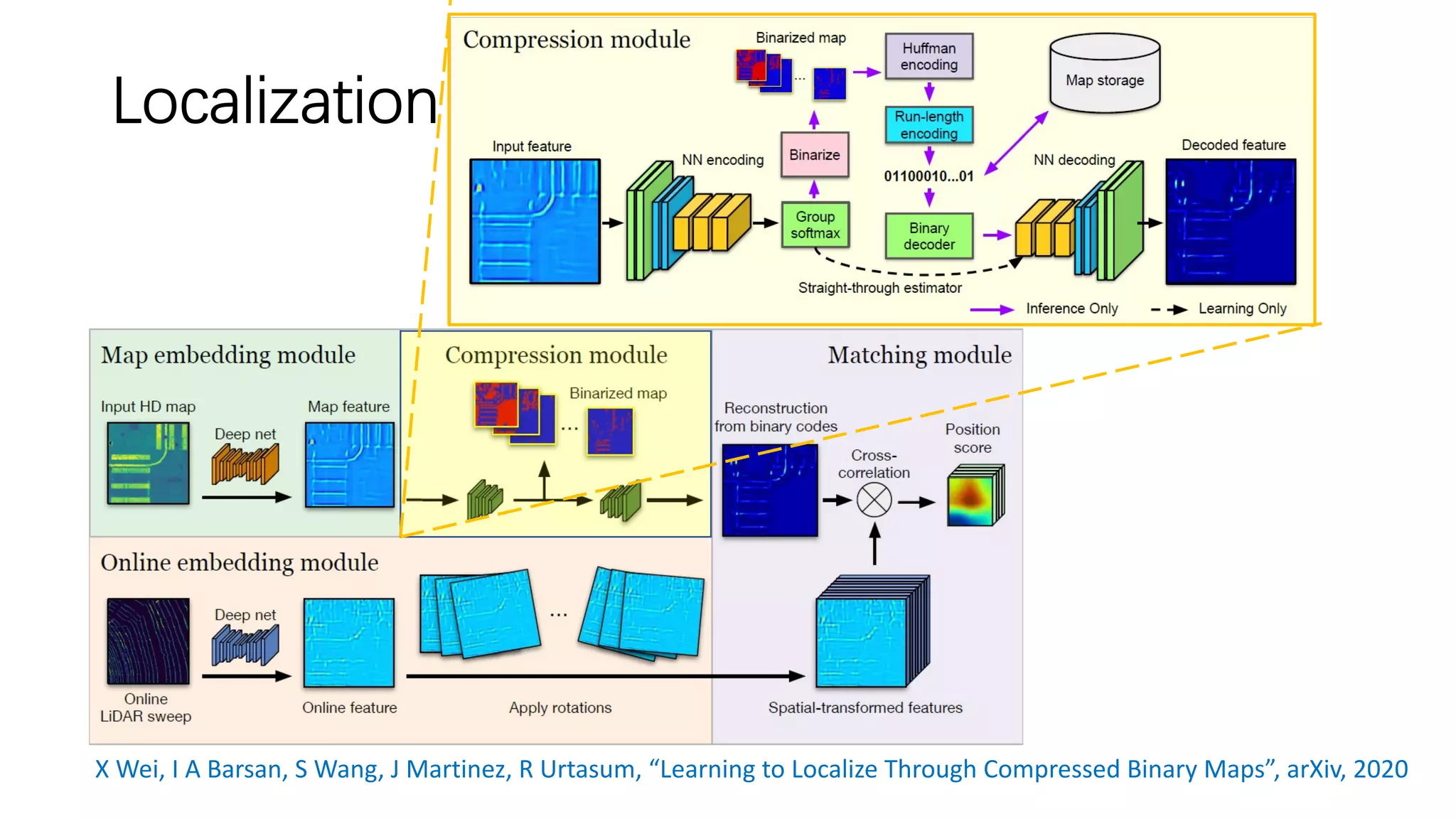

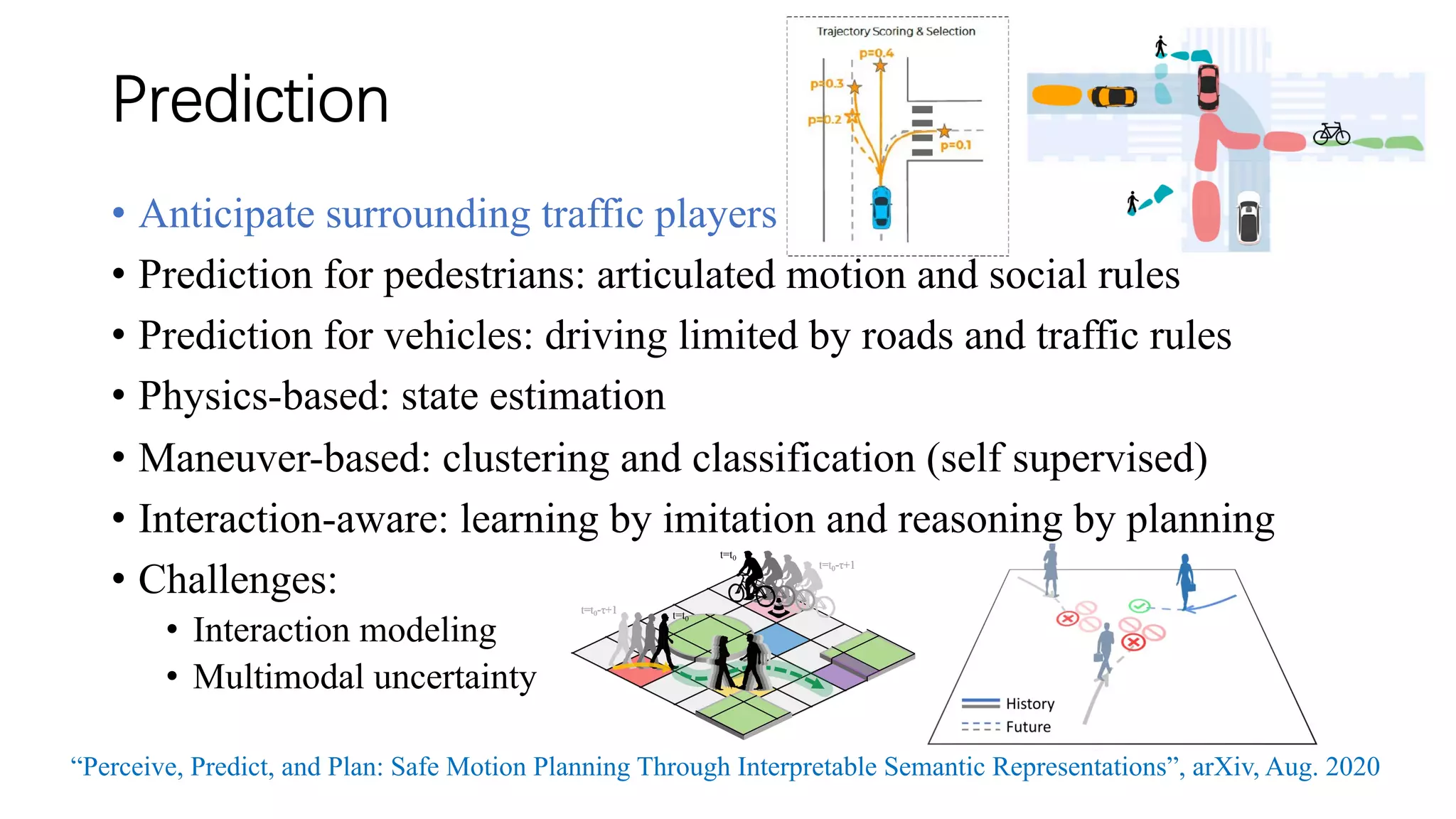

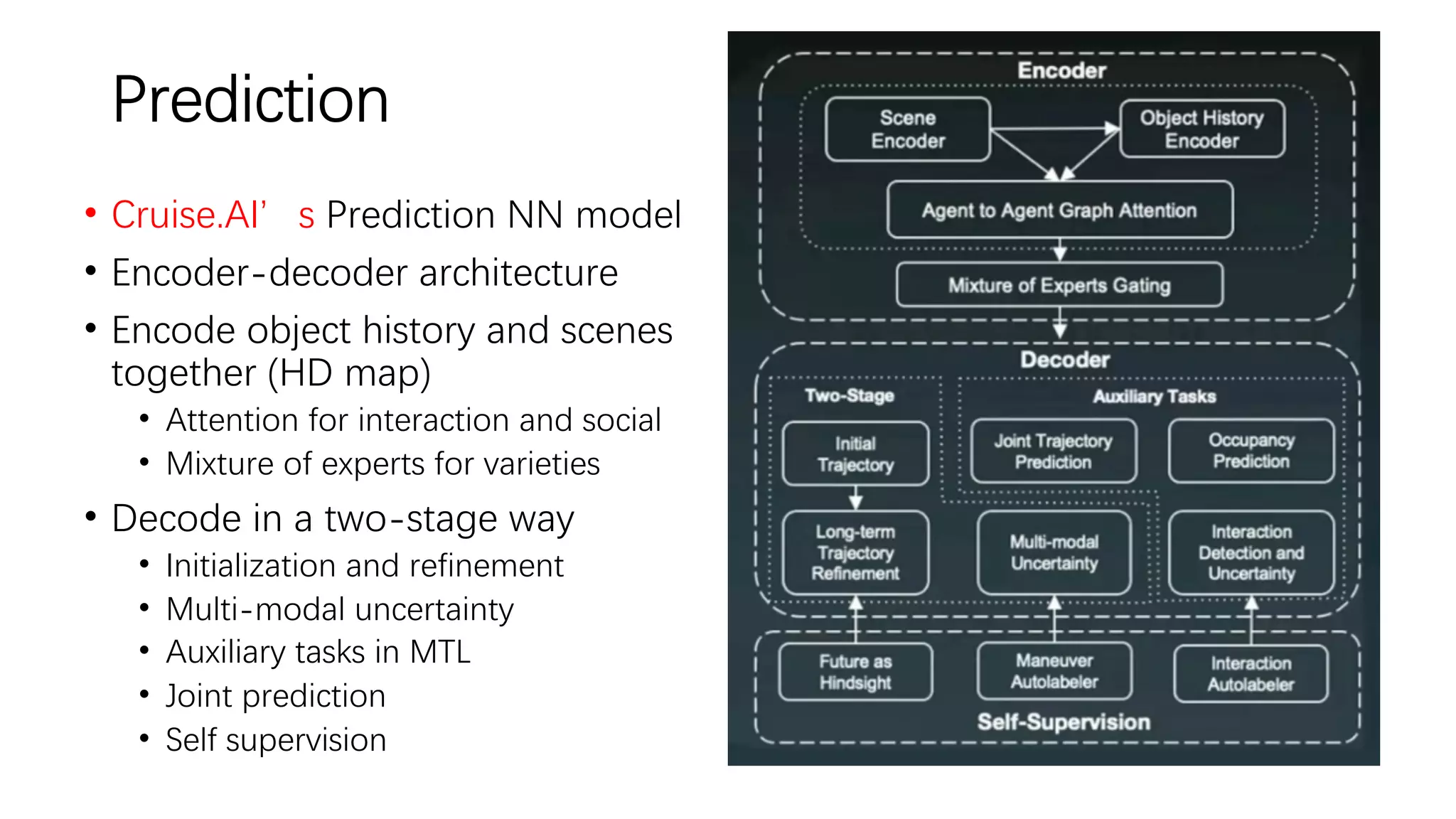

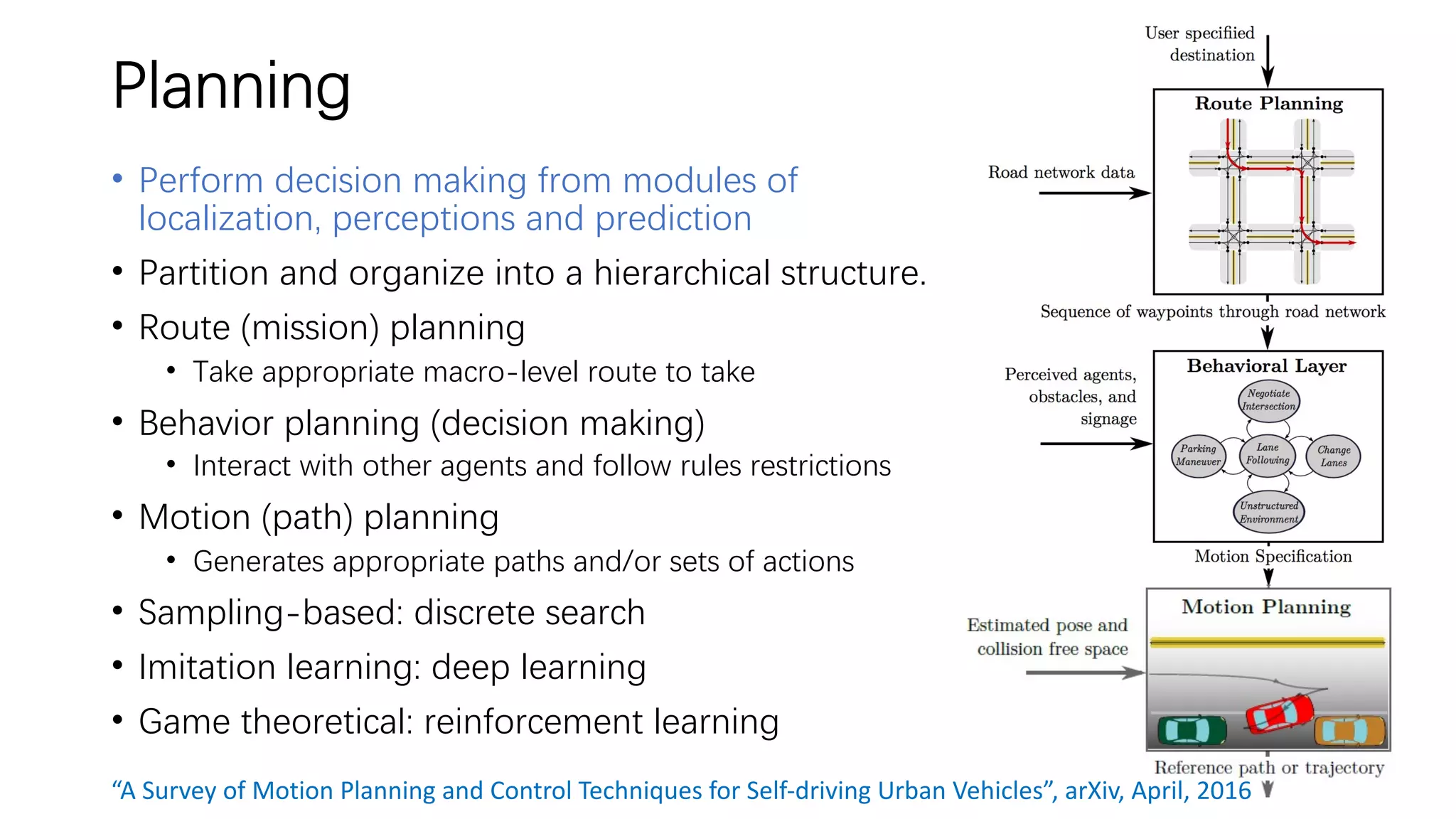

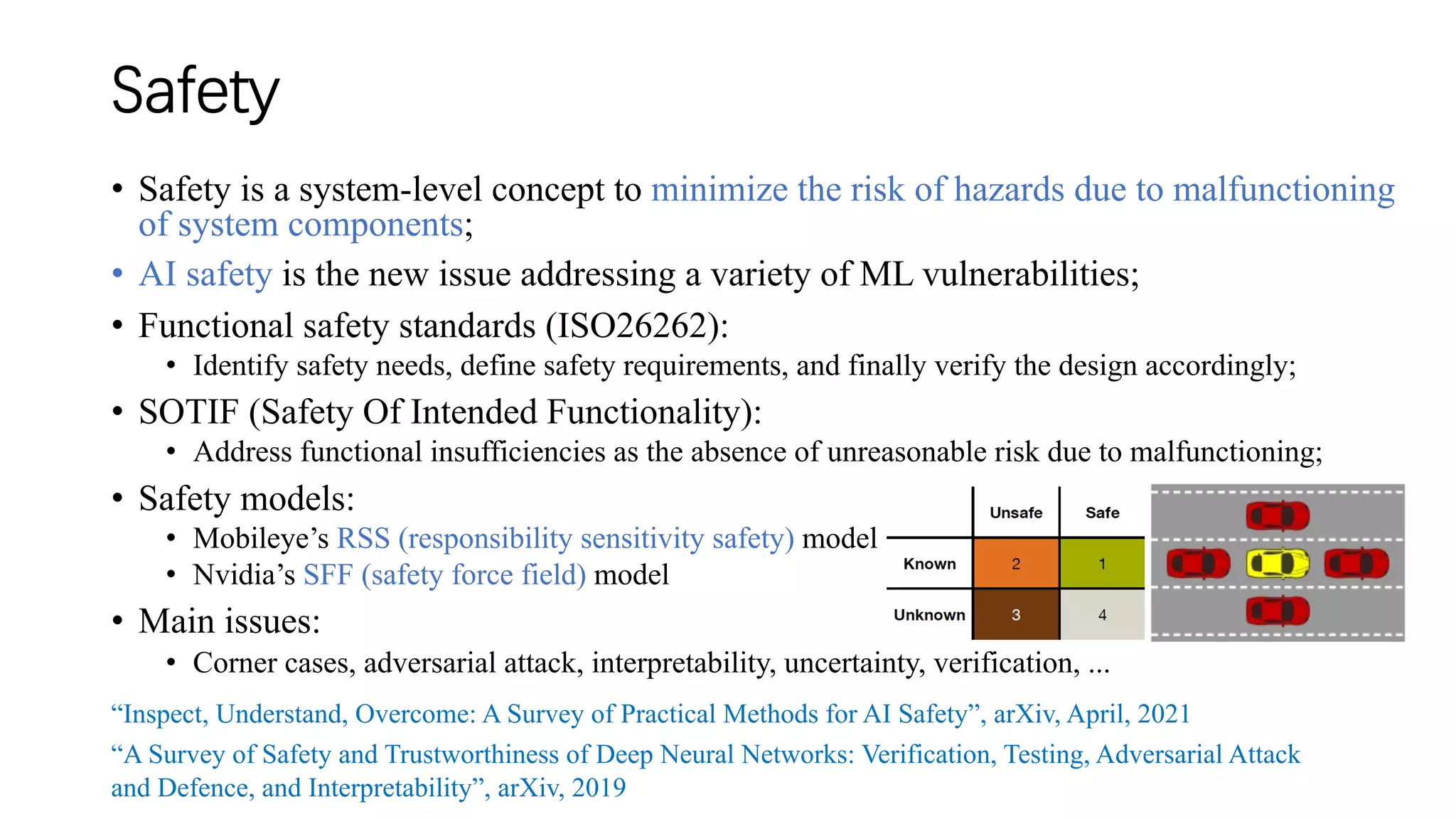

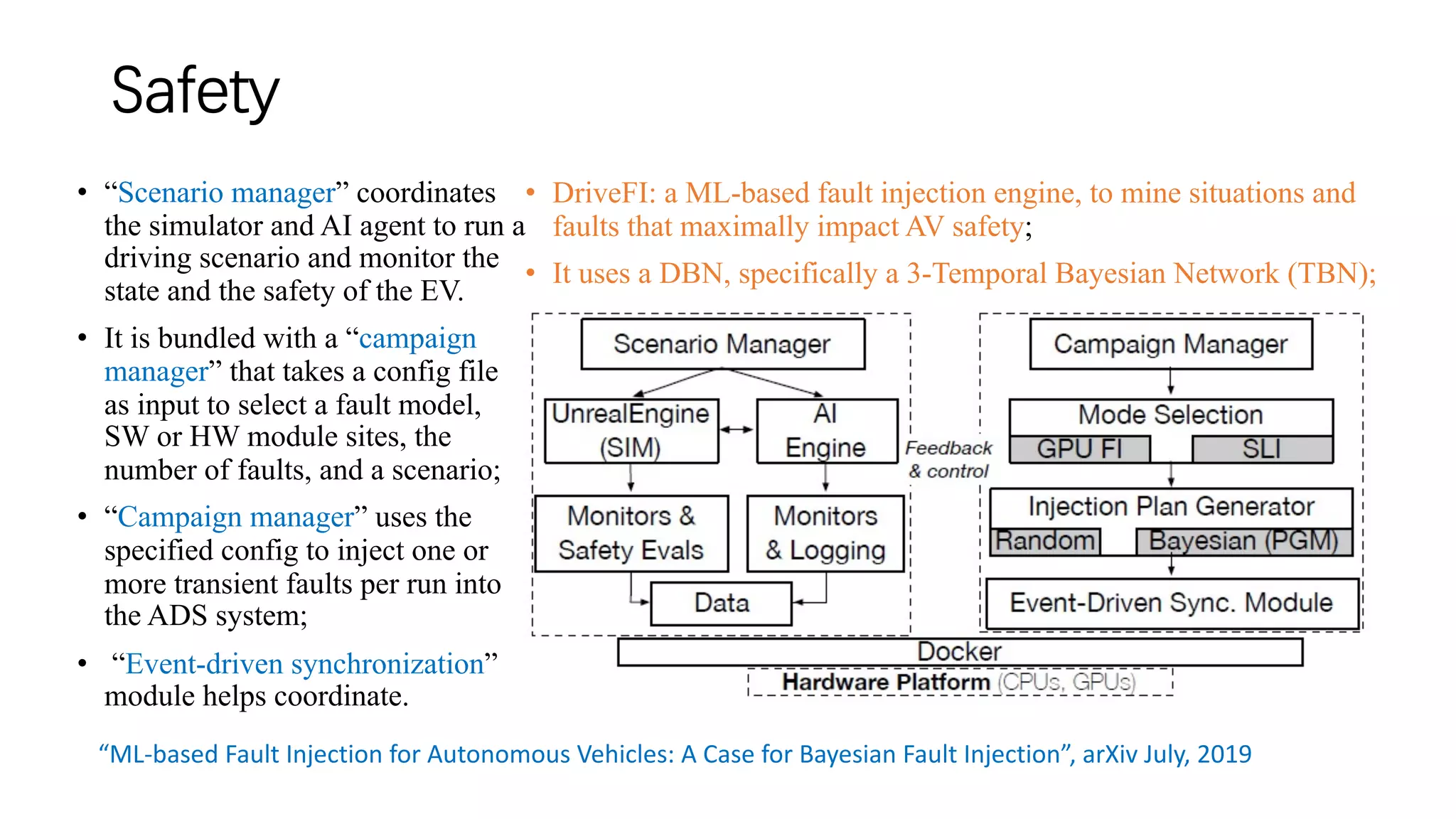

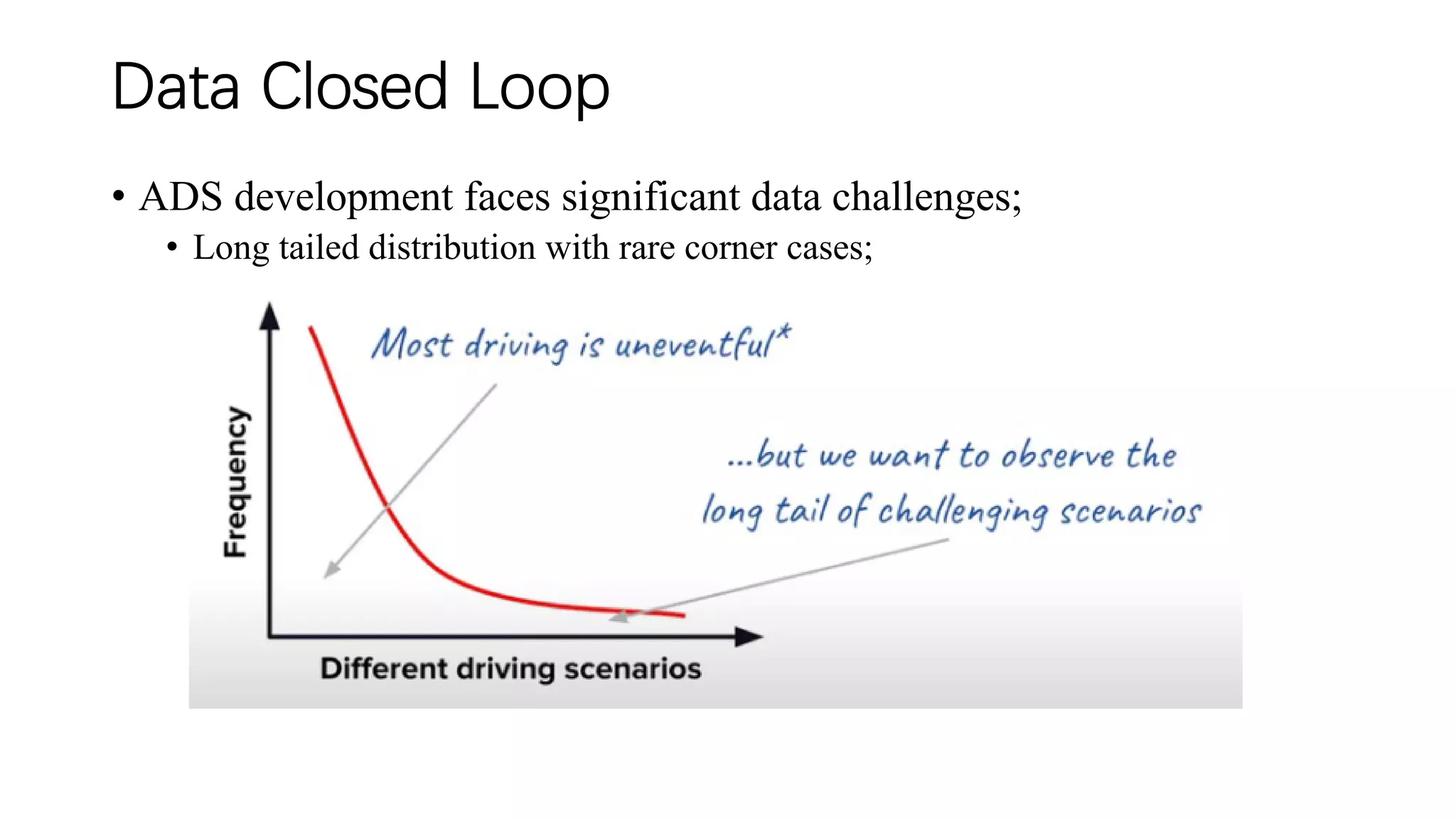

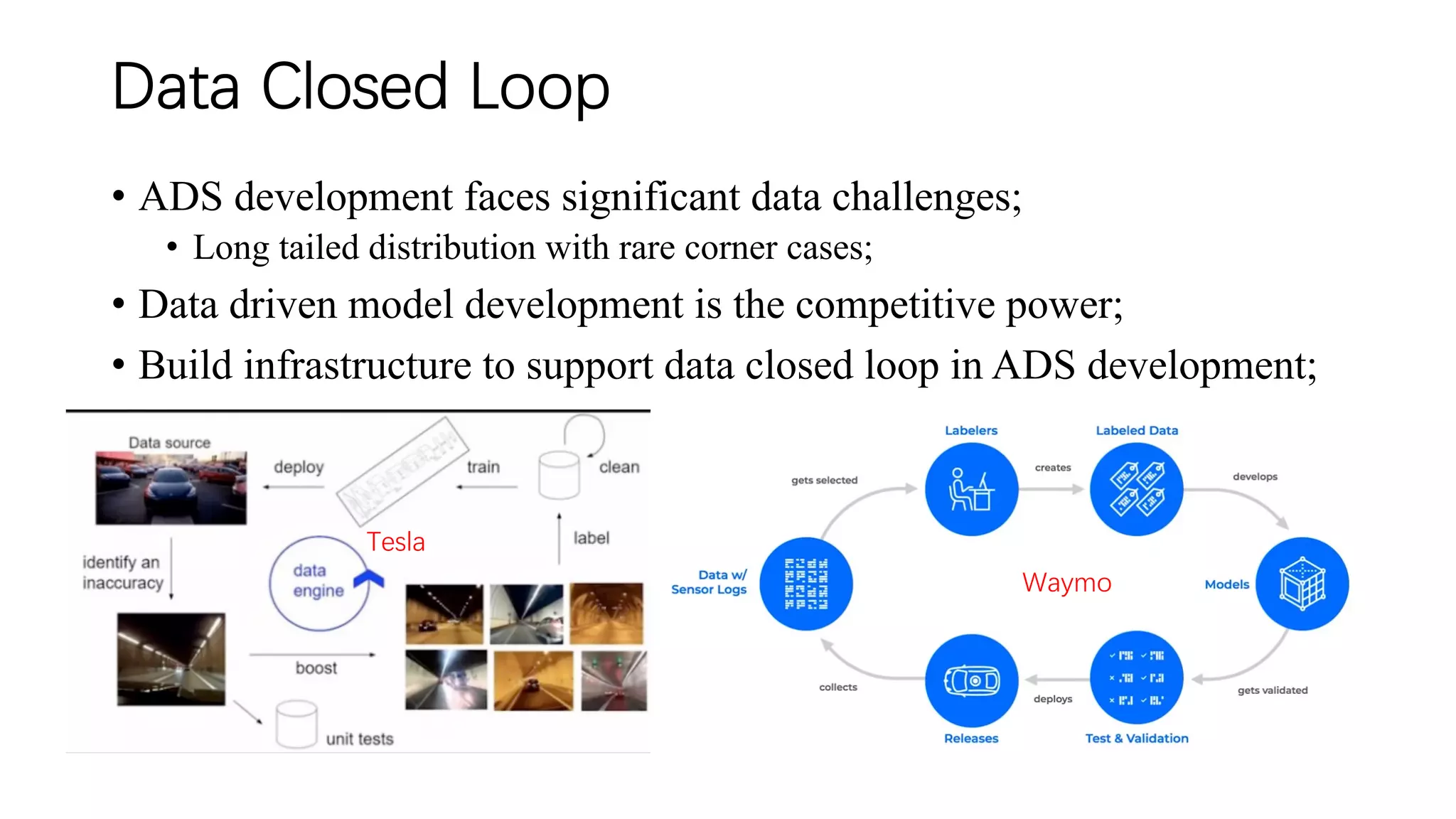

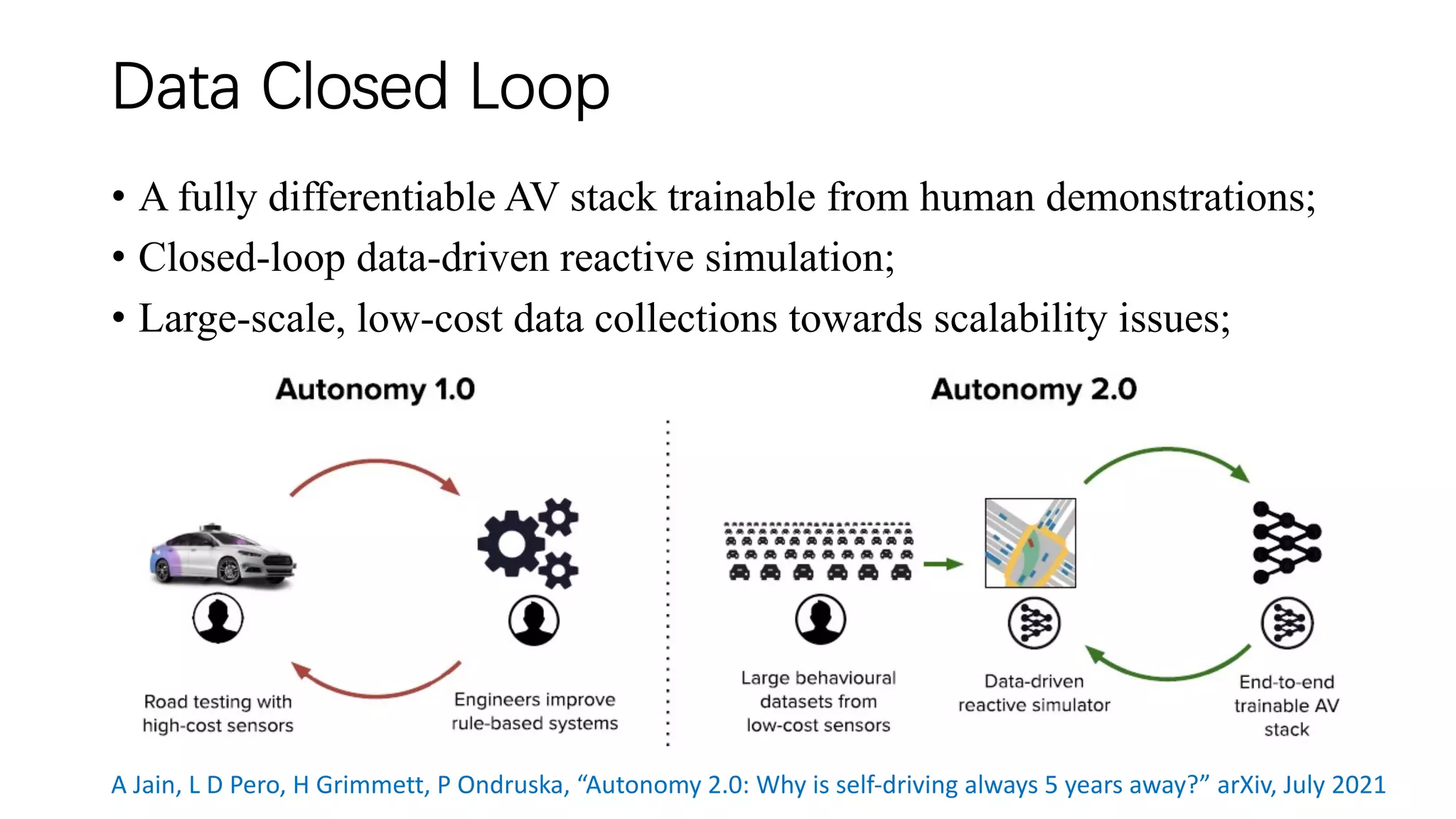

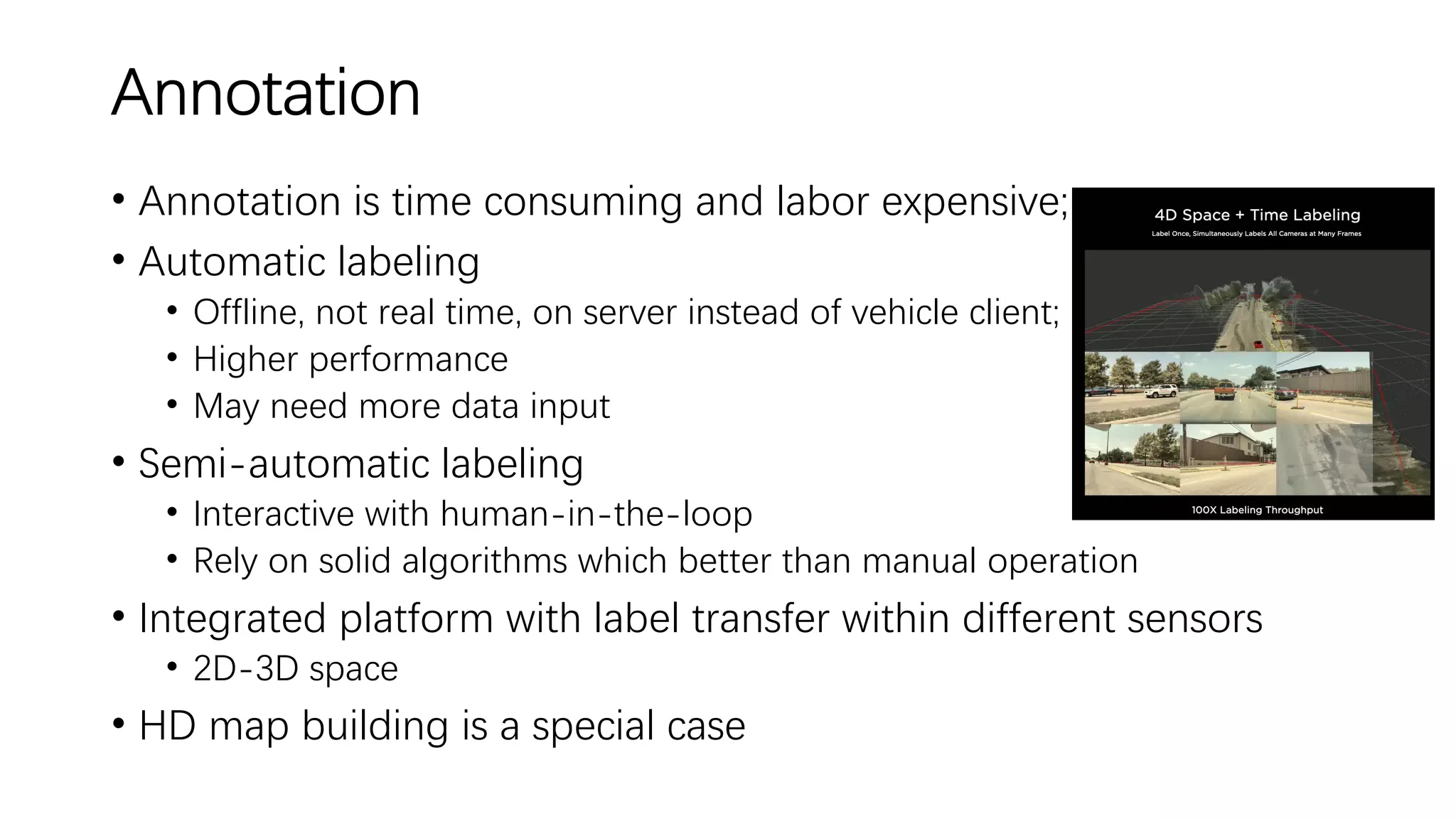

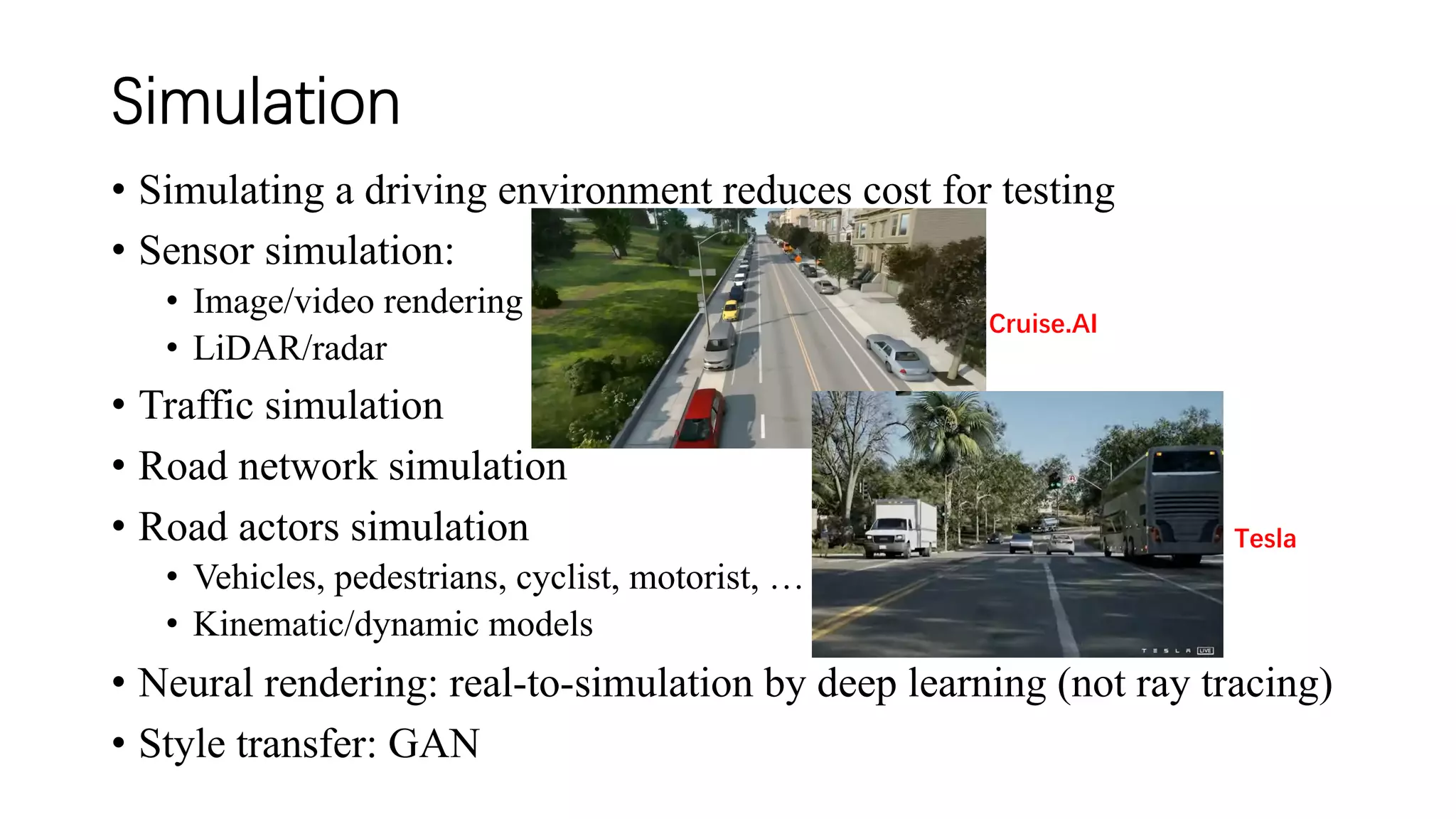

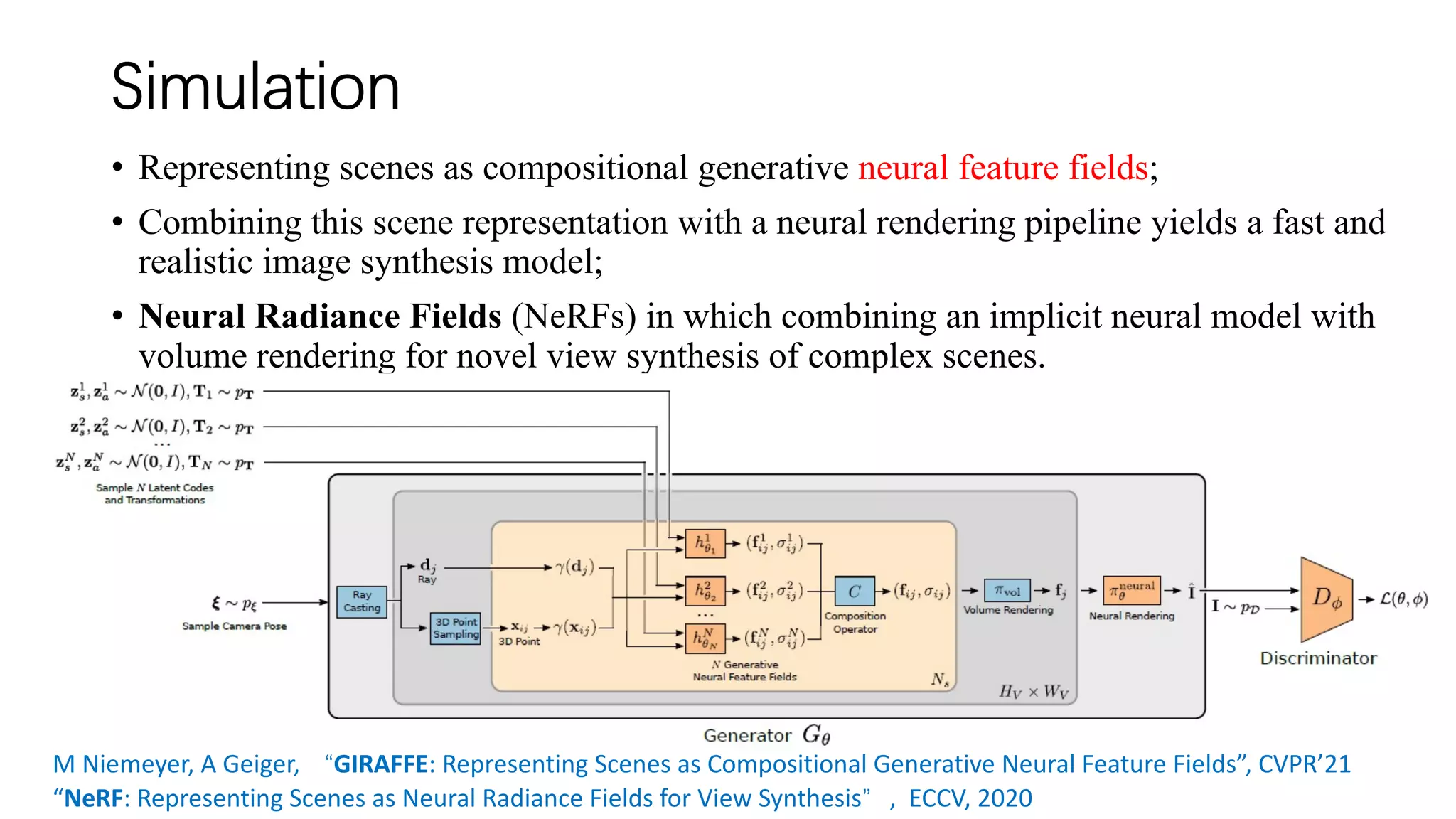

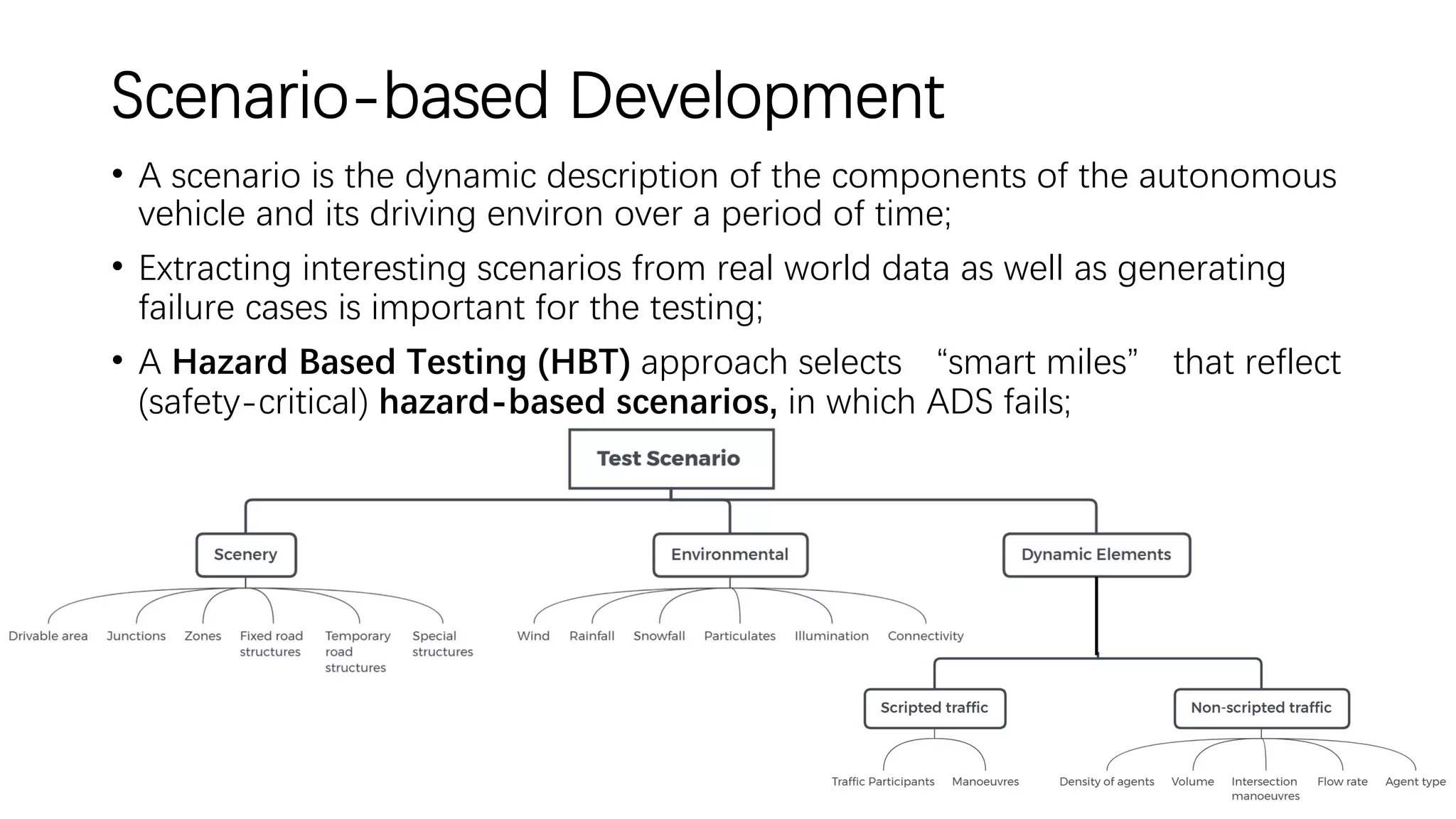

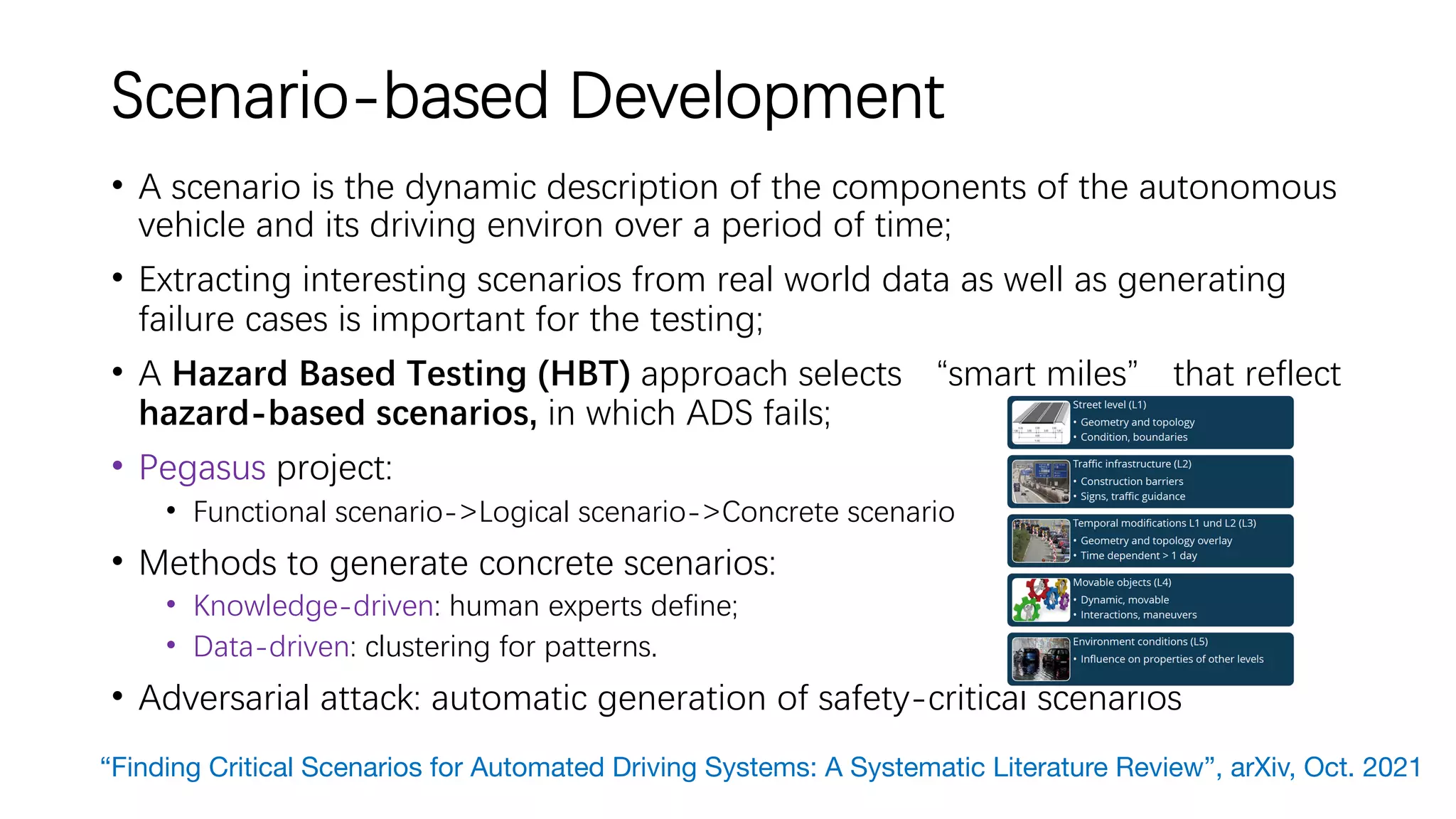

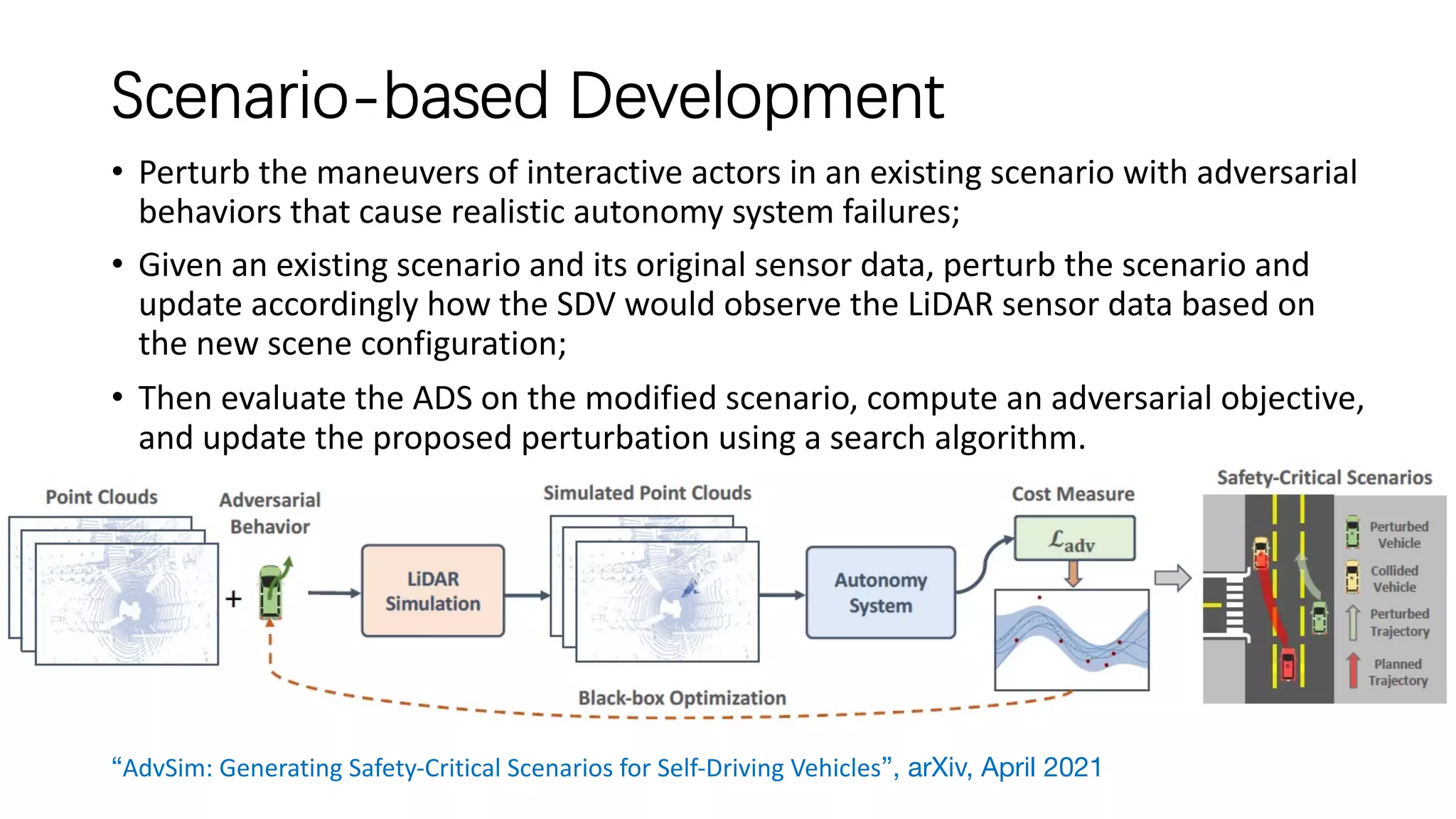

The document outlines the techniques and challenges of autonomous driving, covering perception, mapping, localization, prediction, and safety. It highlights the role of deep learning and the data closed loop in development, and addresses corner cases, system-level safety, and scenario-based testing. It also emphasizes developments in sensor technology and the operational design domain (ODD) as crucial to advancing autonomous driving systems.