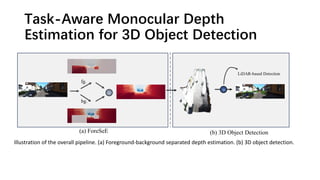

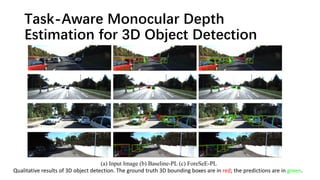

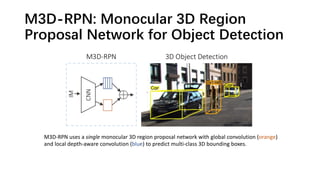

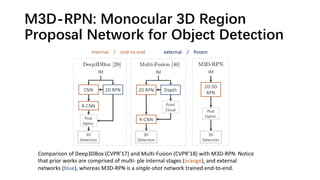

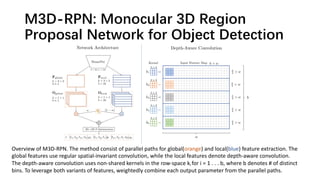

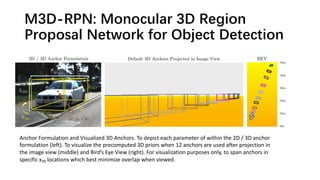

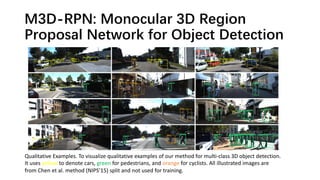

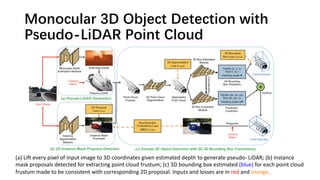

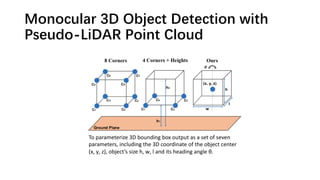

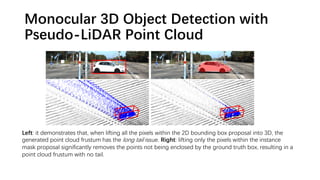

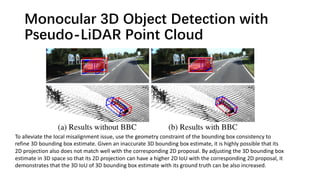

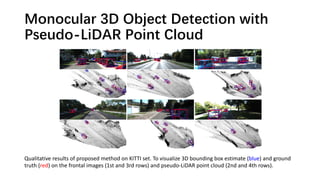

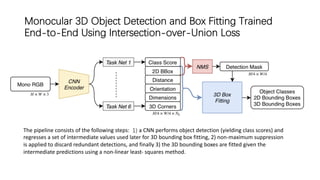

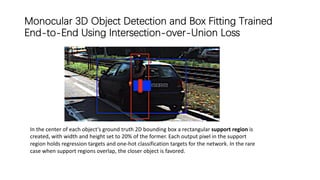

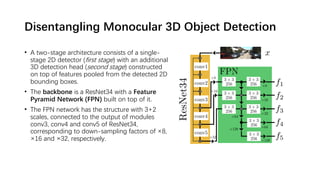

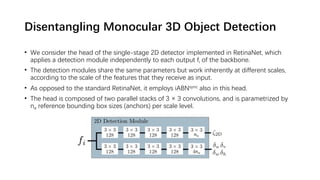

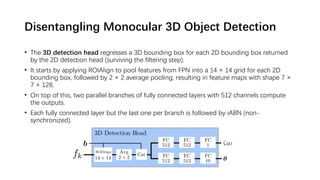

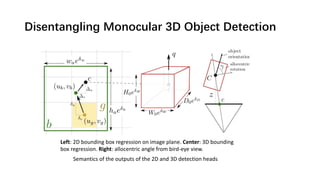

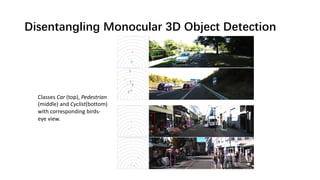

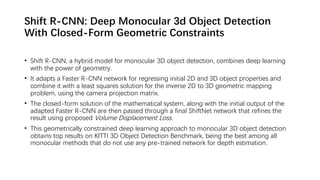

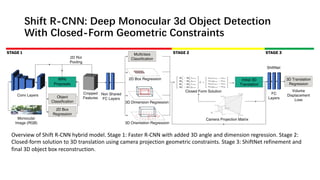

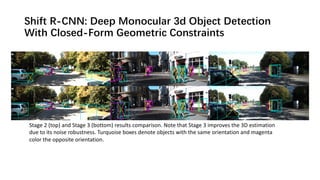

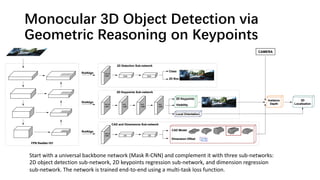

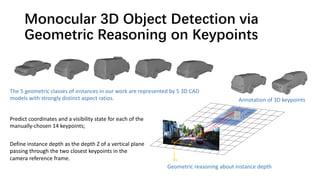

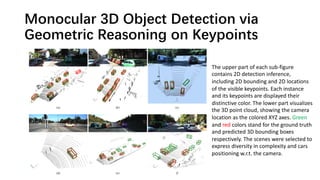

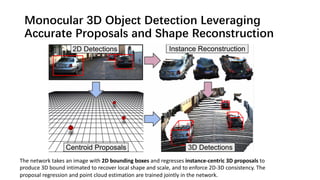

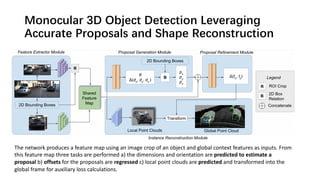

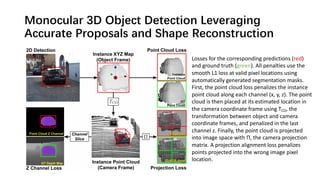

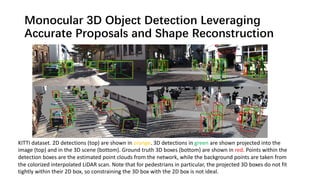

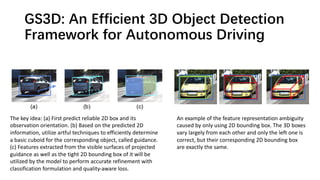

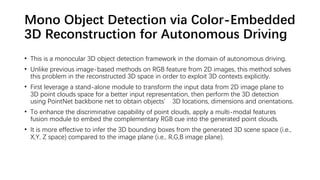

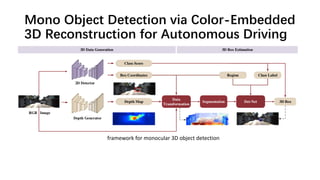

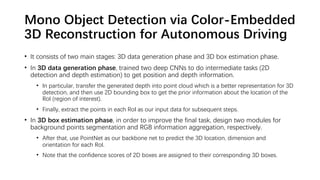

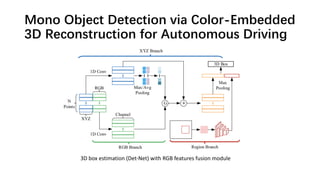

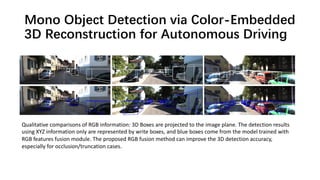

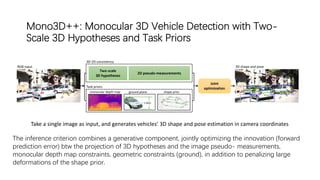

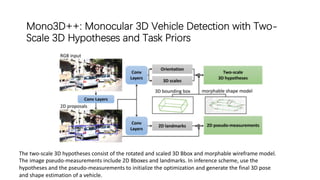

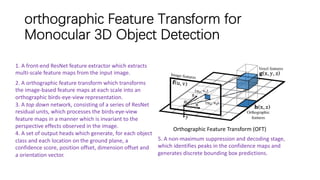

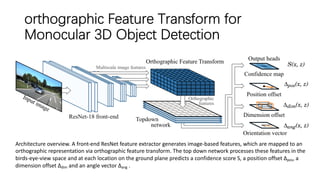

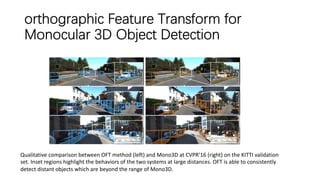

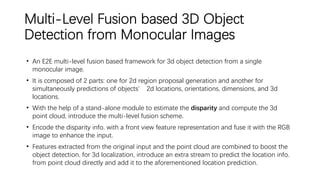

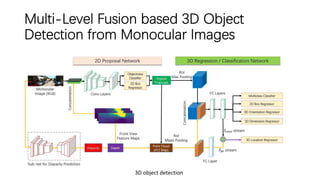

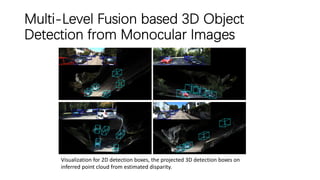

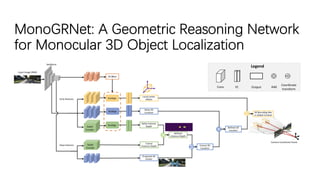

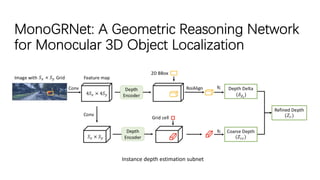

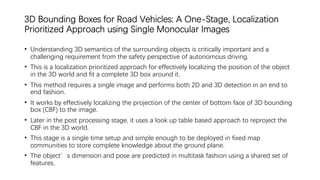

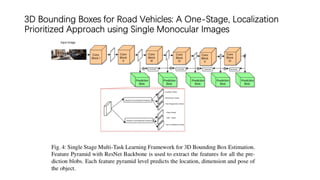

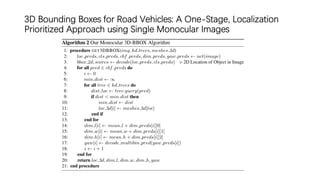

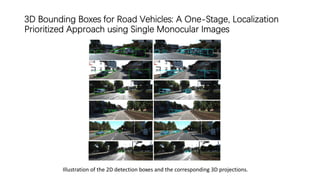

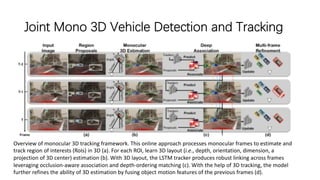

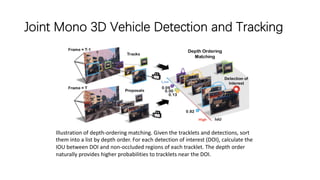

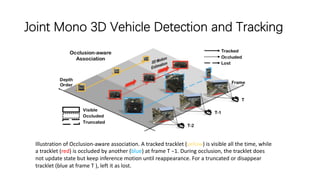

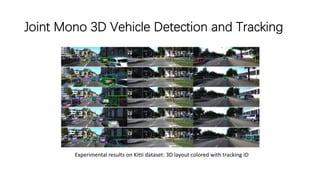

The document discusses advances in monocular 3D object detection for autonomous driving. It focuses on methods such as M3D-RPN and pseudo-LiDAR point clouds, which aim to improve 3D detection accuracy from a single 2D image. It stresses the importance of separating foreground from background during depth estimation and introduces techniques for more robust 3D object localization. It also critiques existing evaluation metrics and proposes ways to improve the performance of 3D detection systems.
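Pseudo-LiDAR approaches turn a monocular depth prediction into a 3D point cloud by back-projecting every pixel through the pinhole camera model: for pixel $(u, v)$ with depth $z$, $x = (u - c_x)\,z / f_x$ and $y = (v - c_y)\,z / f_y$. The sketch below illustrates only that back-projection step. It assumes a dense depth map in metres, known intrinsics `fx`, `fy`, `cx`, `cy`, and the camera frame convention (x right, y down, z forward). The function name `depth_to_pseudo_lidar` is hypothetical, and the transform into the LiDAR frame (which requires camera-to-LiDAR extrinsics) is deliberately omitted.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_to_pseudo_lidar(
    depth: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Back-project a dense depth map into 3D points in the camera frame.

    Hypothetical helper for illustration: assumes depth values are in metres
    and that non-positive values mark missing or invalid depth.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):      # v: pixel row index
        for u, z in enumerate(row):      # u: pixel column index, z: depth
            if z <= 0:                   # skip pixels without a valid depth
                continue
            x = (u - cx) * z / fx        # horizontal offset from the principal point
            y = (v - cy) * z / fy        # vertical offset from the principal point
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Toy 2x2 depth map; the zero entry is treated as missing depth.
    demo_depth = [[10.0, 10.0], [0.0, 20.0]]
    for p in depth_to_pseudo_lidar(demo_depth, fx=100.0, fy=100.0, cx=0.5, cy=0.5):
        print(p)
```

The resulting point list can then be fed to a point-cloud 3D detector, which is the core idea behind pseudo-LiDAR. Because every pixel becomes a point, depth errors at object boundaries spread foreground points into the background, one reason foreground/background separation during depth estimation matters.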