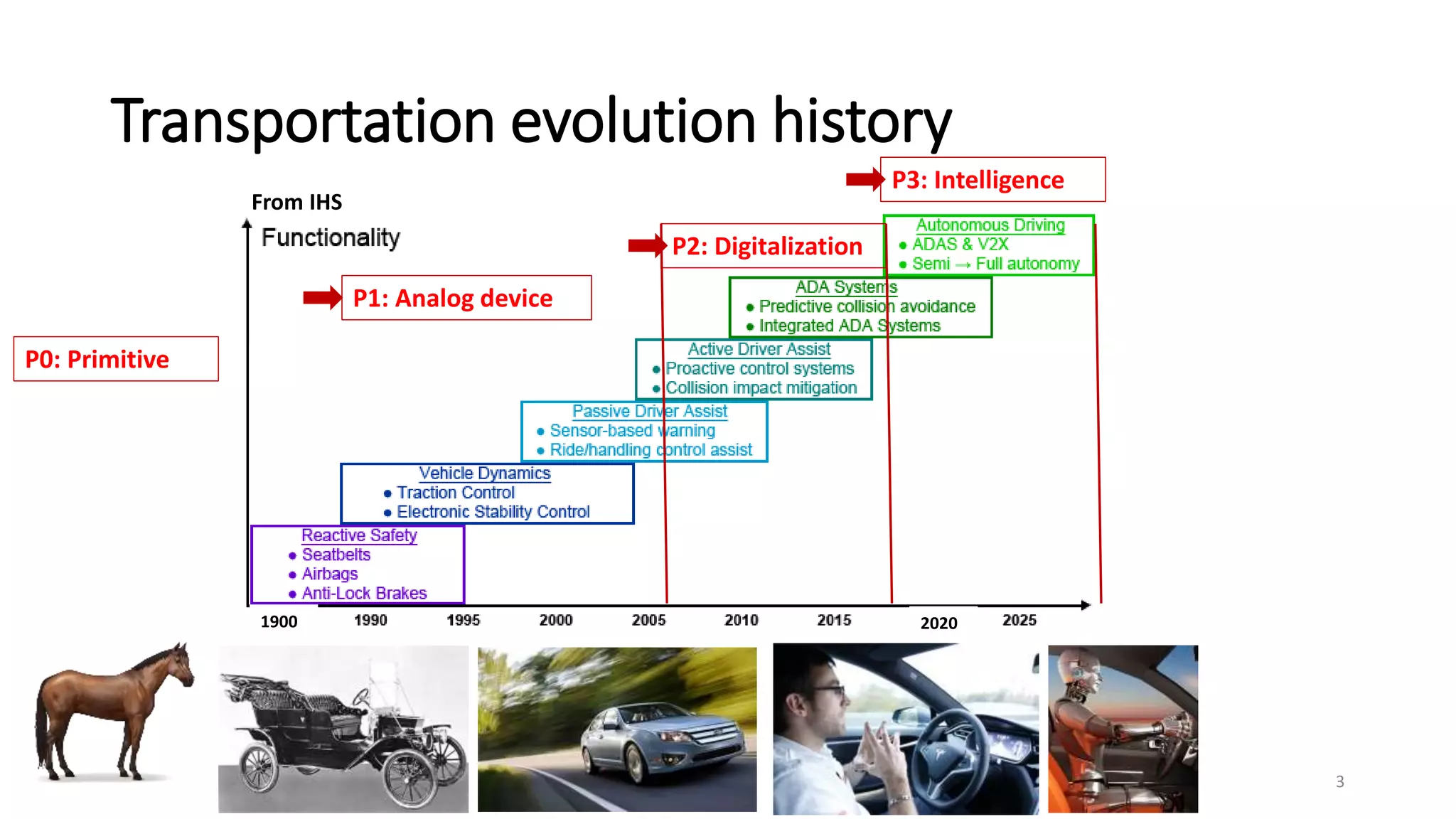

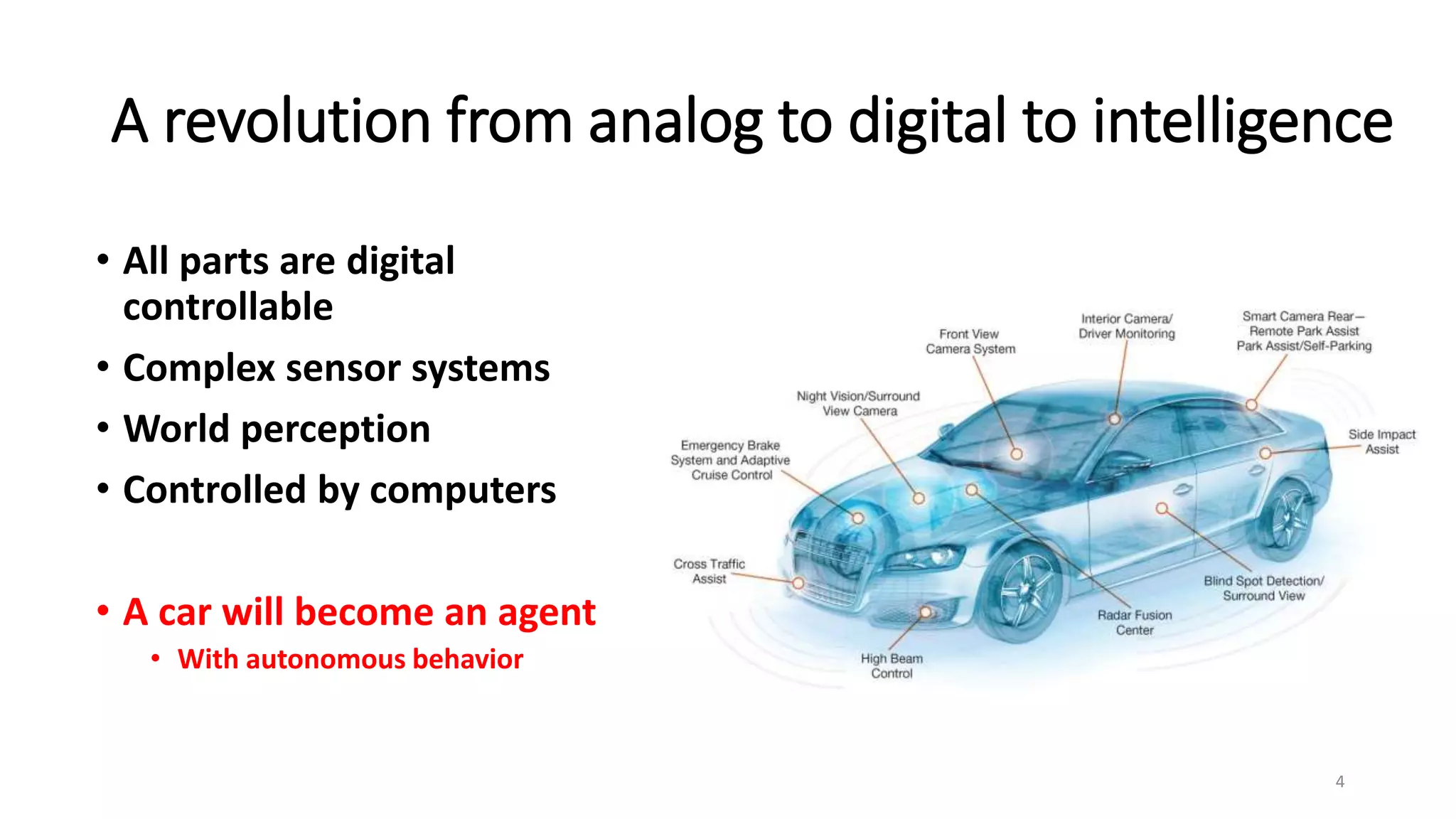

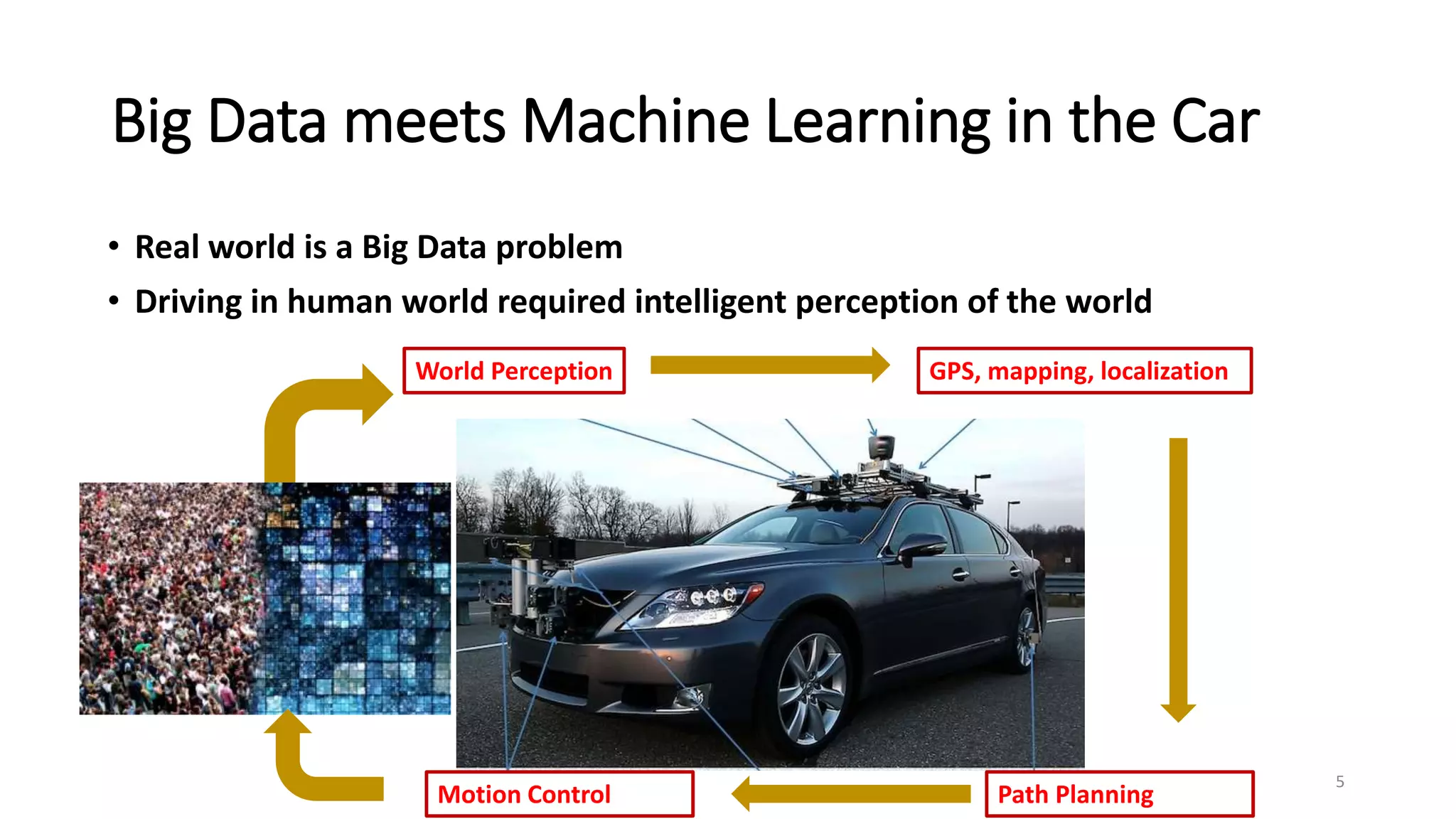

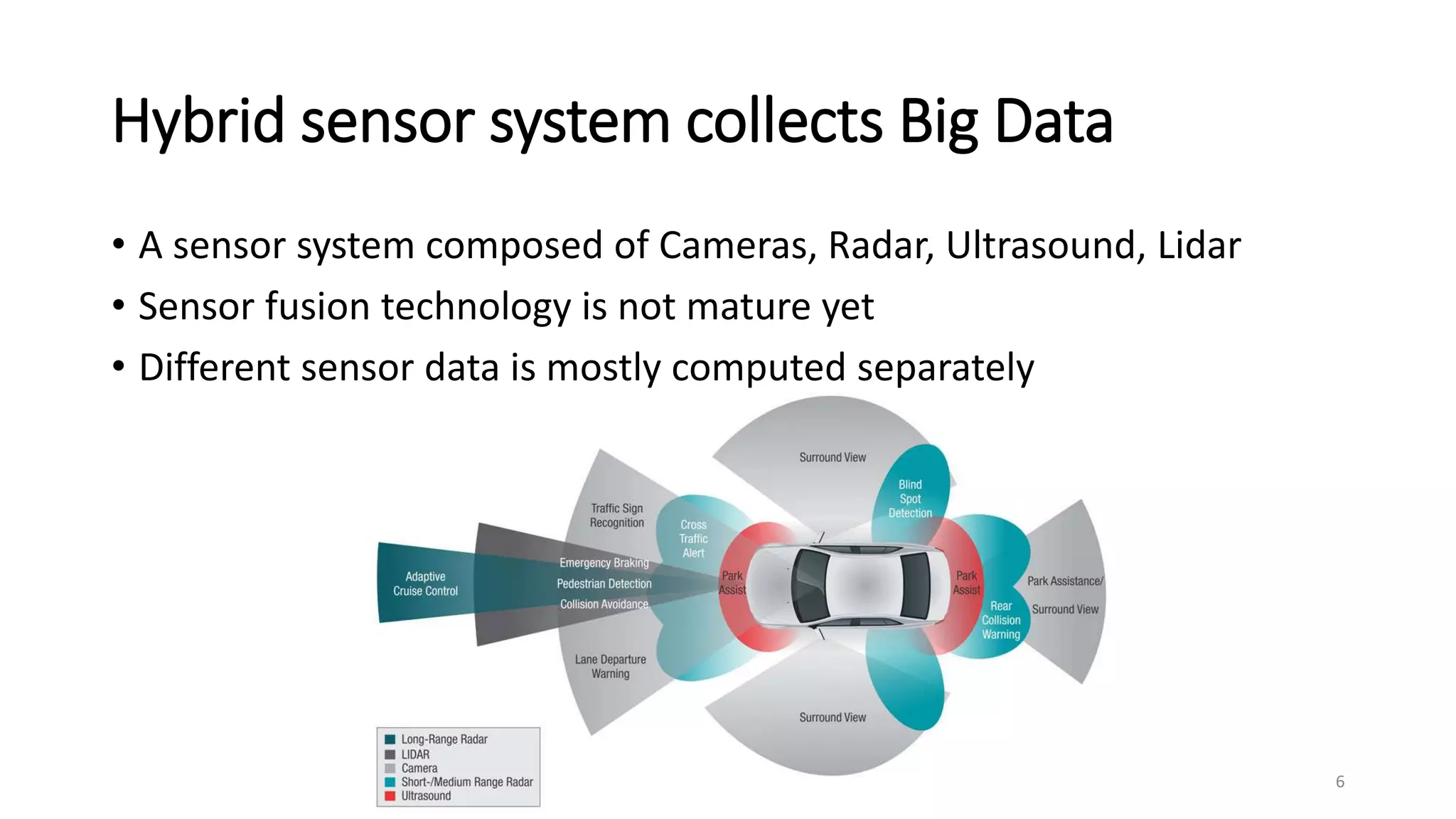

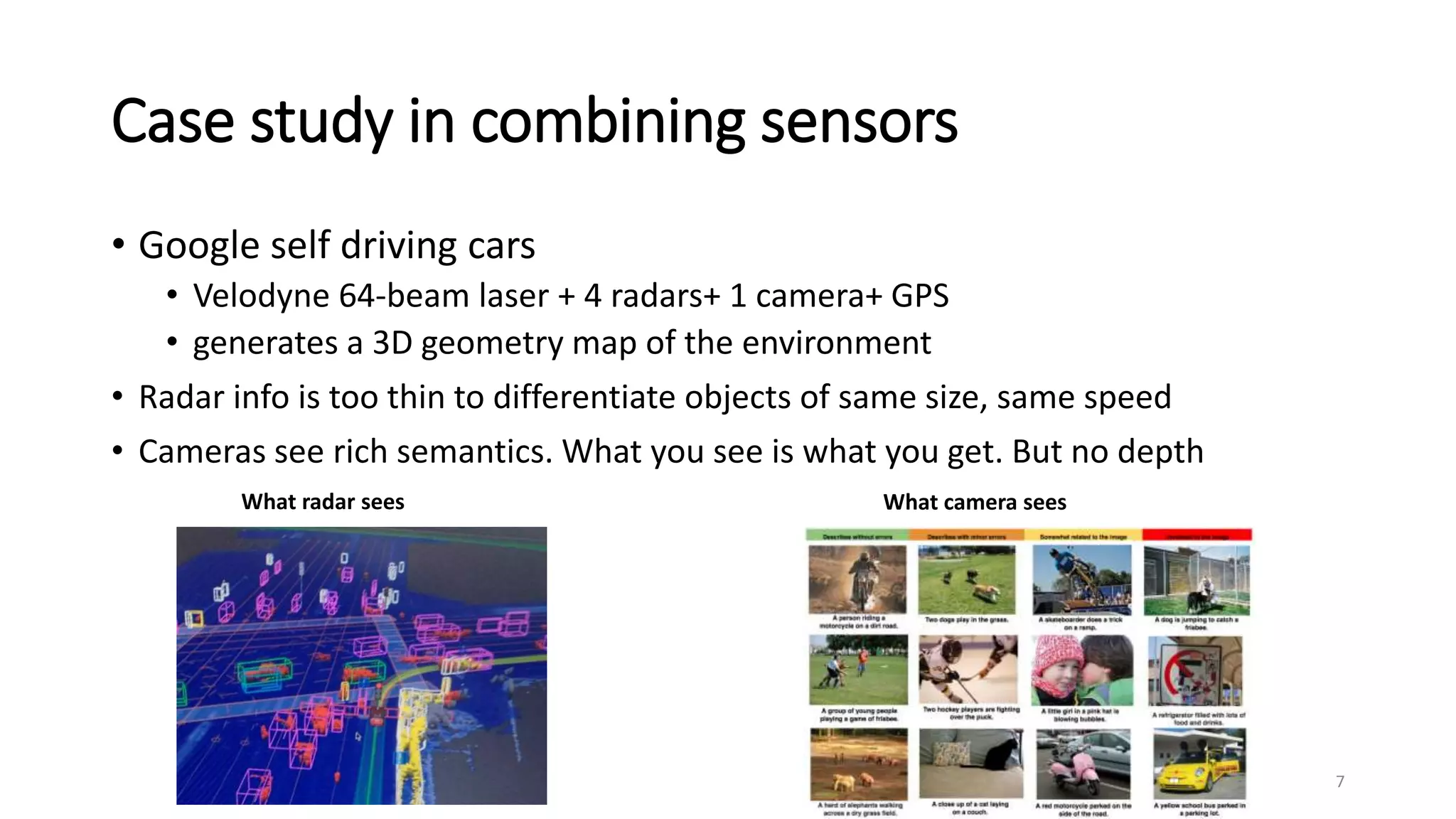

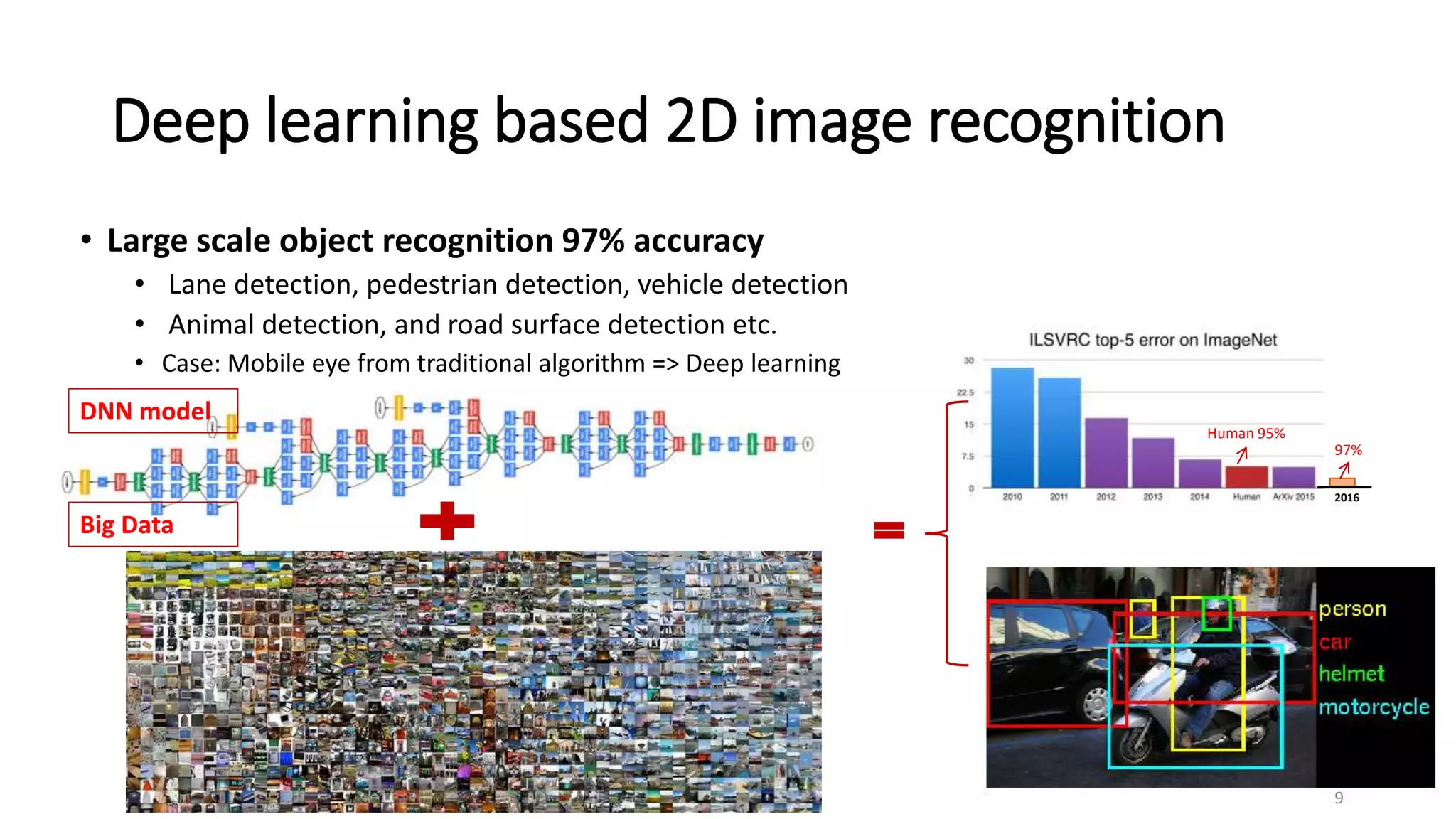

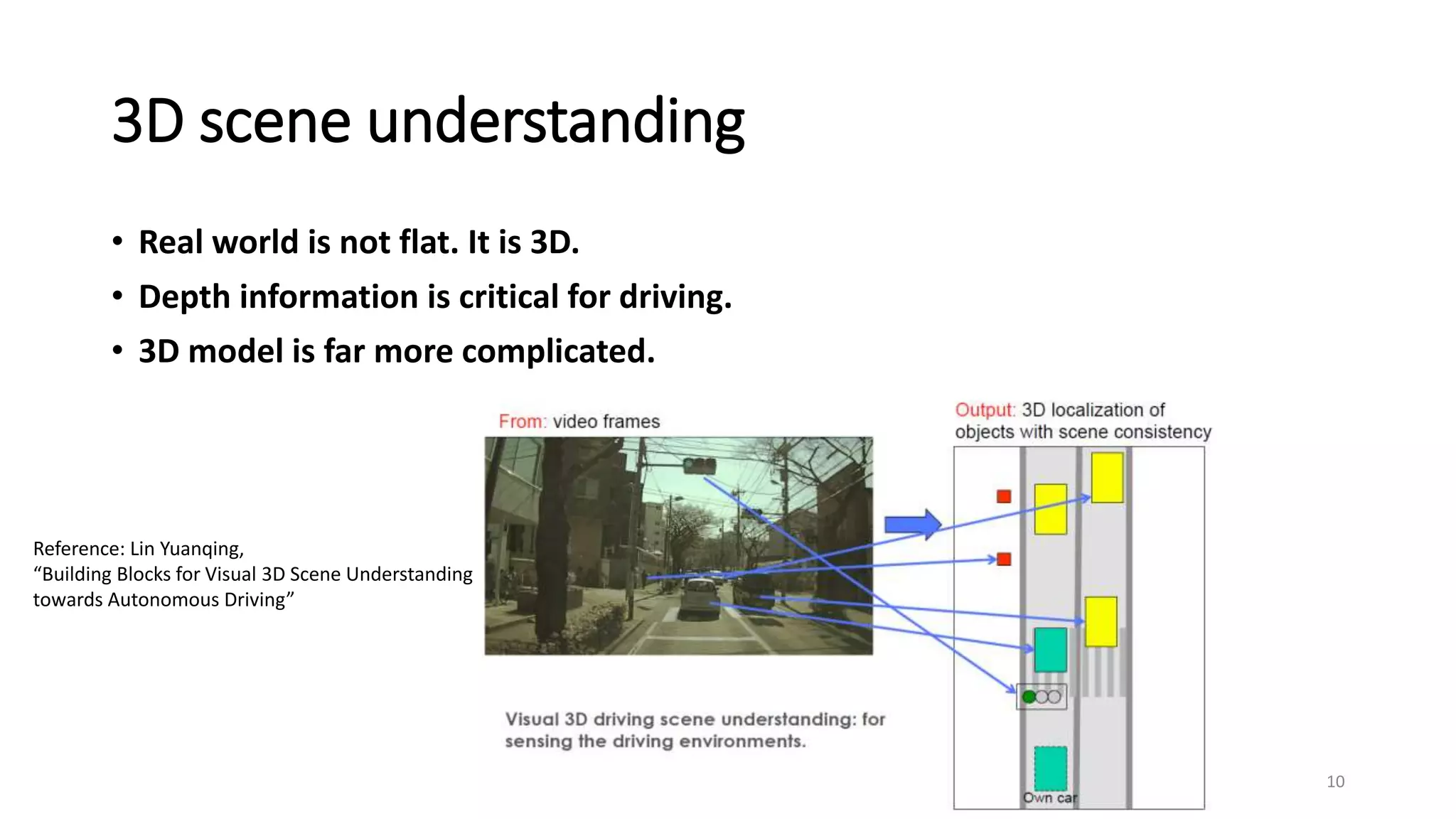

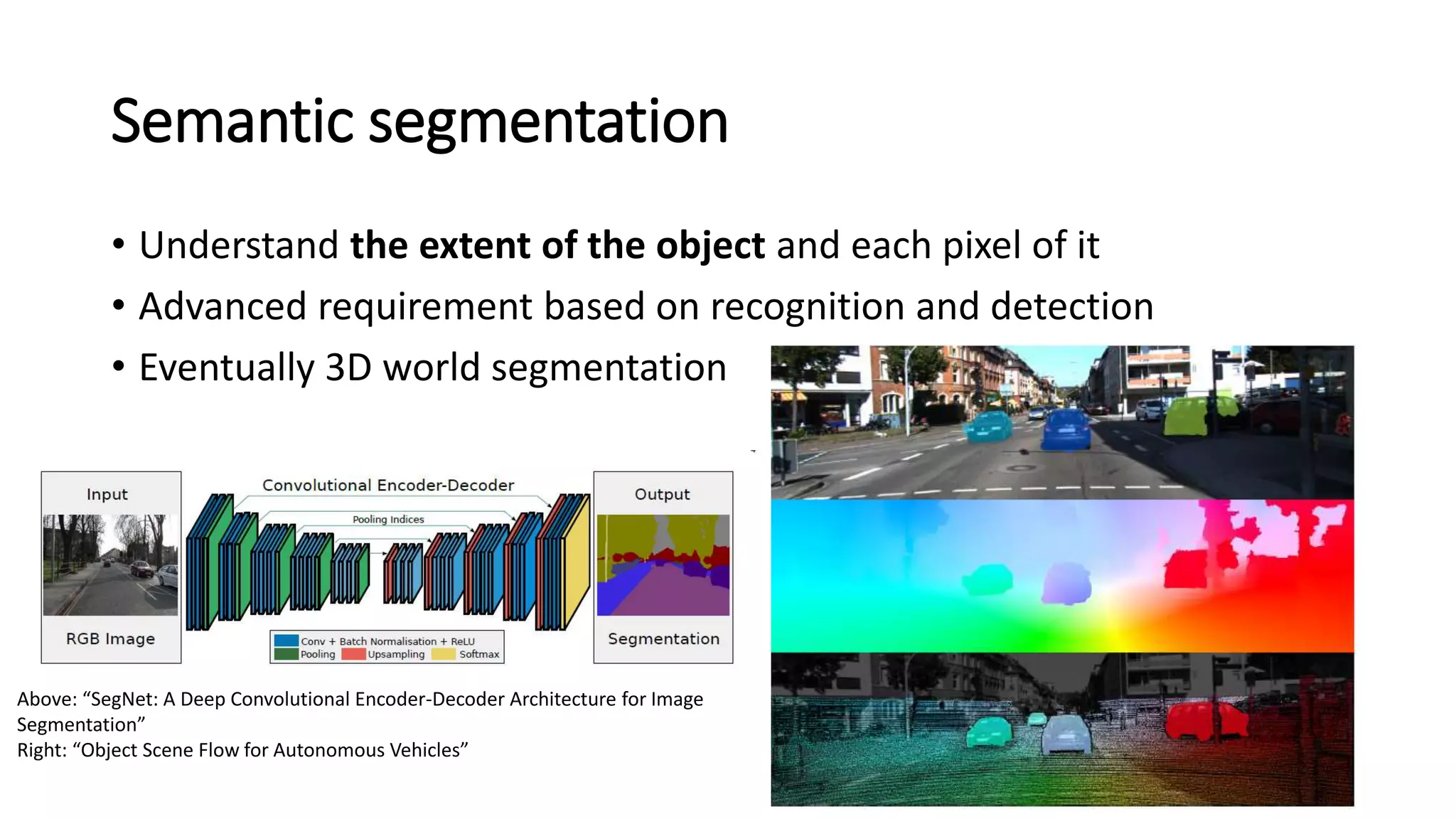

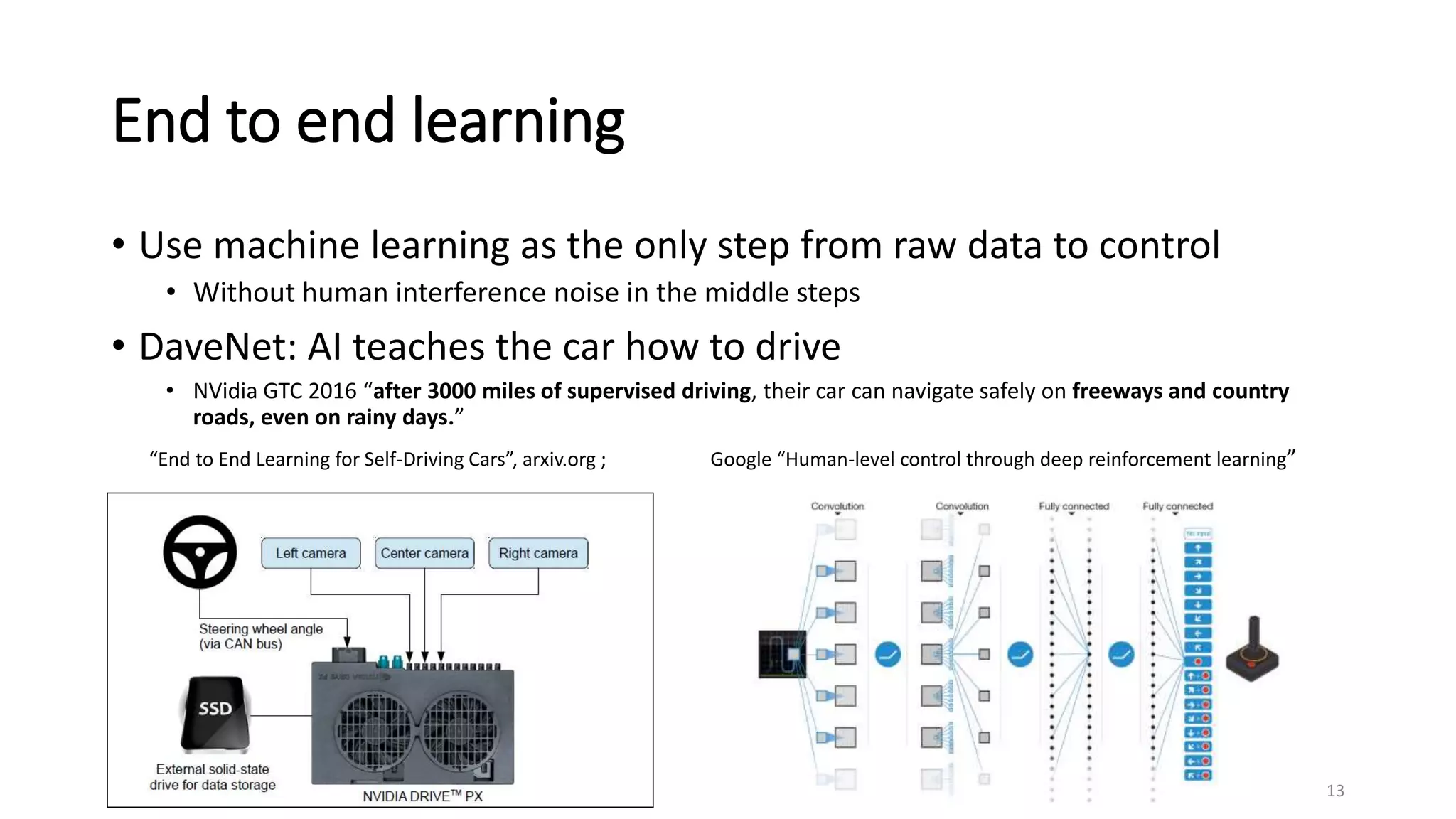

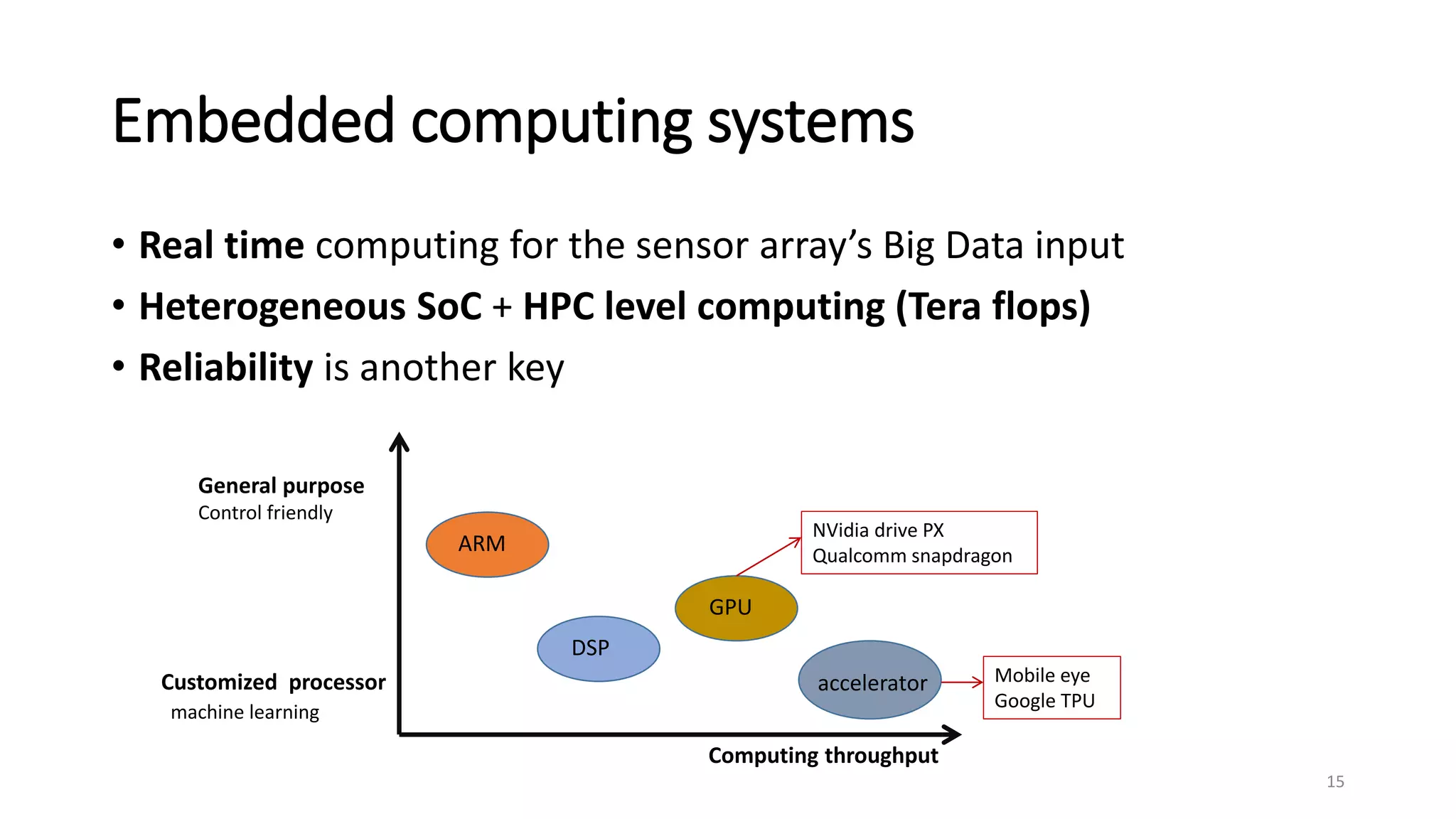

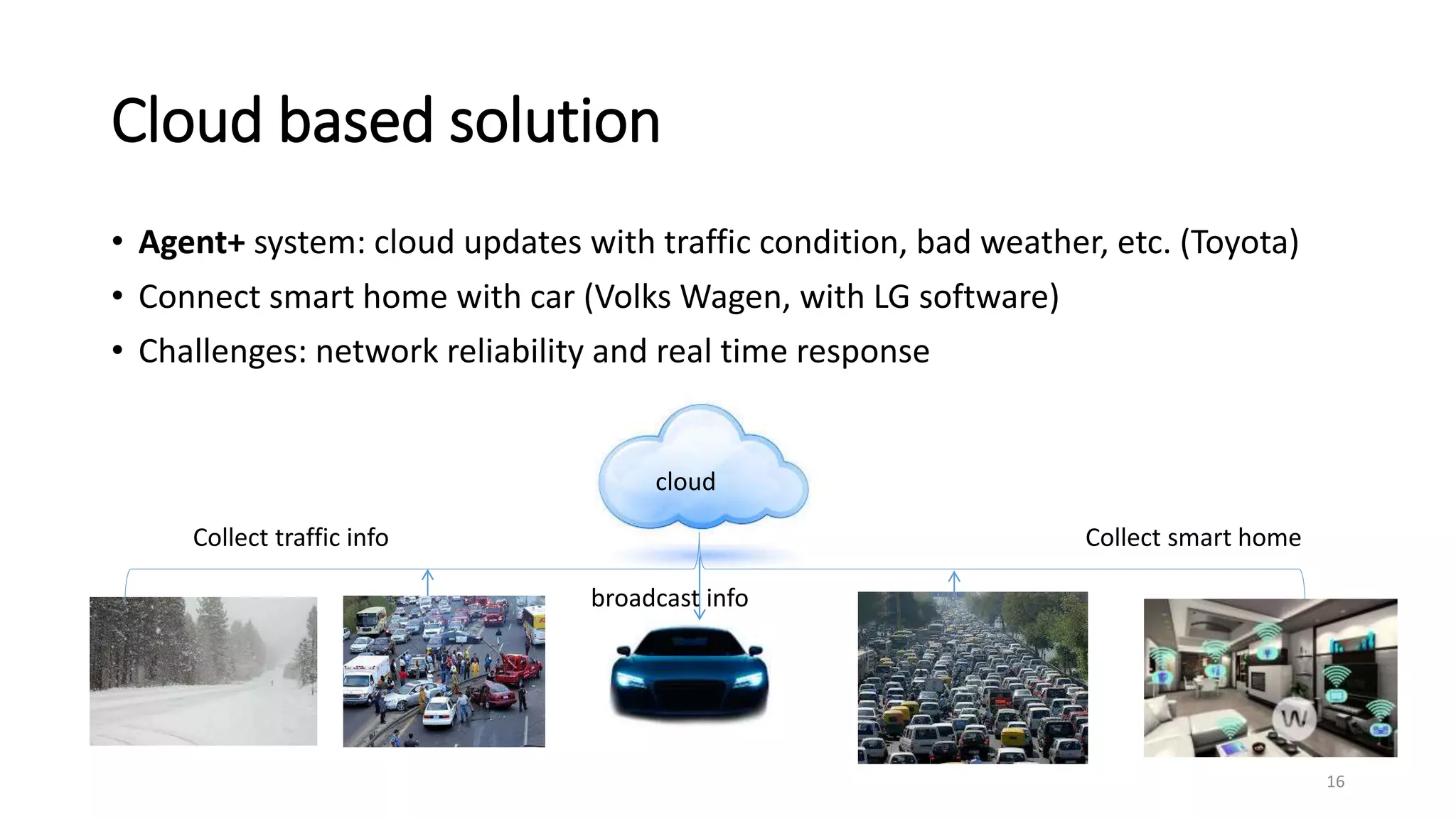

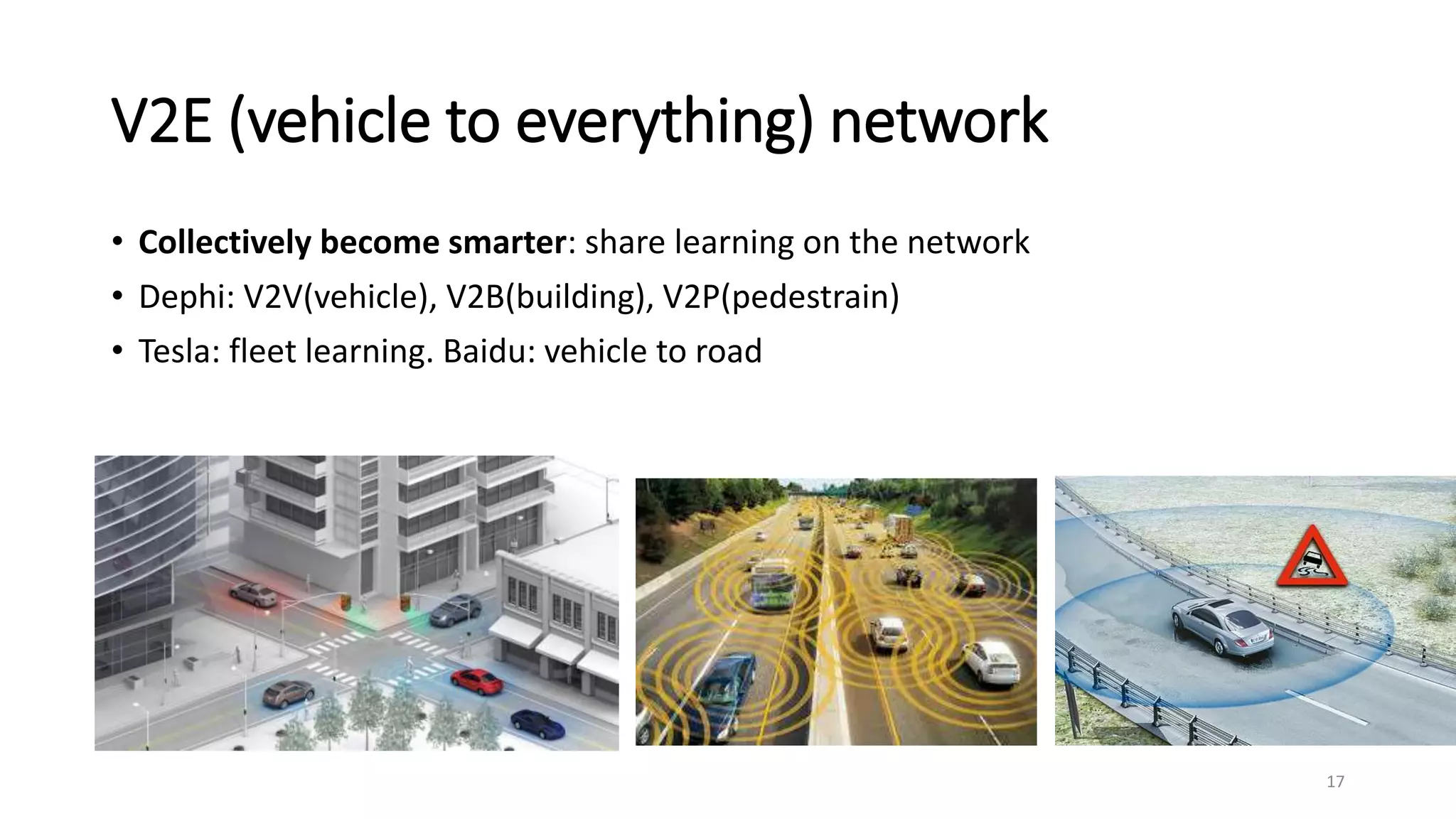

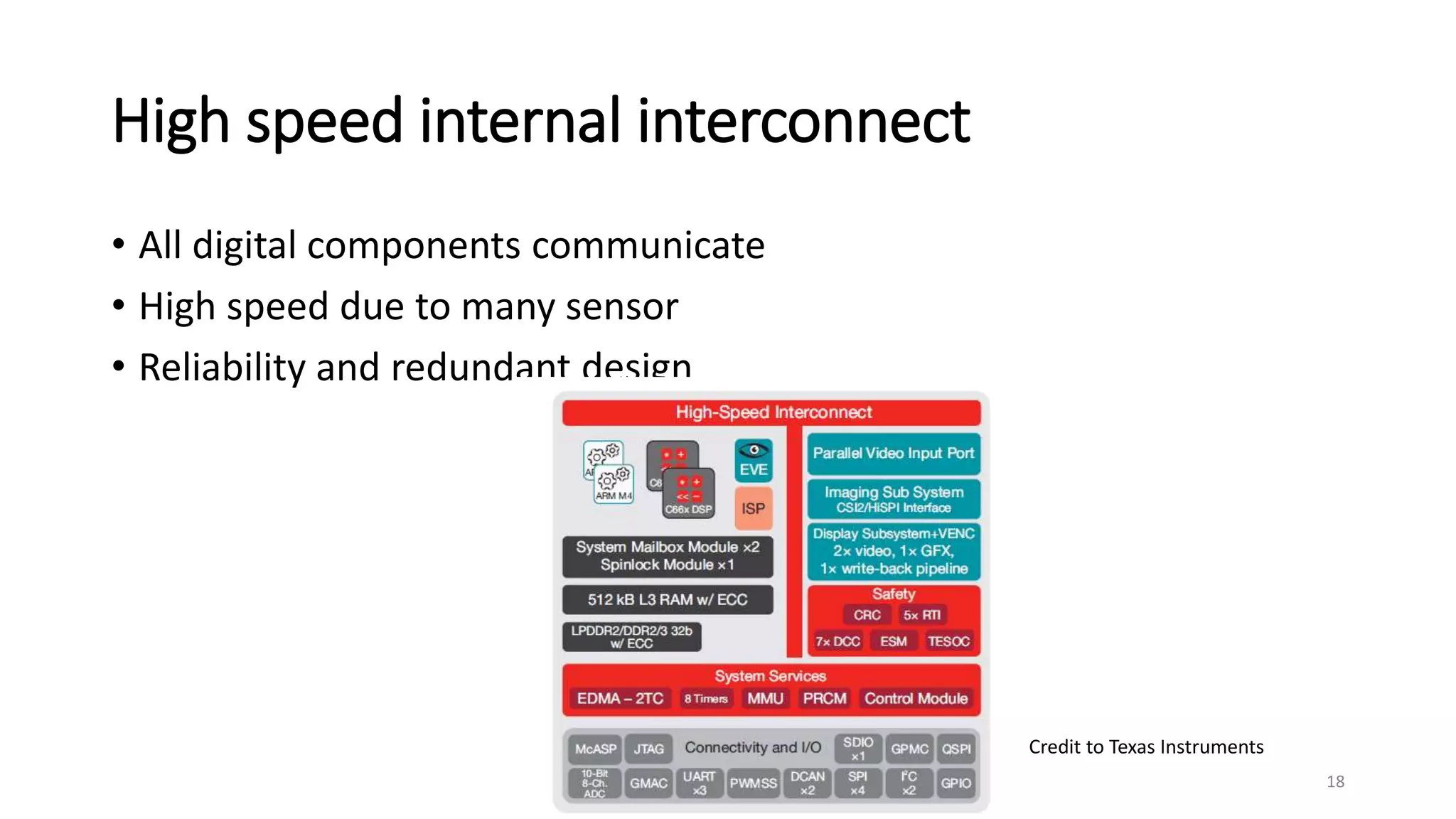

The document discusses trends in autonomous driving, focusing on the challenges that arise when big data meets machine learning in cars. It outlines how a vehicle's sensor systems (cameras, radar, ultrasonic sensors, and lidar) collect large volumes of data. Machine learning then turns this data into a model of the real world using techniques such as object recognition, 3D scene understanding, and semantic segmentation, along with reinforcement learning. Autonomous vehicles will also need powerful embedded computing, plus connectivity through vehicle-to-vehicle and cloud networks.