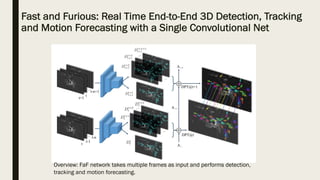

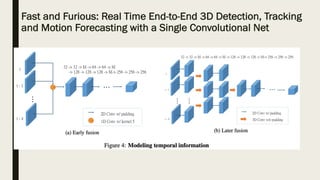

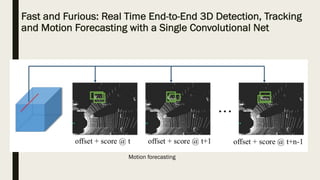

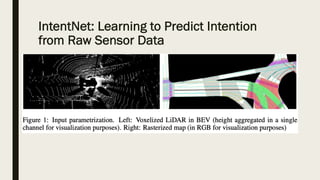

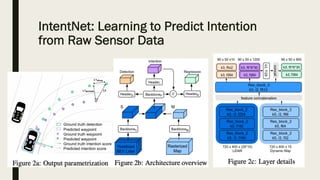

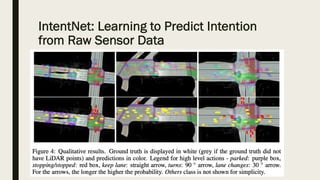

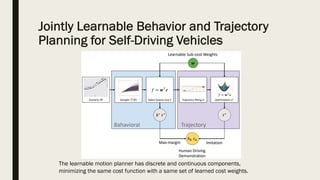

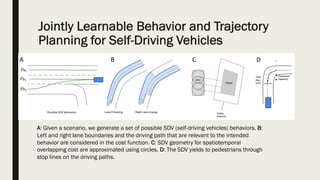

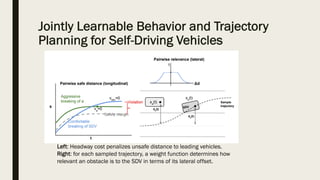

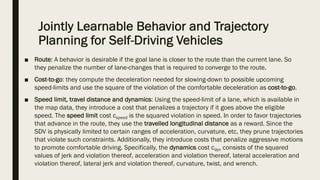

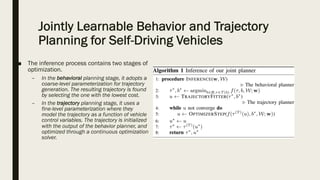

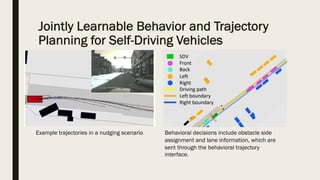

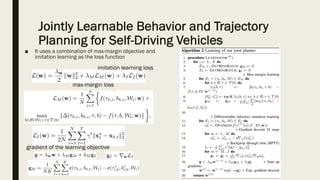

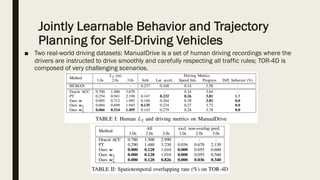

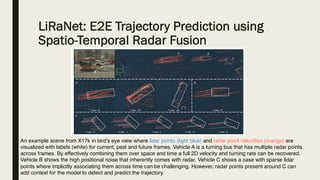

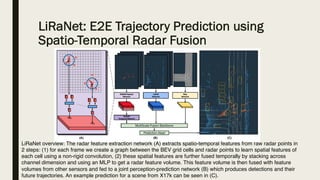

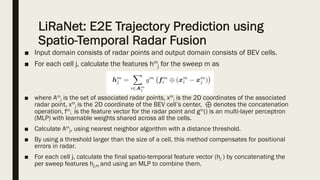

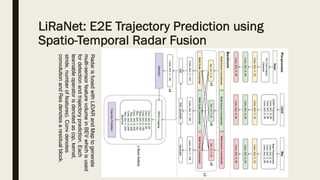

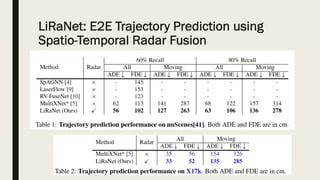

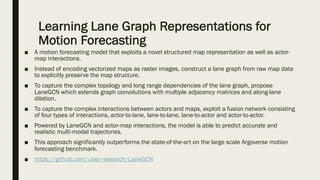

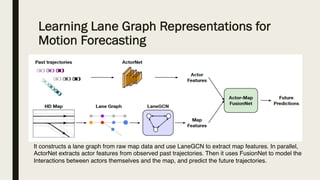

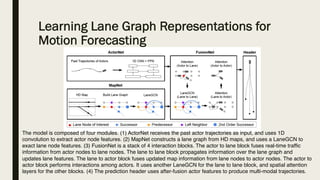

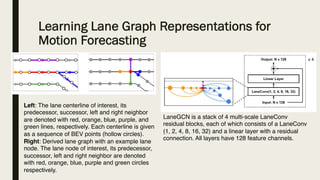

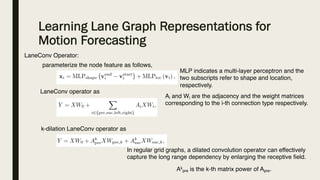

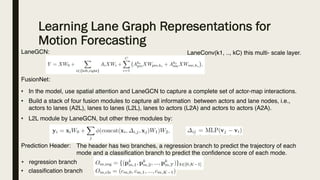

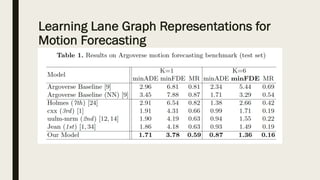

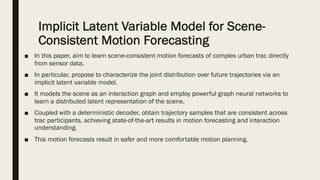

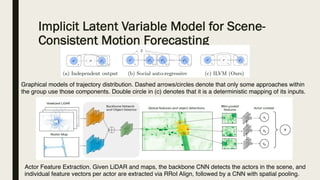

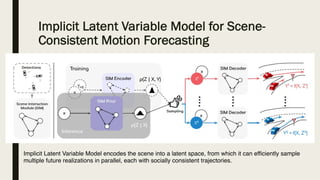

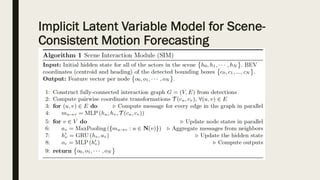

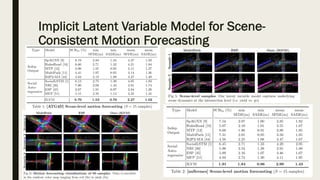

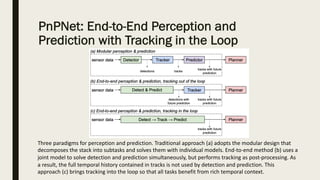

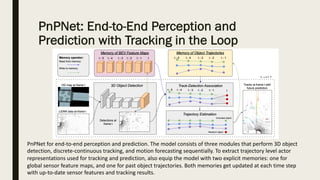

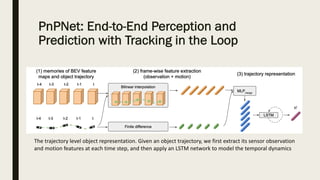

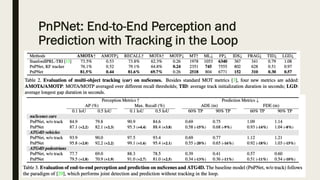

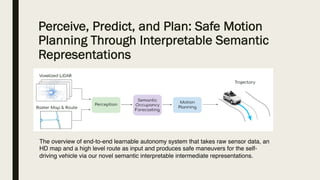

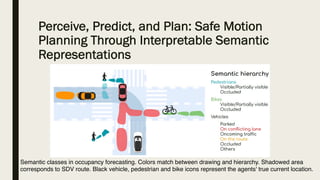

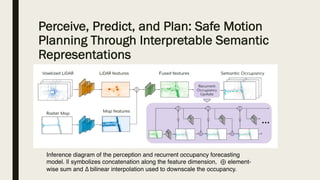

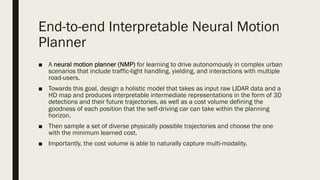

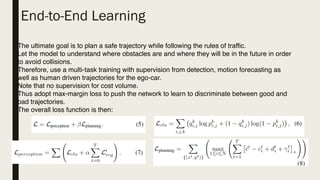

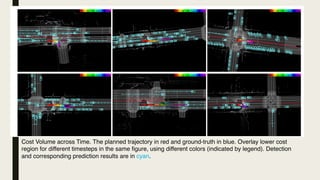

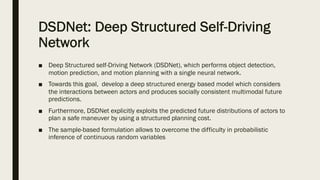

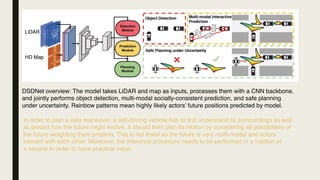

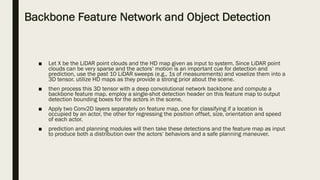

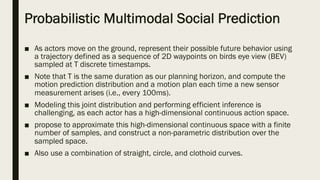

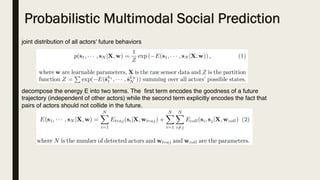

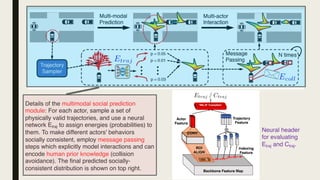

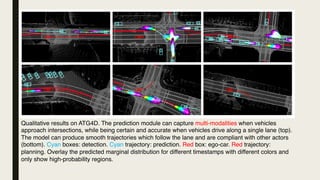

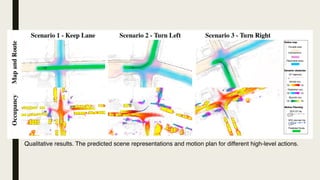

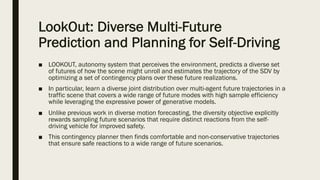

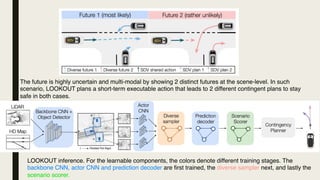

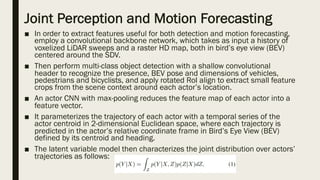

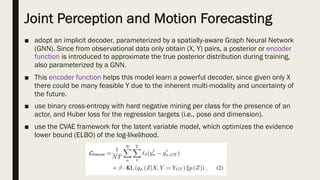

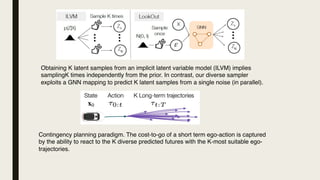

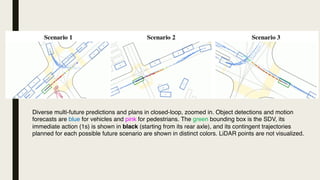

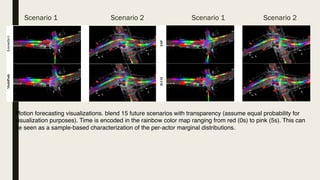

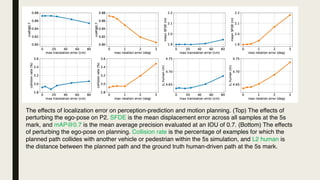

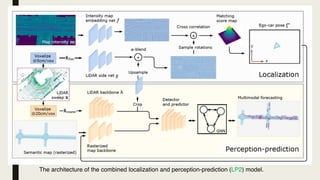

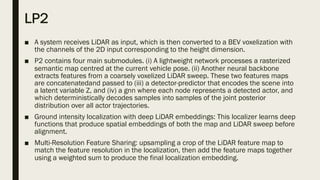

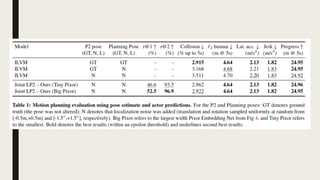

This document surveys approaches to motion forecasting, perception, and planning for self-driving vehicles. It covers Fast and Furious, which performs 3D detection, tracking, and motion forecasting in real time, and IntentNet, which predicts the intentions of other road users. It highlights frameworks that integrate behavior and trajectory planning, such as jointly learnable planners, and the LaneGCN model, which operates on lane graph representations of the map to improve motion forecasting. It also covers the fusion of radar and lidar data and the use of implicit latent variable models to produce scene-consistent motion predictions.