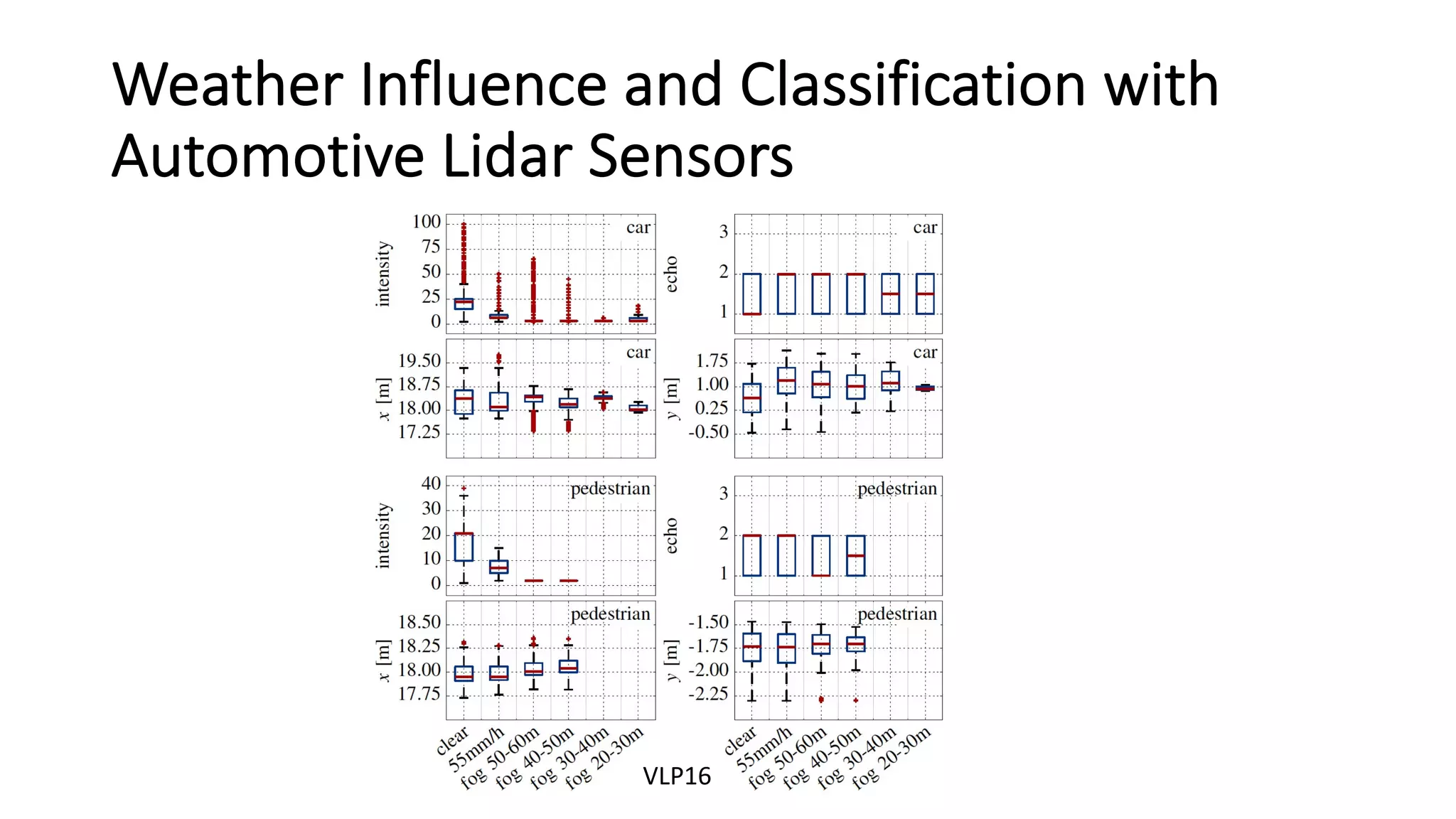

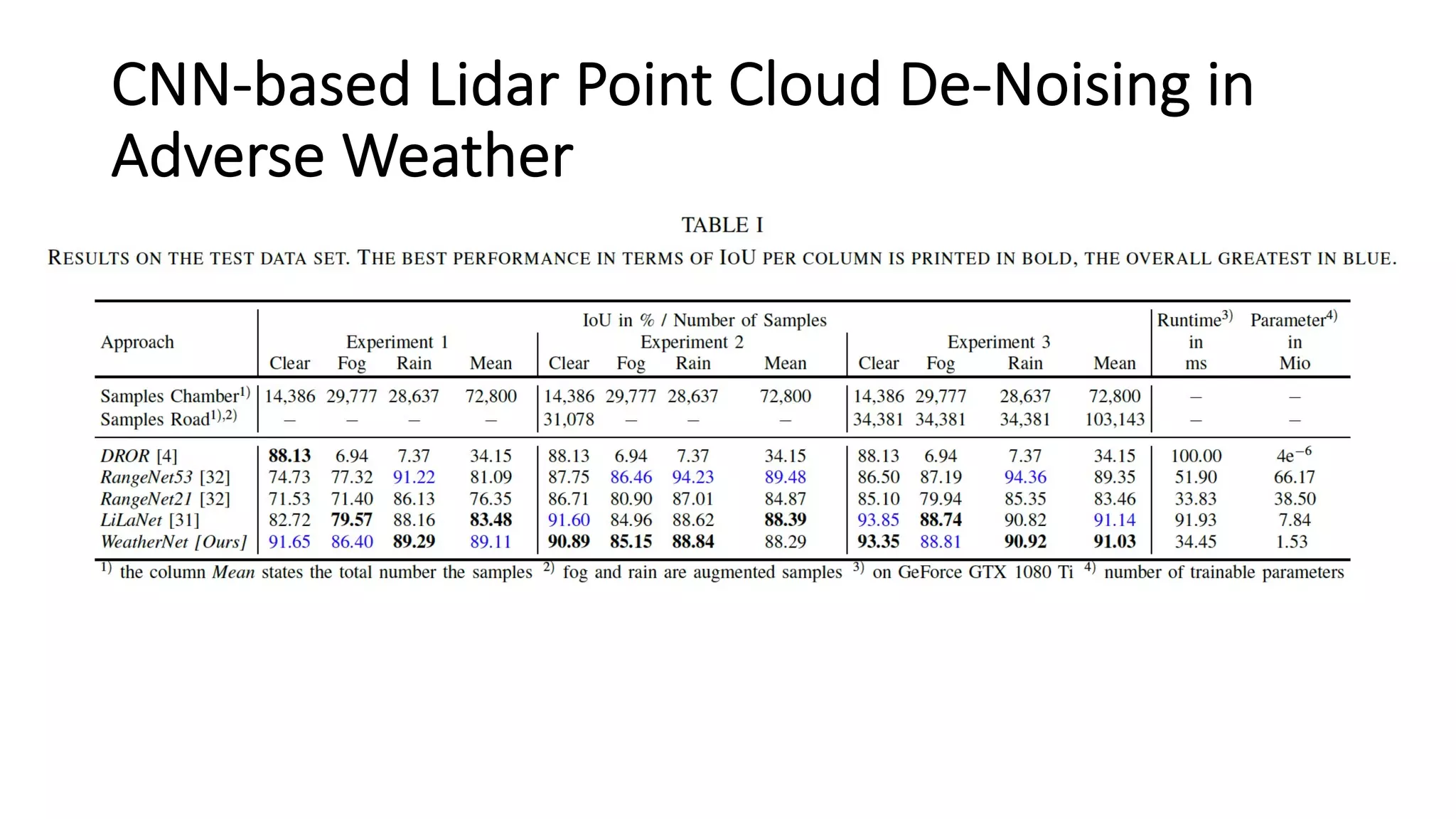

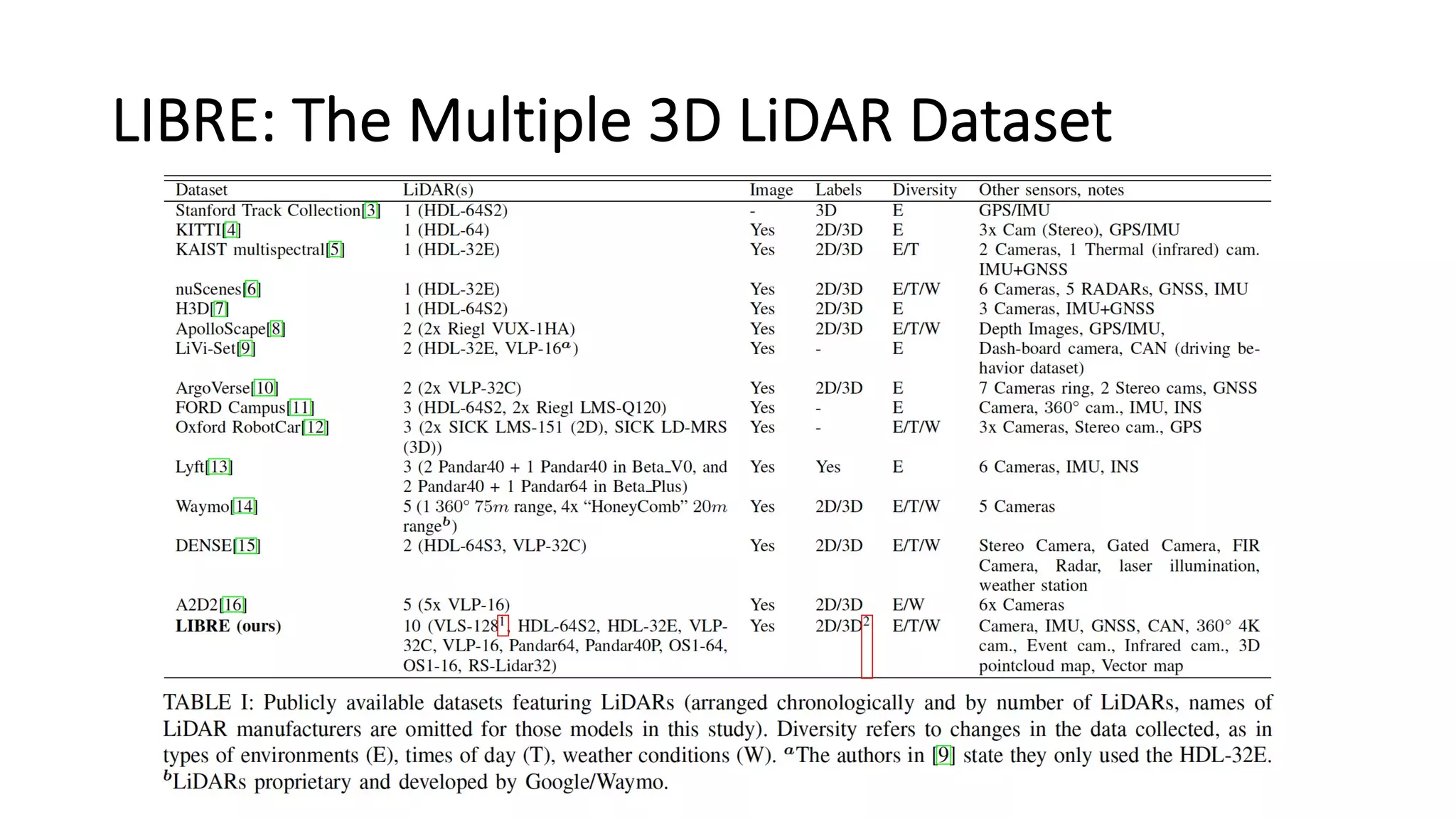

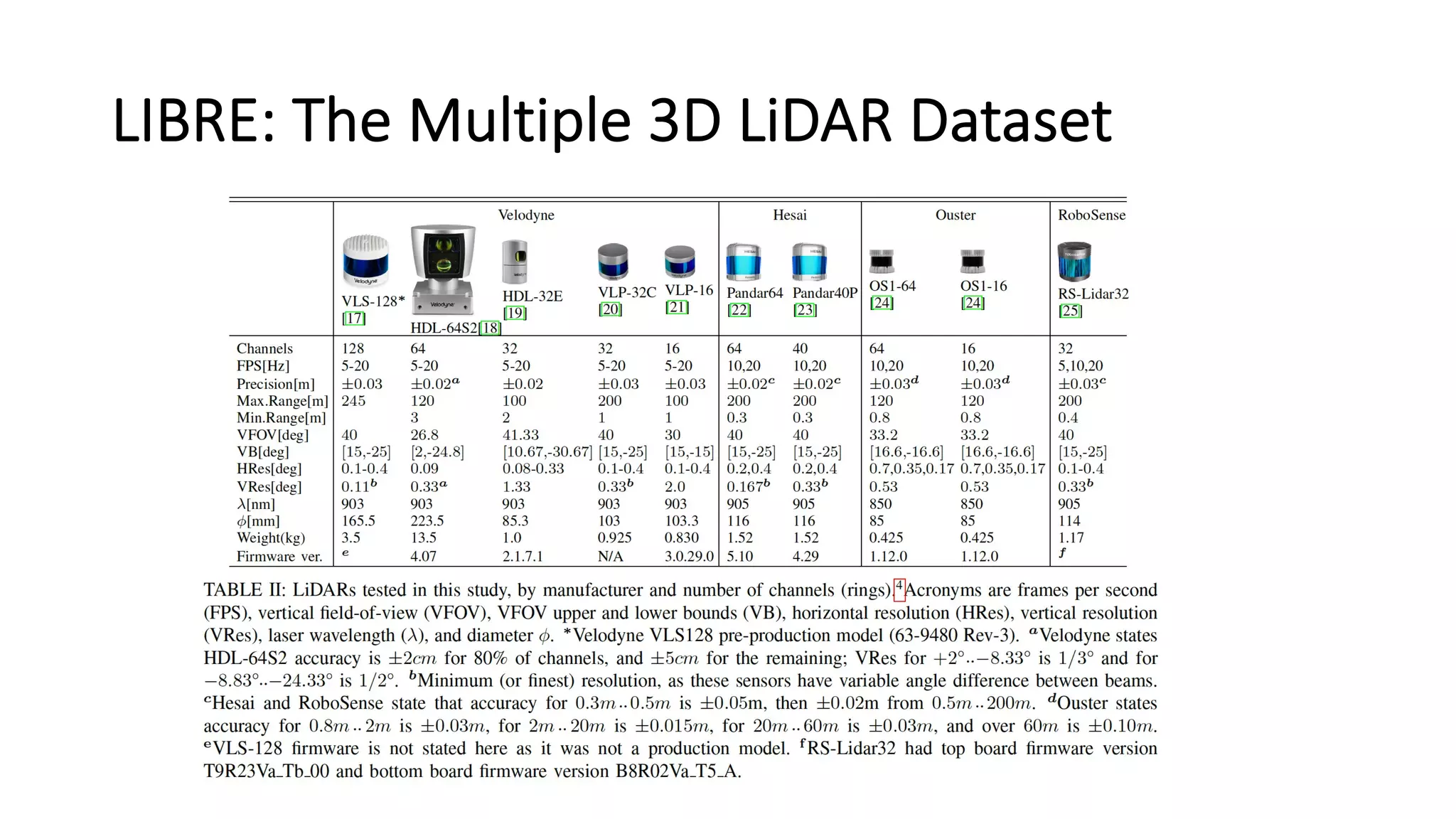

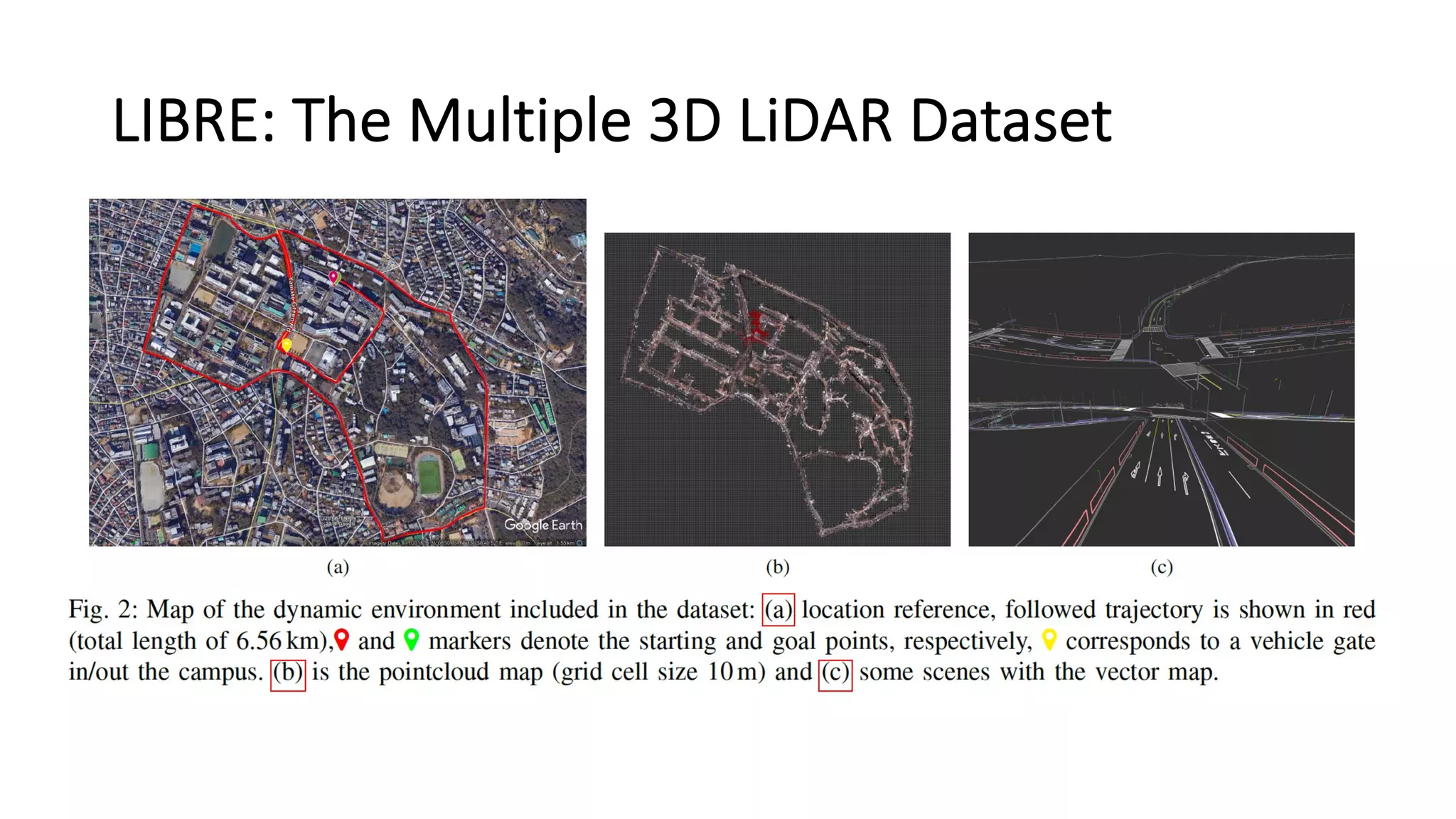

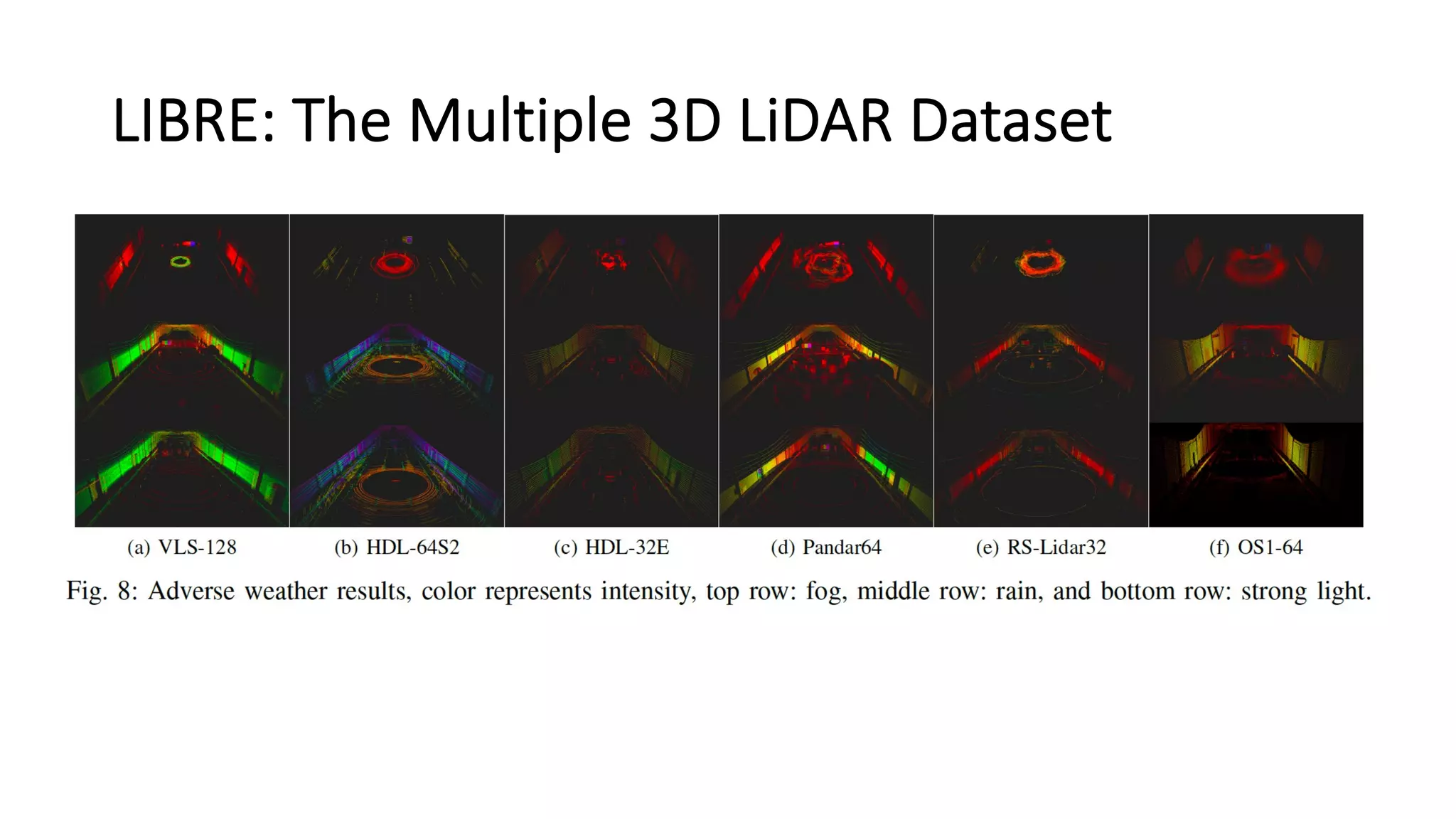

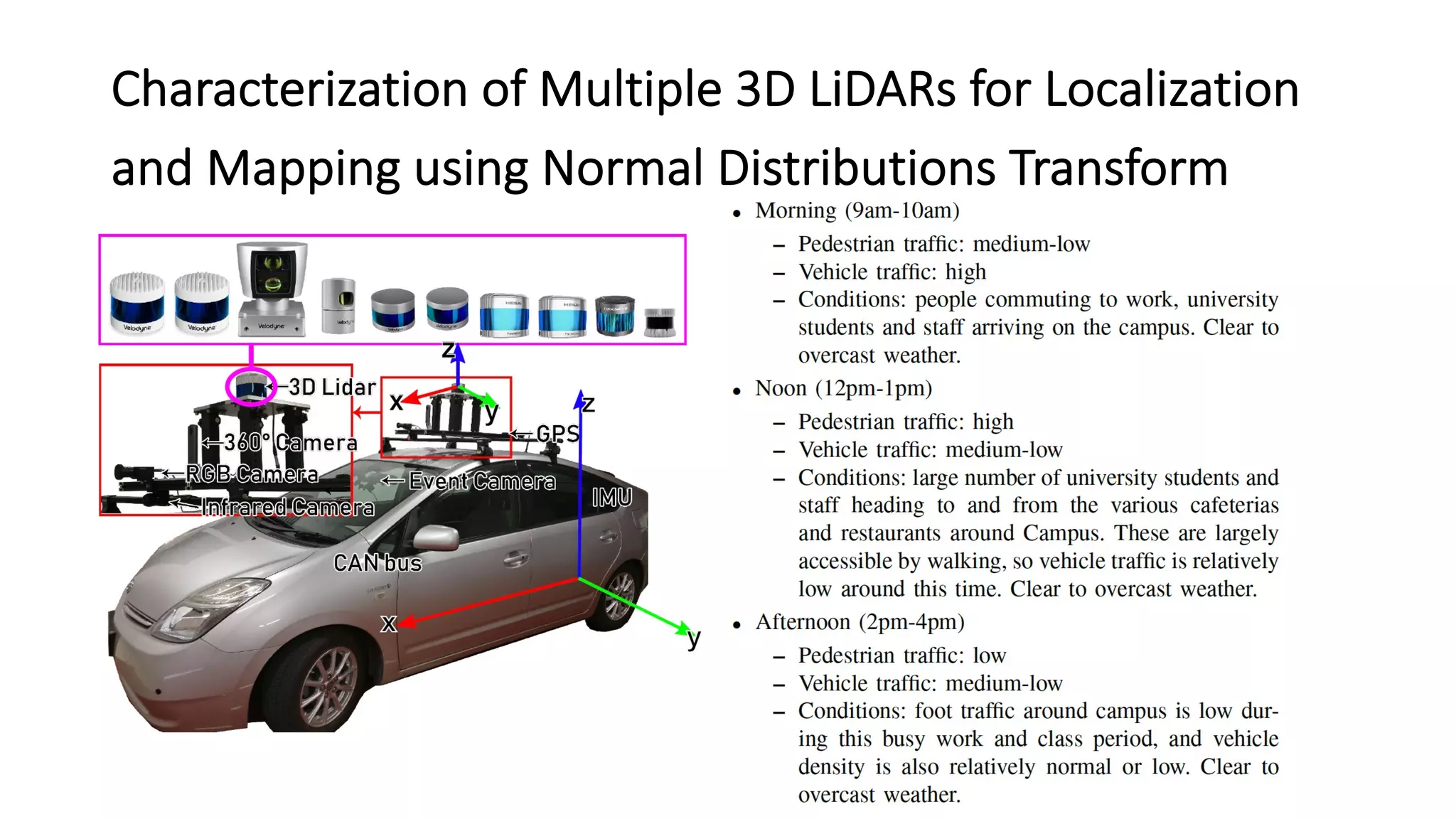

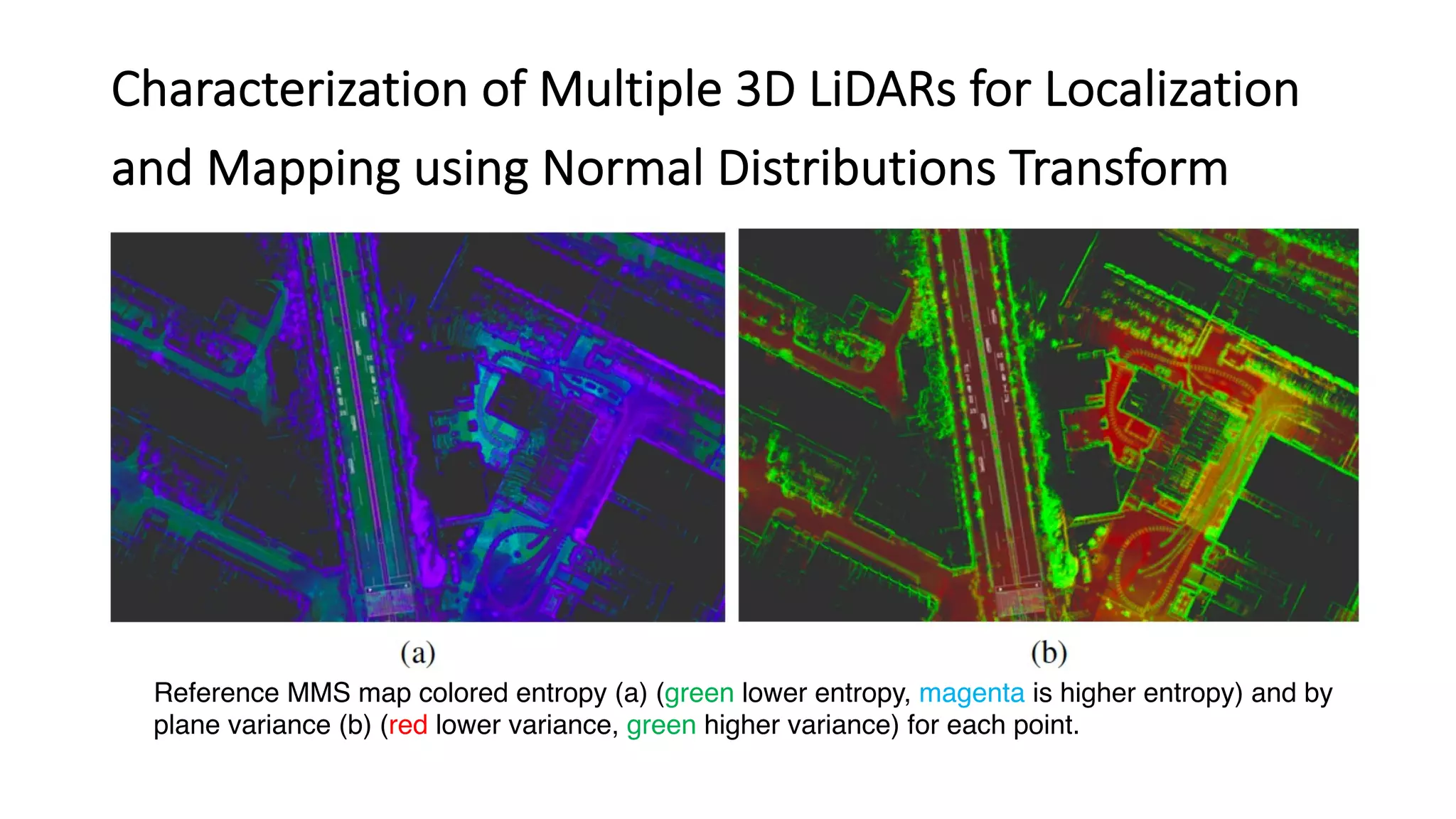

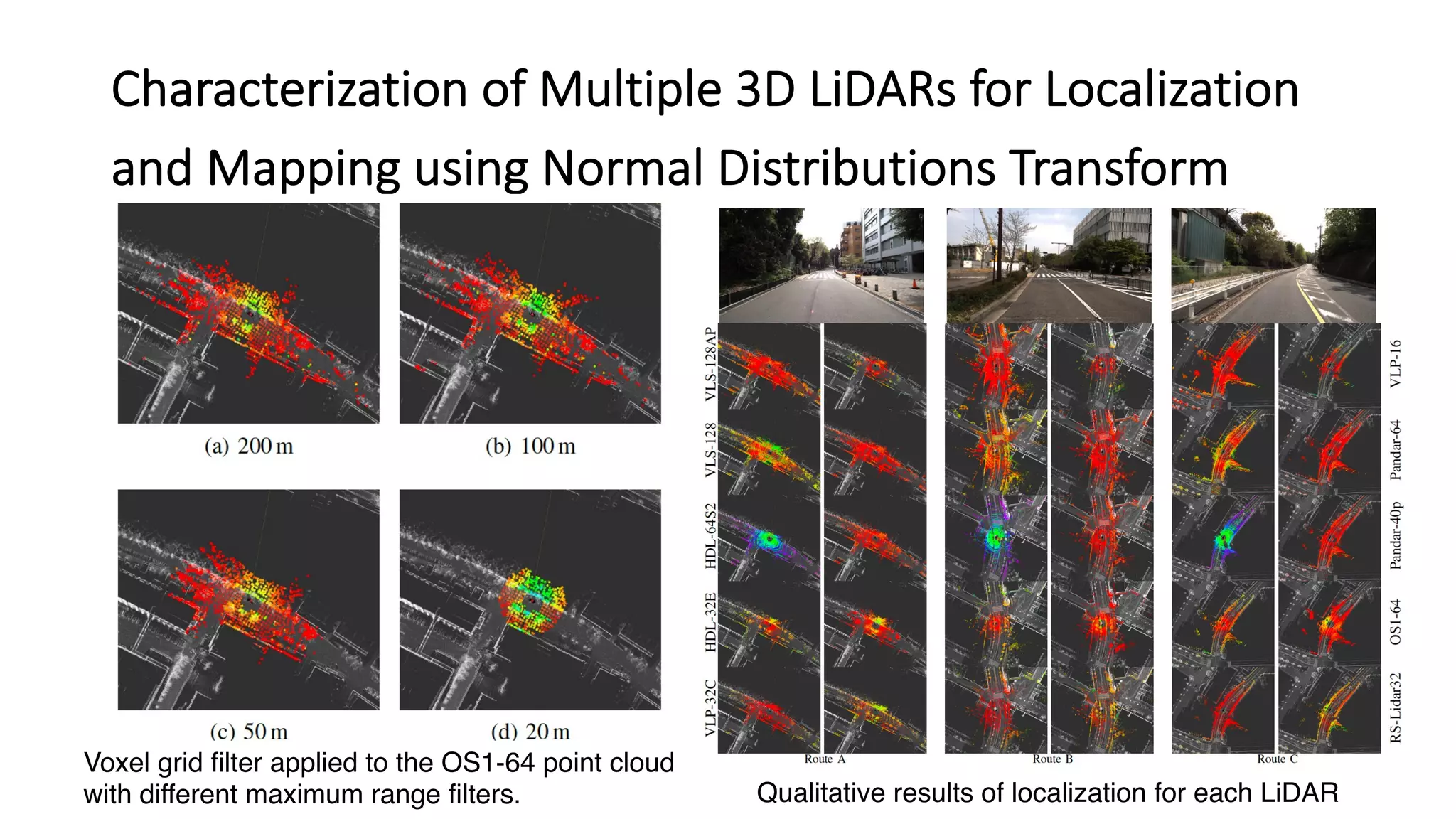

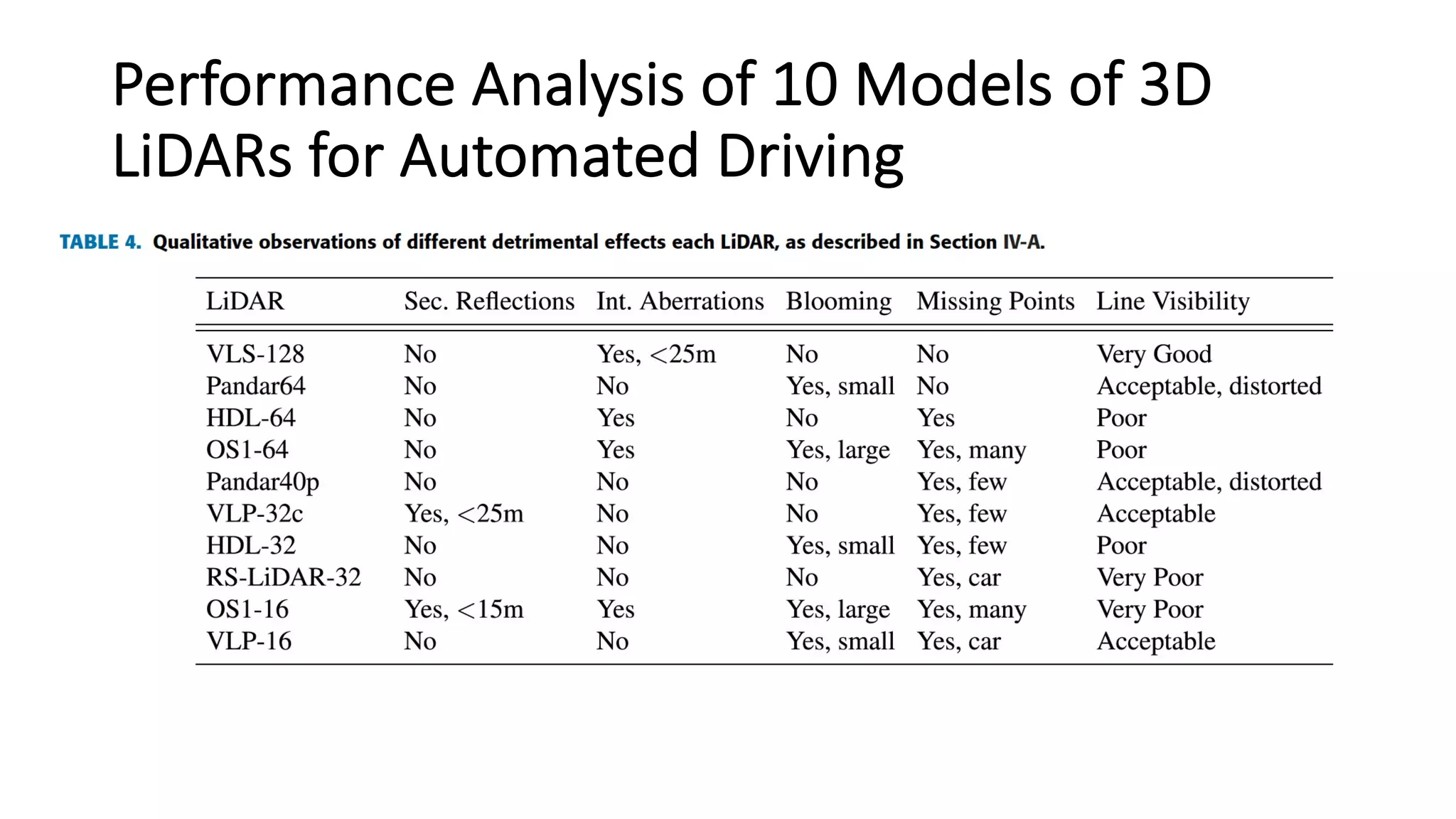

This document discusses research on LiDAR performance in adverse weather conditions such as dust, snow, rain, and fog. It outlines several papers that analyze how different weather conditions affect LiDAR sensors, develop methods to detect and classify weather conditions from LiDAR data, and explore techniques for denoising LiDAR point clouds captured in adverse weather. The papers covered include work on characterizing multiple LiDAR sensors for localization and mapping applications and on benchmarking LiDAR performance for automated driving tasks.