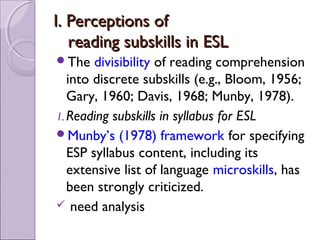

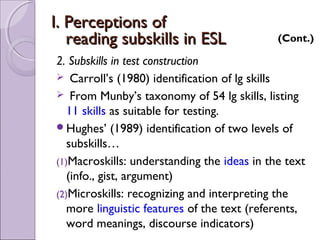

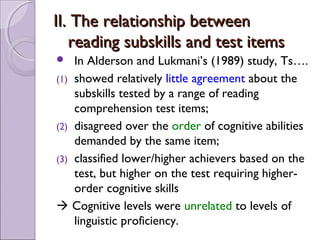

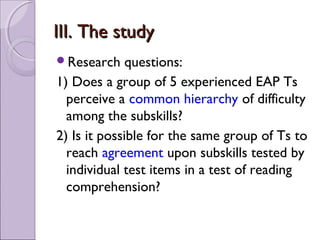

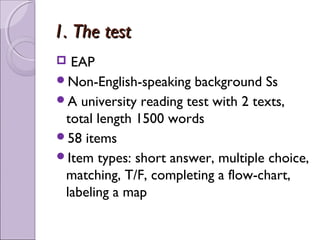

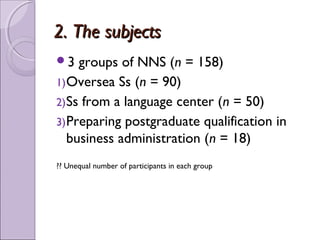

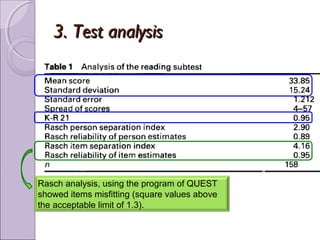

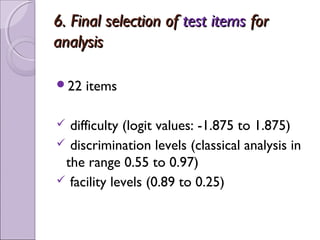

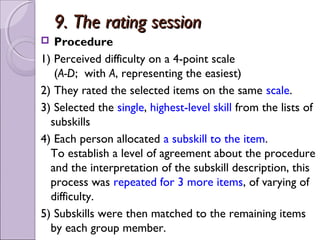

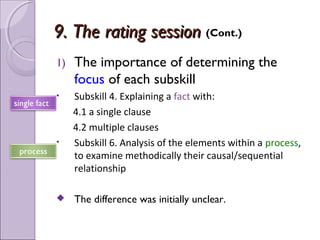

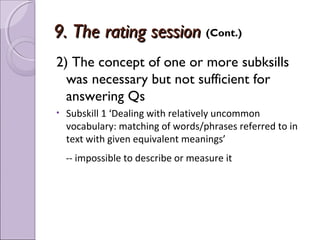

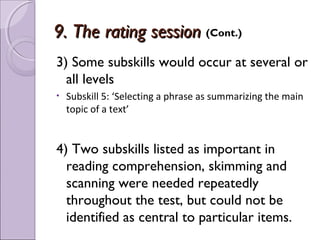

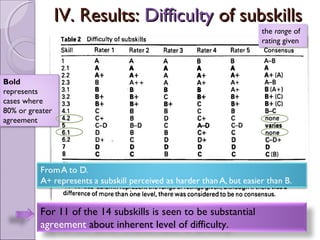

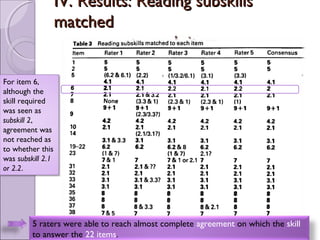

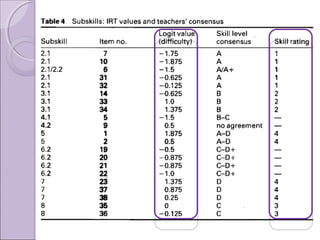

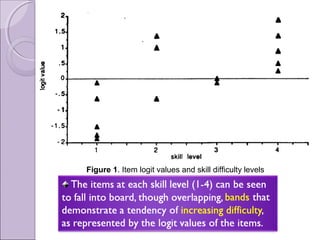

The document summarizes a study that examined teachers' perceptions of reading subskills and the relationship between those subskills and test items. Five experienced English teachers rated the difficulty of the reading subskills and identified the subskills required to answer each test item. The teachers agreed strongly both on the hierarchy of subskill difficulty and on which subskills each item tested. Their ratings of subskill difficulty also correlated with results from a Rasch analysis, which provides empirical support for the teachers' judgments. The study therefore supports using teacher judgments in test development and examining reading test content in terms of the subskills it targets.
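The summary does not say which correlation coefficient the study used. As an illustration only, the sketch below compares teachers' difficulty ratings with Rasch difficulty estimates using Spearman's rank correlation, which is one reasonable choice when the question is whether two orderings of subskills agree. All subskill values below are hypothetical, not data from the study, and the assumption that Rasch results are expressed as difficulties in logits is ours.

```python
def rank(values):
    """Return 1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j over a run of tied values starting at position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    return pearson(rank(x), rank(y))


# Hypothetical values for six subskills (illustration only, not study data):
# mean teacher difficulty rating on an assumed 1-5 scale, and an assumed
# Rasch difficulty estimate in logits for the same subskills.
teacher_mean = [1.4, 2.0, 2.6, 3.2, 3.8, 4.4]
rasch_logits = [-1.2, -0.9, 0.1, -0.2, 0.8, 1.5]

print(round(spearman(teacher_mean, rasch_logits), 3))
```

With these made-up numbers only two adjacent subskills swap places between the two orderings, so rho is high (about 0.943). A rank-based coefficient is used here because teacher ratings and logit estimates sit on different scales, and the question of interest is whether the two difficulty hierarchies agree.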