The document discusses problems with language assessment tests and more constructive approaches to testing. Key points:

- Tests were previously misused as punishment or as the sole basis for grading, without reflecting what was actually taught.

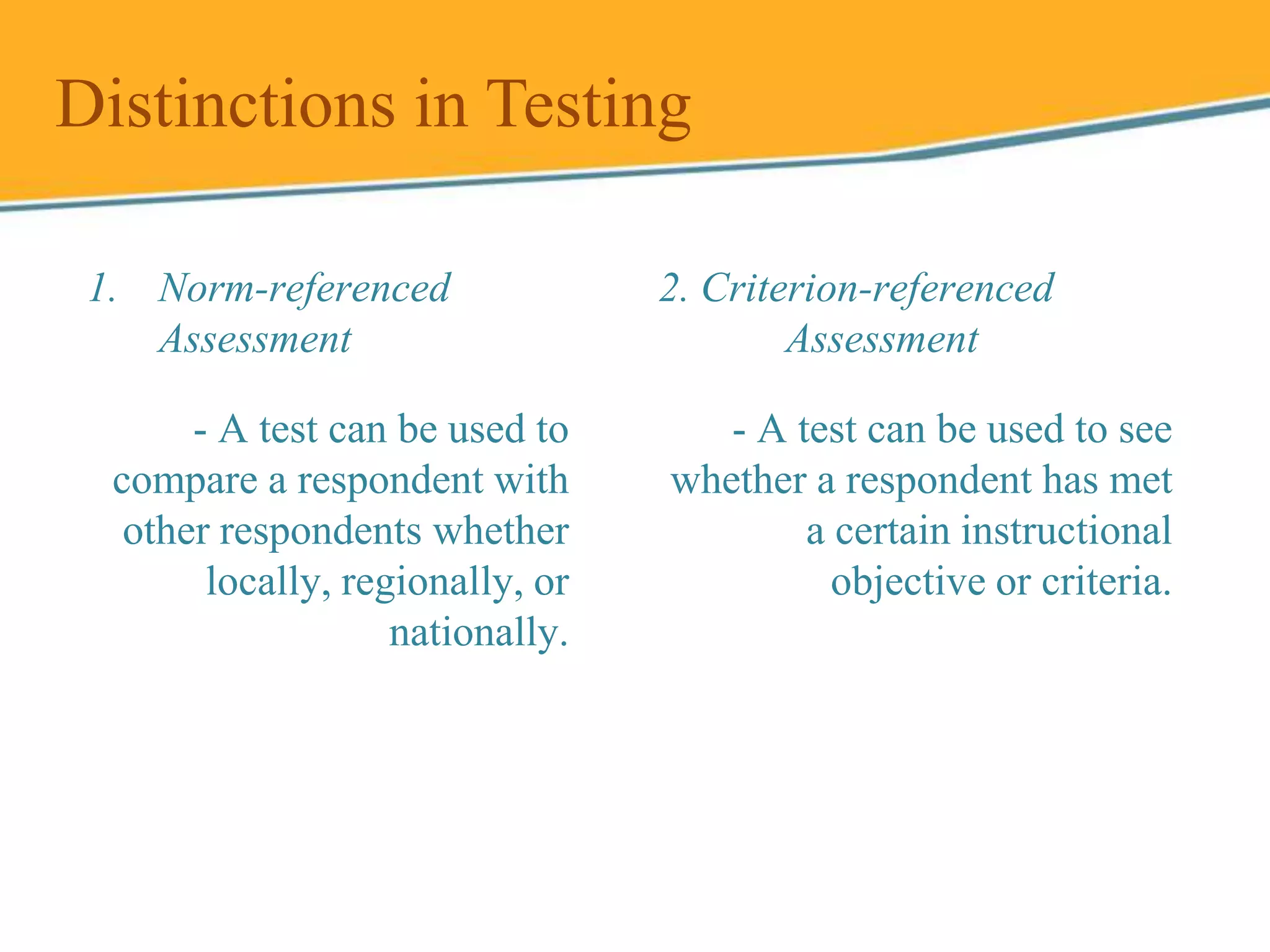

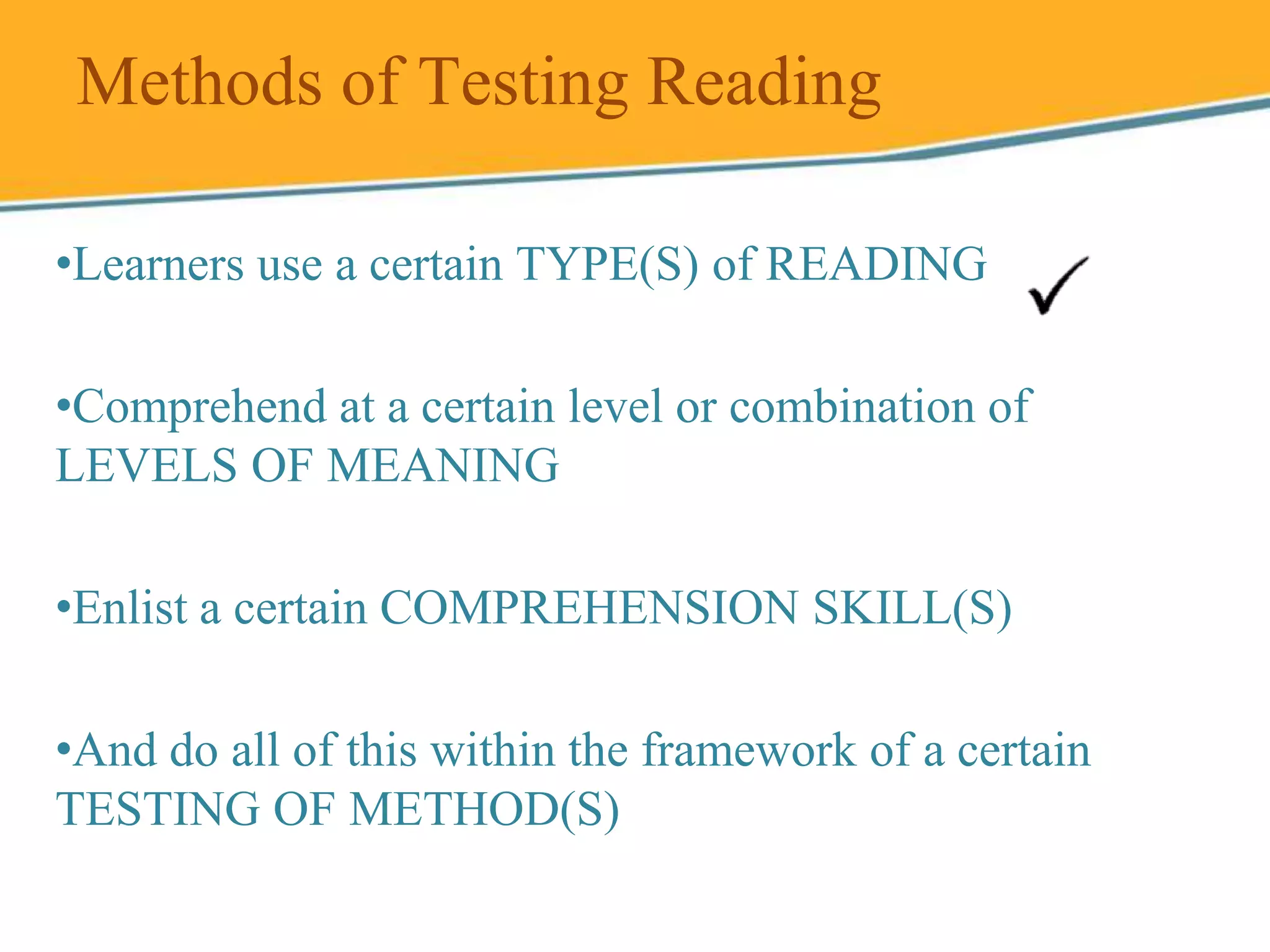

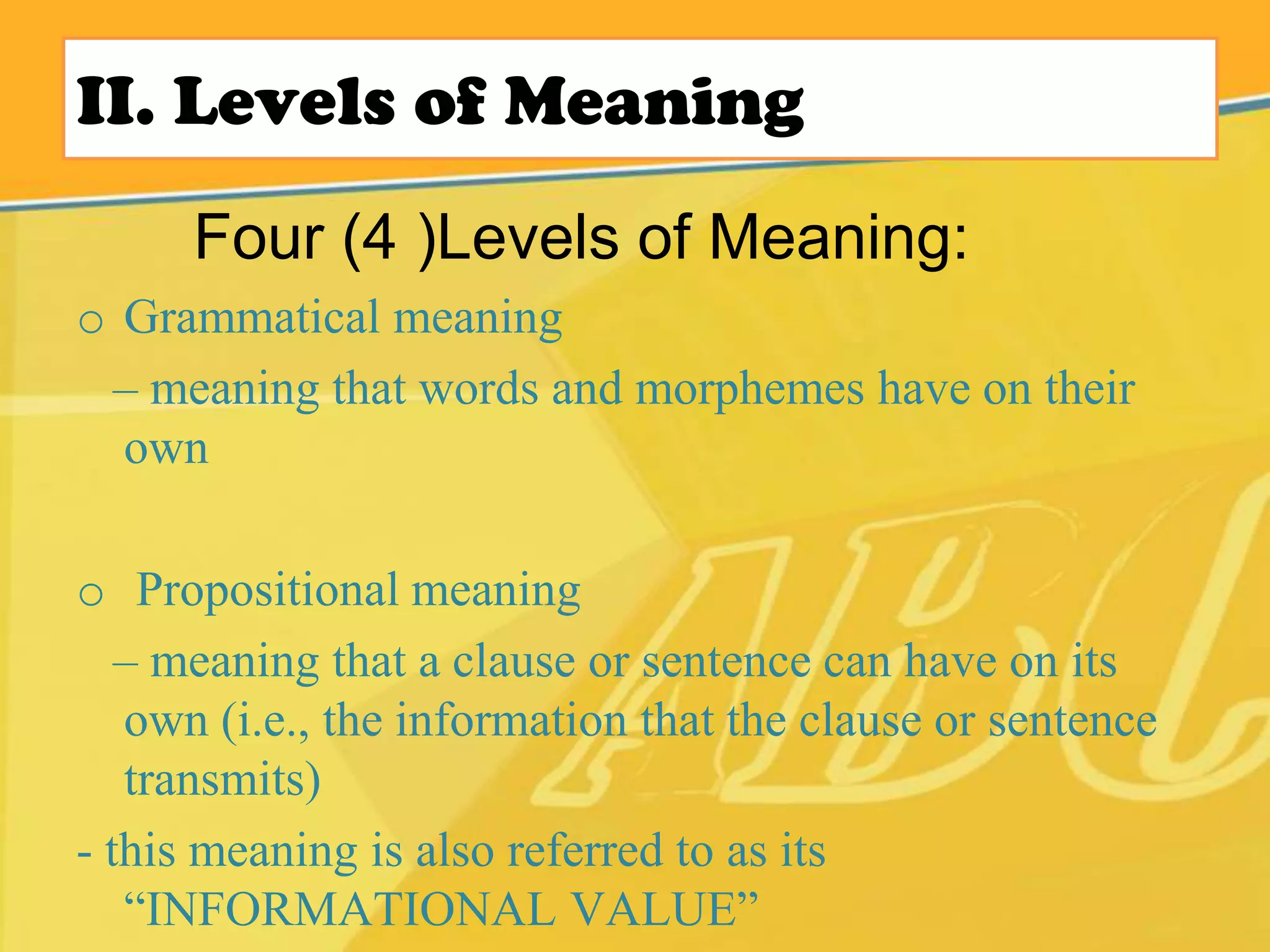

- A more constructive approach treats testing as an interaction between teacher and student, judges students on what they know, aims to improve their skills, and uses clear criteria.

This summary highlights some of the constructive principles the document proposes for better language assessment.