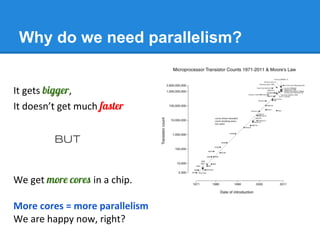

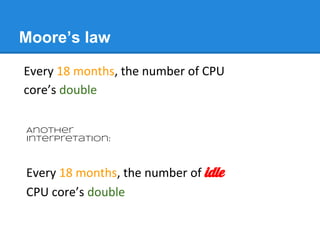

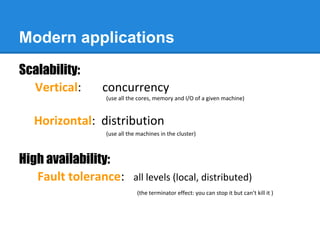

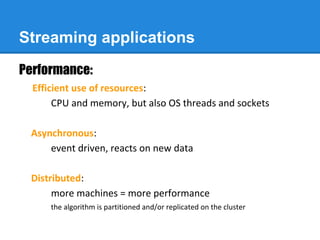

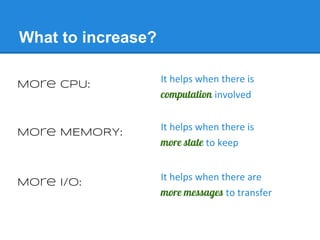

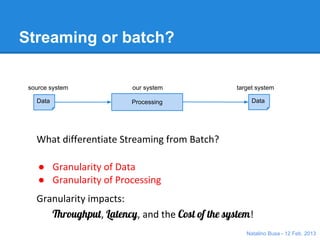

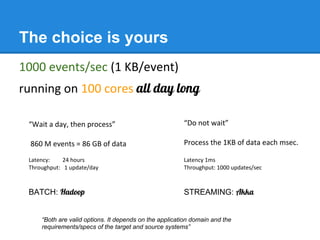

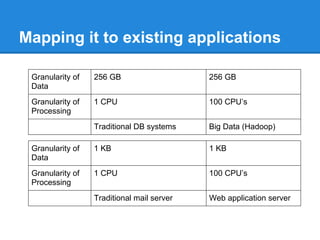

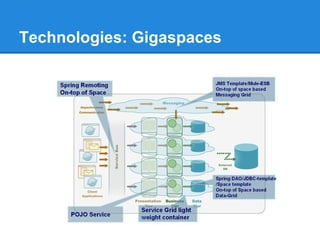

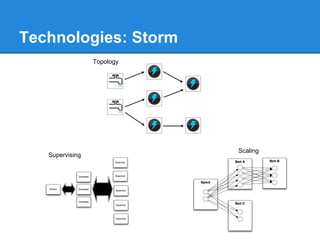

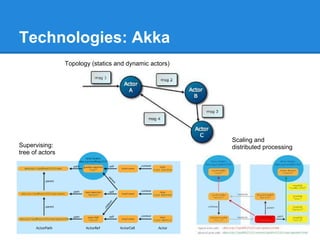

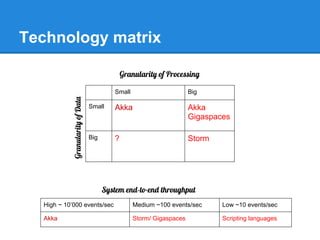

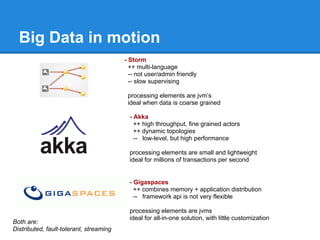

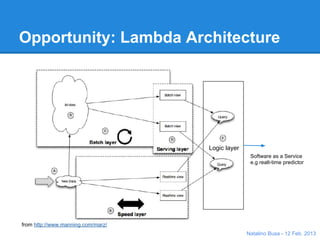

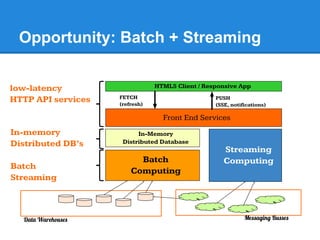

The document discusses streaming computing, focusing on technologies such as GigaSpaces, Storm, and Akka and their use in event-driven processing. It highlights the importance of parallelism, the distinction between streaming and batch processing, and the two main performance factors, throughput and latency. The author suggests that streaming and batch are both valid approaches, with the choice depending on application requirements, and points to hybrid designs such as the lambda architecture, which pairs a batch layer with a streaming layer.