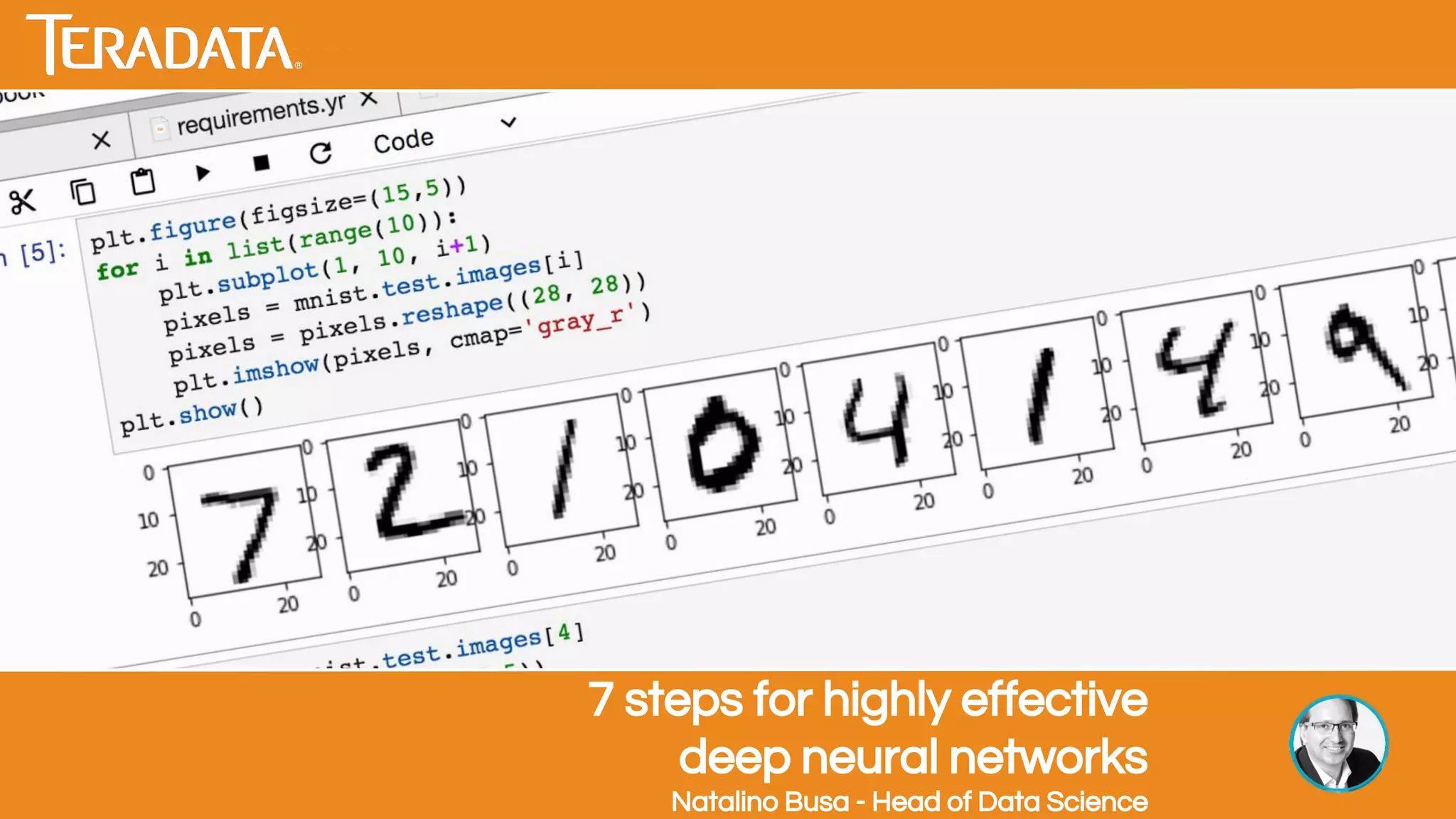

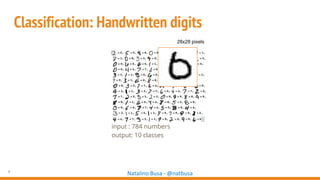

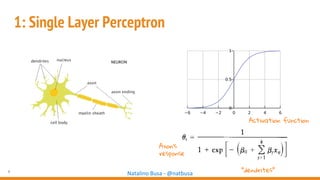

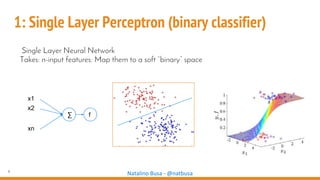

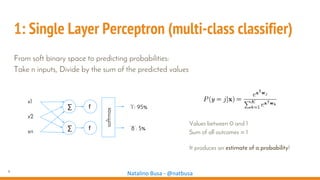

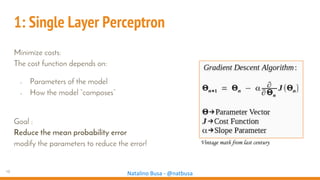

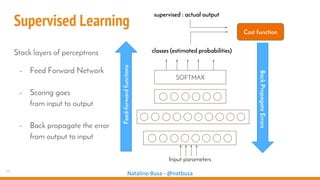

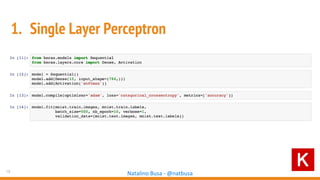

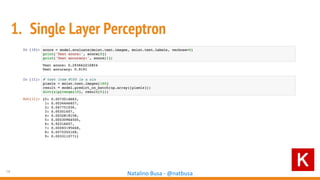

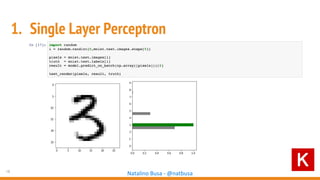

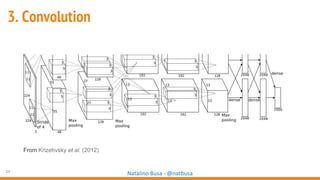

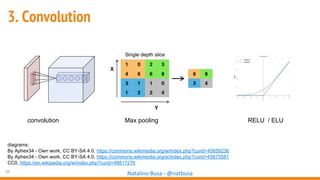

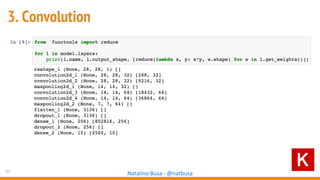

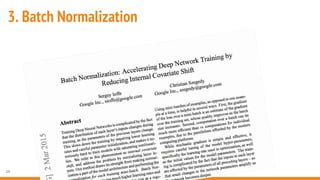

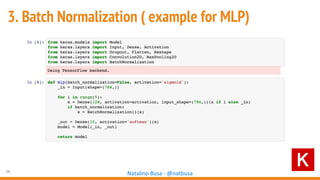

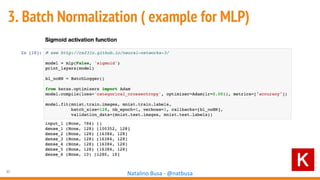

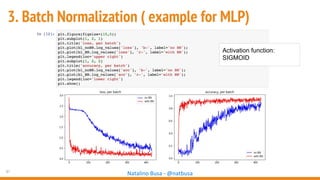

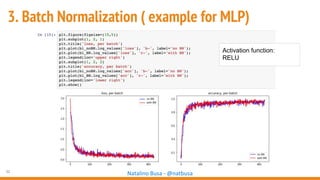

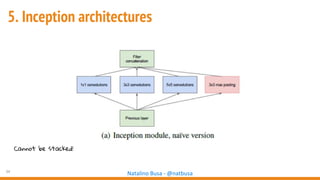

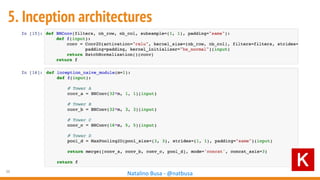

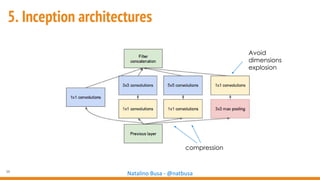

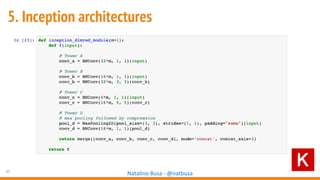

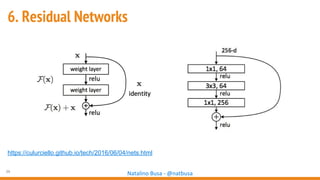

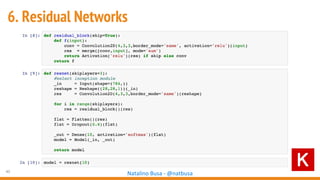

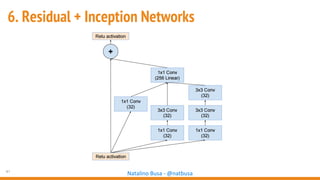

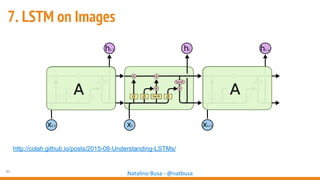

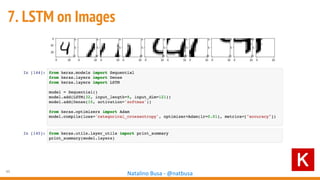

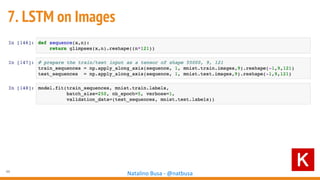

The document outlines seven essential steps for building effective deep neural networks. It begins with the single-layer perceptron and moves through multi-layer perceptrons, convolutional networks, and regularization techniques. It stresses the importance of batch normalization, inception architectures, and residual networks, and it culminates in applying LSTMs to image processing. It also provides references and resources for further study of neural network design and implementation.
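The slides' own code is not reproduced here. As a rough orientation only, the pure-Python sketch below shows the forward pass of three of the building blocks named above: a single-layer perceptron (a softmax over one dense layer), a multi-layer perceptron (a hidden ReLU layer added), and two later refinements, batch normalization over a mini-batch and a residual connection. All function names are hypothetical. The sketch assumes batch normalization in training mode (batch statistics only, no running averages) and a simplified residual block of the form `relu(x + F(x))` with a single same-width dense layer as `F`. Convolutions, inception modules, regularization, and LSTMs are not covered.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # w[i][j]: weight from input i to output j


def dense(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Fully connected layer: y_j = sum_i x_i * w[i][j] + b_j."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def relu(z: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in z]


def softmax(z: Vector) -> Vector:
    """Softmax; subtracting the max keeps exp() from overflowing."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def single_layer(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Single-layer perceptron classifier: softmax(xW + b)."""
    return softmax(dense(x, w, b))


def mlp(x: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Vector:
    """Two-layer perceptron: a hidden ReLU layer before the softmax output."""
    return softmax(dense(relu(dense(x, w1, b1)), w2, b2))


def batch_norm(batch: List[Vector], gamma: Vector, beta: Vector,
               eps: float = 1e-5) -> List[Vector]:
    """Normalize each feature over the mini-batch, then scale by gamma and shift by beta."""
    n, dims = len(batch), len(batch[0])
    means = [sum(row[d] for row in batch) / n for d in range(dims)]
    variances = [sum((row[d] - means[d]) ** 2 for row in batch) / n for d in range(dims)]
    return [
        [gamma[d] * (row[d] - means[d]) / math.sqrt(variances[d] + eps) + beta[d]
         for d in range(dims)]
        for row in batch
    ]


def residual(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Simplified residual block: the input skips around F and is added back."""
    fx = dense(x, w, b)
    return relu([xi + fi for xi, fi in zip(x, fx)])


if __name__ == "__main__":
    x = [0.5, -1.0]
    w = [[0.2, -0.3], [0.4, 0.1]]
    b = [0.0, 0.1]
    print(single_layer(x, w, b))        # two class probabilities summing to 1
    print(mlp(x, w, b, w, b))           # same shapes, one extra hidden layer
    print(batch_norm([[1.0], [3.0]], [1.0], [0.0]))
    print(residual(x, [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0]))  # F(x) = 0, so relu(x)
```

One point the residual example makes concrete: when the inner layer contributes nothing, the block still passes its input through, which is part of why very deep residual networks remain trainable.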