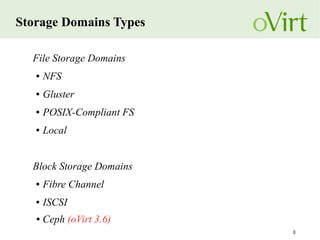

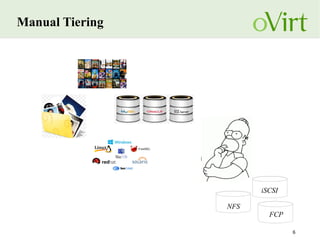

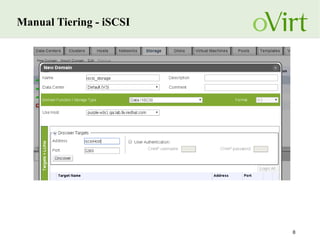

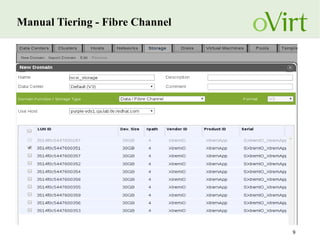

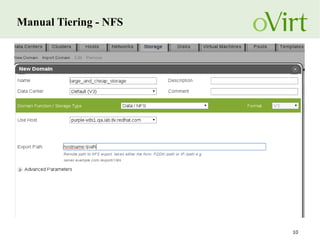

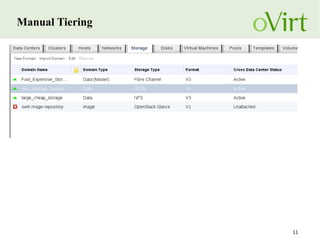

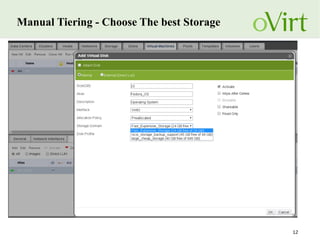

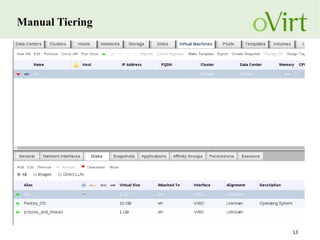

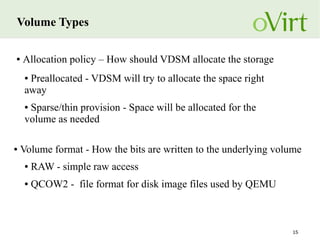

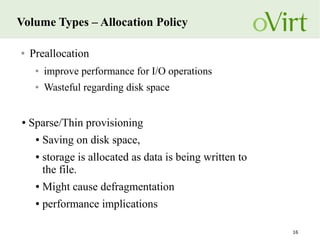

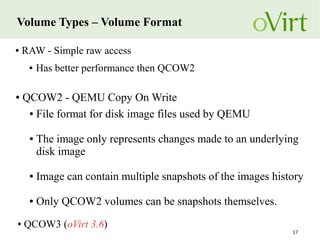

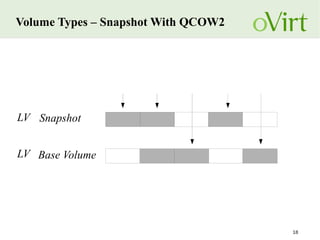

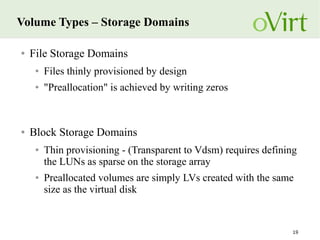

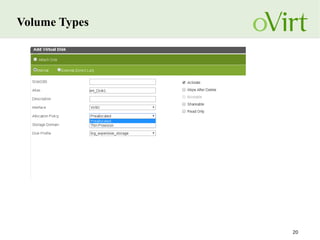

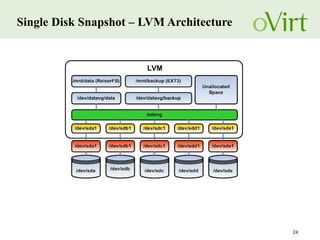

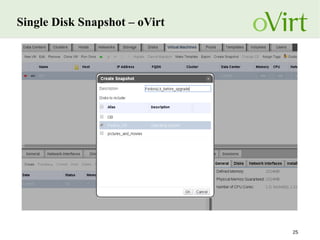

This document gives an overview of storage best practices in oVirt. It covers oVirt storage domains, manual tiering across different storage types, volume types and allocation policies, and single-disk snapshots. It explains how separate storage domains backed by NFS, iSCSI, or Fibre Channel can serve as manual tiers, so that the most suitable storage can be chosen for each workload. It also covers volume types, compares the preallocated and thin-provisioned allocation policies, and describes the use of the QCOW2 format for snapshots. Finally, it describes how oVirt implements single-disk snapshots using the Logical Volume Manager (LVM).
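
The difference between the two allocation policies can be illustrated with a toy model. This is a minimal sketch rather than oVirt code: the `Volume` class and its fields are hypothetical names chosen for illustration, and the model assumes every guest write lands on a previously unwritten block. It also ignores QCOW2 metadata overhead and any chunked extension a real storage backend might perform.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass
class Volume:
    """Toy model of a virtual disk (hypothetical, not an oVirt API)."""

    virtual_size: int       # size the guest sees, in bytes
    preallocated: bool      # True: reserve all space up front
    written: int = 0        # bytes the guest has written so far

    def write(self, nbytes: int) -> None:
        # Record guest writes, capped at the virtual size.
        # Assumption: each write touches blocks not written before.
        self.written = min(self.virtual_size, self.written + nbytes)

    def allocated(self) -> int:
        # Space consumed on the storage domain.
        if self.preallocated:
            return self.virtual_size   # full size reserved at creation
        return self.written            # thin: grows with actual usage


thin = Volume(virtual_size=10 * GIB, preallocated=False)
thick = Volume(virtual_size=10 * GIB, preallocated=True)

for vol in (thin, thick):
    vol.write(2 * GIB)
    print(vol.preallocated, vol.allocated() // GIB, "GiB allocated")
```

In this model the preallocated volume consumes its full virtual size from the start, while the thin-provisioned one consumes only what the guest has written, which is the trade-off between predictable capacity and space efficiency.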