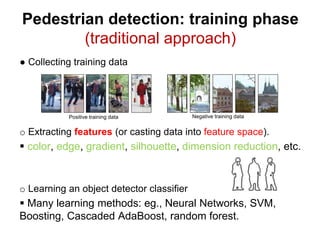

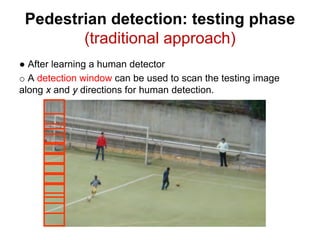

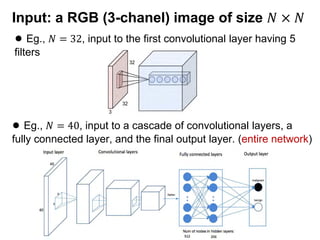

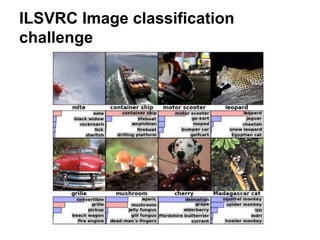

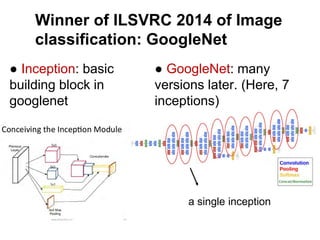

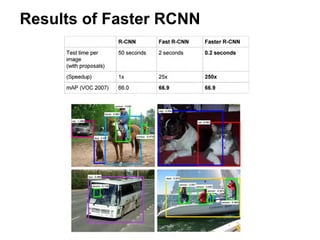

This document discusses deep learning techniques for object detection and recognition. It provides an overview of computer vision tasks like image classification and object detection. It then discusses how crowdsourcing large datasets from the internet and advances in machine learning, specifically deep convolutional neural networks (CNNs), have led to major breakthroughs in object detection. Several state-of-the-art CNN models for object detection are described, including R-CNN, Fast R-CNN, Faster R-CNN, SSD, and YOLO. The document also provides examples of applying these techniques to tasks like face detection and detecting manta rays from aerial videos.

![Deep CNN face detection/alignment

on Zenbo

●Zenbo Specifications

o CPU: Intel Atom x5-Z8550 2.4 GHz

o OS: Android 6.0.1

o RAM: 4G

o without using GPUs

● Frames per second

o 2.5 FPS [Resolution (640x480) ]

● Code optimizations

o C++ and OpenBLAS library

o Multi-threads computation

o without using any deep learning frameworks such as

tensorflow or pytorch](https://image.slidesharecdn.com/201706-180815030629/85/slide-63-320.jpg)