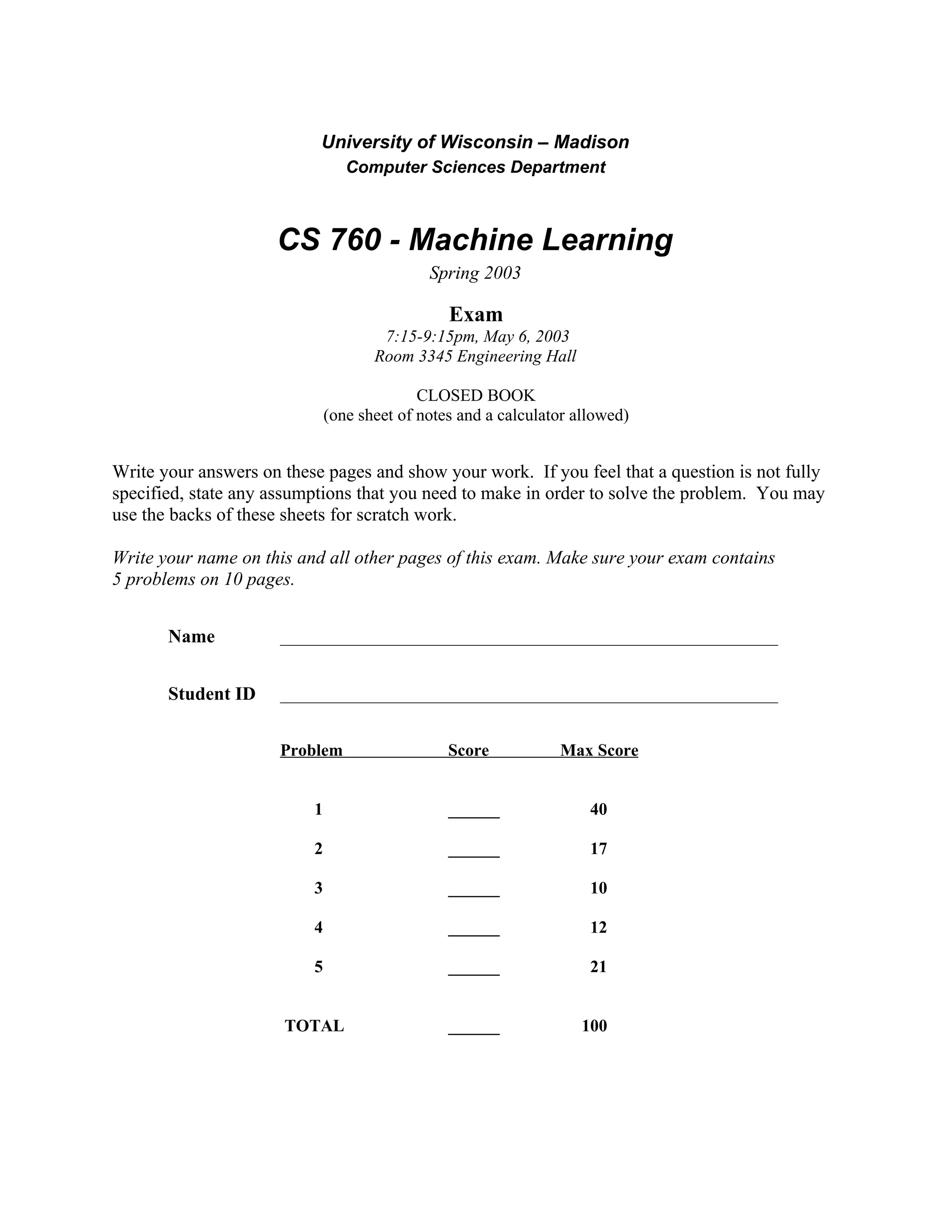

This document contains a 10-page machine learning exam with 5 problems covering Naive Bayes, decision trees, neural networks, reinforcement learning, overfitting avoidance, and computational learning theory. The exam is closed-book, but one sheet of notes and a calculator are allowed. It will take place on May 6, 2003, from 7:15 to 9:15 pm in Room 3345 of the Engineering Hall.

Name: _______________________________________

Problem 1 – Learning from Labeled Examples (40 points)

Imagine that you are given the following set of training examples. Feature F1 can take on the values a, b, or c; Feature F2 is Boolean-valued; and Feature F3 is always a real-valued number in [0,1].

|           | F1 | F2 | F3  | Category |
|-----------|----|----|-----|----------|
| Example 1 | a  | T  | 0.2 | +        |
| Example 2 | b  | F  | 0.5 | +        |
| Example 3 | b  | F  | 0.9 | +        |
| Example 4 | b  | T  | 0.6 | –        |
| Example 5 | a  | F  | 0.1 | –        |
| Example 6 | a  | T  | 0.7 | –        |

a) How might a Naive Bayes system classify the following test example? Be sure to show your work. (Discretize the numeric feature into three equal-width bins.)

F1 = c, F2 = T, F3 = 0.8

b) Describe how a 2-nearest-neighbor algorithm might classify Part a’s test example.
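One way to work part (a) is sketched below. It is a minimal sketch, not an official answer key. It assumes add-one (Laplace) smoothing, which the problem does not specify. Some smoothing is needed because F1 = c never occurs in the training set, so unsmoothed estimates would make both class scores zero. The three equal-width bins over [0, 1] are taken to be [0, 1/3), [1/3, 2/3), and [2/3, 1]. The names `discretize`, `featurize`, and `naive_bayes_scores` are hypothetical helpers, not part of the exam.

```python
from collections import Counter

# Training set from Problem 1 as (F1, F2, F3, category); "-" stands for the table's "–".
TRAIN = [
    ("a", True, 0.2, "+"),
    ("b", False, 0.5, "+"),
    ("b", False, 0.9, "+"),
    ("b", True, 0.6, "-"),
    ("a", False, 0.1, "-"),
    ("a", True, 0.7, "-"),
]

N_BINS = 3
# Number of possible values per feature: F1 in {a, b, c}, F2 in {T, F}, binned F3.
N_VALUES = (3, 2, N_BINS)


def discretize(x, n_bins=N_BINS):
    """Equal-width binning of x in [0, 1]; x = 1.0 falls into the top bin."""
    return min(int(x * n_bins), n_bins - 1)


def featurize(f1, f2, f3):
    """Turn a raw example into all-discrete features (F3 replaced by its bin index)."""
    return (f1, f2, discretize(f3))


def naive_bayes_scores(train, query):
    """Unnormalized P(c) * prod_i P(x_i | c), using assumed add-one (Laplace) smoothing."""
    x = featurize(*query)
    class_counts = Counter(row[3] for row in train)
    scores = {}
    for c, n_c in class_counts.items():
        rows = [featurize(*row[:3]) for row in train if row[3] == c]
        score = n_c / len(train)  # prior P(c)
        for i, v in enumerate(x):
            count = sum(1 for r in rows if r[i] == v)
            score *= (count + 1) / (n_c + N_VALUES[i])  # smoothed P(x_i = v | c)
        scores[c] = score
    return scores


scores = naive_bayes_scores(TRAIN, ("c", True, 0.8))
total = sum(scores.values())
for c, s in sorted(scores.items()):
    print(f"{c}: score={s:.5f}, normalized={s / total:.2f}")
print("prediction:", max(scores, key=scores.get))
```

Under these assumptions, F3 = 0.8 falls into the top bin. The smoothed products are (3/6)(1/6)(2/5)(2/6) = 1/90 for + and (3/6)(1/6)(3/5)(2/6) = 1/60 for –. Naive Bayes would therefore predict –, with roughly 0.6 versus 0.4 after normalizing. A different smoothing scheme (for example, an m-estimate) changes the numbers. It may or may not change the prediction.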
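Part (b) depends on how distance is measured over mixed symbolic and numeric features, which the problem leaves open. The sketch below assumes one simple choice: a 0/1 mismatch for F1 and for F2, plus the absolute difference in F3. Since F3 already lies in [0, 1], its scale is comparable to a single mismatch. The function names and the distance itself are hypothetical illustrations, not the exam's intended metric.

```python
from collections import Counter

# Training set from Problem 1 as (F1, F2, F3, category); "-" stands for the table's "–".
TRAIN = [
    ("a", True, 0.2, "+"),
    ("b", False, 0.5, "+"),
    ("b", False, 0.9, "+"),
    ("b", True, 0.6, "-"),
    ("a", False, 0.1, "-"),
    ("a", True, 0.7, "-"),
]


def distance(x, y):
    """Hypothetical mixed-feature distance: 0/1 mismatch on F1 and F2, plus |F3 difference|."""
    return int(x[0] != y[0]) + int(x[1] != y[1]) + abs(x[2] - y[2])


def k_nearest(train, query, k=2):
    """Return the k training rows closest to query (equal distances keep table order)."""
    return sorted(train, key=lambda row: distance(row[:3], query))[:k]


def knn_classify(train, query, k=2):
    """Majority vote among the k nearest rows; returns None on a tied vote."""
    votes = Counter(row[3] for row in k_nearest(train, query, k)).most_common()
    if len(votes) > 1 and votes[0][1] == votes[1][1]:
        return None  # the problem does not say how a 1-1 split should be broken
    return votes[0][0]


query = ("c", True, 0.8)
for row in sorted(TRAIN, key=lambda r: distance(r[:3], query)):
    print(row, round(distance(row[:3], query), 2))
print("prediction:", knn_classify(TRAIN, query))
```

With this distance, Example 6 (distance 1.1) and Example 4 (distance 1.2) are the two nearest neighbors. Both are negative, so the vote is –. Because F1 = c matches no training example, F1 adds 1 to every distance and does not affect the ranking. A different relative weighting of F2 and F3 could select different neighbors. With k = 2, it could also produce a tied vote that needs an explicit tie-breaking rule.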