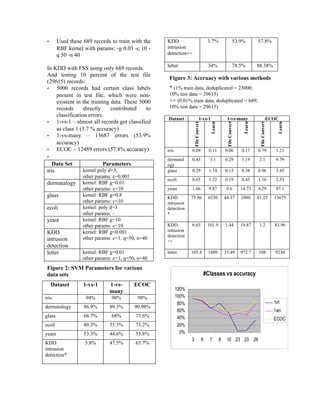

This document evaluates different multi-class classification methods using binary SVMs. It compares the 1-vs-1, 1-vs-many, and ECOC methods on various datasets from UCI and KDD. Experiments were run using SVMLight and results show accuracy and runtime for each method. The document outlines the objectives, implementation details, datasets used, and experimental results.

Evaluating multi-class classification using binary SVMs

(IT-642: Course Project)

Vijay T. Raisinghani (rvijay@it.iitb.ac.in), Roll No: 01429703

Pradeep Jagannath (pradeep@it.iitb.ac.in), Roll No: 00329010

Abstract

We study how SVM-based binary classifiers are used for multi-way classification. We present the results of experiments run on various UCI and KDD datasets, using the SVMLight package. The methods evaluated are 1-versus-1, 1-versus-many and Error Correcting Output Coding (ECOC).

1. Main Objectives

- Use three binary classification schemes (1-vs-1, 1-vs-many, ECOC) with SVMLight [SVM02] on various UCI datasets and the KDD intrusion detection dataset.
- Report accuracy and run-time for the various methods.

2. Status and other details

- Fully completed tasks
- Percentage contribution of members:
  - Pradeep Jagannath: 50%
  - Vijay T. Raisinghani: 50%
- Total time spent on the project: ??

3. Major stumbling blocks

- SVM parameter estimation. We referred to [Dua01] and other papers (see section "Related Work") and had discussions with Shantanu Godbole (Ph.D. student, KR School of IT, IIT Bombay) to "estimate" the kernel parameters required. Still, with no prior estimate of the time required for the various datasets, especially the KDD dataset, we had to abort tests that had been running for days.
- The KDD dataset was tested using only 1% of the data (i.e. 50,000 records). The full dataset has about 5 million records. Even 10% of the records took a very large amount of time.

4. Introduction

Many supervised learning tasks can be cast as the problem of assigning elements to a finite set of classes or categories. For example, the goal of optical character recognition (OCR) is to determine the digit value (0..9) from its image. A number of other applications also require such multi-way classification, e.g. text and speech categorisation, natural language processing, and gesture and object recognition in machine vision [All00].

In designing machine learning algorithms, it is often easier first to devise algorithms for distinguishing between only two classes [All00]. Ensemble schemes have been proposed, which use binary (two-class) classification algorithms to solve K-class classification problems. Decomposing a K-class classification problem into a number of binary classification problems allows an ensemble scheme to model binary class boundaries with much greater flexibility at a lower computational cost [Goh01].

Three representative ensemble schemes are one per class (1-vs-many), pairwise coupling (1-vs-1), and error-correcting output coding (ECOC) [Goh01].

1. One per class (OPC). This is also known as "one against others." OPC trains K binary classifiers, each of which separates one class from the other (K - 1) classes. Given a point X to classify, the binary classifier with the largest output determines the class of X.
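The OPC rule described above can be illustrated with a minimal Python sketch. It is not the project's shell-script implementation; the function names `one_vs_rest_labels` and `opc_predict` and the sample output values are hypothetical, chosen only to show the relabelling and the "largest output wins" decision.

```python
def one_vs_rest_labels(labels, positive_class):
    """Relabel a K-class label list for one binary problem:
    +1 for the chosen class, -1 for the other (K - 1) classes."""
    return [1 if y == positive_class else -1 for y in labels]


def opc_predict(outputs):
    """Pick the class whose binary classifier gave the largest output.

    `outputs` maps each class to the real-valued output of that
    class's binary classifier for the point X (illustrative values).
    """
    return max(outputs, key=outputs.get)


# Hypothetical three-class example.
labels = ["a", "b", "c", "a"]
binary_for_b = one_vs_rest_labels(labels, "b")
predicted = opc_predict({"a": -0.7, "b": 0.4, "c": -1.2})
```

Here `binary_for_b` is the label vector that would train the "b versus the rest" classifier, and `predicted` is the class assigned to X once all K classifiers have been evaluated.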

2. Pairwise coupling (PWC). PWC constructs K(K-1)/2 pairwise binary classifiers. The classifying decision is made by aggregating the outputs of the pairwise classifiers.

3. Error-correcting output coding (ECOC). ECOC was first proposed by Dietterich and Bakiri [Die95] to reduce classification error by exploiting the redundancy of the coding scheme. ECOC employs a set of binary classifiers assigned codewords such that the Hamming distance between each pair of codewords is large enough to enable good error correction.

5. Related Work

[Die95] discusses the use of the ECOC method versus multi-way classification using decision trees. [Gha00] and [Ren01] present the use of ECOC for improving the performance of Naïve Bayes for text classification. [All00], [Wes99] and [Hsu02] propose extensions to SVMs for multi-way classification. [Goh01] provides details of how to boost the output of binary SVMs for image classification. [Mor98] discusses various methods of combining the outputs of 1-vs-1 classifiers.

6. Implementation details

All our test scripts were shell scripts, which invoked SVMLight. Additionally, for ECOC we used a modified form of bch3.c [Zar02], an encoder/decoder program for BCH codes in C. We modified the program to decode and encode in parts. The program generates the code matrix based on the input set of classes and accepts the 'received' code from our shell scripts for decoding. We hard-coded other parameters: code length to 31 bits and error-correcting capability to 15 bits. This resulted in the data length being a maximum of 6 bits, i.e. we could encode a maximum of 64 classes with these settings. This was sufficient for the data sets we used, which had a maximum of 26 classes.

7. Experiments and Results

All our tests were run on cygnus (PIII, 3 processors, 512 MB RAM, running Linux version 2.4.17 (RedHat 7.1)).

We experimented with various kernel settings. For some cases, the test did not terminate and had to be aborted. For some settings, the accuracy from all the methods was very low. We exclude the results for which the test had to be aborted or the accuracy was low for all the methods.

| Data Set | No. of Classes | Train records | Test records |
|---|---|---|---|
| iris | 3 | 100 | 50 |
| dermatology | 6 | 244 | 122 |
| glass | 7 | 142 | 72 |
| ecoli | 8 | 224 | 112 |
| yeast | 10 | 989 | 495 |
| KDD intrusion detection | 23 (in train data), 38 (in test data) | 4,898,431 (4.9 million) | 311,029 (0.3 million) |
| letter | 26 | 15,000 | 5,000 |

Figure 1: Data sets

The dermatology dataset had missing values in the age attribute. We substituted these with the most frequent value of age.

For the KDD dataset we did the following:

- Reduced the training data to only 1%, i.e. 50,000 records.
- Scanned the 1% set for duplicates and found 50% duplicates. After eliminating them, the training data had 23,000 records.
- One training record had 55 features while all others had 41. We eliminated this record, although it may not have contributed to any problems.
- Feature selection was done using "Inducer" [MLC++] and C4.5. We selected 16 features from the original set of 41.
- Stratified the de-duplicated file to a maximum of 50 records per class, giving 689 records, in order to run a simpler and faster test.
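The de-duplication, malformed-record removal and per-class capping steps listed above can be sketched in Python as follows. This is only an illustration, not the scripts used in the project: the helper names, the tuple layout (features followed by the class label) and the choice to keep the first records seen for each class are all assumptions, since the original does not say how the 50 records per class were picked.

```python
from collections import defaultdict


def dedupe(records):
    """Drop exact duplicates, keeping the first occurrence and the order."""
    seen = set()
    unique = []
    for rec in records:
        if rec not in seen:
            seen.add(rec)
            unique.append(rec)
    return unique


def drop_malformed(records, n_fields):
    """Keep only records with the expected number of fields
    (e.g. 41 features plus the label for the KDD data)."""
    return [rec for rec in records if len(rec) == n_fields]


def cap_per_class(records, max_per_class=50):
    """Keep at most `max_per_class` records per class.
    Assumes the class label is the last field of each record."""
    counts = defaultdict(int)
    kept = []
    for rec in records:
        label = rec[-1]
        if counts[label] < max_per_class:
            counts[label] += 1
            kept.append(rec)
    return kept


# Tiny hypothetical sample: two features plus a label per record.
sample = [
    (0, 1, "normal"),
    (0, 1, "normal"),       # exact duplicate
    (1, 1, "smurf"),
    (2, 2, 9, "normal"),    # wrong number of fields
    (2, 2, "normal"),
    (3, 3, "normal"),
]
cleaned = cap_per_class(drop_malformed(dedupe(sample), n_fields=3),
                        max_per_class=2)
```

With a cap of 2, `cleaned` keeps two "normal" records and the single "smurf" record. A random draw per class would be an equally plausible reading of "stratified".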

[Figure: "convert vs rows/class", comparing 1v1, 1vm and ECOC; y-axis from 0 to 16000.]

[Figure: "learn vs rows/class", comparing 1v1, 1vm and ECOC; y-axis from 0 to 120.]

References

[All00] E. L. Allwein, R. E. Schapire, and Y. Singer. Reducing multiclass to binary: A unifying approach for margin classifiers. Journal of Machine Learning Research, 1:113-141, 2000.

[Goh01] K. Goh, E. Chang, K. Cheng. SVM Binary Classifier Ensembles for Image Classification. CIKM'01, November 5-10, 2001, Atlanta, Georgia, USA.

[Die95] T. G. Dietterich and G. Bakiri. Solving multiclass learning problems via error-correcting output codes. Journal of Artificial Intelligence Research, 2:263-286, 1995.

[Gha00] Rayid Ghani. Using error-correcting codes for text classification. In Proceedings of the Seventeenth International Conference on Machine Learning, 2000.

[Ren01] Jason D. M. Rennie. Improving multi-class text classification with naïve Bayes. Master's thesis, Massachusetts Institute of Technology, 2001.

[Hsu02] C.-W. Hsu and C.-J. Lin. A comparison of methods for multi-class support vector machines. IEEE Transactions on Neural Networks, 13 (2002), 415-425.

[Wes99] J. Weston. Extensions to the Support Vector Method. PhD thesis, Royal Holloway University of London, 1999.

[Mor98] M. Moreira and E. Mayoraz. Improving pairwise coupling classification with error correcting classifiers. Proceedings of the Tenth European Conference on Machine Learning, April 1998.

[SVM02] Thorsten Joachims. http://svmlight.joachims.org/, Cornell University, Department of Computer Science.

[Dua01] Kaibo Duan, S Sathiya Keerthi, Aun Neow Poo. ICONIP 2001, 8th International Conference on Neural Information Processing, Shanghai, China, November 14-18, 2001.

[MLC++] Silicon Graphics, Inc. MLC++, http://www.sgi.com/tech/mlc/, 2002.

[Zar02] R. Morelos-Zaragoza. BCH codes, The Error Correcting Codes (ECC) Page, http://www.csl.sony.co.jp/person/morelos/ecc/codes.html, 2002.