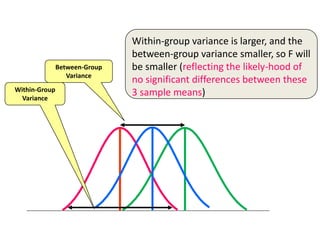

This document provides an overview of one-way ANOVA, including its assumptions, steps, and a worked example. One-way ANOVA tests whether the means of three or more independent groups differ significantly. It compares the variance between group means to the variance within groups using an F-statistic, the ratio of the between-group mean square to the within-group mean square. If the F-statistic exceeds the critical value for the chosen significance level, the null hypothesis that all group means are equal is rejected: at least one group mean differs from the others. Post-hoc tests can then identify which specific group means differ. The example computes these statistics to compare the analgesic effects of three drugs and finds no significant difference between the group means.
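As a rough illustration of the F-statistic described above, with $k$ groups, $N$ observations in total, group sizes $n_j$, group means $\bar{x}_j$ and grand mean $\bar{x}$:

$$
F = \frac{MS_B}{MS_W} = \frac{\sum_{j=1}^{k} n_j(\bar{x}_j - \bar{x})^2 / (k-1)}{\sum_{j=1}^{k}\sum_{i=1}^{n_j}(x_{ij} - \bar{x}_j)^2 / (N-k)}
$$

The Python snippet below is a minimal sketch of that computation, not the method used in the document's example. The function name `one_way_anova` and the drug scores are hypothetical, chosen only for illustration; they are not the document's data. The critical value 3.885 is the standard F-table value for α = 0.05 with (2, 12) degrees of freedom; for other designs it can be looked up in a table or computed, for instance, with `scipy.stats.f.ppf(0.95, df_between, df_within)`.

```python
from statistics import fmean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    Each argument is a sequence of numeric observations from one
    independent group. Hypothetical helper for illustration only.
    """
    k = len(groups)
    all_values = [x for g in groups for x in g]
    n_total = len(all_values)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")

    grand_mean = fmean(all_values)
    means = [fmean(g) for g in groups]

    # Between-group sum of squares: distance of each group mean from the
    # grand mean, weighted by the group's size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    if ms_within == 0:
        raise ValueError("no within-group variance; F is undefined")
    return ms_between / ms_within, df_between, df_within


# Hypothetical pain-relief scores for three drugs (illustrative data only).
drug_a = [4, 5, 6, 5, 5]
drug_b = [6, 7, 5, 6, 6]
drug_c = [5, 4, 6, 5, 5]

f_stat, df_b, df_w = one_way_anova(drug_a, drug_b, drug_c)
critical = 3.885  # F-table value for alpha = 0.05 with (2, 12) degrees of freedom
print(f"F({df_b}, {df_w}) = {f_stat:.3f}")  # F(2, 12) = 3.333
print("significant" if f_stat > critical else "not significant")  # not significant
```

Because F = 3.333 falls below the critical value of 3.885, this made-up data set would not lead to rejecting the null hypothesis; a significant result would then call for a post-hoc test to locate which means differ.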