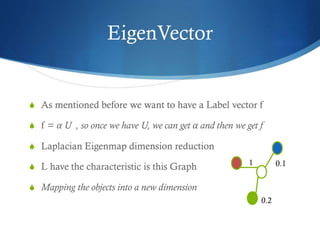

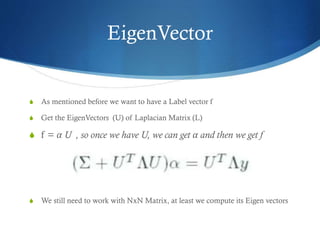

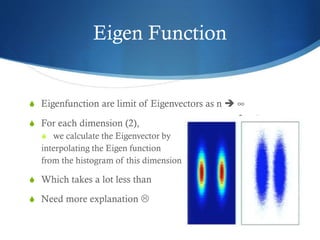

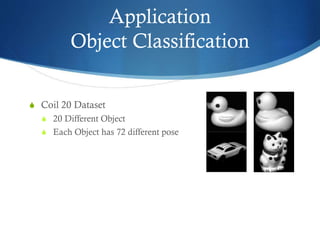

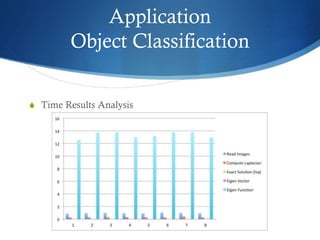

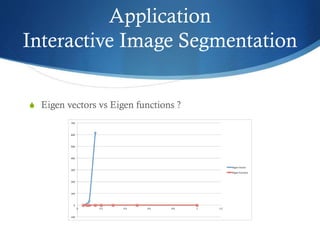

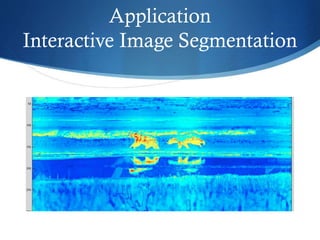

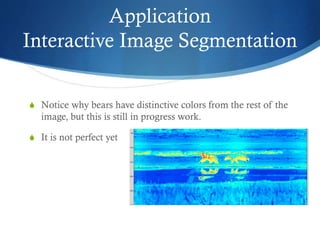

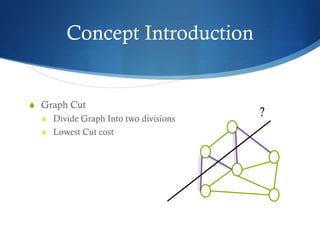

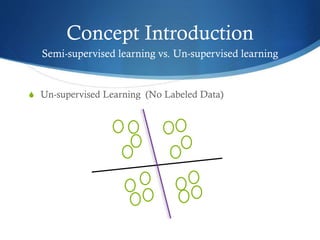

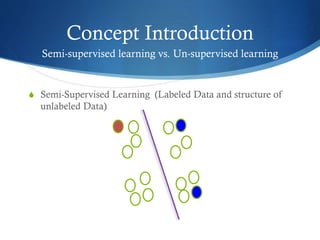

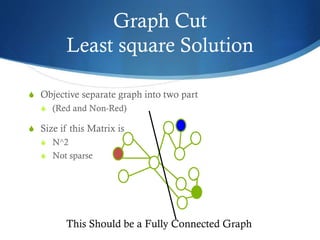

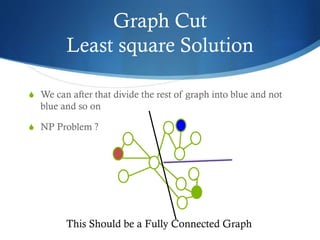

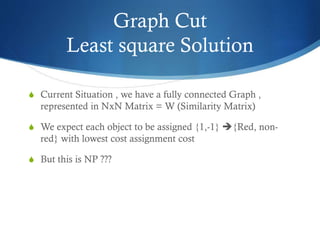

The document discusses semi-supervised learning techniques, focusing on graph-cut and least-squares solutions for object classification and image segmentation. It distinguishes semi-supervised from unsupervised learning and presents eigenvectors and eigenfunctions as tools for dimensionality reduction when working with high-dimensional data. The findings suggest using eigenvectors for smaller sets of high-dimensional objects and eigenfunctions for larger sets of lower-dimensional objects for best performance.

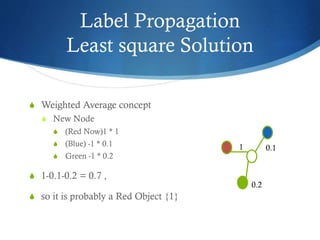

![Label Propagation

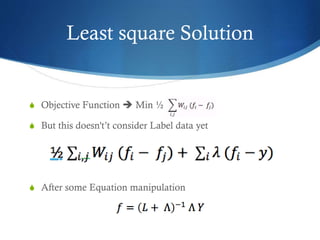

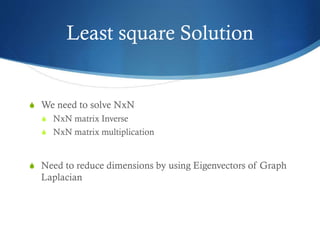

Least square Solution

S Here comes the first Equation , Lets define

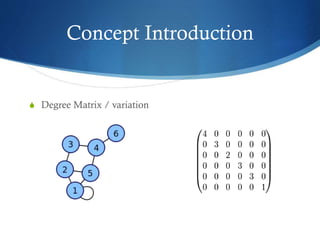

S Matrix W (NxN) , Similarity Between Objects

S Matrix D (NxN), degree of each Object

S Matrix L (Laplacian Matrix) = D – W

S Label vector F (Nx1), assignment of each object [-1,1] and

not {-1,1}

S Objective Function Min ½](https://image.slidesharecdn.com/semi-supervisedlearning-140304091308-phpapp02/85/Semi-supervised-learning-13-320.jpg)
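The definitions above can be made concrete with a small NumPy sketch. The slide's objective is cut off after "Min ½", so this example assumes the commonly used quadratic smoothness objective ½ Σᵢⱼ Wᵢⱼ (Fᵢ − Fⱼ)², which equals Fᵀ L F, minimised over the unlabeled entries of F while the labeled entries stay fixed. The Gaussian similarity kernel, the `sigma` parameter, the function names, and the toy data are all illustrative assumptions, not taken from the slides.

```python
import numpy as np

def gaussian_similarity(X, sigma=1.0):
    """Similarity matrix W (N x N) from a Gaussian kernel (an assumed choice)."""
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    W = np.exp(-sq_dists / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)  # no self-similarity
    return W

def propagate_labels(W, labeled_idx, labeled_values):
    """Minimise 1/2 * sum_ij W_ij (F_i - F_j)^2 with the labeled entries of F fixed.

    Assumed objective: the slide text is truncated, so this is the standard
    quadratic form, not necessarily the exact one on the slide.
    """
    N = W.shape[0]
    D = np.diag(W.sum(axis=1))  # degree matrix D
    L = D - W                   # Laplacian matrix L = D - W
    labeled_idx = np.asarray(labeled_idx)
    unlabeled_idx = np.setdiff1d(np.arange(N), labeled_idx)

    F = np.zeros(N)
    F[labeled_idx] = labeled_values

    # Zero gradient w.r.t. the unlabeled entries gives L_uu F_u = -L_ul F_l.
    L_uu = L[np.ix_(unlabeled_idx, unlabeled_idx)]
    L_ul = L[np.ix_(unlabeled_idx, labeled_idx)]
    F[unlabeled_idx] = np.linalg.solve(L_uu, -L_ul @ F[labeled_idx])
    return F

# Toy data: two well-separated groups, one labeled object in each.
X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
              [3.0, 3.0], [3.1, 3.0], [3.0, 3.1]])
W = gaussian_similarity(X, sigma=1.0)
F = propagate_labels(W, labeled_idx=[0, 3], labeled_values=[-1.0, 1.0])
print(np.round(F, 3))
```

Each unlabeled entry of F ends up as a similarity-weighted average of its neighbours, which is why the solution takes continuous values in [-1, 1] instead of hard labels in {-1, 1}. Taking the sign of F afterwards is one simple way to recover a hard class assignment.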