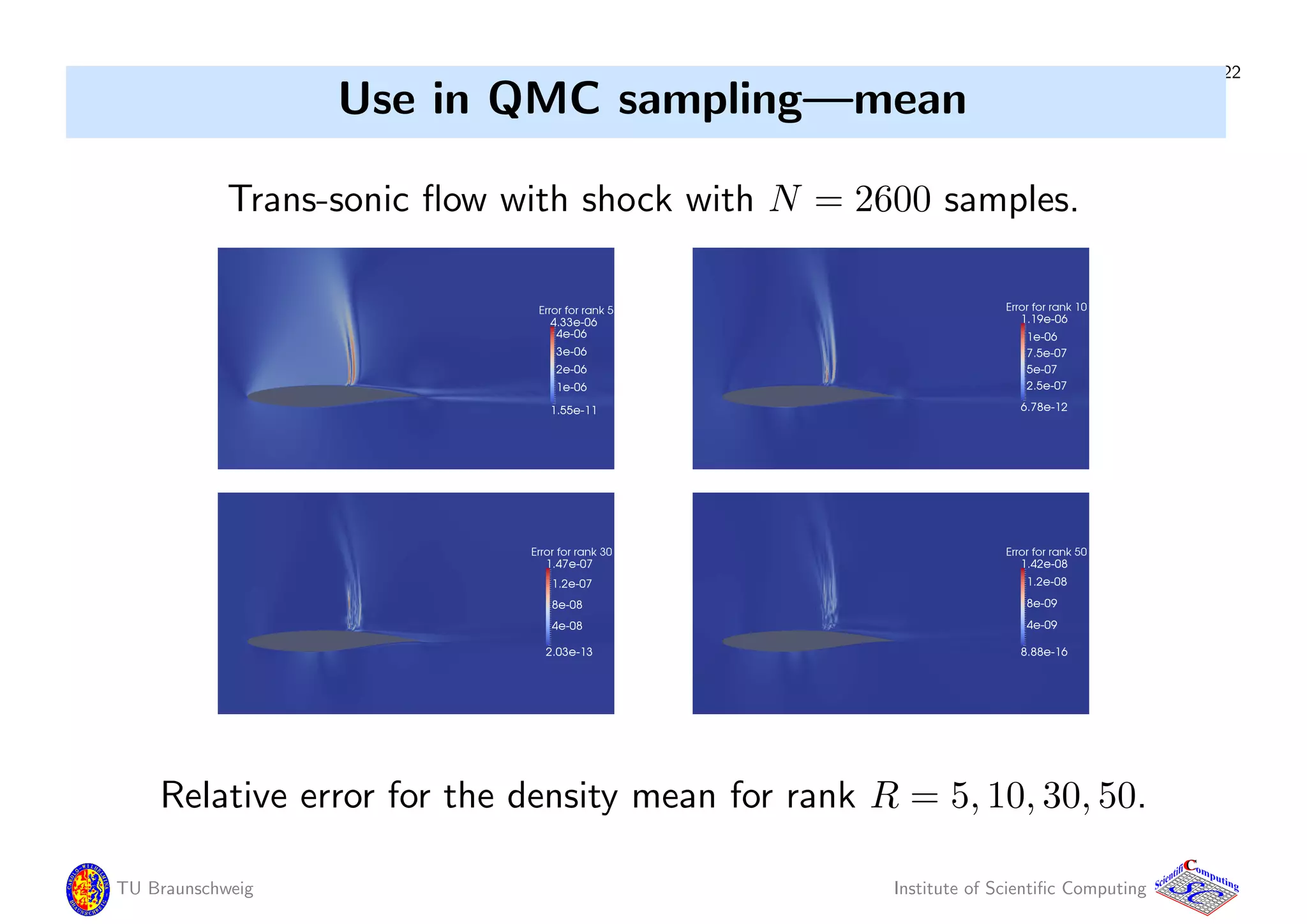

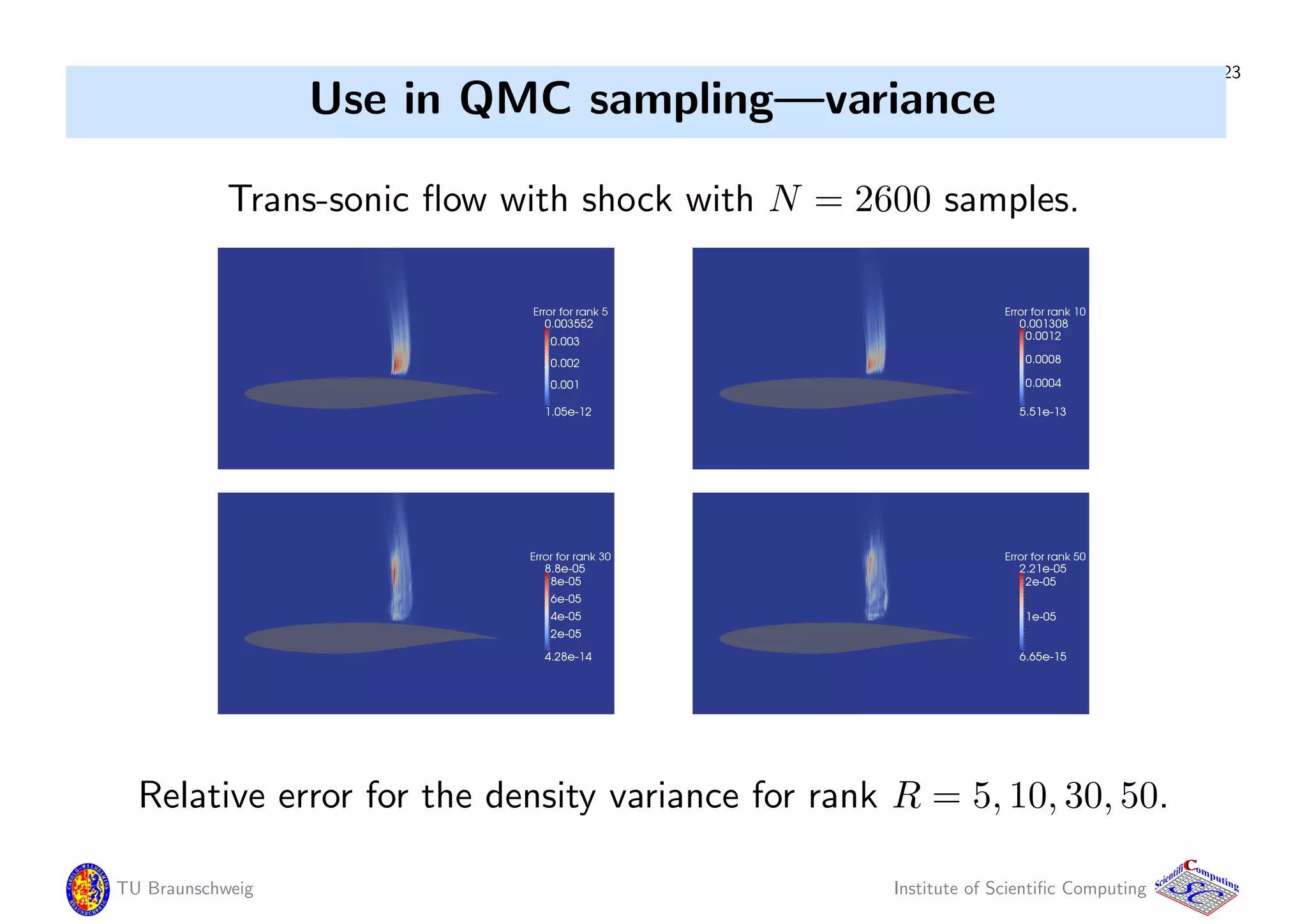

The document discusses the computation of functionals of stochastic partial differential equations (SPDEs) solutions using sampling and low-rank tensor approximations. It outlines methods for simulating expensive computations, parametric problems, and the use of emulation to approximate solutions efficiently. Several mathematical techniques, including tensor products, Karhunen-Loève expansions, and covariance operators, are employed to achieve model reduction and effective numerical solutions in the context of random fields.

$A[\omega](u_e(\omega)) = f(\omega)$ a.s. in $\omega \in \Omega$,

$u_e(\omega)$ is a $U$-valued random variable (RV).

Computing an approximation $u_M(\omega)$ to $u_e(\omega)$ via simulation is expensive, even for one value of $\omega$, let alone for

$$J_k \approx \sum_{n=1}^{N} \Psi_k(\omega_n, u_M(\omega_n))\, w_n.$$

Not all Ψk of interest are known from the outset.

TU Braunschweig Institute of Scientific Computing


$A[\omega](u_M(\omega)) = f(\omega)$ a.s. in $\omega \in \Omega$,

where $u_M(\omega) = \sum_{m=1}^{M} u_m(\omega)\, v_m \in S \otimes U_M$.


Computing the simulation

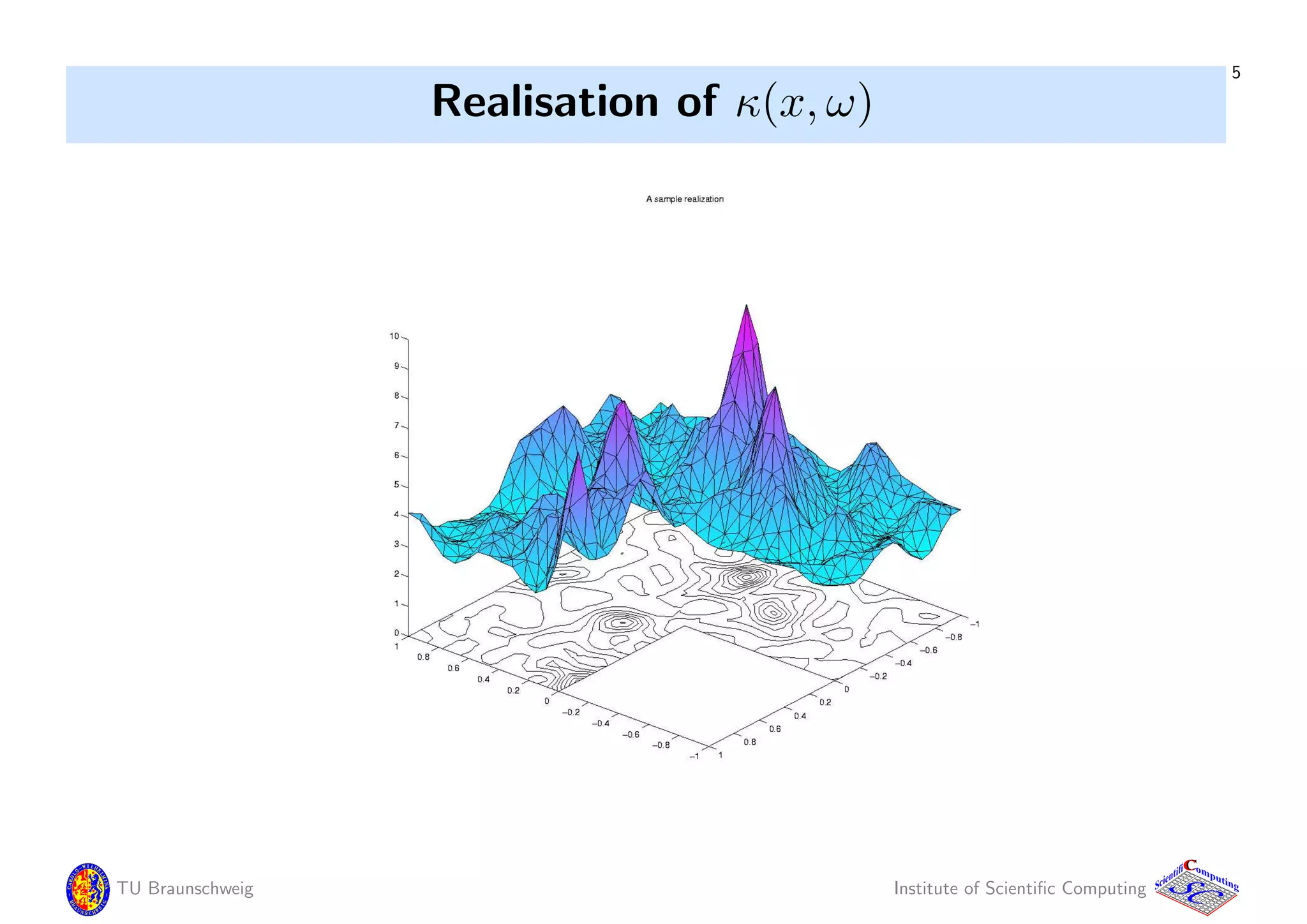

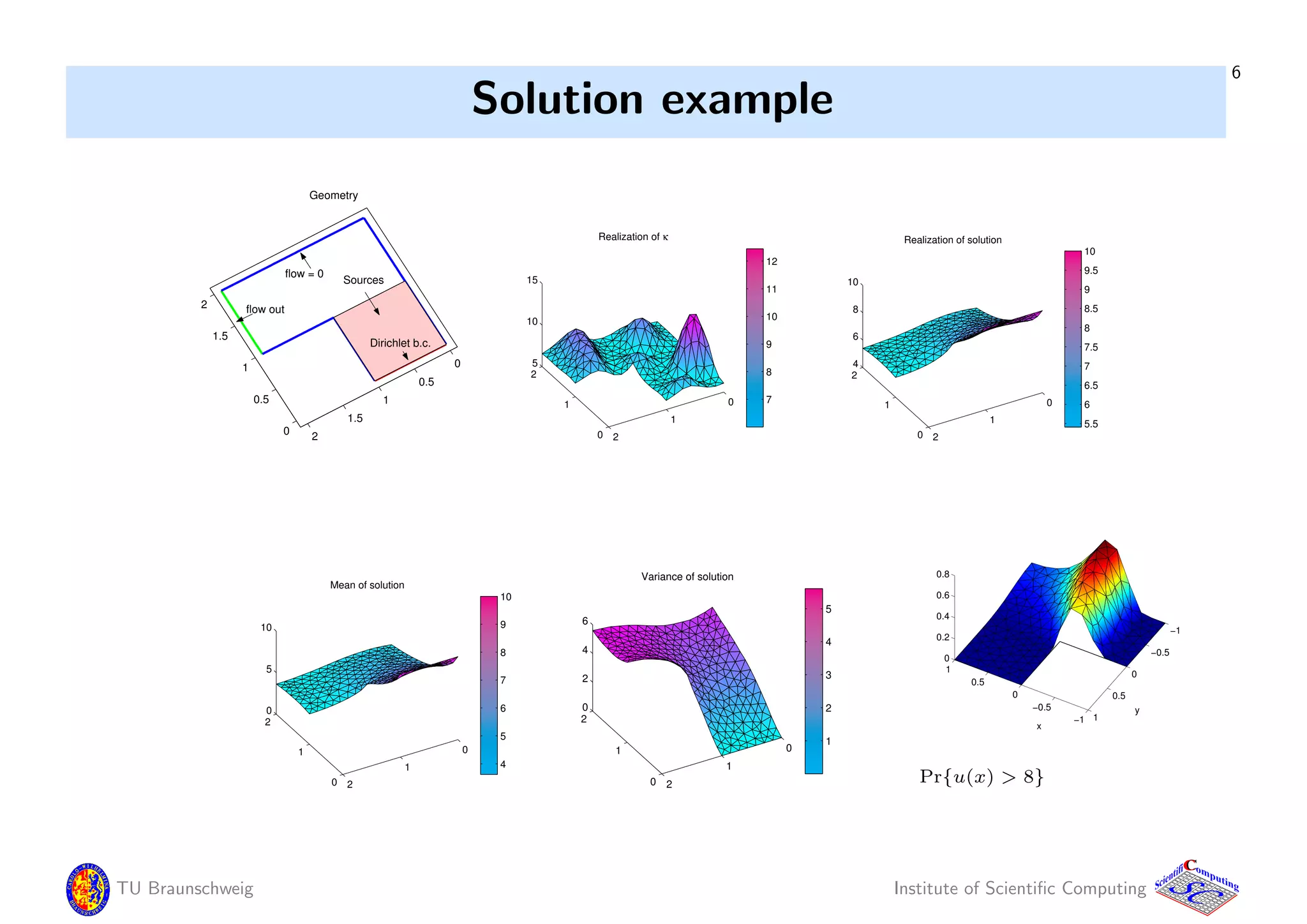

To simulate $u_M$ one needs samples of the random field (RF) $\kappa$, which depends on infinitely many random variables (RVs).

This has to be reduced / transformed, $\Xi : \Omega \to [0,1]^s$, to a finite number $s$ of RVs $\xi = (\xi_1, \dots, \xi_s)$, with $\mu = \Xi_* P$ the push-forward measure:

$$J_k = \int_{\Omega} \Psi_k(\omega, u_e(\omega))\, P(d\omega) \approx \int_{[0,1]^s} \hat{\Psi}_k(\xi, u_M(\xi))\, \mu(d\xi).$$

This is a product measure for independent RVs $(\xi_1, \dots, \xi_s)$.

Approximate expensive simulation uM(ξ) by cheaper emulation.

Both tasks are related by viewing $u_M : \xi \mapsto u_M(\xi)$, or $\kappa_1 : x \mapsto \kappa(x, \cdot)$ (RF indexed by $x$), or $\kappa_2 : \omega \mapsto \kappa(\cdot, \omega)$ (function-valued RV), as maps from a set of parameters into a vector space.


Functional approximation

Emulation: replace expensive simulation $u_M(\xi)$ by inexpensive approximation / emulation $u_E(\xi) \approx u_M(\xi)$ (alias response surfaces, proxy / surrogate models, etc.).

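Choose subspace $S_B \subset S$ with basis $\{X_\beta\}_{\beta=1}^{B}$, make ansatz for each $u_m(\xi) \approx \sum_{\beta} u_m^{\beta} X_\beta(\xi)$, giving

$$u_E(\xi) = \sum_{m,\beta} u_m^{\beta} X_\beta(\xi)\, v_m = \sum_{m,\beta} u_m^{\beta} X_\beta(\xi) \otimes v_m.$$

Set the coefficient matrix $\mathbf{U} = (u_m^{\beta})$, of size $M \times B$ (written in bold to keep it apart from the sample matrix $U$).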

Sampling, we generate matrix / tensor

$$U = [u_M(\xi_1), \dots, u_M(\xi_N)] = (u_m(\xi_n))_m^n, \quad \text{of size } M \times N.$$


Tensor product structure

Story does not end here, as one may choose $S = \bigotimes_k S_k$, approximated by $S_B = \bigotimes_{k=1}^{K} S_{B_k}$, with $S_{B_k} \subset S_k$.

Solution represented as a tensor of grade $K + 1$ in $W_{B,N} = \left(\bigotimes_{k=1}^{K} S_{B_k}\right) \otimes U_N$.

For higher grade tensor product structure, more reduction is possible, but that is a story for another talk; here we stay with $K = 1$.

With orthonormal $X_\beta$ one has

$$u_m^{\beta} = \int_{[0,1]^s} X_\beta(\xi)\, u_m(\xi)\, \mu(d\xi) \approx \sum_{n=1}^{N} w_n X_\beta(\xi_n)\, u_m(\xi_n).$$

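Let $W = \operatorname{diag}(w_n)$, of size $N \times N$, and $X = (X_\beta(\xi_n))$, of size $B \times N$; hence the coefficient matrix $\mathbf{U}$ ($M \times B$) follows from the sample matrix $U$ ($M \times N$) as $\mathbf{U} = U (W X^T)$. For $B = N$ this is just a basis change.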
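The quadrature projection above can be sketched numerically. The following is a minimal illustration, not the implementation used in the talk. It assumes a single parameter ($s = 1$) with uniform measure on $[0,1]$, shifted Legendre polynomials as the orthonormal basis $X_\beta$, and a Gauss-Legendre rule for the points $\xi_n$ and weights $w_n$. All function names and the toy sample functions are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre


def orthonormal_basis(xi, n_basis):
    """Matrix X = (X_beta(xi_n)) of shape (n_basis, n_points).

    Assumption: X_beta are shifted Legendre polynomials, orthonormal on
    [0, 1] with respect to the uniform measure.
    """
    t = 2.0 * np.atleast_1d(np.asarray(xi, dtype=float)) - 1.0
    vander = legendre.legvander(t, n_basis - 1)      # (n_points, n_basis)
    return (vander * np.sqrt(2 * np.arange(n_basis) + 1)).T


def gauss_rule_unit_interval(n_points):
    """Gauss-Legendre points and weights mapped from [-1, 1] to [0, 1]."""
    t, w = legendre.leggauss(n_points)
    return 0.5 * (t + 1.0), 0.5 * w


def projection_coefficients(samples, weights, X):
    """Coefficients (M x B) = samples (M x N) @ diag(weights) @ X^T."""
    return (samples * weights) @ X.T


# Toy samples (hypothetical): u_1(xi) = 1 + xi, u_2(xi) = xi^2, so M = 2.
xi, w = gauss_rule_unit_interval(4)                  # N = 4
samples = np.vstack([1.0 + xi, xi**2])               # sample matrix, M x N
X = orthonormal_basis(xi, 3)                         # B = 3
coeffs = projection_coefficients(samples, w, X)      # M x B

# Evaluate the emulator u_E(xi) = coeffs @ x(xi) at a new point.
u_E = coeffs @ orthonormal_basis(0.3, 3)[:, 0]
```

Because the toy samples are polynomials of degree at most 2 and the 4-point rule integrates the relevant products exactly, the emulator reproduces them at any point. For a general $u_M$ the quadrature sum only approximates the coefficients.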


Low-rank approximation

Focus on array of numbers $U := [u_m(\xi_n)]$, view as matrix / tensor:

$$\sum_{n=1}^{N} \sum_{m=1}^{M} U_{m,n}\, e_M^m \otimes e_N^n, \quad \text{with unit vectors } e_N^n \in \mathbb{R}^N,\ e_M^m \in \mathbb{R}^M.$$

The sum has $M \cdot N$ terms, the number of entries in $U$.

Rank-$R$ representation is approximation with $R$ terms

$$U = \sum_{n=1}^{N} \sum_{m=1}^{M} U_{m,n}\, e_M^m (e_N^n)^T \approx \sum_{\ell=1}^{R} a_\ell b_\ell^T = A B^T,$$

with $A = [a_1, \dots, a_R]$ of size $M \times R$ and $B = [b_1, \dots, b_R]$ of size $N \times R$.

It contains only $R \cdot (M + N) \ll M \cdot N$ numbers.

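We will use updated, truncated SVD. This gives for coefficients

$$\mathbf{U} = U (W X^T) \approx A B^T (W X^T) = A (X W B)^T =: A \tilde{B}^T,$$

where $\tilde{B} = X W B$ has one row per basis function $X_\beta$ and $R$ columns.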
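As an illustration of the rank-$R$ factorisation, the sketch below computes $A$ and $B$ with a plain truncated SVD from NumPy. It is only a stand-in under stated assumptions: the talk uses an updated SVD, which is not reproduced here, and the function name, the tolerance parameter and the test matrix are hypothetical.

```python
import numpy as np


def truncated_svd_factors(U, tol):
    """Return A (M x R), B (N x R) with U ~ A @ B.T.

    R is the smallest rank whose relative Frobenius error is <= tol.
    The singular values are split symmetrically between the factors.
    """
    W, sigma, Vt = np.linalg.svd(U, full_matrices=False)
    # tail[r] = Frobenius error made when only the first r terms are kept
    tail = np.sqrt(np.cumsum(sigma[::-1] ** 2))[::-1]
    R = max(1, int(np.sum(tail > tol * tail[0])))
    root = np.sqrt(sigma[:R])
    return W[:, :R] * root, Vt[:R].T * root


# Hypothetical smooth sample matrix U[m, n] = 1 / (1 + x_m + xi_n).
x = np.linspace(0.0, 1.0, 200)                      # M = 200
xi = np.linspace(0.0, 1.0, 100)                     # N = 100
U = 1.0 / (1.0 + x[:, None] + xi[None, :])

A, B = truncated_svd_factors(U, tol=1e-6)
R = A.shape[1]
rel_error = np.linalg.norm(U - A @ B.T) / np.linalg.norm(U)
stored_low_rank = R * (U.shape[0] + U.shape[1])     # R * (M + N) numbers
stored_dense = U.size                               # M * N numbers
```

Given quadrature weights `w` and a basis matrix `X`, the transformed factor would then follow as `X @ (w[:, None] * B)`, matching $\tilde{B} = X W B$.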


Emulation instead of simulation

Let $x(\xi) := [X_1(\xi), \dots, X_B(\xi)]^T$. Emulator and low-rank emulator are

$$u_E(\xi) = \mathbf{U}\, x(\xi), \quad \text{and} \quad u_L(\xi) := A \tilde{B}^T x(\xi).$$

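Computing $A$, $B$: start with $z$ samples $U_{z1} = [u_M(\xi_1), \dots, u_M(\xi_z)]$.

Compute truncated, error-controlled SVD:

$$U_{z1} \approx W \Sigma V^T,$$

with $U_{z1}$ of size $M \times z$, $W$ of size $M \times R$, $\Sigma$ of size $R \times R$ and $V$ of size $z \times R$ (here $W$ holds the left singular vectors and is not the weight matrix $\operatorname{diag}(w_n)$);

then set $A_1 = W \Sigma^{1/2}$, $B_1 = V \Sigma^{1/2}$ $\Rightarrow$ $\tilde{B}_1$.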

For each $n = z + 1, \dots, 2z$, emulate $u_L(\xi_n)$ and evaluate residuum $r_n := r(\xi_n) := f(\xi_n) - A[\xi_n](u_L(\xi_n))$. If $r_n$ is small, accept $u_A^n = u_L(\xi_n)$; otherwise solve for $u_M(\xi_n)$ and set $u_A^n = u_M(\xi_n)$.

Set $U_{z2} = [u_A^{z+1}, \dots, u_A^{2z}]$, compute updated SVD of $[U_{z1}, U_{z2}]$ $\Rightarrow$ $A_2$, $B_2$. Repeat for each batch of $z$ samples.
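The batch loop above can be sketched as follows. This is a rough illustration rather than the procedure from the talk, and it swaps in two simplifications that should be read as assumptions: the updated SVD is replaced by recomputing a plain truncated SVD of the stacked matrix, and $\tilde{B}$ is obtained by a least-squares fit in a shifted Legendre basis instead of the quadrature formula $X W B$. The toy operator $A[\xi] = K_0 + \xi I$ with a fixed right-hand side, the acceptance rule $\|r_n\| \le \texttt{res\_tol}\,\|f\|$, and all names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre


def basis(xi, n_basis):
    """x(xi): shifted Legendre polynomials, orthonormal on [0, 1] (assumed)."""
    t = 2.0 * np.atleast_1d(np.asarray(xi, dtype=float)) - 1.0
    scale = np.sqrt(2 * np.arange(n_basis) + 1)
    return (legendre.legvander(t, n_basis - 1) * scale).T


def truncated_factors(U, tol):
    """Plain truncated SVD, U ~ A @ B.T, relative Frobenius error <= tol."""
    W, sigma, Vt = np.linalg.svd(U, full_matrices=False)
    tail = np.sqrt(np.cumsum(sigma[::-1] ** 2))[::-1]
    R = max(1, int(np.sum(tail > tol * tail[0])))
    root = np.sqrt(sigma[:R])
    return W[:, :R] * root, Vt[:R].T * root


def batched_emulation(xis, z, operator, f, n_basis, svd_tol, res_tol):
    """Process samples in batches of z, solving only where emulation fails."""
    def solve(xi):
        return np.linalg.solve(operator(xi), f)

    seen = list(xis[:z])                       # first batch: always simulate
    A, B = truncated_factors(np.column_stack([solve(xi) for xi in seen]), svd_tol)
    n_solves = len(seen)
    for start in range(z, len(xis), z):
        # Assumption: least-squares fit replaces the quadrature form X W B.
        X = basis(seen, n_basis)                            # (n_basis, n_seen)
        B_tilde = np.linalg.lstsq(X.T, B, rcond=None)[0]    # (n_basis, R)
        batch = []
        for xi in xis[start:start + z]:
            u_L = A @ (B_tilde.T @ basis(xi, n_basis)[:, 0])
            residual = f - operator(xi) @ u_L
            if np.linalg.norm(residual) <= res_tol * np.linalg.norm(f):
                batch.append(u_L)              # accept the emulated value
            else:
                batch.append(solve(xi))        # fall back to simulation
                n_solves += 1
            seen.append(xi)
        # Recompute the factors from the old approximation plus the new batch.
        A, B = truncated_factors(np.column_stack([A @ B.T] + batch), svd_tol)
    return A, B, n_solves


# Toy problem (hypothetical): tridiagonal K0, operator K0 + xi * I, f = 1.
M = 30
K0 = 3.0 * np.eye(M) - np.eye(M, k=1) - np.eye(M, k=-1)
f = np.ones(M)


def operator(xi):
    return K0 + xi * np.eye(M)


xis = np.random.default_rng(0).random(40)
A, B, n_solves = batched_emulation(xis, z=10, operator=operator, f=f,
                                   n_basis=6, svd_tol=1e-8, res_tol=1e-4)
```

The returned `n_solves` counts how many full solves were needed; how many emulated values are accepted depends on the tolerance, the basis size and the smoothness of the parameter dependence.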


Emulator in integration

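To evaluate

$$J_k = \int_{\Omega} \Psi_k(\omega, u_e(\omega))\, P(d\omega) \approx \int_{[0,1]^s} \hat{\Psi}_k(\xi, u_M(\xi))\, \mu(d\xi),$$

we compute

$$J_k \approx \sum_{n=1}^{N} w_n\, \hat{\Psi}_k(\xi_n, u_L(\xi_n)).$$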

If we are lucky, we need far fewer than $N$ samples to find the low-rank representation $A$, $B$ for $u_L$.

This is cheap to compute from samples, and uses only little storage.

In the integral the integrand is cheap to evaluate, and the low-rank representation can be re-used if a new $(J_k, \Psi_k)$ has to be evaluated.
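The re-use of one low-rank representation for several functionals can be illustrated with a small sketch. It assumes $s = 1$, a Gauss-Legendre rule on $[0,1]$, a shifted Legendre basis, and a toy model $u_M(\xi) = a + \xi b$ chosen so that the integrals have closed forms. None of these choices come from the talk, and all names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre


def basis(xi, n_basis):
    """x(xi): shifted Legendre polynomials, orthonormal on [0, 1] (assumed)."""
    t = 2.0 * np.atleast_1d(np.asarray(xi, dtype=float)) - 1.0
    scale = np.sqrt(2 * np.arange(n_basis) + 1)
    return (legendre.legvander(t, n_basis - 1) * scale).T


# Quadrature points and weights on [0, 1] (assumption: 4-point Gauss rule).
t, w = legendre.leggauss(4)
xi, w = 0.5 * (t + 1.0), 0.5 * w

# Toy model (hypothetical): u_M(xi) = a + xi * b, sampled at the nodes.
a = np.array([1.0, 2.0, 0.0])
b = np.array([0.5, -1.0, 3.0])
U = a[:, None] + b[:, None] * xi[None, :]            # sample matrix, M x N

# Rank-2 factors from a plain SVD, then B_tilde = X W B.
Wl, sigma, Vt = np.linalg.svd(U, full_matrices=False)
root = np.sqrt(sigma[:2])
A, B = Wl[:, :2] * root, Vt[:2].T * root
X = basis(xi, 3)
B_tilde = X @ (w[:, None] * B)                       # n_basis x R


def u_L(x):
    """Low-rank emulator u_L(x) = A @ B_tilde.T @ x(x)."""
    return A @ (B_tilde.T @ basis(x, 3)[:, 0])


def integrate(psi):
    """J ~ sum_n w_n * psi(xi_n, u_L(xi_n)), re-using the same A, B_tilde."""
    return sum(wn * psi(xn, u_L(xn)) for xn, wn in zip(xi, w))


J_energy = integrate(lambda x, u: u @ u)             # functional 1
J_first = integrate(lambda x, u: u[0])               # functional 2, same factors
```

For this toy model the results can be checked against $\int_0^1 \|a + \xi b\|^2\, d\xi = \|a\|^2 + a \cdot b + \|b\|^2 / 3$ and $\int_0^1 (a_1 + \xi b_1)\, d\xi = a_1 + b_1 / 2$.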


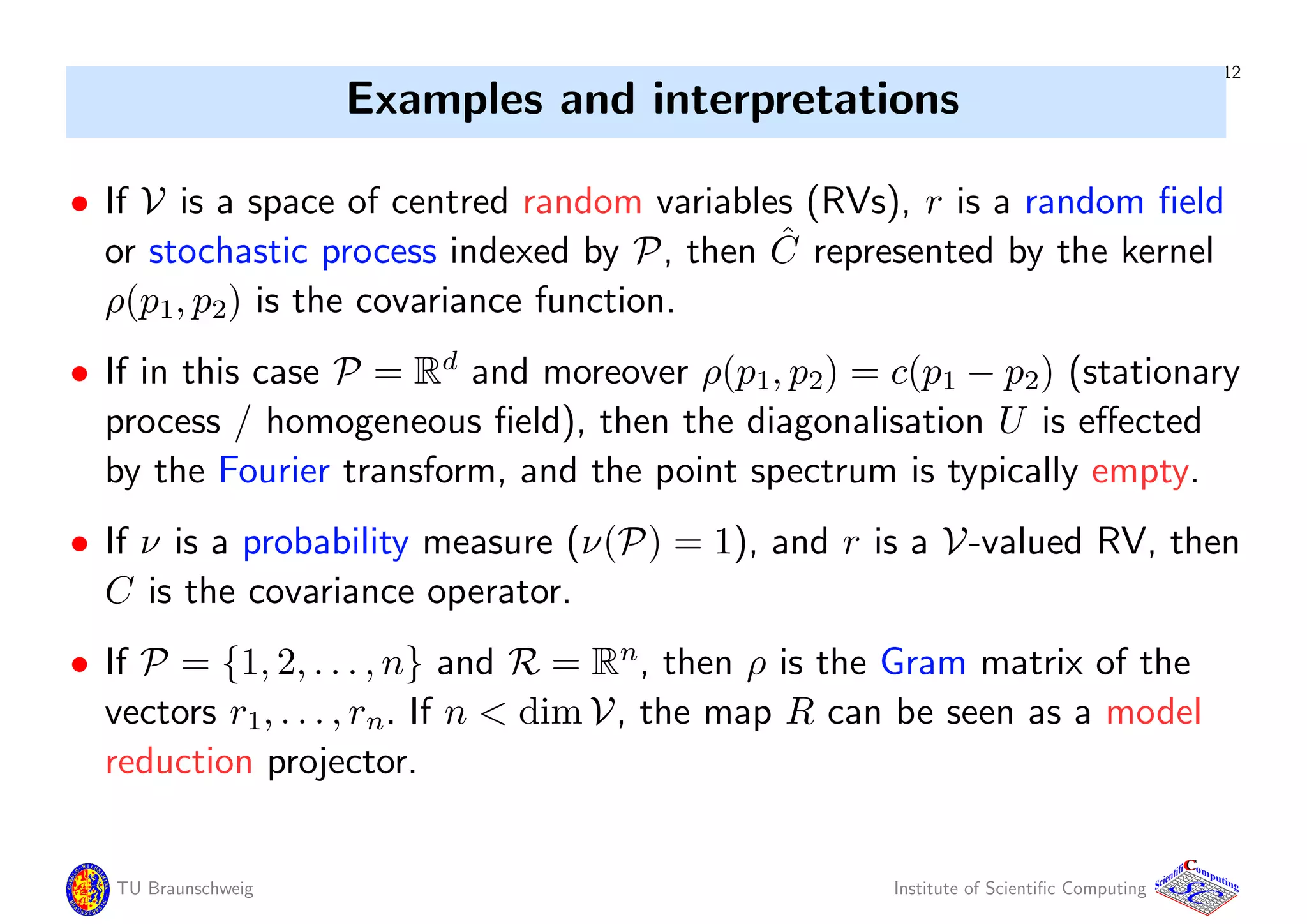

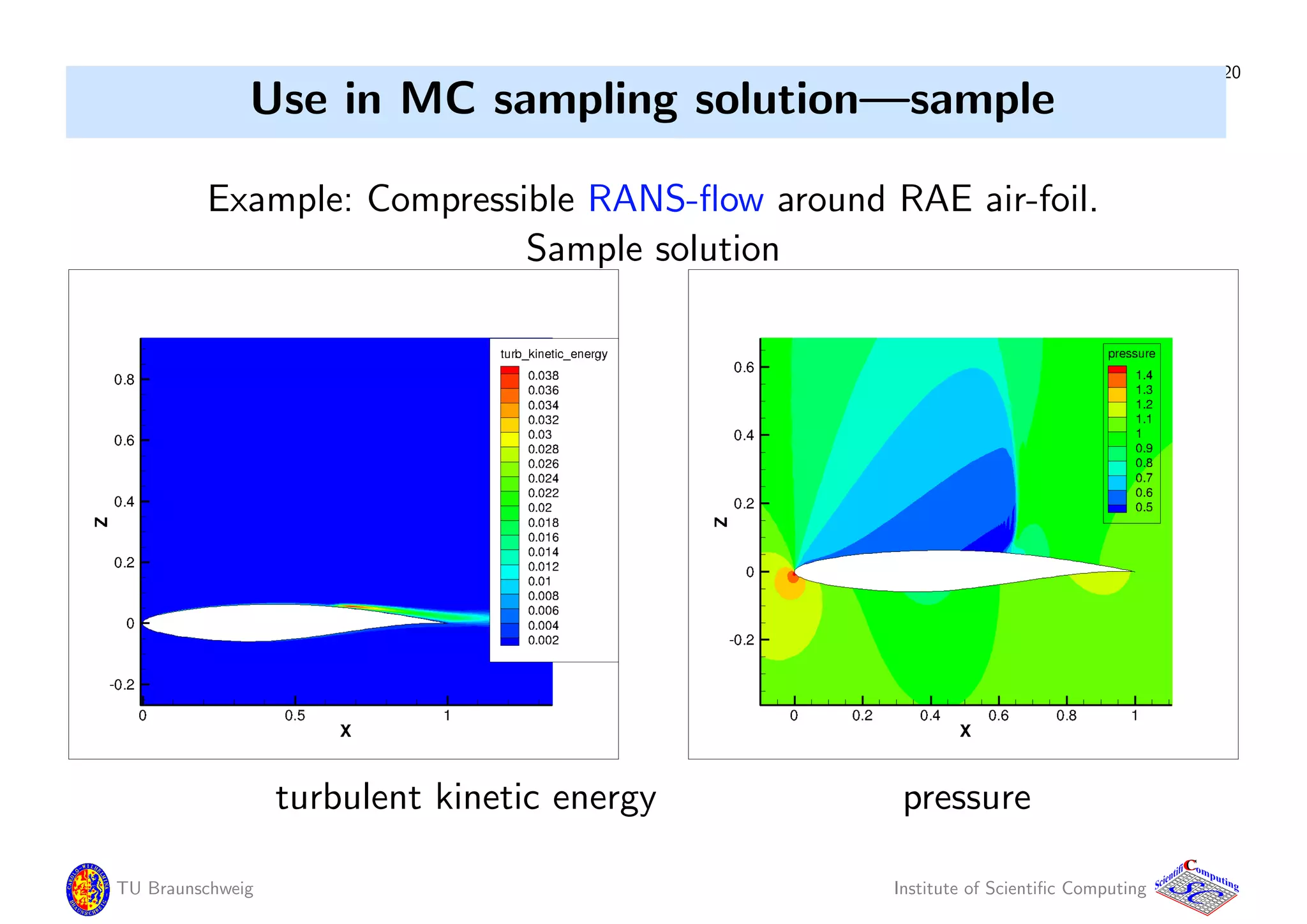


Use in MC sampling solution—storage

Inflow and air-foil shape uncertain.

Data compression achieved by updated SVD: made from 600 MC simulations, SVD is updated every 10 samples.

$M = 260{,}000$, $N = 600$.

Updated SVD: relative errors and memory requirements:

| rank $R$ | pressure | turb. kin. energy | memory [MB] |
|---|---|---|---|
| 10 | 1.9e-2 | 4.0e-3 | 21 |
| 20 | 1.4e-2 | 5.9e-3 | 42 |
| 50 | 5.3e-3 | 1.5e-4 | 104 |

Dense matrix $\in \mathbb{R}^{260000 \times 600}$ costs 1250 MB storage.
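The memory column appears consistent with the count $R \cdot (M + N)$ from the low-rank slide. The short check below assumes 8-byte floating point numbers and 1 MB = $10^6$ bytes; both are assumptions, not statements from the talk, and the function names are hypothetical.

```python
def low_rank_megabytes(M, N, R, bytes_per_number=8):
    """Storage of the factors A (M x R) and B (N x R) in MB (10^6 bytes)."""
    return R * (M + N) * bytes_per_number / 1e6


def dense_megabytes(M, N, bytes_per_number=8):
    """Storage of the full M x N sample matrix in MB (10^6 bytes)."""
    return M * N * bytes_per_number / 1e6


M, N = 260_000, 600
for R in (10, 20, 50):
    print(R, round(low_rank_megabytes(M, N, R)))
print("dense", round(dense_megabytes(M, N)))
```

Under these assumptions the factors take about 21, 42 and 104 MB for $R = 10, 20, 50$, and the dense matrix about 1248 MB, close to the 1250 MB quoted.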
