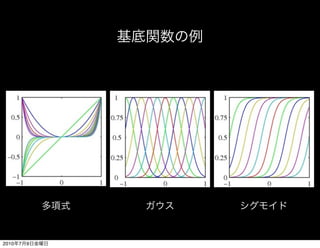

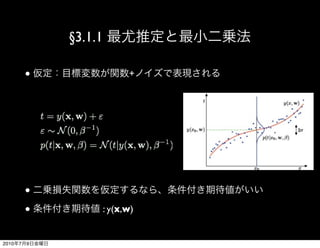

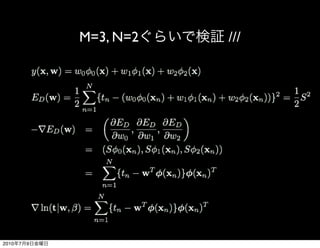

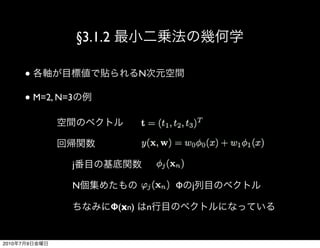

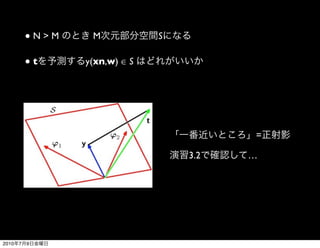

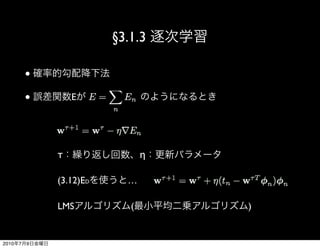

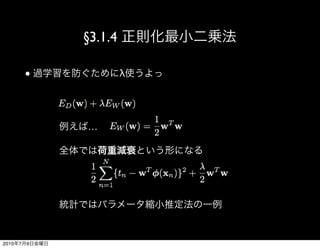

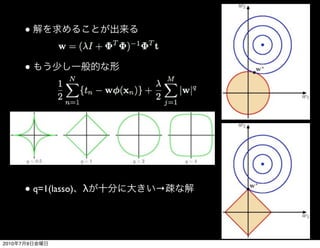

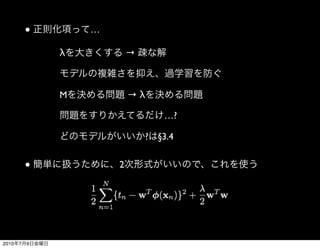

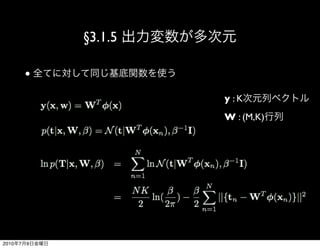

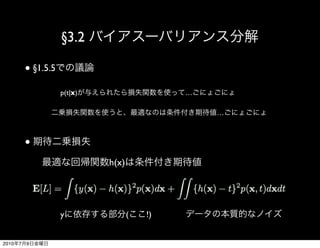

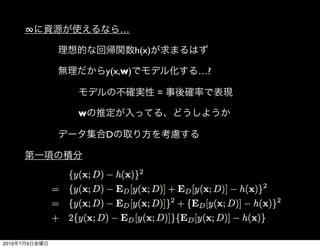

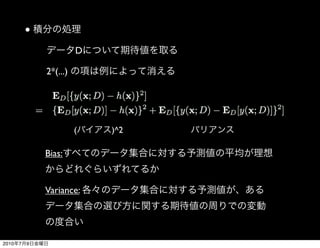

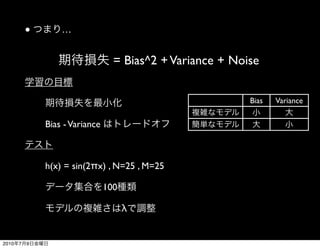

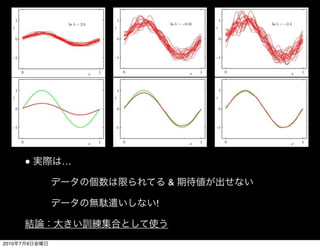

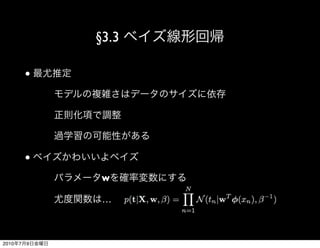

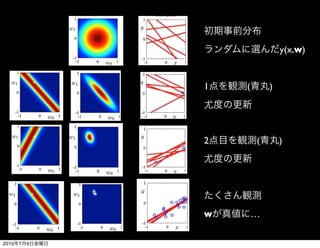

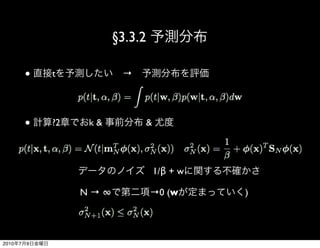

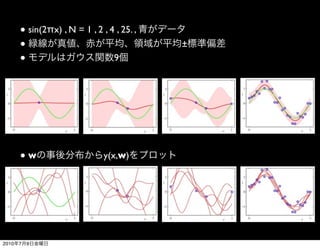

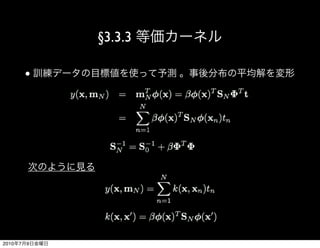

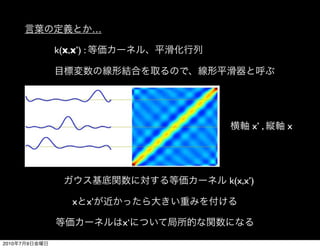

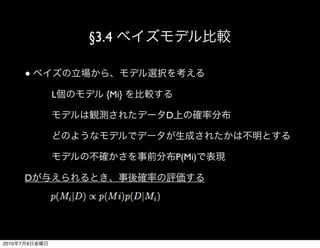

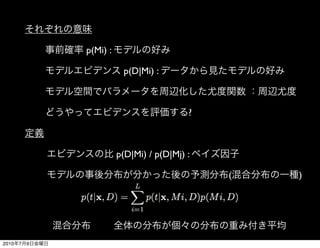

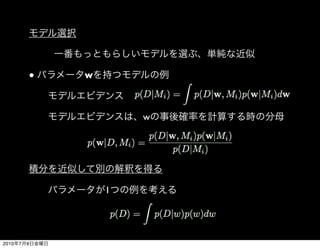

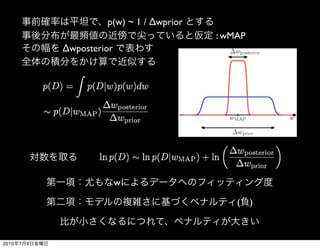

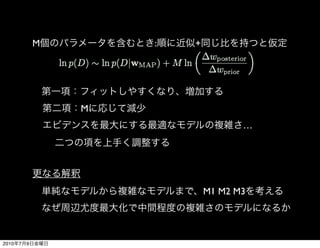

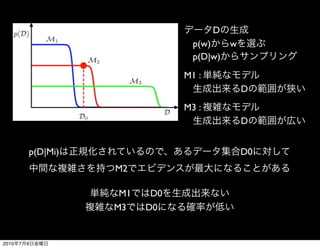

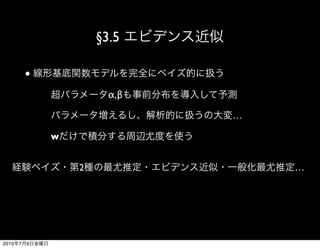

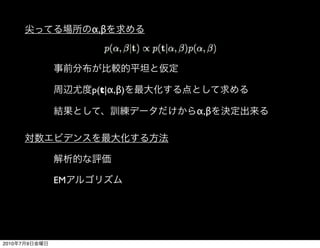

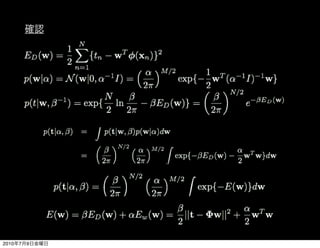

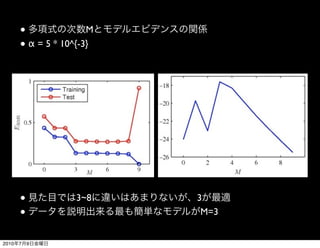

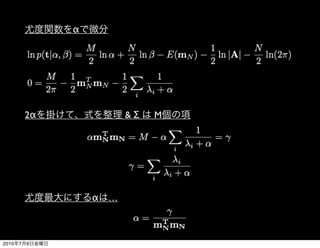

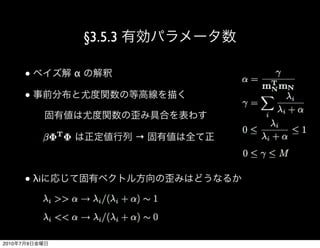

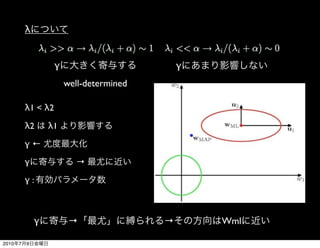

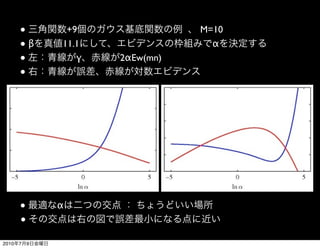

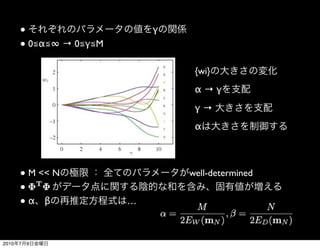

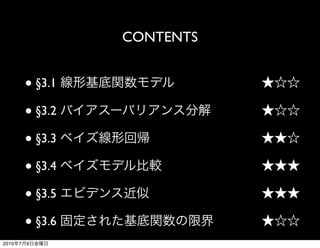

The slides cover linear regression and regularization. They introduce linear regression models built from basis functions and describe optimization methods such as gradient descent. They then turn to regularization, explaining how penalty terms such as those in lasso and ridge regression can improve generalization by reducing overfitting. Next comes model selection based on the model evidence, including Bayesian model averaging, which averages over models instead of committing to a single one. Finally, the slides apply empirical Bayes to estimate the hyperparameters α and β by maximizing the marginal likelihood.
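As a rough illustration of the pipeline summarized above, the following sketch fits a basis-function model with a ridge penalty and then re-estimates α and β by iterating the standard evidence-approximation updates. It is not taken from the slides: the function names (`gaussian_design_matrix`, `ridge_fit`, `evidence_fit`), the choice of Gaussian basis functions, and the default starting values are all assumptions made for the example.

```python
import numpy as np


def gaussian_design_matrix(x, centers, width):
    """Design matrix: a bias column followed by Gaussian basis functions.

    The Gaussian basis is an illustrative choice, not prescribed by the slides.
    """
    x = np.asarray(x, dtype=float)[:, None]
    centers = np.asarray(centers, dtype=float)[None, :]
    phi = np.exp(-0.5 * ((x - centers) / width) ** 2)
    return np.hstack([np.ones((x.shape[0], 1)), phi])


def ridge_fit(Phi, t, lam):
    """Regularized least squares: w = (lam * I + Phi^T Phi)^{-1} Phi^T t."""
    M = Phi.shape[1]
    return np.linalg.solve(lam * np.eye(M) + Phi.T @ Phi, Phi.T @ t)


def evidence_fit(Phi, t, alpha=1.0, beta=1.0, n_iter=100, tol=1e-6):
    """Re-estimate alpha and beta by maximizing the marginal likelihood.

    Iterates the fixed-point updates
        gamma = sum_i lam_i / (alpha + lam_i),
        alpha = gamma / (m^T m),
        beta  = (N - gamma) / sum_n (t_n - m^T phi(x_n))^2,
    where lam_i are the eigenvalues of beta * Phi^T Phi and m is the
    posterior mean of the weights.
    """
    N, M = Phi.shape
    gram = Phi.T @ Phi
    # Eigenvalues of Phi^T Phi; clip tiny negative values from round-off.
    eig0 = np.clip(np.linalg.eigvalsh(gram), 0.0, None)
    for _ in range(n_iter):
        m = beta * np.linalg.solve(alpha * np.eye(M) + beta * gram, Phi.T @ t)
        lam = beta * eig0
        gamma = np.sum(lam / (alpha + lam))
        alpha_new = gamma / (m @ m)
        beta_new = (N - gamma) / np.sum((t - Phi @ m) ** 2)
        converged = (abs(alpha_new - alpha) < tol * alpha
                     and abs(beta_new - beta) < tol * beta)
        alpha, beta = alpha_new, beta_new
        if converged:
            break
    # Posterior mean under the final hyperparameters.
    m = beta * np.linalg.solve(alpha * np.eye(M) + beta * gram, Phi.T @ t)
    return alpha, beta, m


if __name__ == "__main__":
    # Synthetic data (an assumption for the demo): noisy samples of sin(2*pi*x).
    rng = np.random.default_rng(0)
    x = np.linspace(0.0, 1.0, 30)
    t = np.sin(2 * np.pi * x) + 0.2 * rng.standard_normal(x.shape)
    Phi = gaussian_design_matrix(x, np.linspace(0.0, 1.0, 9), 0.1)
    alpha, beta, m = evidence_fit(Phi, t)
    print("alpha:", alpha, "beta:", beta)
```

The posterior mean returned by `evidence_fit` coincides with the ridge solution for the penalty λ = α / β, which is one way to see how empirical Bayes picks the regularization strength from the data instead of a held-out set.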

![Introduction slide: training data {x_n}, {t_n}; input x and target t; t = y(x) and p(t | x); E_t[t | x] (see §1.5.5); dated 2010-07-09](https://image.slidesharecdn.com/prmlsec32-100714025820-phpapp01/85/Prml-sec3-3-320.jpg)