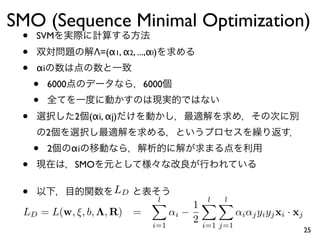

The document describes the support vector machine (SVM) algorithm for classification. It discusses how SVM finds the optimal separating hyperplane between two classes by maximizing the margin between them. It introduces the concepts of support vectors, Lagrange multipliers, and kernels. The sequential minimal optimization (SMO) algorithm is also summarized, which breaks the quadratic optimization problem of SVM training into smaller subproblems to optimize two Lagrange multipliers at a time.

![(2)

• w=w0, b=b0

L(w, b, Λ)

l

∂L(w, b, Λ)

= w0 − αi yi xi = 0

∂w

w=w0

l

i=1 (2)

∂L(w, b, Λ)

= − αi yi = 0

∂b

b=b0 i=1

l

l

w0 = αi yi xi , αi yi = 0

i=1 i=1

• w=w0, b=b0

l

1

L(w0 , b0 , Λ) = w0 · w0 − αi [yi (xi · w0 + b0 ) − 1]

2 i=1

l

l l

1

= αi − αi αj yi yj xi · xj

i=1

2 i=1 j=1

• w b

Λ 16](https://image.slidesharecdn.com/datamining6thsvm-101226214920-phpapp02/85/Datamining-6th-svm-16-320.jpg)

![SVM

• l

w, b

αi yi = 0, αi ≥ 0

i=1 (3)

l

l l

1

L(w0 , b0 , Λ) = αi − αi αj yi yj xi · xj

i=1

2 i=1 j=1

Λ

• SVM

• w0 Λ

l

• (2) ( w0 = i=1 αi yi xi )

• (2) αi≠0 xi w KKKT

• KKT : αi [yi (xi · w0 + b0 ) − 1] = 0

17](https://image.slidesharecdn.com/datamining6thsvm-101226214920-phpapp02/85/Datamining-6th-svm-17-320.jpg)

![(1)

•

Λ = (α1 , . . . , αl ), R = (r1 , . . . , rl )

L

L(w, ξ, b, Λ, R)

l

l

l

1

= w·w+C ξi − αi [yi (xi · w + b) − 1 + ξi ] − ri ξi

2 i=1 i=1 i=1

w0 , b0 , ξi L

0

w, b, ξi KKT

l

∂L(w, ξ, b, Λ, R)

= w0 − α i y i xi = 0

∂w w=w0 i=0

l

∂L(w, ξ, b, Λ, R)

= − αi yi = 0

∂b

b=b0 i=0

∂L(w, ξ, b, Λ, R)

0 = C − αi − ri = 0

∂ξi ξ=ξ 22

i](https://image.slidesharecdn.com/datamining6thsvm-101226214920-phpapp02/85/Datamining-6th-svm-22-320.jpg)