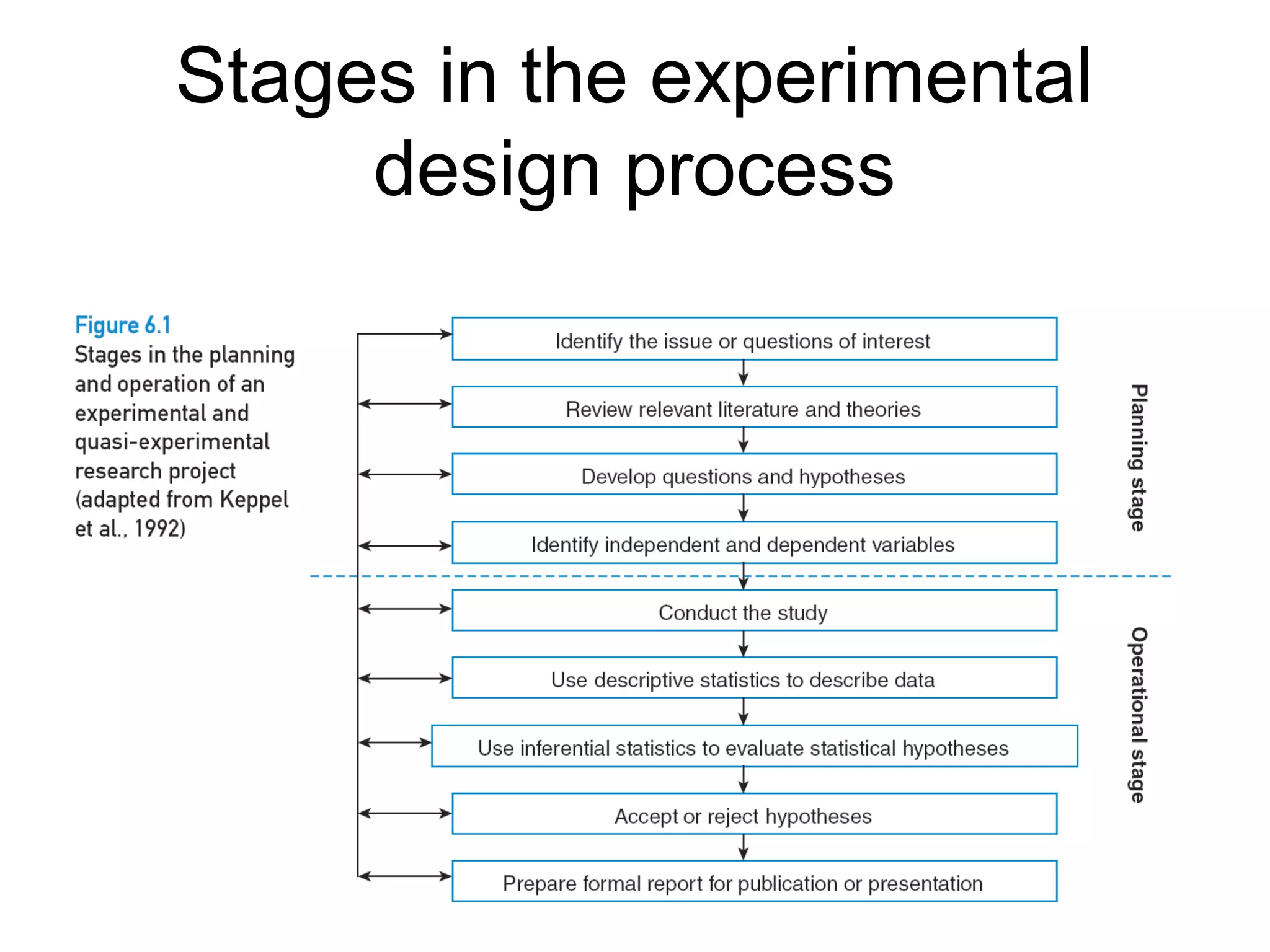

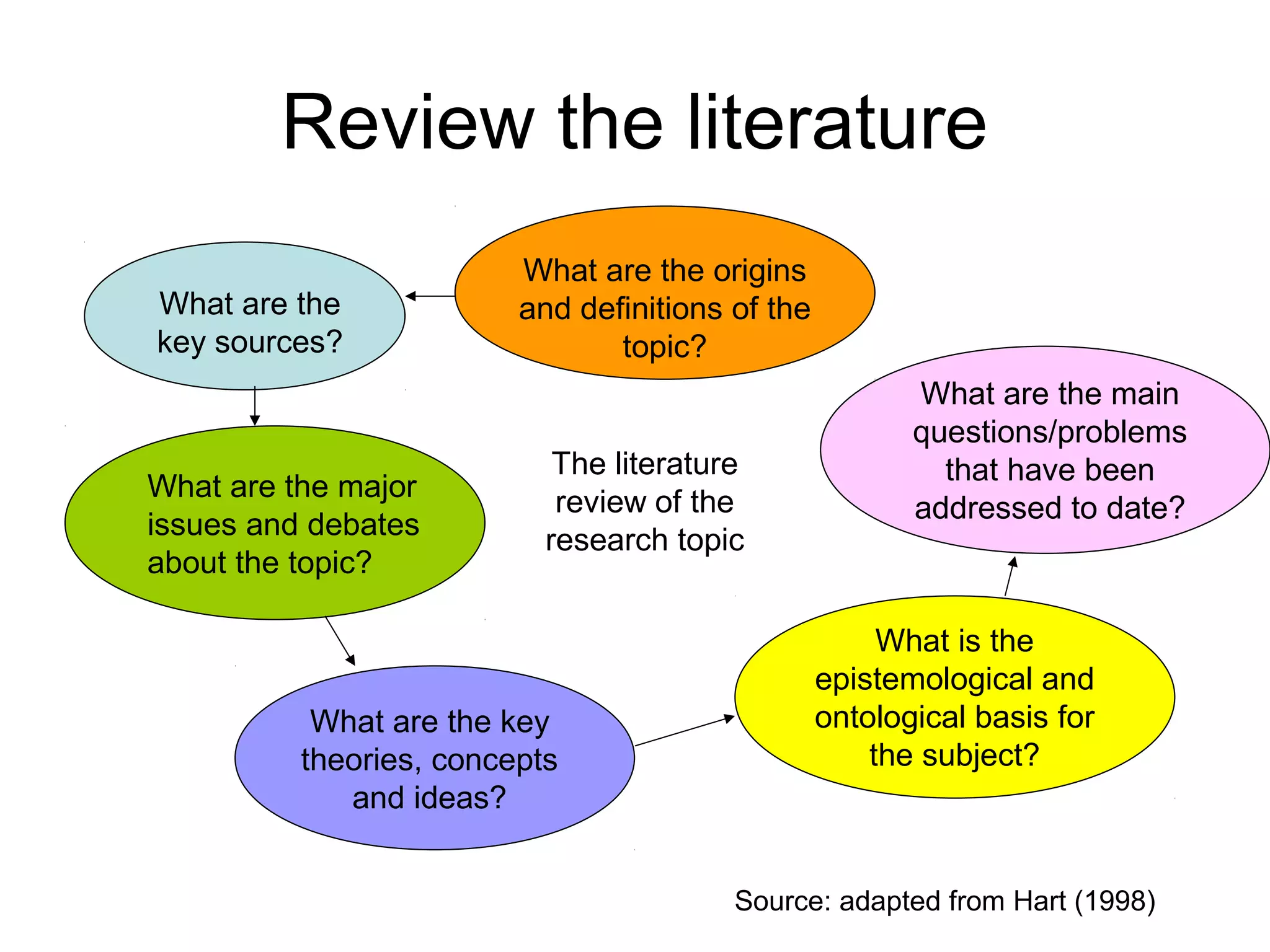

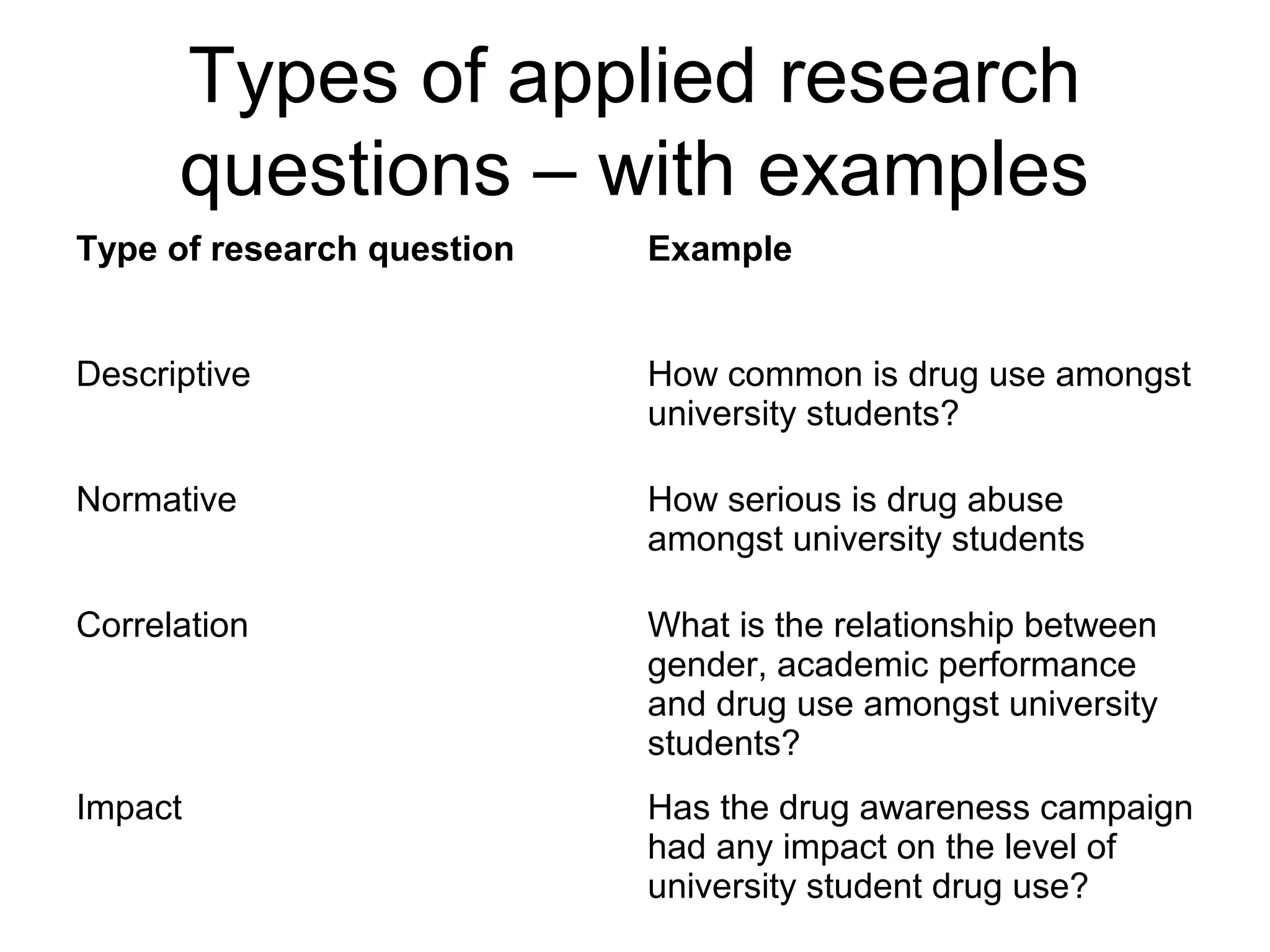

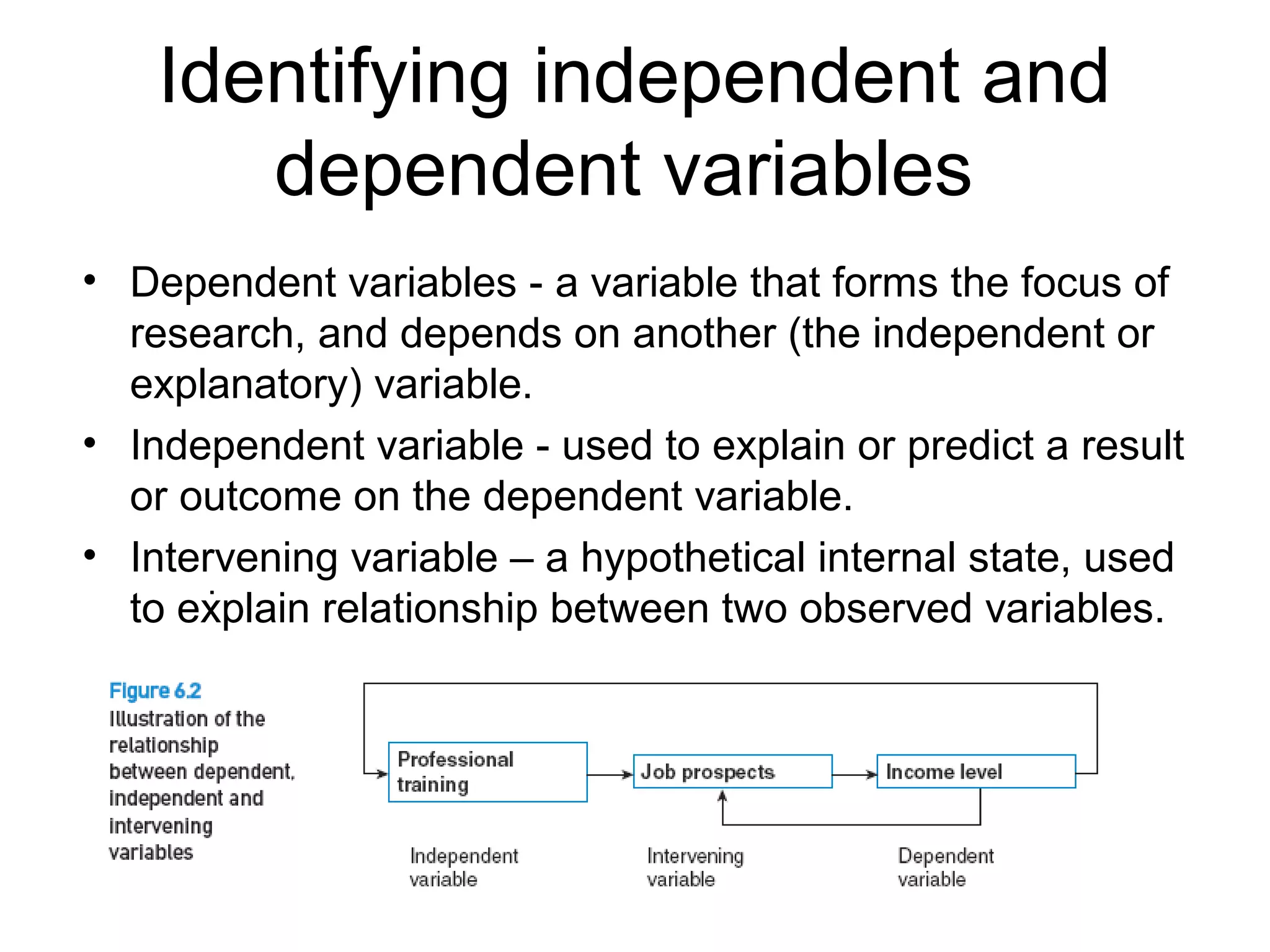

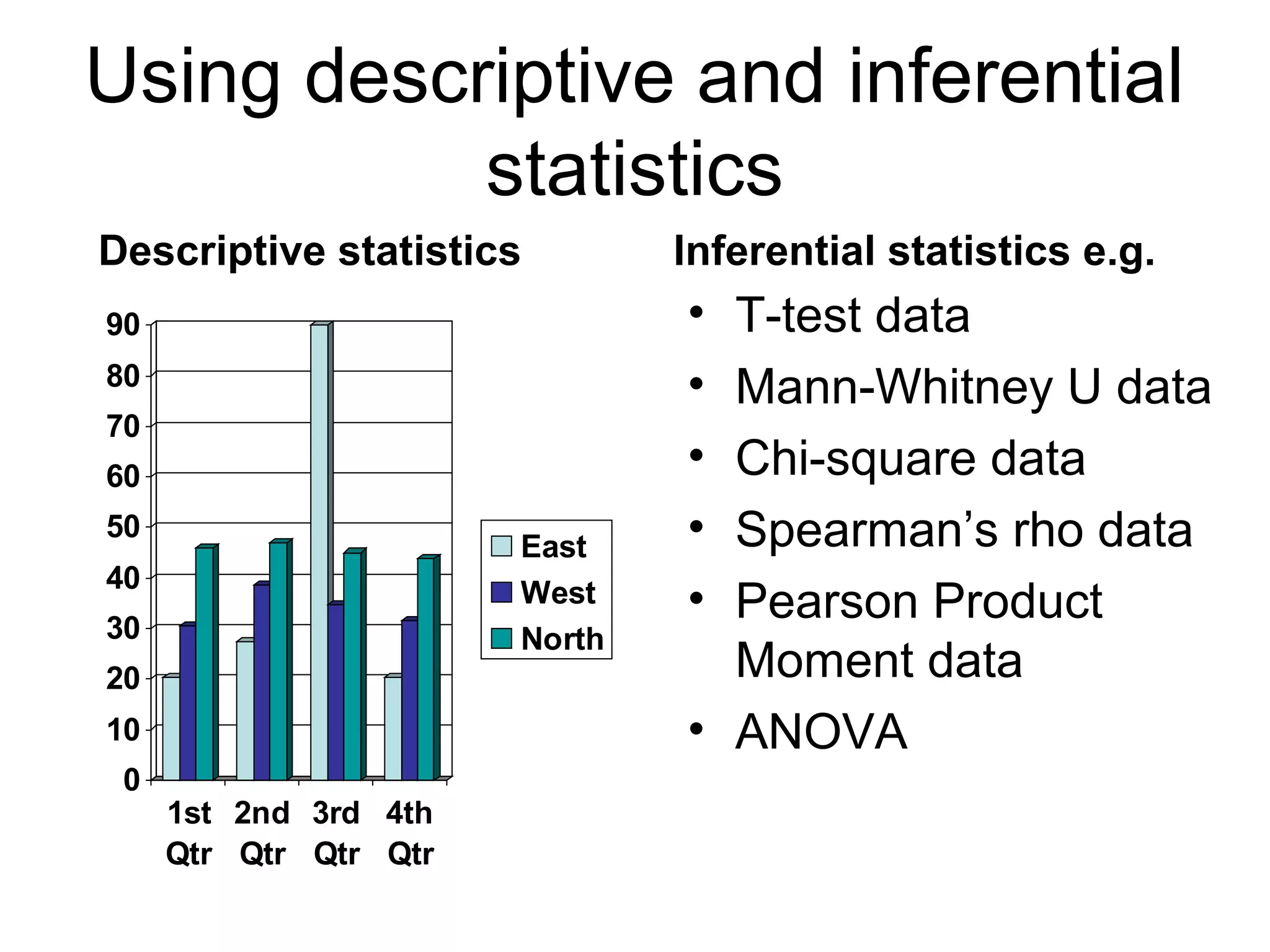

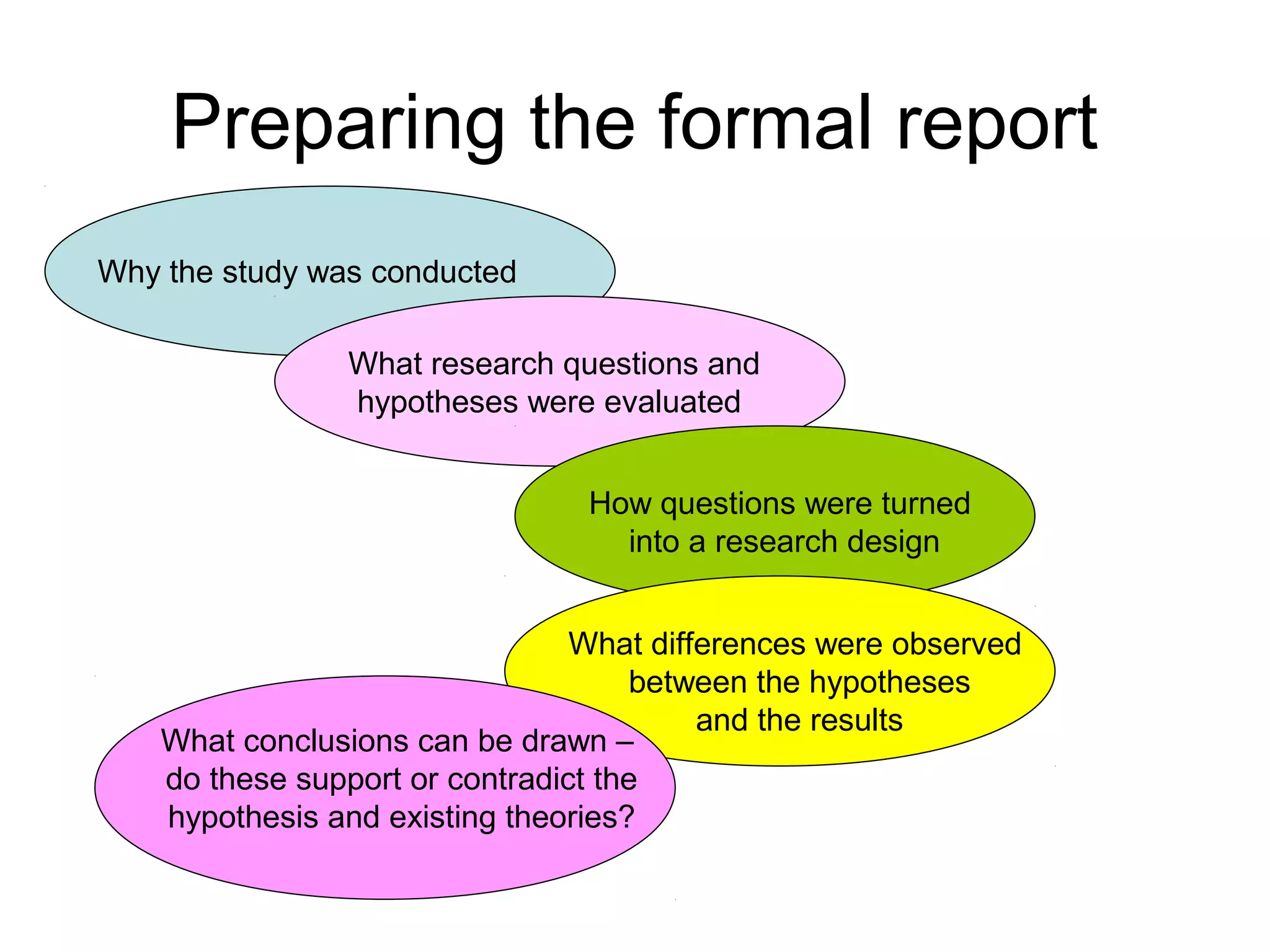

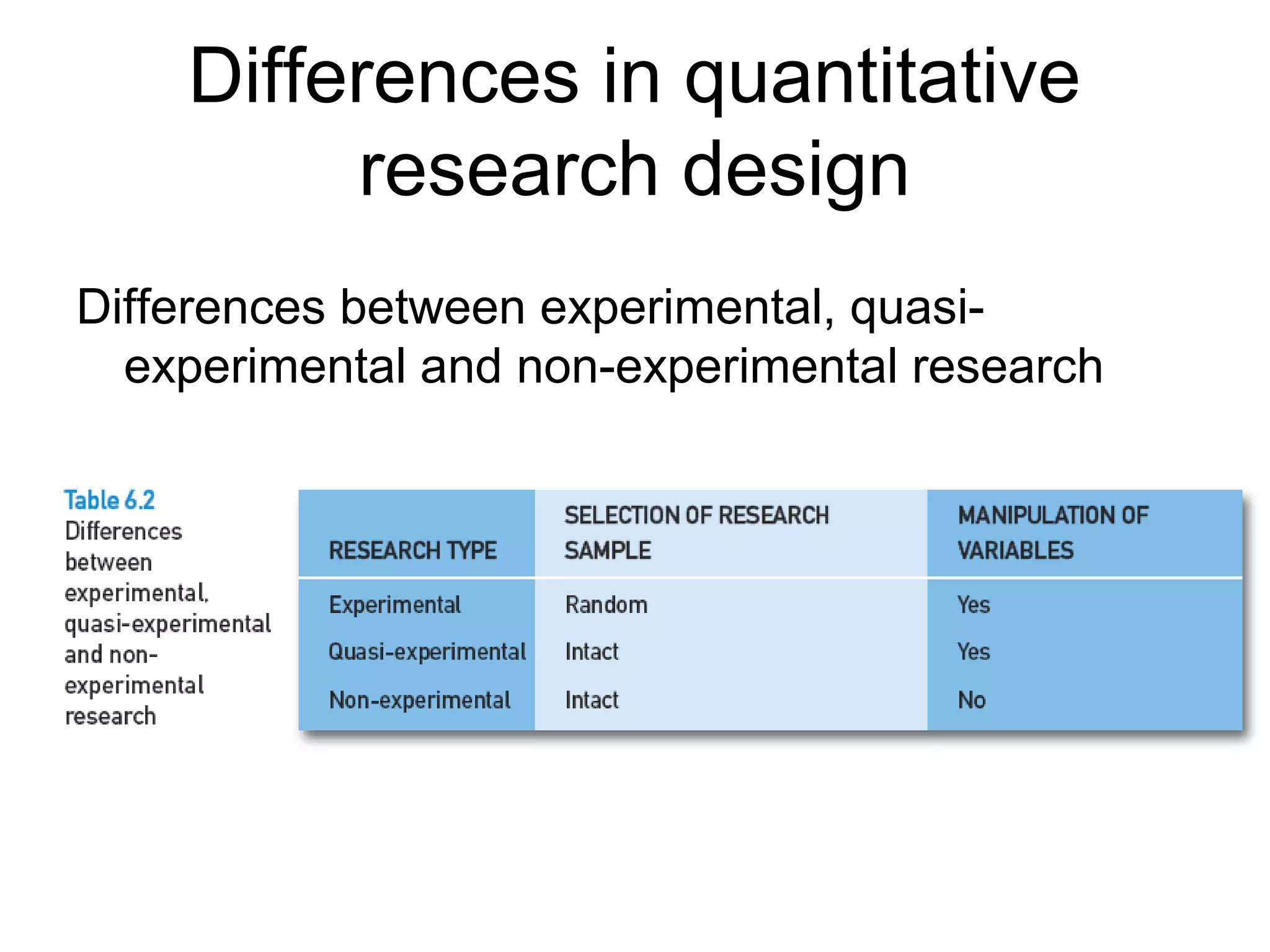

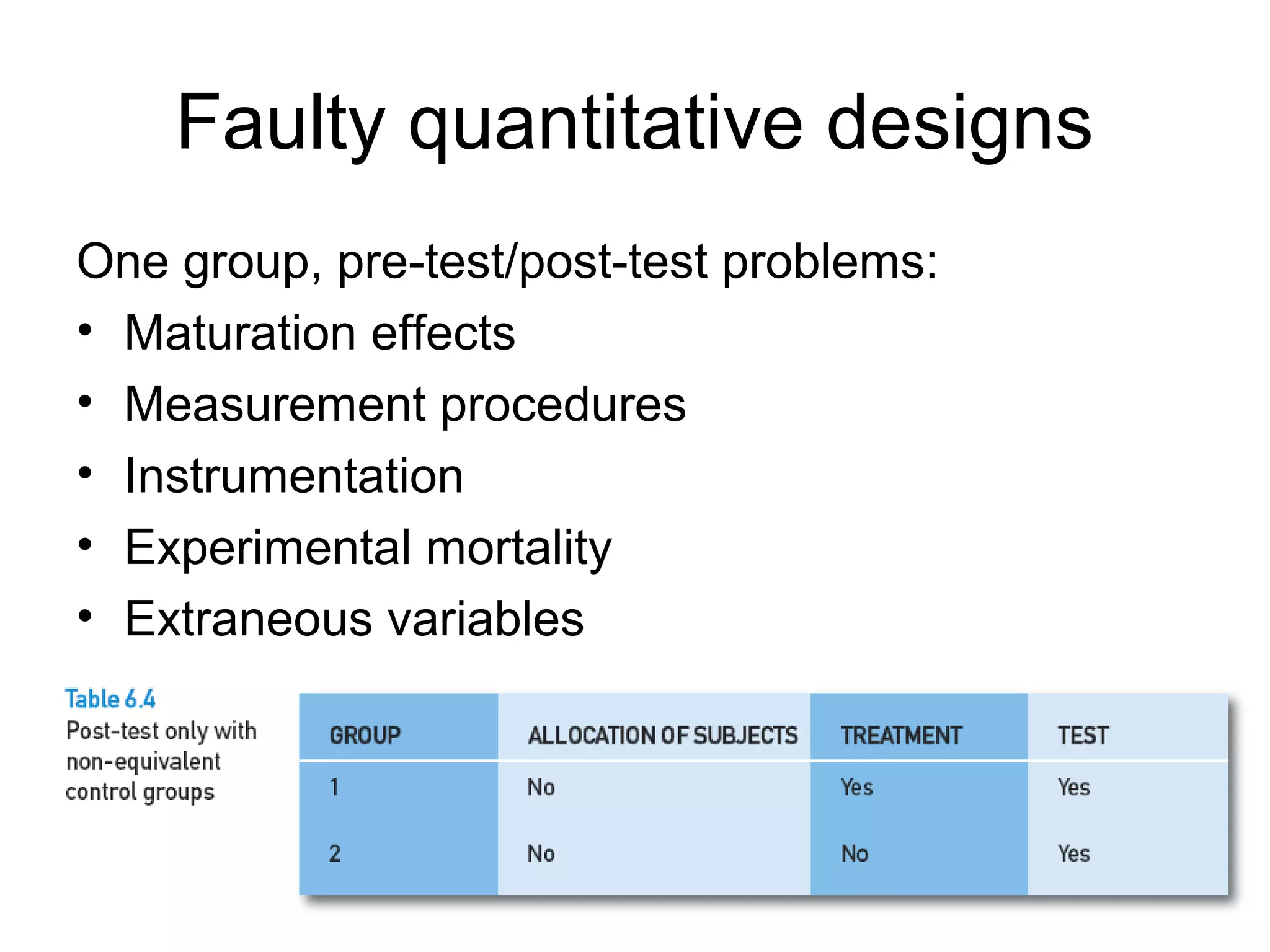

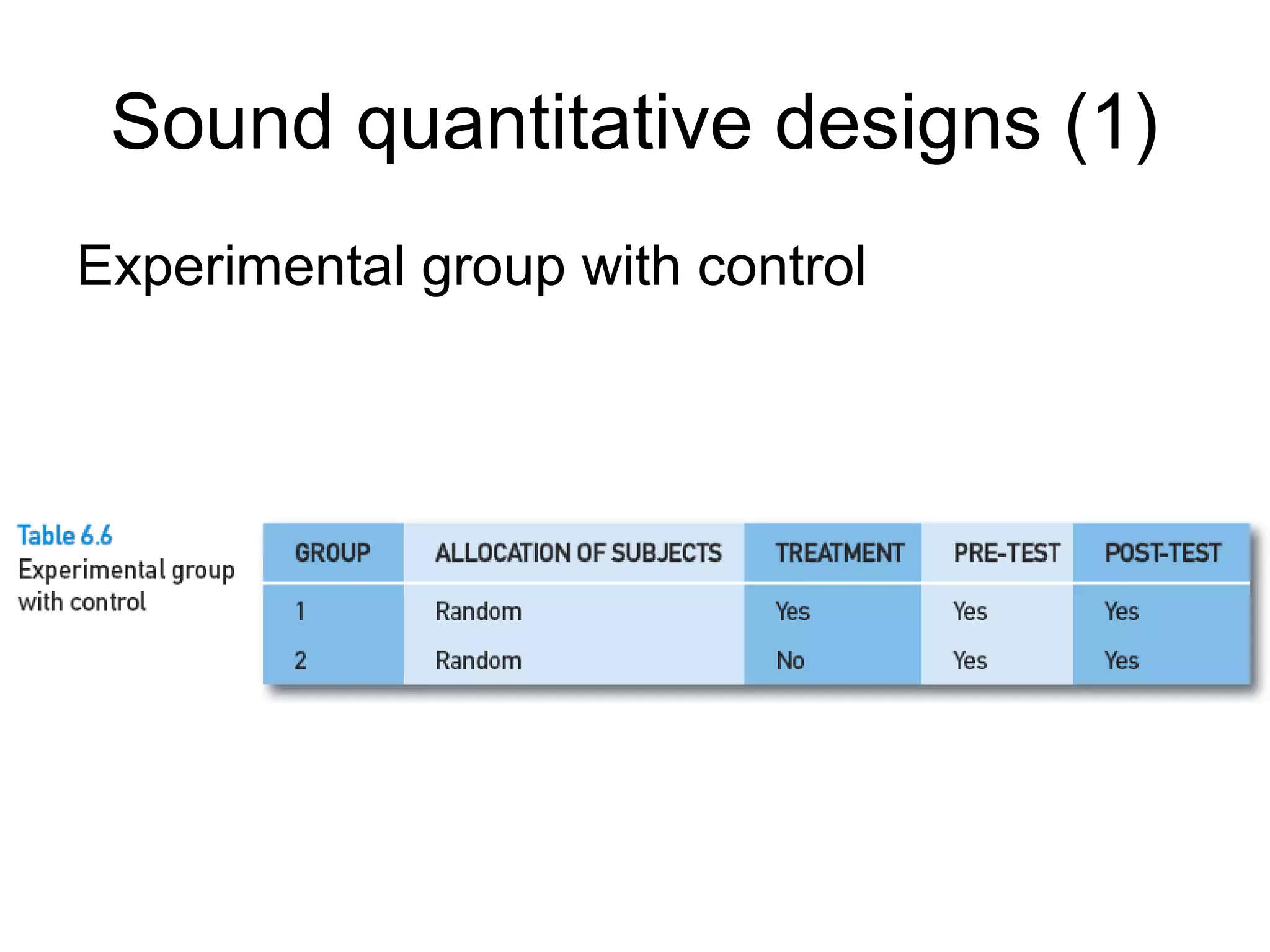

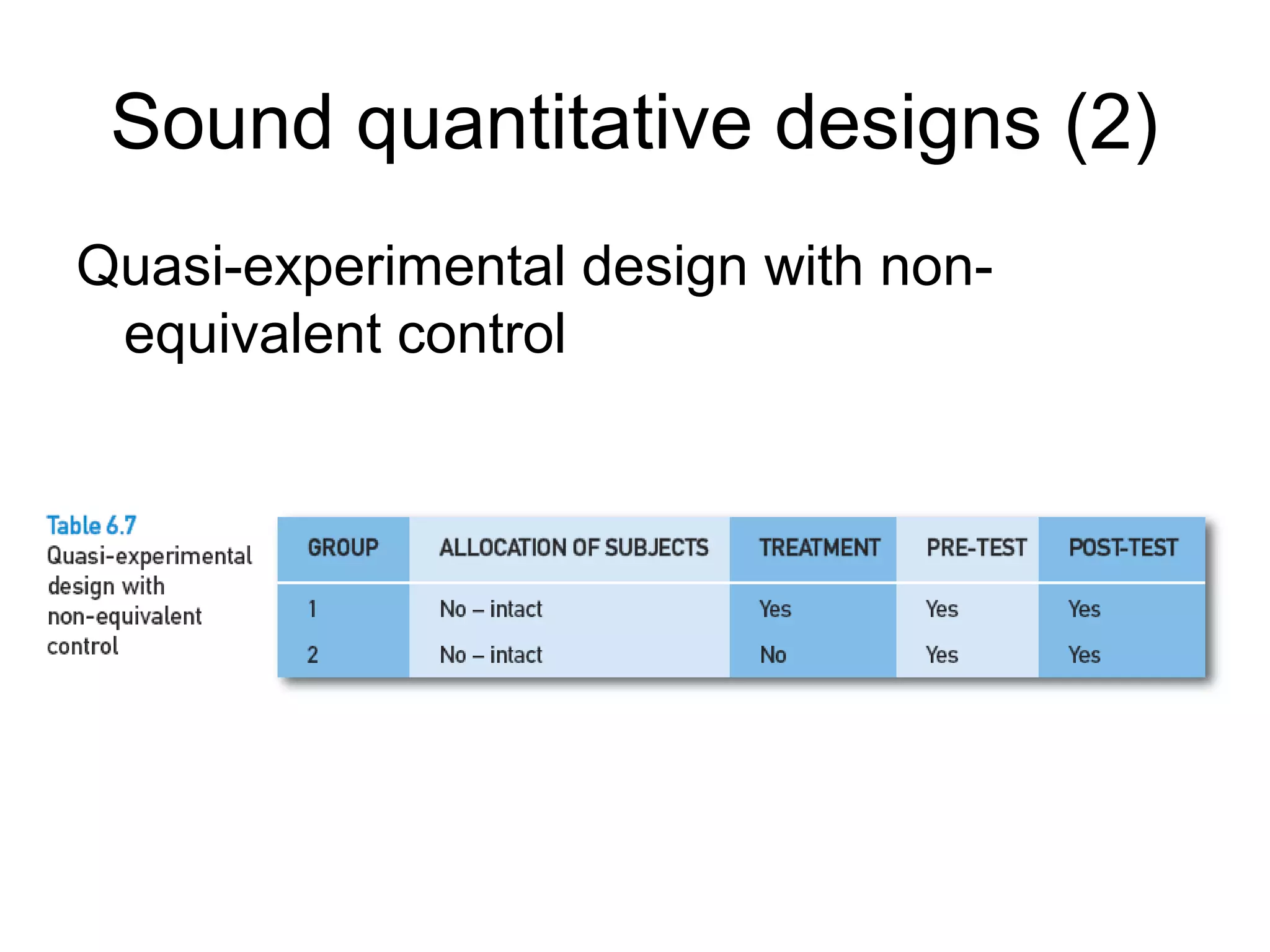

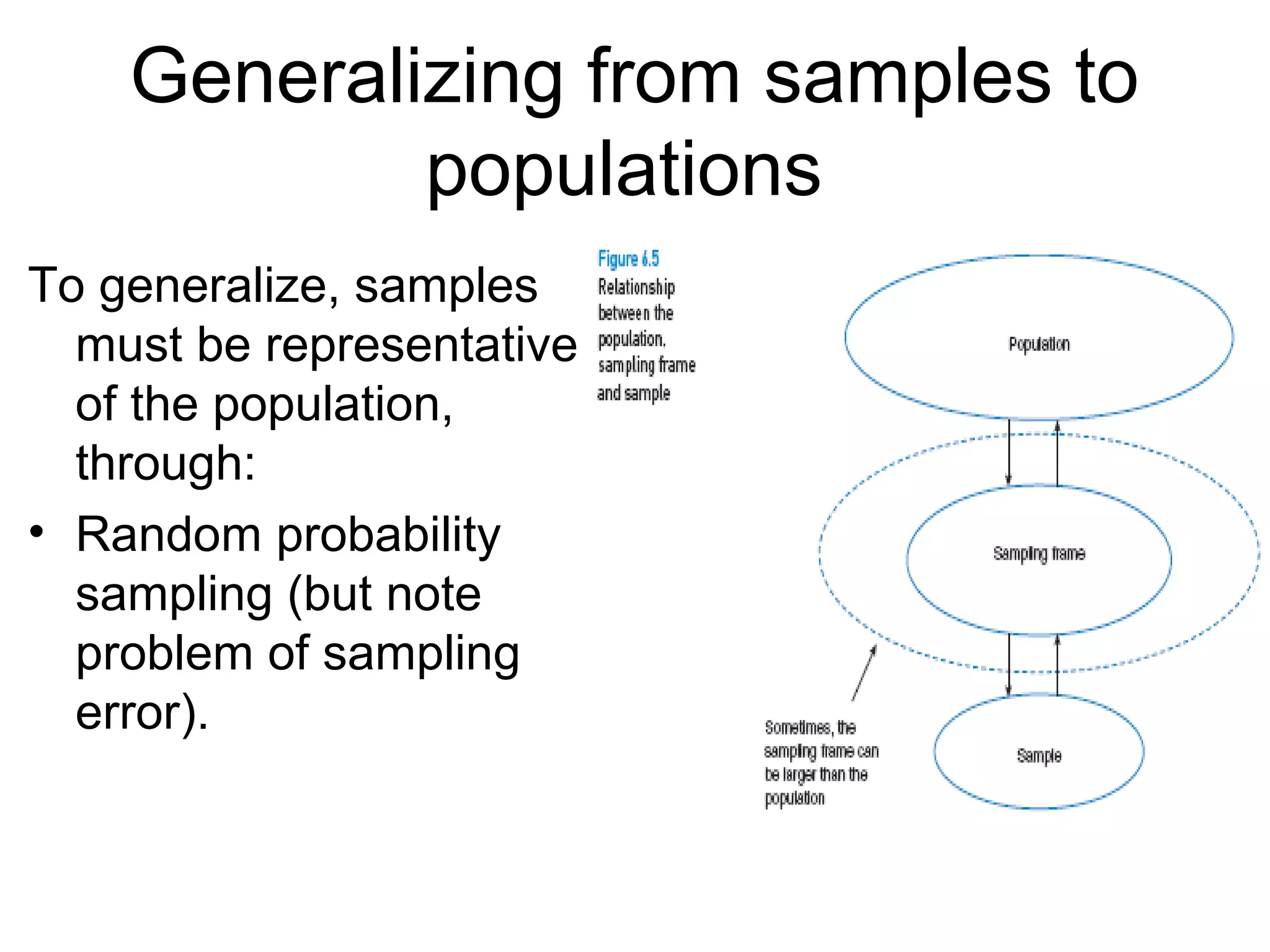

This document outlines the key stages and considerations in quantitative experimental research design: developing research questions and hypotheses, identifying variables, choosing a sampling strategy, designing research instruments, analyzing data statistically, and reporting findings. The main stages discussed are identifying the research issue, reviewing the literature, developing testable questions and hypotheses, identifying the independent and dependent variables, implementing the research, analyzing the results statistically, and preparing a formal report. Experimental designs test hypotheses by manipulating independent variables and measuring the effects on dependent variables, whereas quasi-experimental designs give the researcher less control over these conditions. Research instruments must be valid, measuring the concepts they are intended to measure, and reliable, measuring them consistently.
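Three of the quantitative steps summarized above can be sketched in code. The sketch covers randomly assigning participants to a treatment group and a control group, comparing the groups' dependent-variable scores, and checking an instrument's reliability. The specific techniques are choices made for this illustration and are not taken from the slides. Welch's t statistic is used for the group comparison, and Cronbach's alpha is used as one common measure of internal-consistency reliability. The function names (`random_assignment`, `welch_t`, `cronbach_alpha`) and the example scores are hypothetical.

```python
import random
import statistics
from math import sqrt


def random_assignment(participants, seed=None):
    """Shuffle participants and split them into treatment and control groups.

    Illustrative sketch: with an odd count, the control group gets the extra person.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def welch_t(treatment, control):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    Compares the mean dependent-variable score of two independent groups
    without assuming equal variances (a choice made for this sketch).
    """
    m1, m2 = statistics.mean(treatment), statistics.mean(control)
    v1, v2 = statistics.variance(treatment), statistics.variance(control)
    n1, n2 = len(treatment), len(control)
    se1, se2 = v1 / n1, v2 / n2  # squared standard error of each group mean
    t = (m1 - m2) / sqrt(se1 + se2)
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, df


def cronbach_alpha(item_scores):
    """Cronbach's alpha for rows of respondents, each holding k item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(item_scores[0])
    items = list(zip(*item_scores))  # transpose: one tuple of scores per item
    item_var_sum = sum(statistics.variance(item) for item in items)
    total_var = statistics.variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


if __name__ == "__main__":
    # Hypothetical participant IDs and scores, for illustration only.
    treatment_ids, control_ids = random_assignment(range(1, 11), seed=42)
    print("treatment:", treatment_ids, "control:", control_ids)

    treatment_scores = [78, 85, 82, 90, 88]
    control_scores = [72, 75, 80, 70, 74]
    t, df = welch_t(treatment_scores, control_scores)
    print(f"t = {t:.2f}, df = {df:.1f}")

    survey = [[4, 5, 4], [2, 3, 2], [5, 5, 4], [3, 3, 3]]  # 4 respondents, 3 items
    print(f"alpha = {cronbach_alpha(survey):.2f}")
```

This sketch only computes the t statistic. To get a p-value, the statistic would be compared against a t distribution with `df` degrees of freedom. One way to do this is SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)`, which performs the same Welch test. Cronbach's alpha addresses only one kind of reliability. It does not say anything about validity.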