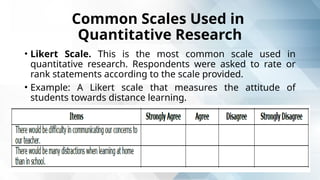

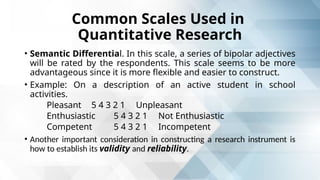

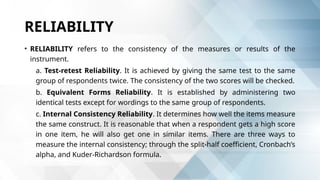

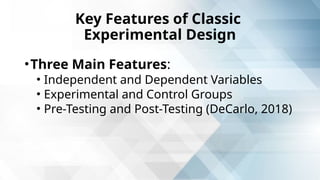

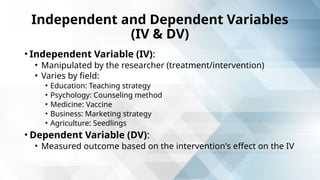

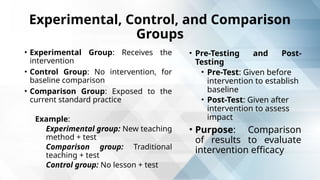

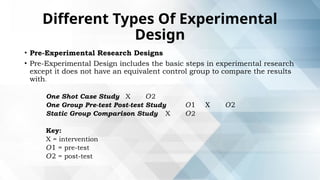

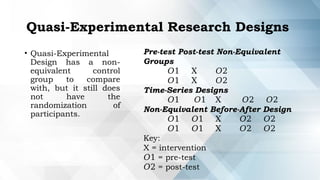

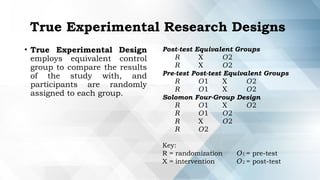

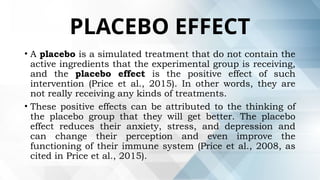

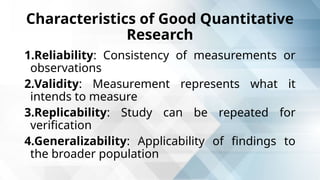

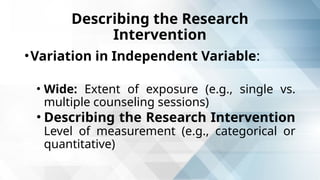

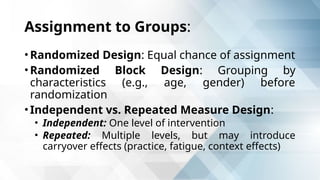

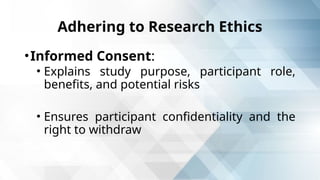

The document discusses the components of effective research instruments, emphasizing valid and reliable data collection methods such as questionnaires and performance tests. It outlines the characteristics of good instruments, ways to develop them, the types of validity and reliability, and the principles of experimental design. It also highlights the importance of adhering to research ethics, including informed consent and participant confidentiality.