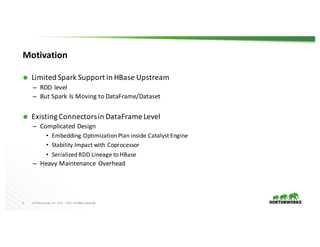

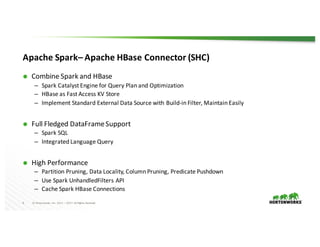

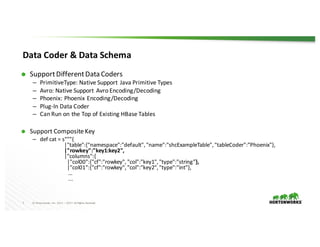

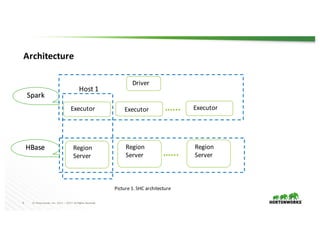

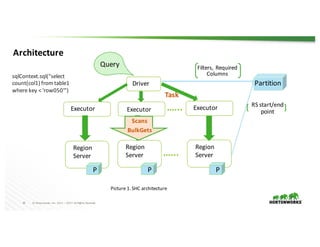

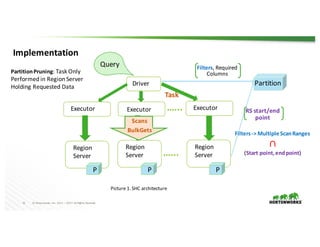

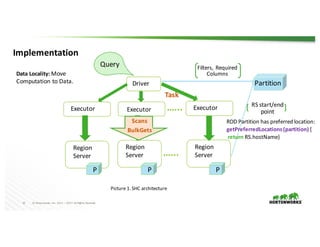

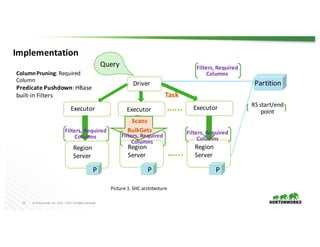

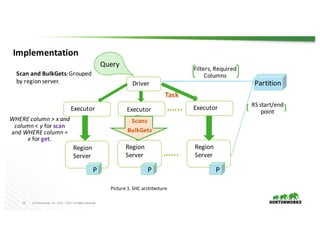

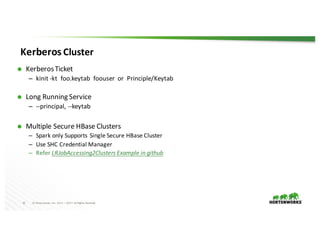

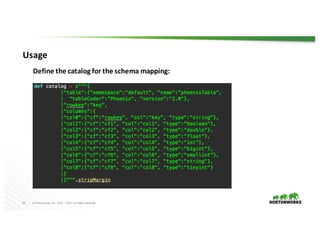

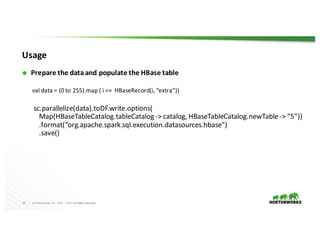

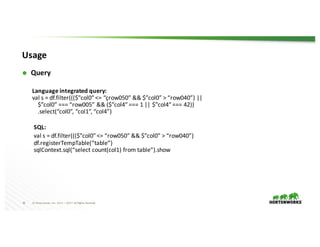

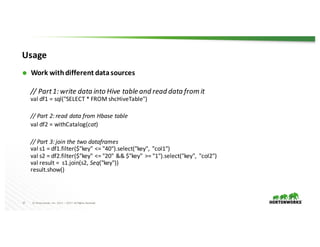

The document presents the Apache Spark - Apache HBase Connector (SHC), which integrates HBase with Spark so that HBase tables can be queried through Spark SQL. It covers the connector's architecture and implementation, along with performance features such as partition pruning and data locality. It also provides usage instructions and examples, and lists references for further information.
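
As a rough illustration of the usage pattern the summary refers to, the sketch below builds the JSON catalog that SHC uses to map an HBase table onto a DataFrame schema, then reads the table through Spark SQL. It assumes the catalog layout and data source name given in the SHC project README. The table name `table1`, the column family `cf1`, and the helper functions `build_catalog` and `read_hbase_table` are hypothetical names chosen for this example, not part of the connector.

```python
import json

# Data source name as given in the SHC README (assumed unchanged in your version).
SHC_FORMAT = "org.apache.spark.sql.execution.datasources.hbase"


def build_catalog(namespace, table, row_key, columns):
    """Return an SHC-style catalog as a JSON string.

    `columns` maps each DataFrame column name to a tuple of
    (column family, column qualifier, type). The row key column
    uses the reserved column family name "rowkey".
    """
    return json.dumps({
        "table": {"namespace": namespace, "name": table},
        "rowkey": row_key,
        "columns": {
            name: {"cf": cf, "col": col, "type": typ}
            for name, (cf, col, typ) in columns.items()
        },
    })


def read_hbase_table(spark, catalog):
    """Load the HBase table described by `catalog` as a DataFrame.

    `spark` is an existing SparkSession with the SHC package on its
    classpath; this function is not exercised without a cluster.
    """
    return spark.read.options(catalog=catalog).format(SHC_FORMAT).load()


# Hypothetical table: a string row key plus one boolean column in family "cf1".
catalog = build_catalog("default", "table1", "key", {
    "col0": ("rowkey", "key", "string"),
    "col1": ("cf1", "col1", "boolean"),
})
```

With a running cluster, `read_hbase_table(spark, catalog).filter("col0 <= 'row050'")` would be a typical query. A predicate on the row key column like this one is the kind of filter that partition pruning is meant to exploit, though how much is pushed down depends on the connector version.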