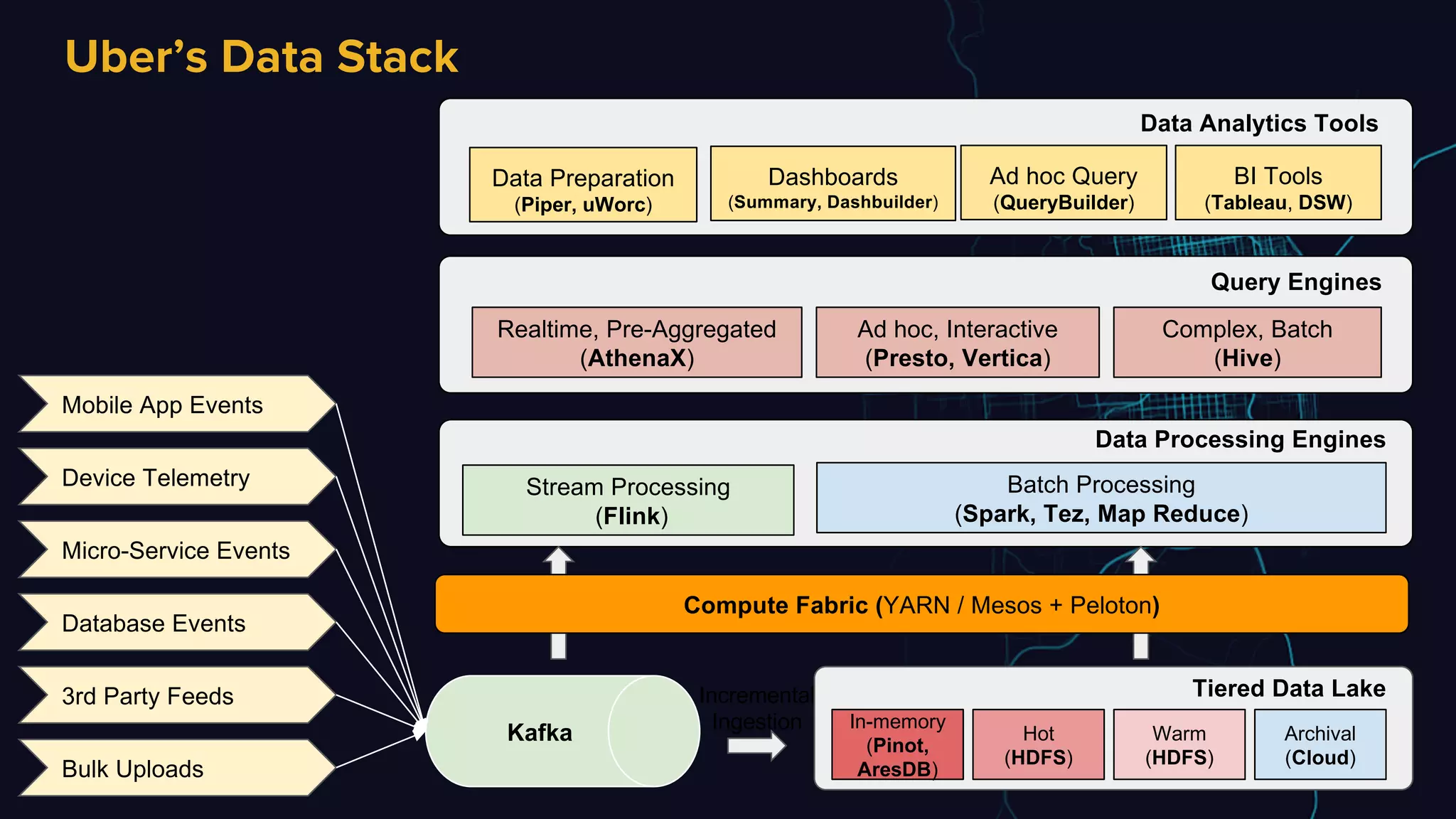

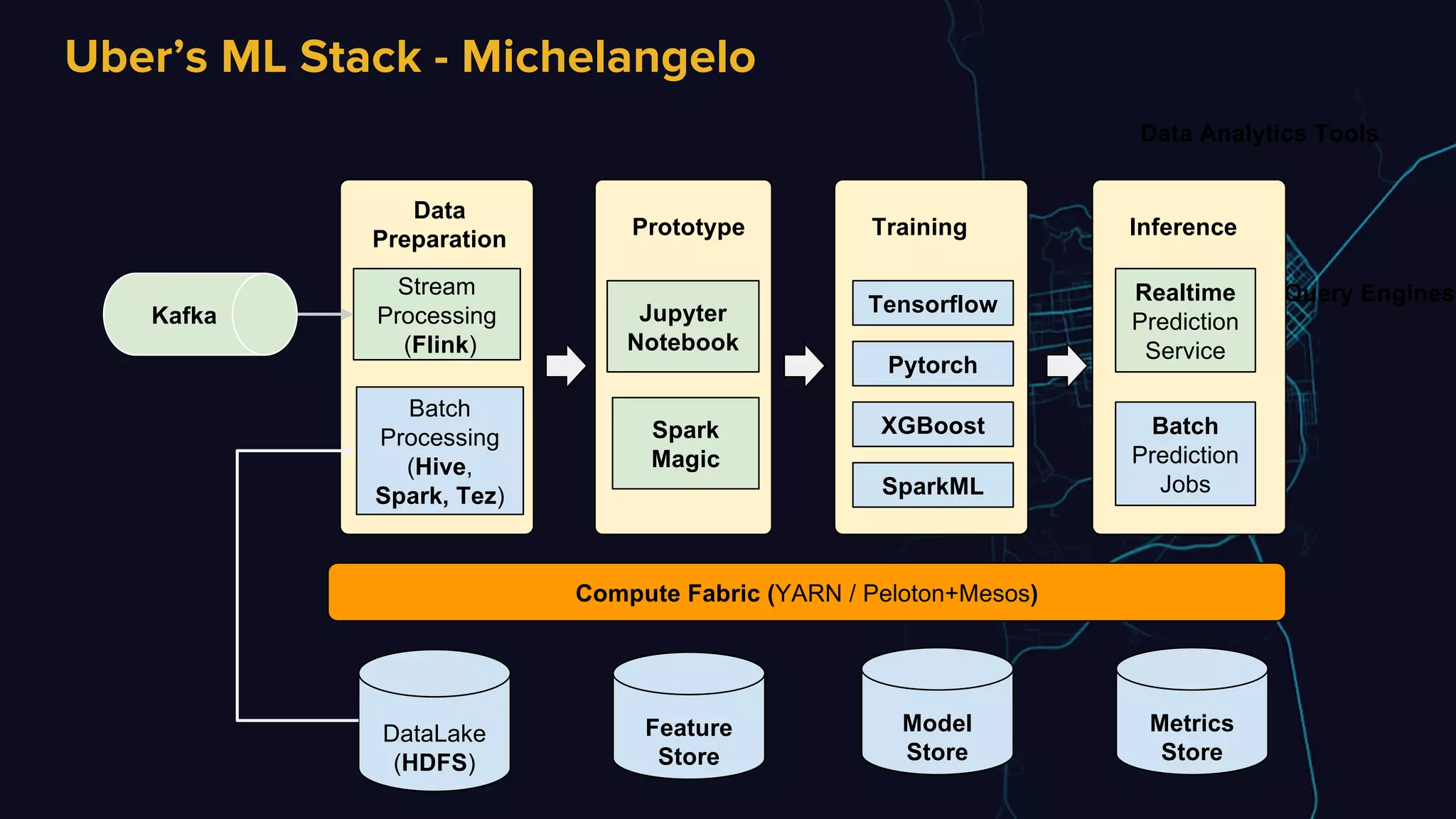

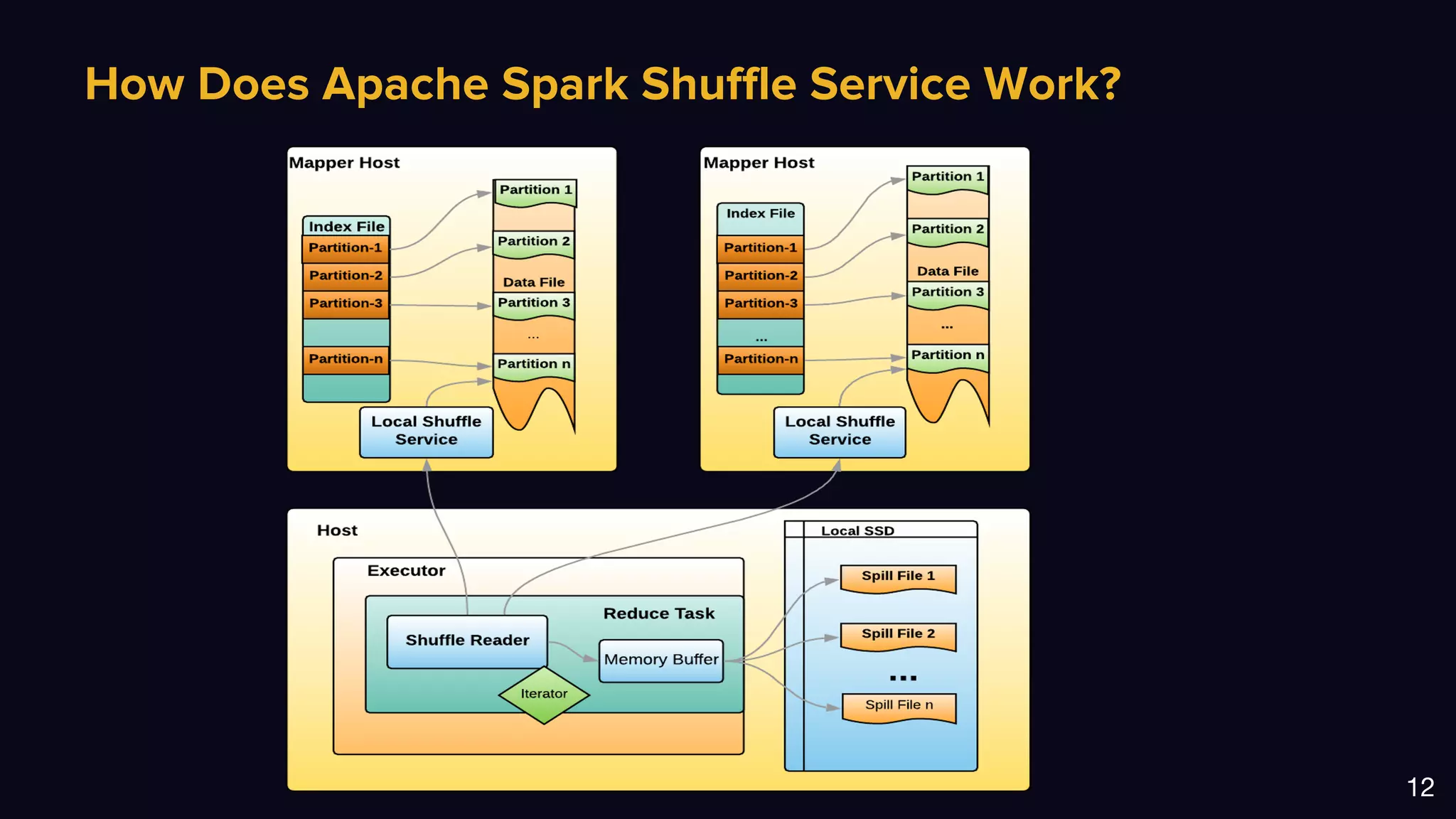

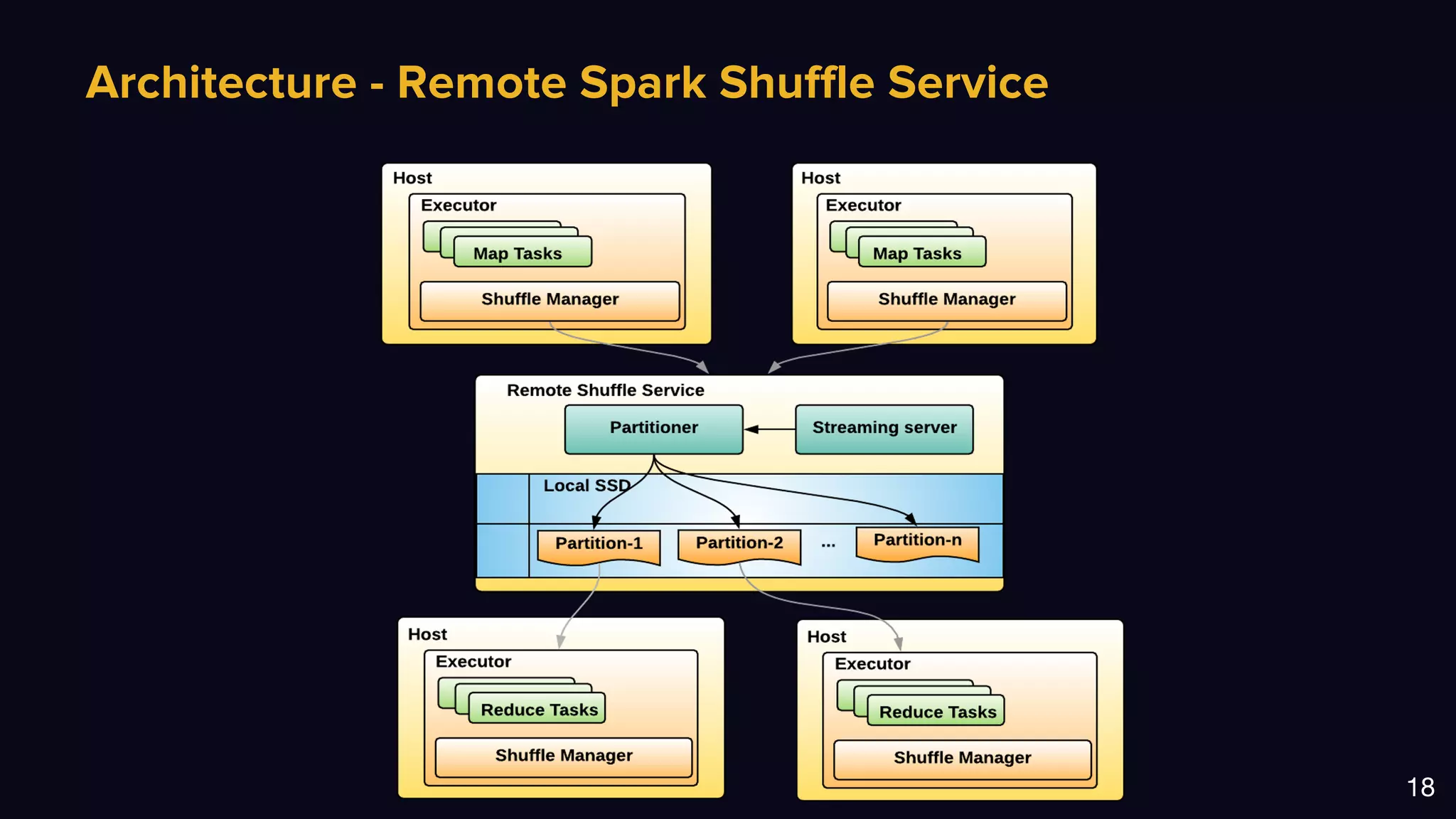

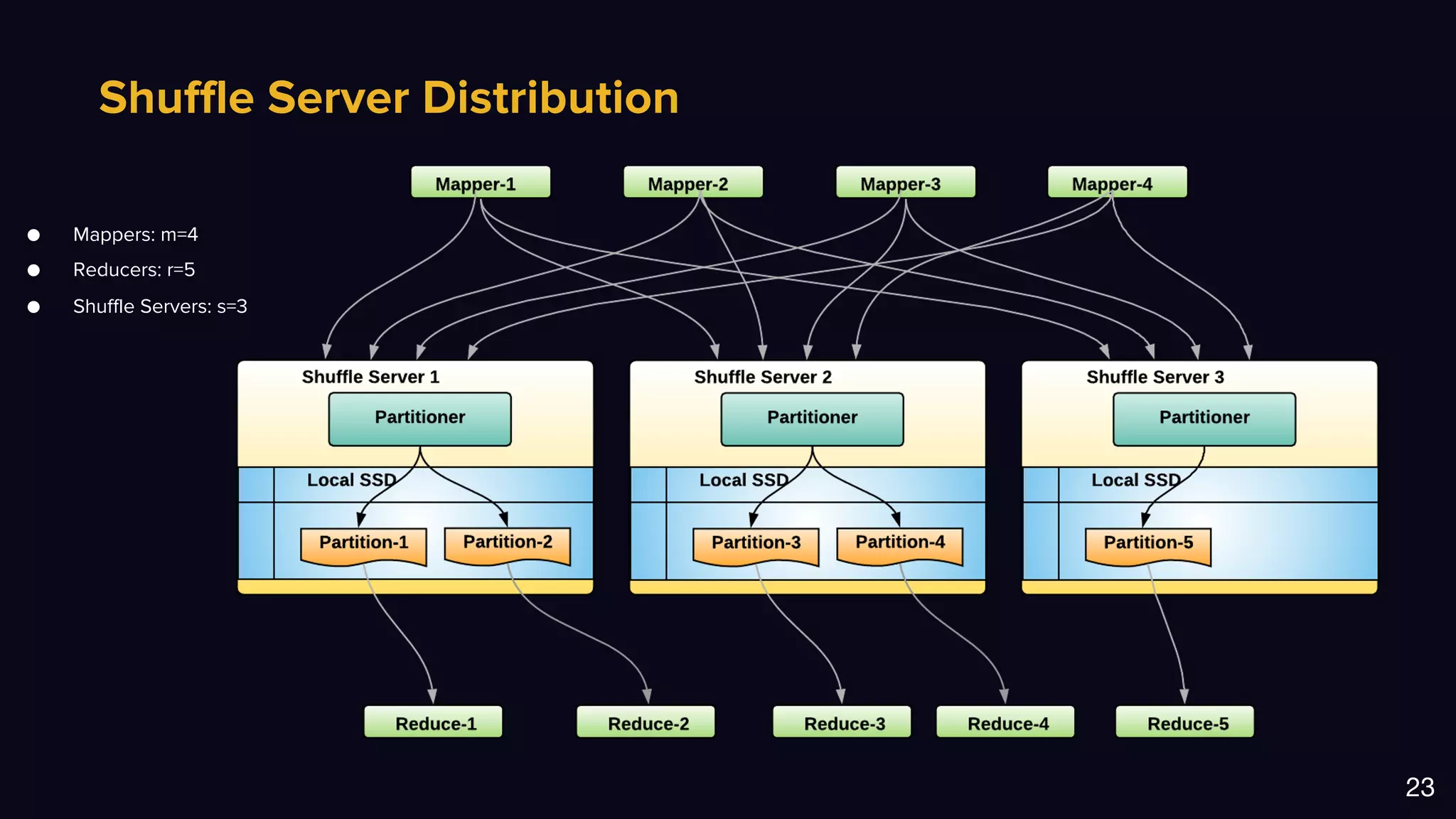

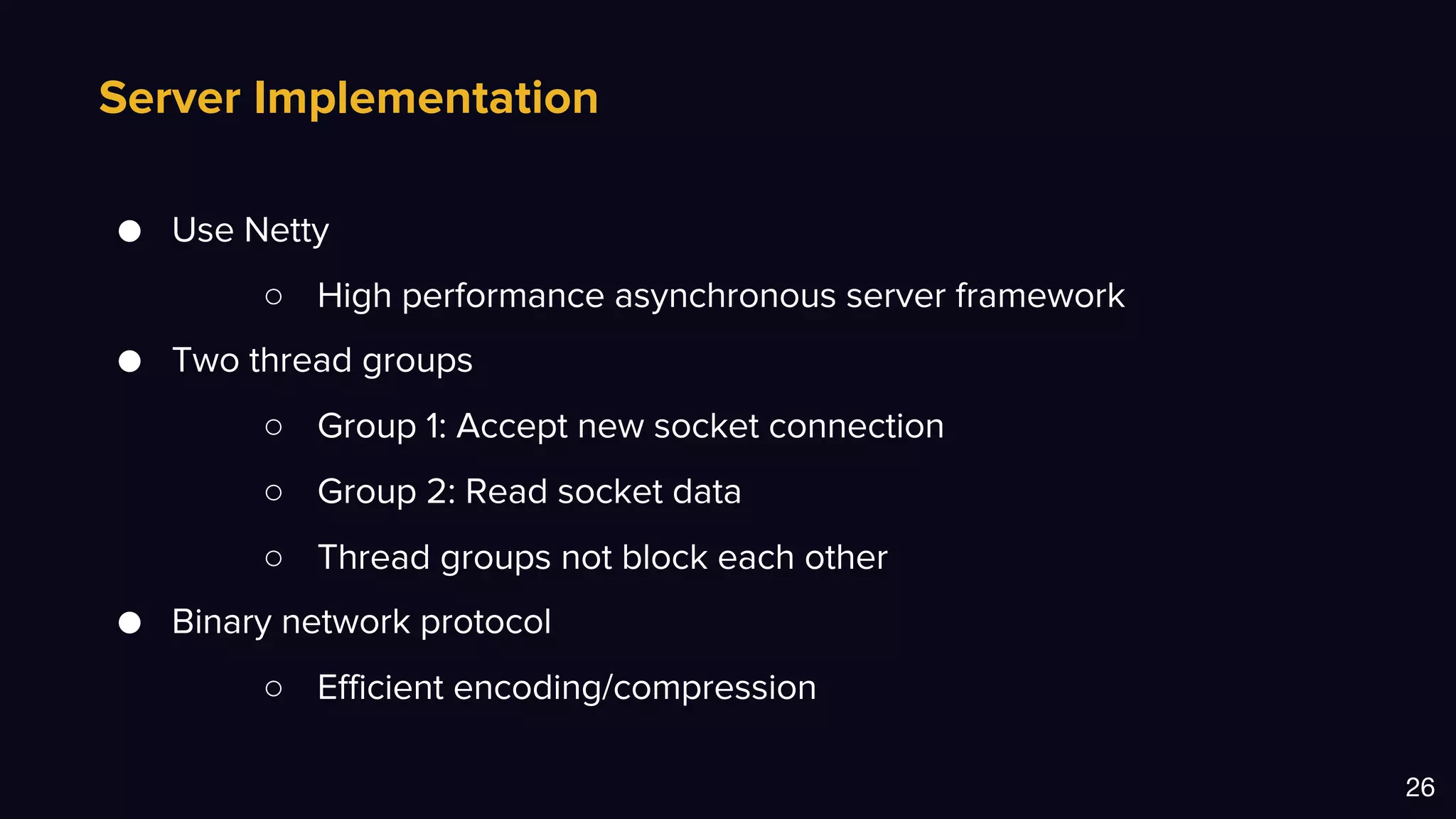

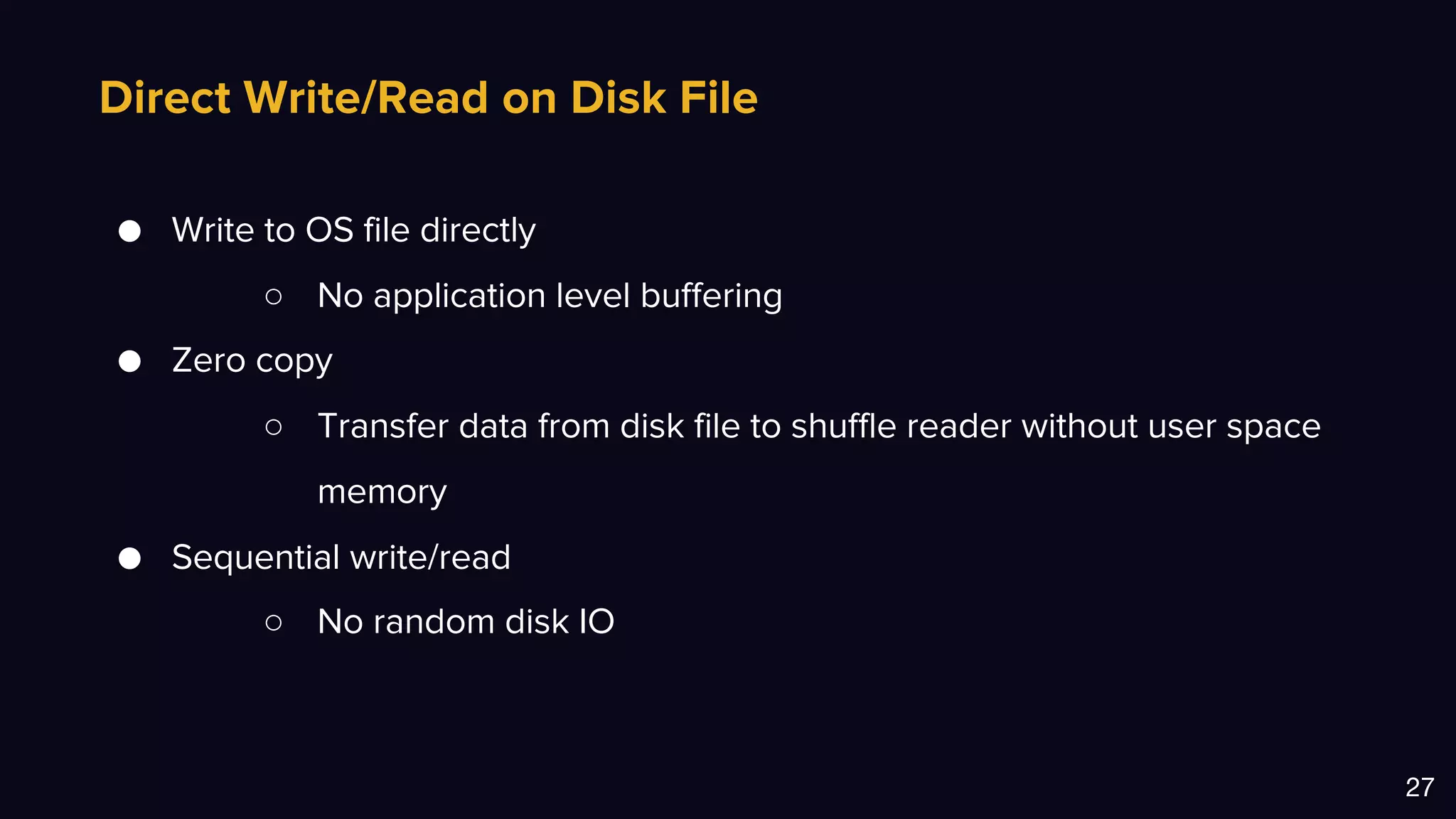

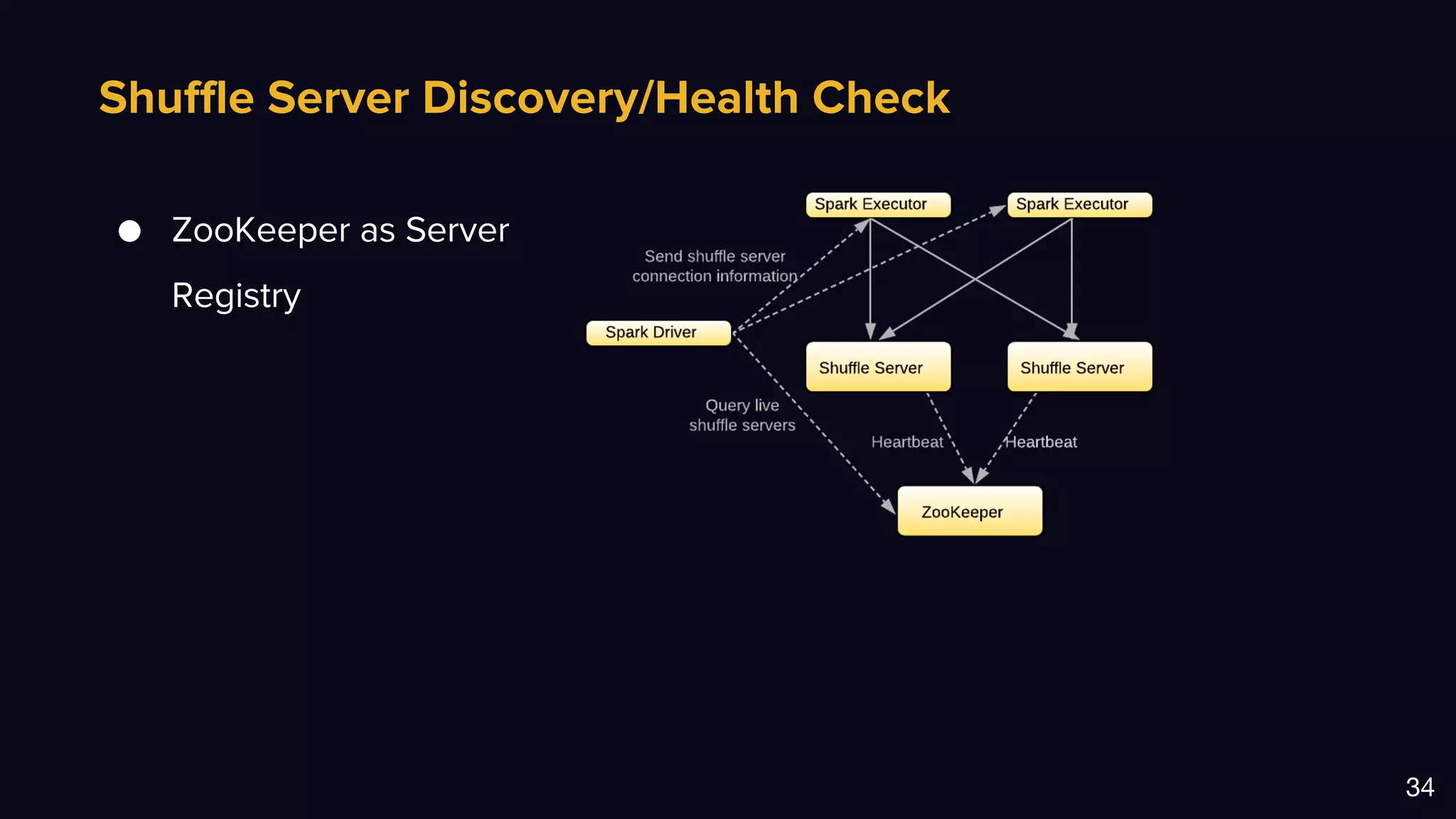

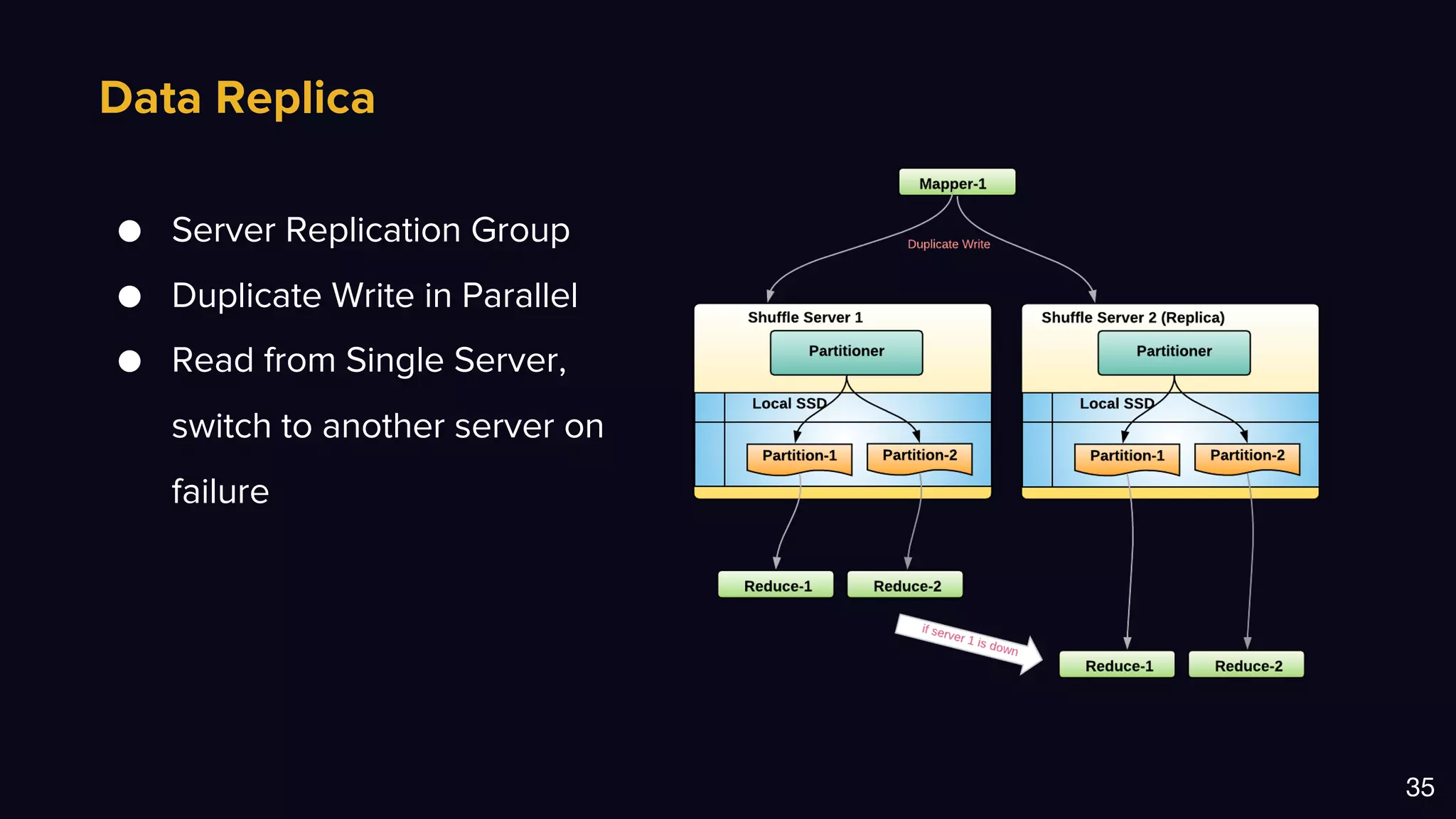

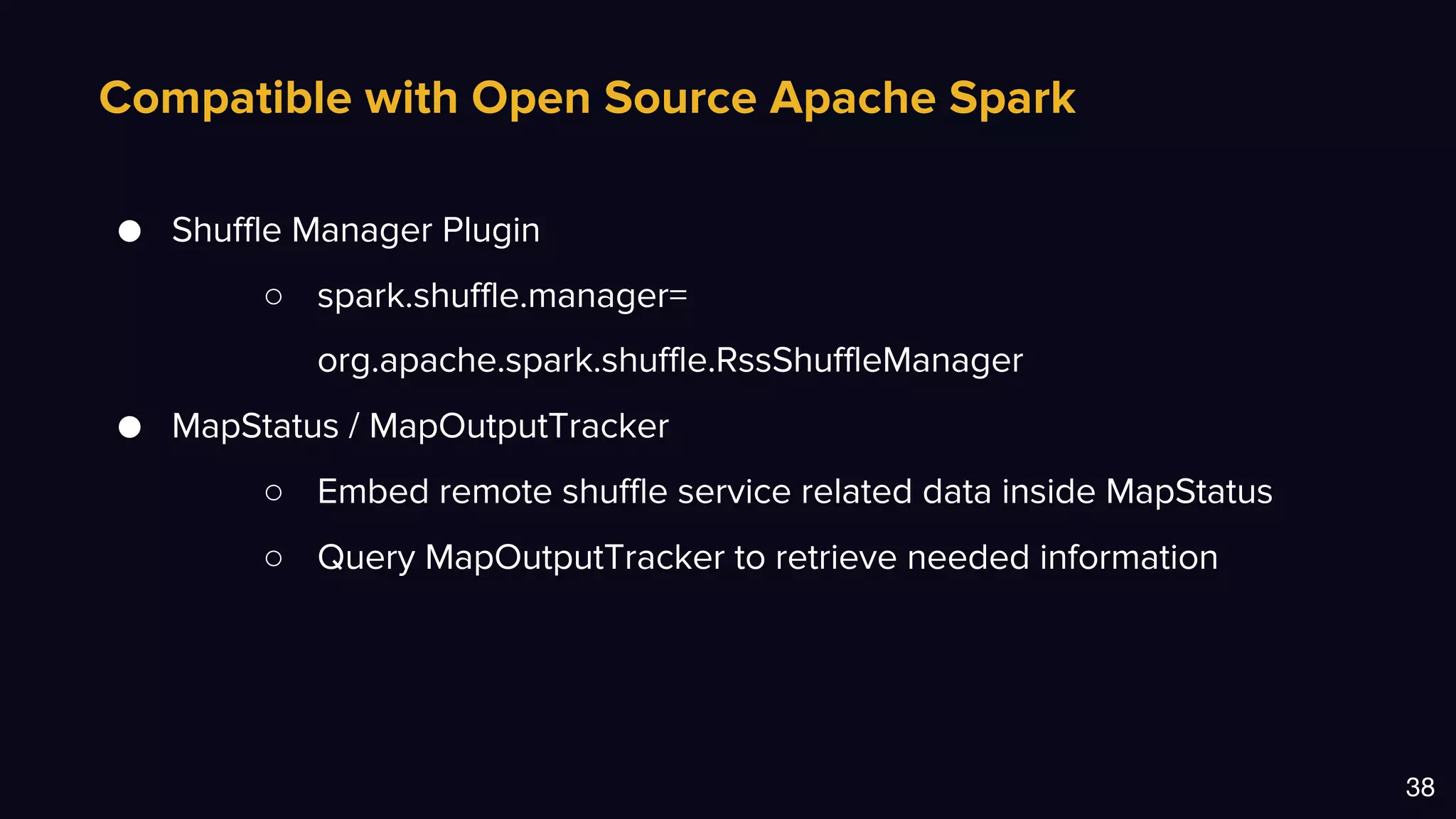

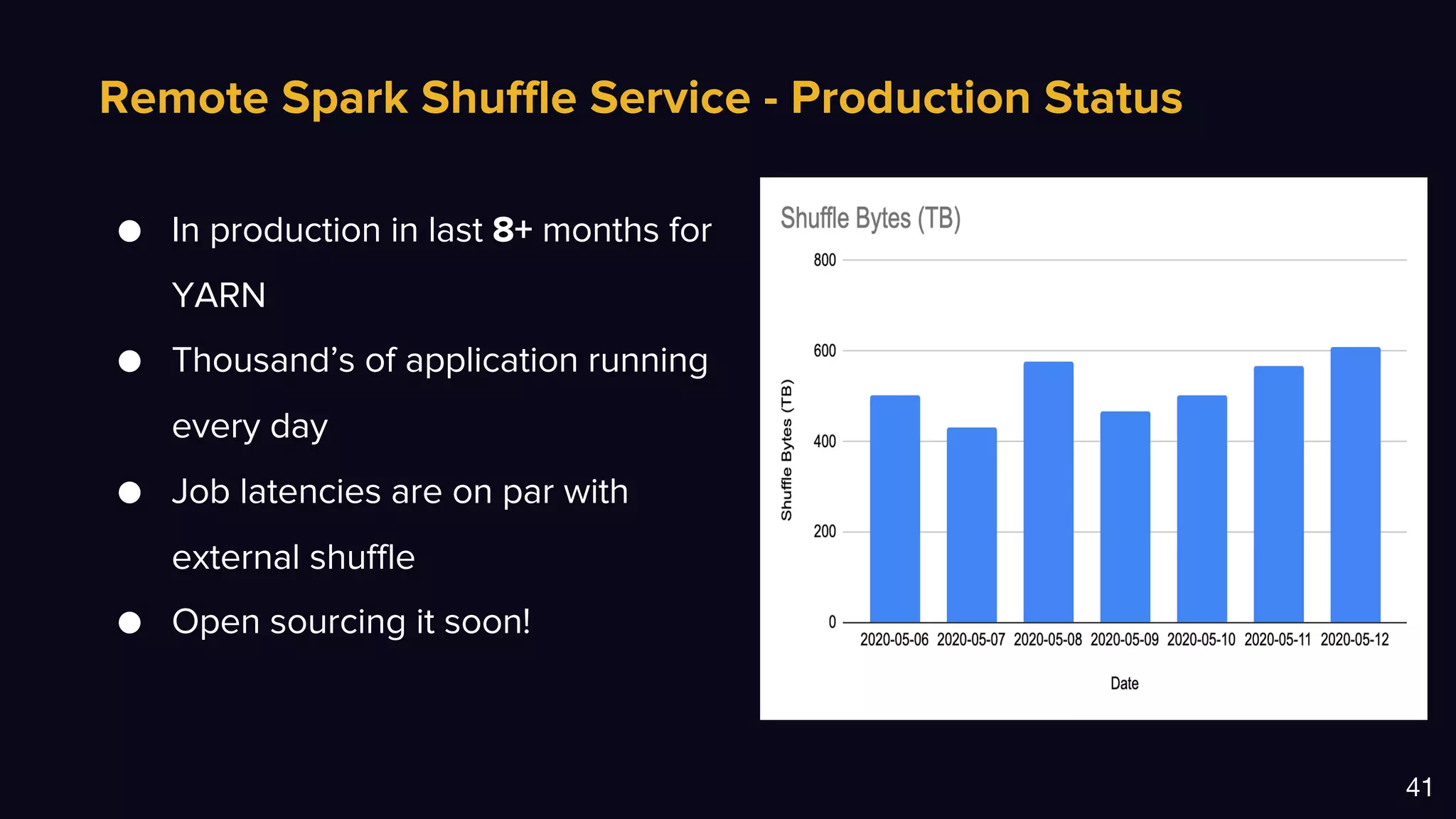

The document describes Uber's data and machine learning infrastructure and the use cases it supports, including ETA prediction, driver-rider matching, and Uber Eats features. It outlines the technology stack, which includes Apache Spark, data analytics tools, and the machine learning models used to improve performance and accuracy. It also covers implementation details of Uber's remote shuffle service for Spark, which is designed to make large-scale data processing more efficient and easier to operate.