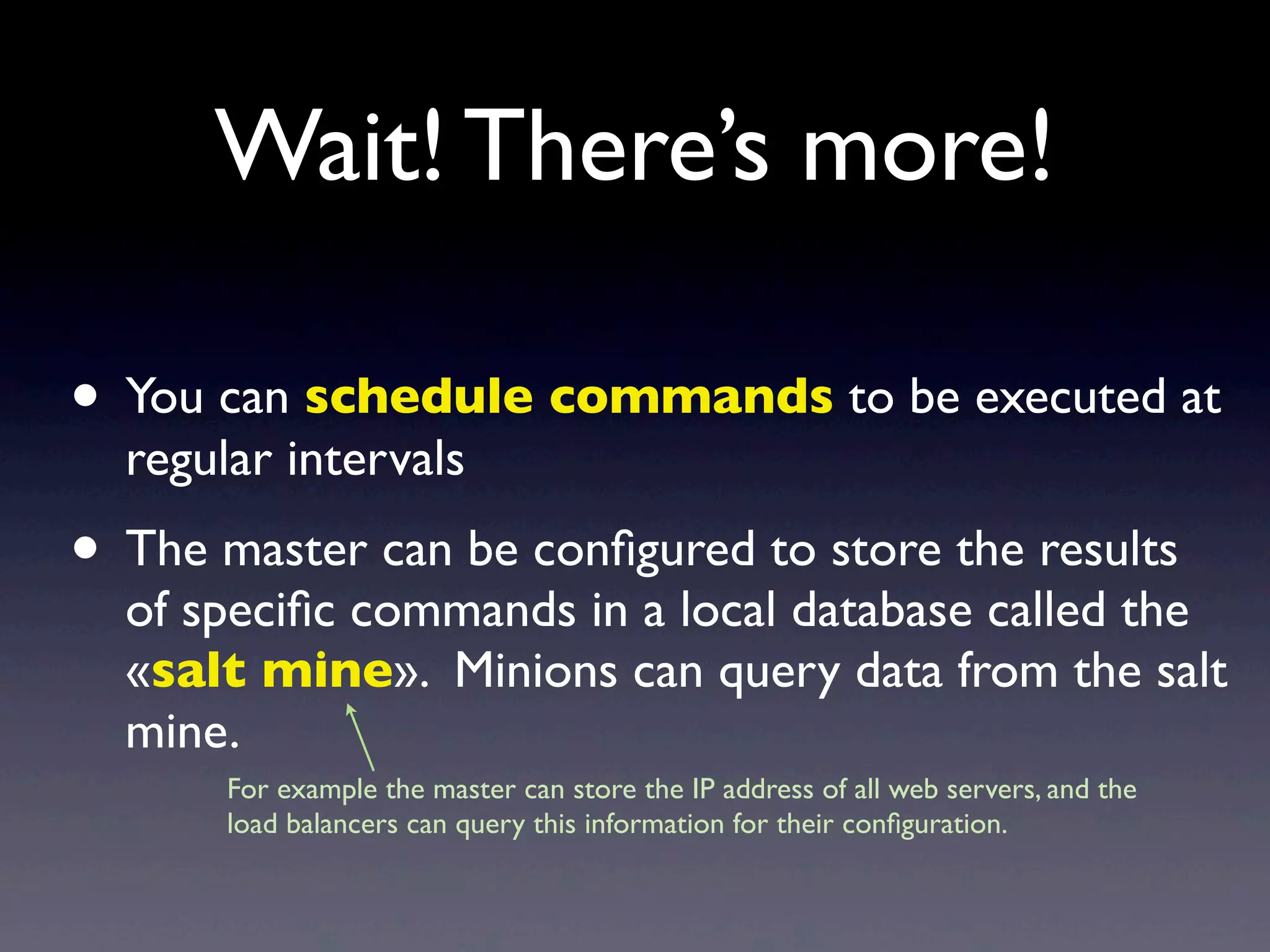

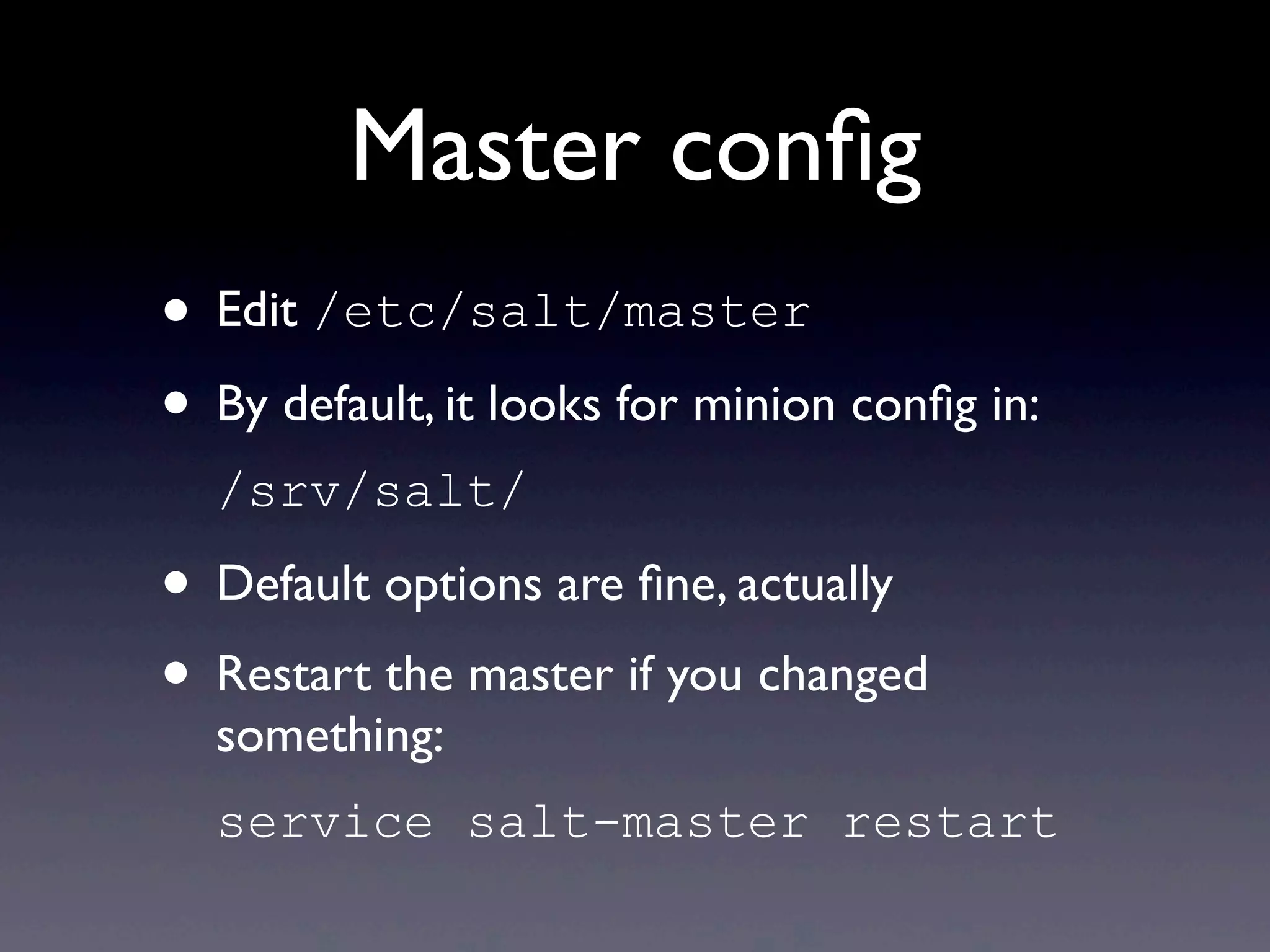

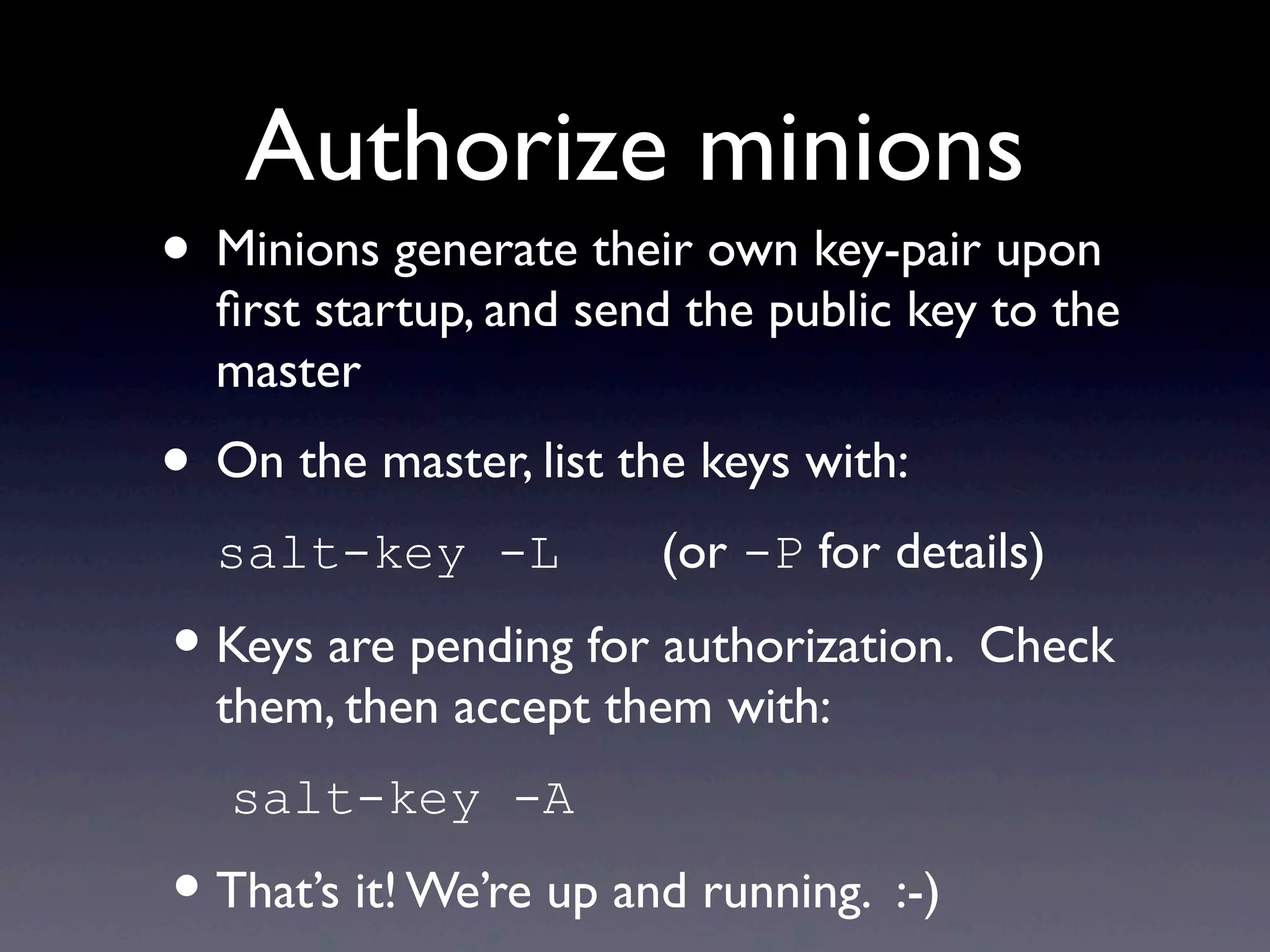

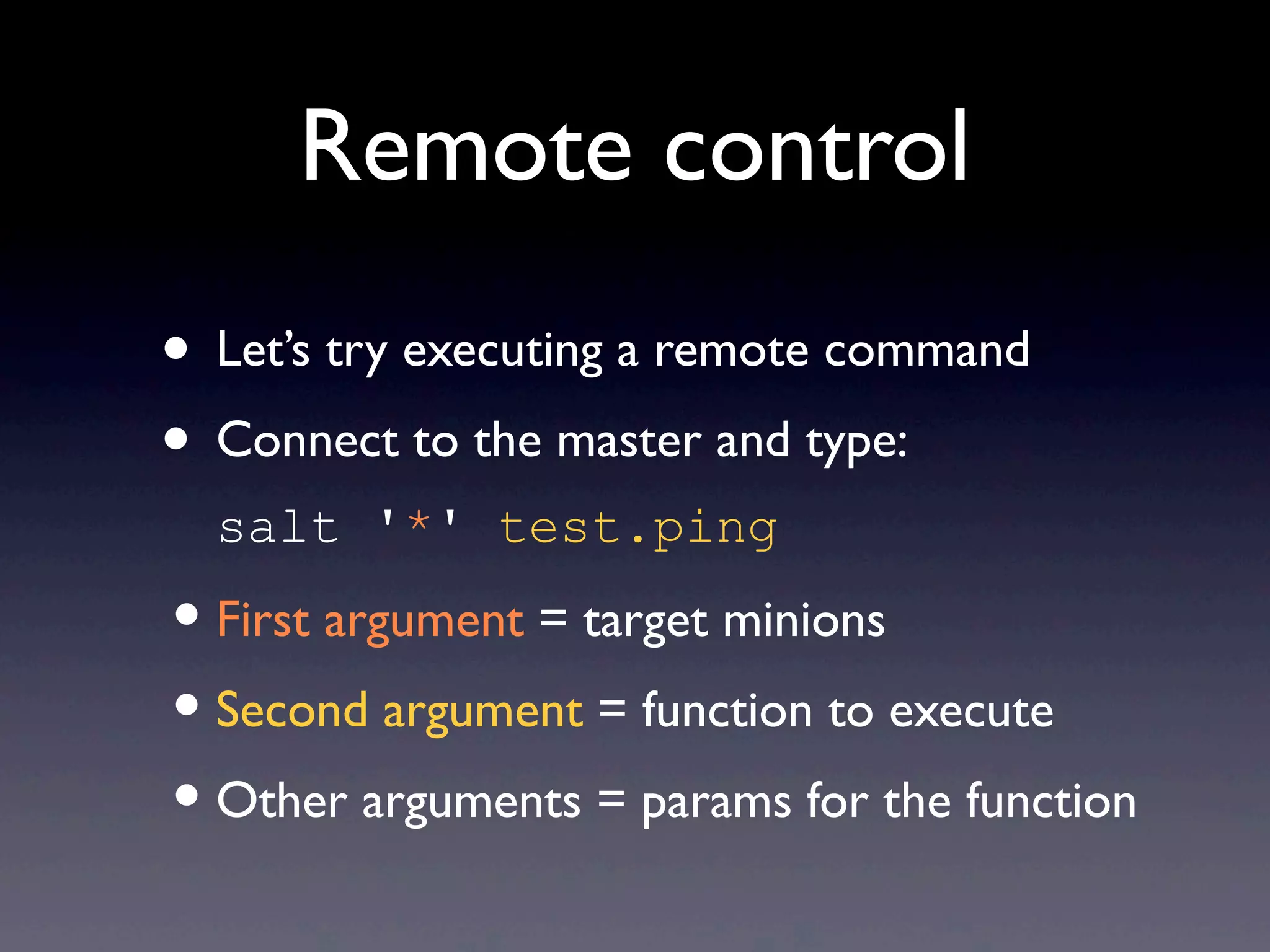

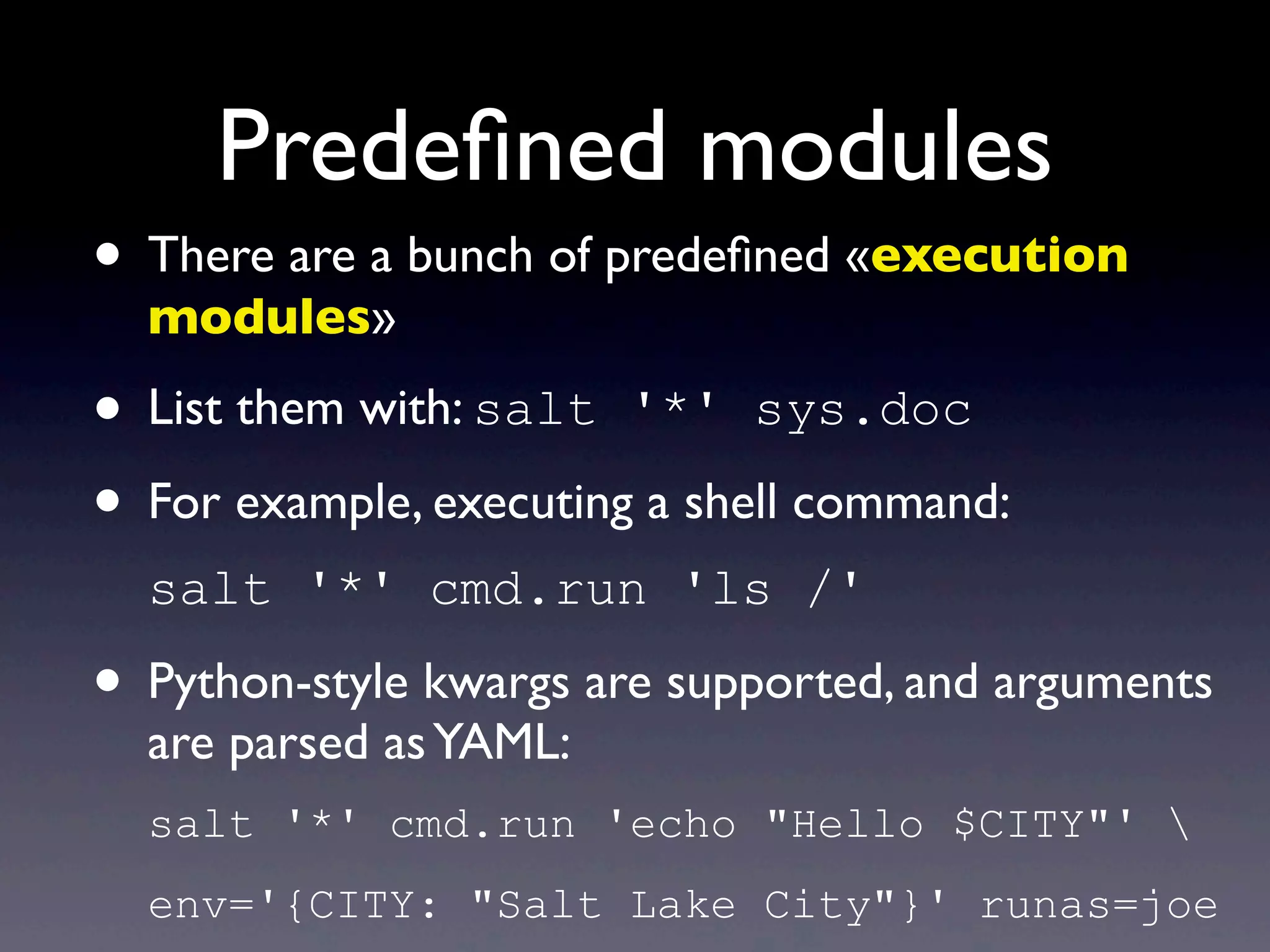

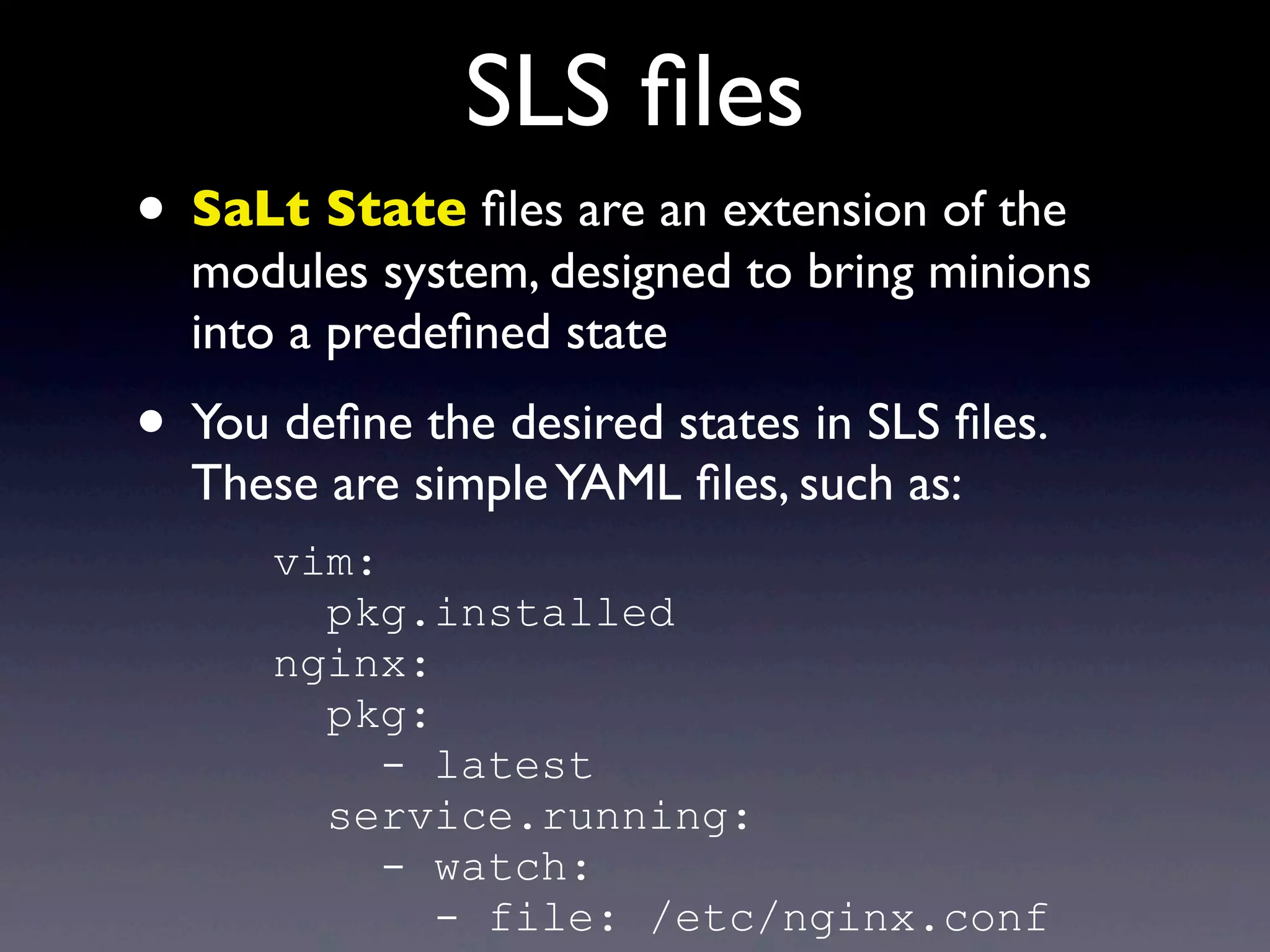

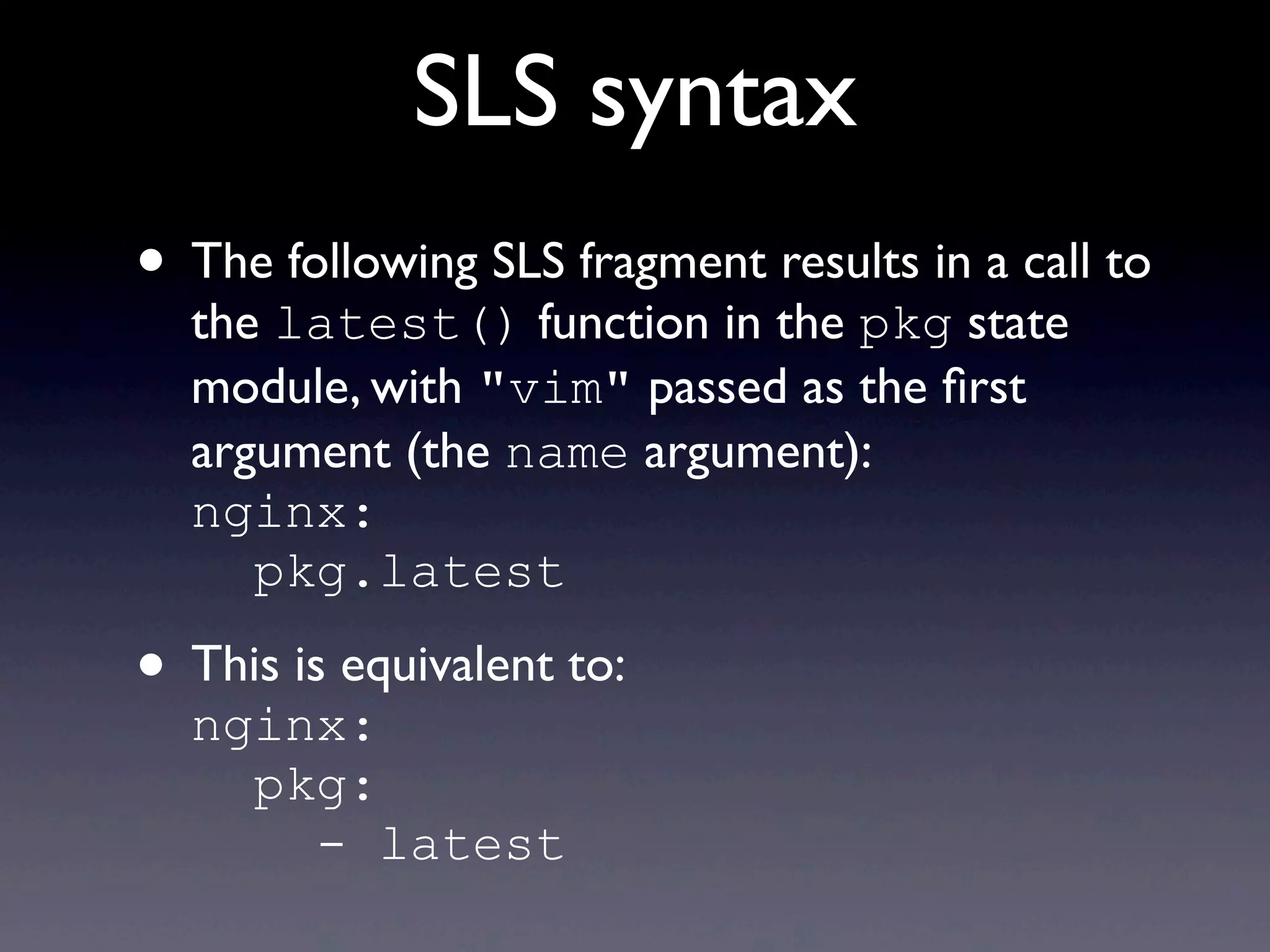

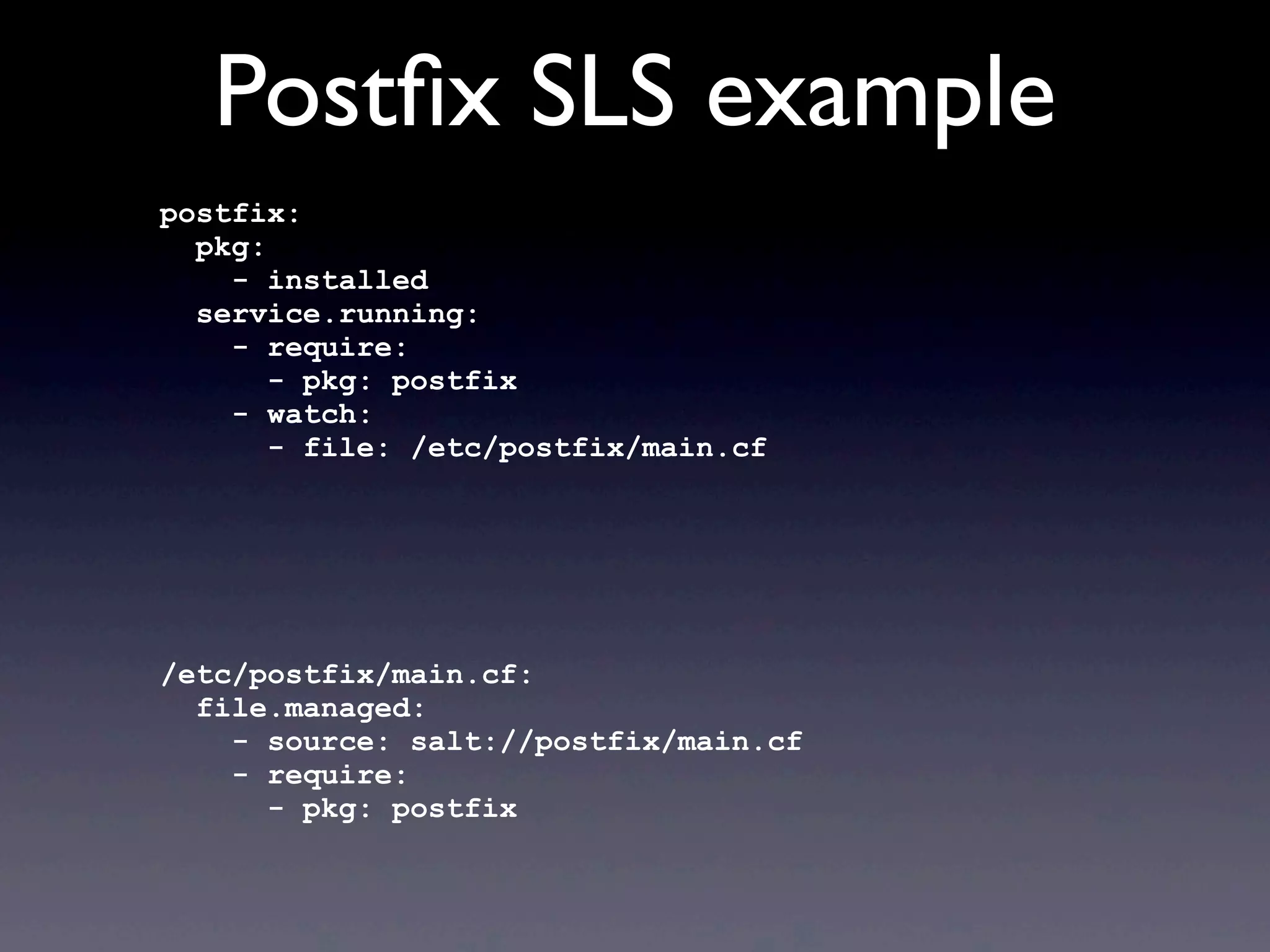

This document provides an overview of infrastructure management with SaltStack, covering hardware and network configuration, software management, and remote-control tools. It details the installation and configuration of the Salt master and minions, the execution of remote commands, and the organization of states and SLS files for managing system configuration. It also highlights advanced features such as scheduling, storing arbitrary values in pillars, and the flexibility that renderers provide for customization.

![SLS templates

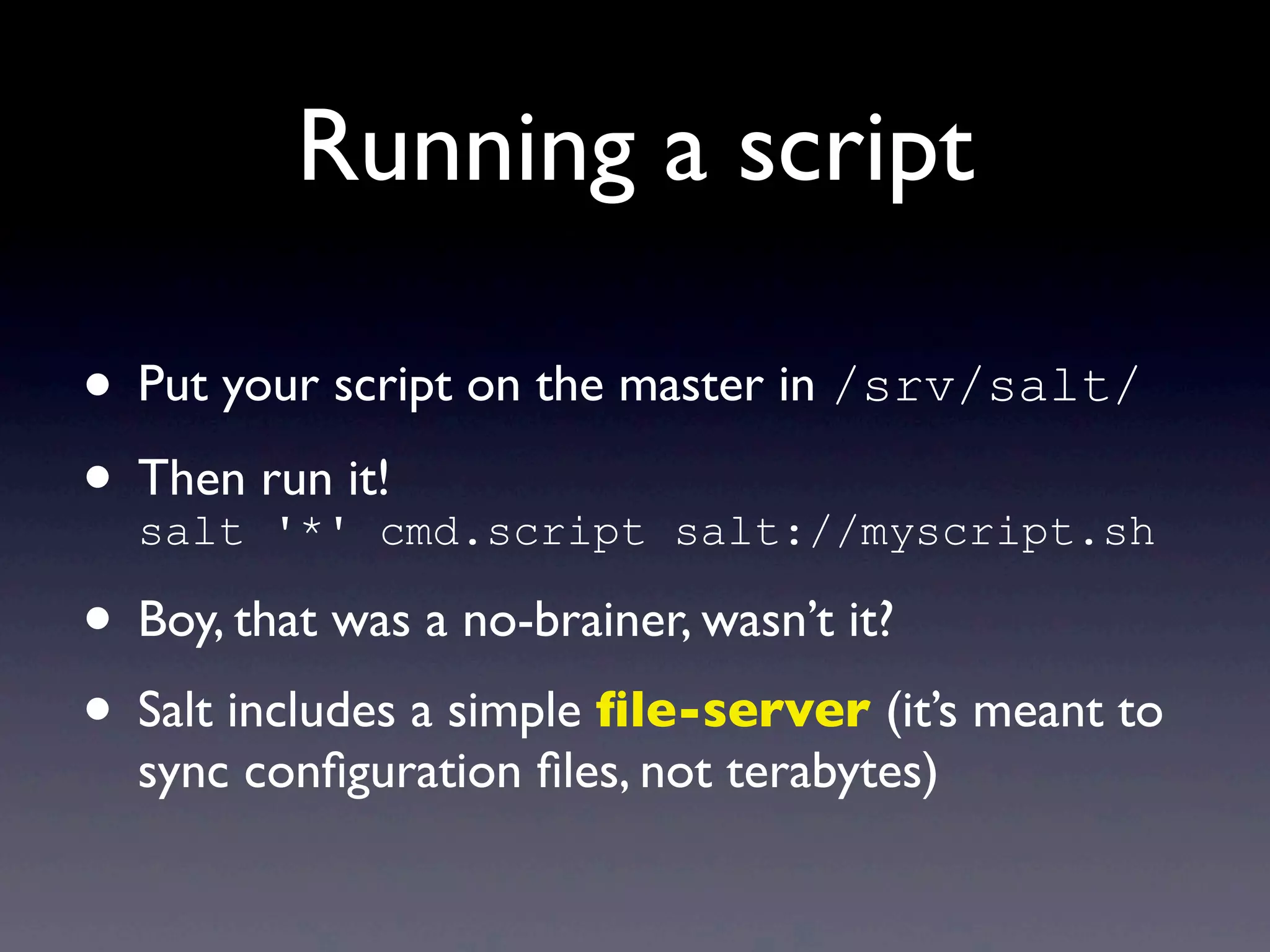

• The SLS files go through a (configurable)

template engine, by default jinja

• This gives SLS files a lot of flexibility, for example:

{% set motd = ['/etc/motd'] %}

{% if grains['os'] == 'Debian' %}

{% set motd = ['/etc/motd.tail', '/var/run/motd'] %}

{% endif %}

{% for motdfile in motd %}

{{ motdfile }}:

file.managed:

- source: salt://motd

{% endfor %}](https://image.slidesharecdn.com/saltstackpresentation-clean-130628214626-phpapp02/75/A-user-s-perspective-on-SaltStack-and-other-configuration-management-tools-33-2048.jpg)
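To make the expansion concrete: assuming a minion whose `os` grain is `Debian`, the Jinja pass over the SLS template above should hand the YAML parser roughly the following (blank lines left behind by the Jinja tags omitted), i.e. one `file.managed` state per file in the list:

```yaml
# Approximate rendered output on a Debian minion (illustrative, not captured from a real run)
/etc/motd.tail:
  file.managed:
    - source: salt://motd

/var/run/motd:
  file.managed:
    - source: salt://motd
```

On any other OS the `if` branch is skipped, `motd` keeps its initial value, and only a single `/etc/motd` state is produced.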

![Config files templates

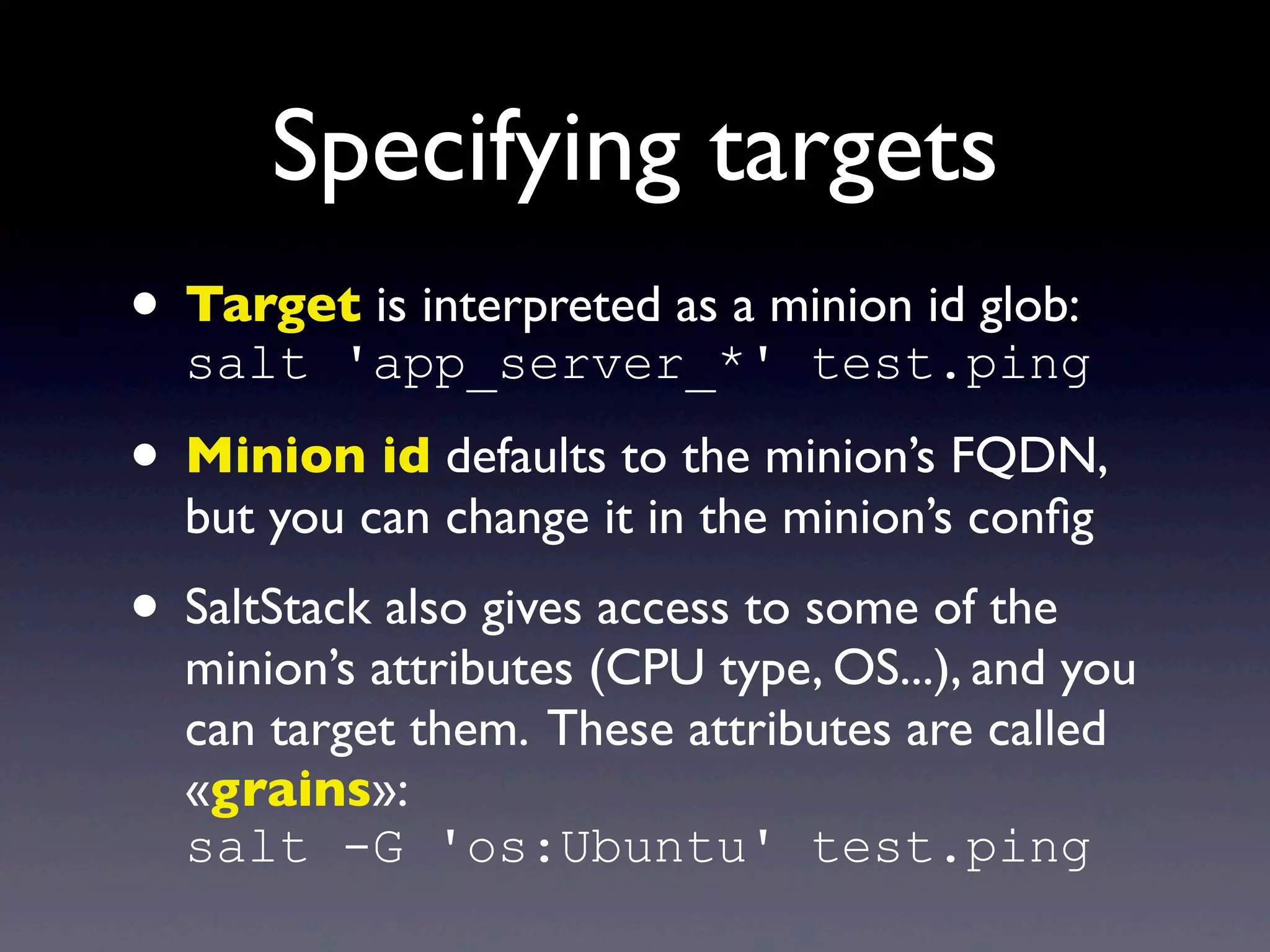

• The configuration files themselves can be

rendered through a template engine:

/etc/motd:

file.managed:

- source: salt://motd

- template: jinja

- defaults:

message: 'Foo'

{% if grains['os'] == 'FreeBSD' %}

- context:

message: 'Bar'

{% endif %}

The motd file is actually a jinja template. In this

example, it is passed the message variable and it can

render it using the jinja syntax: {{ message }}

file.managed allows two dictionaries

to be passed as arguments to the template:

defaults and context. Values in

context override those in defaults.](https://image.slidesharecdn.com/saltstackpresentation-clean-130628214626-phpapp02/75/A-user-s-perspective-on-SaltStack-and-other-configuration-management-tools-34-2048.jpg)
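Likewise, assuming a minion whose `os` grain is `FreeBSD`, the `/etc/motd` state in the config-file example above should render to roughly the following YAML before Salt executes it:

```yaml
# Approximate rendered state on a FreeBSD minion (illustrative, not captured from a real run)
/etc/motd:
  file.managed:
    - source: salt://motd
    - template: jinja
    - defaults:
        message: 'Foo'
    - context:
        message: 'Bar'   # overrides the value from defaults
```

Since `context` takes precedence, the motd template sees `message` as `'Bar'` on FreeBSD. On other systems the `context` entry is never rendered, so the template falls back to `'Foo'` from `defaults`.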