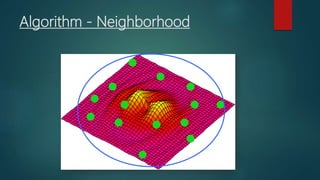

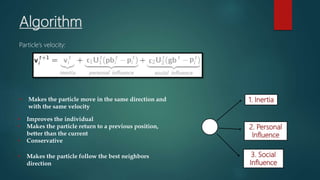

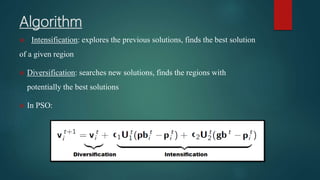

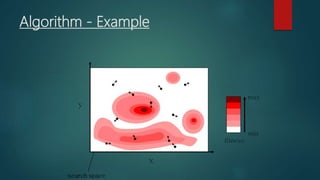

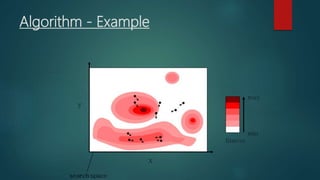

Particle swarm optimization (PSO) is a population-based metaheuristic inspired by the social behavior of flocking birds. It maintains a population of candidate solutions, called particles, that move through the search space, adjusting their positions based on their own experience and that of neighboring particles. Each particle tracks the best position it has found so far (its personal best) and the best position found by any particle in its neighborhood. At every iteration, the algorithm updates each particle's velocity, pulling it toward both of these best positions, and then advances the particle's position by that velocity, so the swarm gradually concentrates around better solutions.
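The update loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the function name `pso`, the inertia weight `w`, and the acceleration coefficients `c1` and `c2` are hypothetical choices that do not come from the text, and the whole swarm is treated as a single neighborhood (the "global best" variant) for simplicity.

```python
import random


def pso(objective, bounds, n_particles=30, n_iters=200,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` over the box `bounds` with a global-best swarm.

    Assumed parameters (not from the text): w is the inertia weight,
    c1 weights the pull toward a particle's own best position,
    c2 weights the pull toward the best position in the neighborhood.
    """
    rng = random.Random(seed)
    dim = len(bounds)

    # Random initial positions inside the bounds, zero initial velocities.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]

    # Each particle remembers the best position it has visited so far.
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]

    # The neighborhood here is the whole swarm, so there is one shared best.
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity: inertia + pull to own best + pull to swarm best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Position: step by the velocity, clamped to the bounds.
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)

            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val

    return gbest, gbest_val


if __name__ == "__main__":
    # Example: minimize the 2-D sphere function, whose minimum is at the origin.
    best, value = pso(lambda x: sum(xi * xi for xi in x), [(-5.0, 5.0)] * 2)
    print(best, value)
```

Restricting each particle to a smaller neighborhood, such as a ring of adjacent indices, would only change how `gbest` is chosen for each particle; the velocity and position updates stay the same.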